Archives

-

Uploading large files to WCF web services

Recently, we needed to allow users to upload large files to a web service. Fortunately, WCF supports this scenario (see MSDN: Large Data and Streaming). MSDN recommends:

The most common scenario in which such large data content transfers occur are transfers of binary data objects that:

- Cannot be easily broken up into a message sequence.

- Must be delivered in a timely manner.

- Are not available in their entirety when the transfer is initiated.

For data that does not have these constraints, it is typically better to send sequences of messages within the scope of a session than one large message.

Since our files do not have these constraints, we decided to break them up into sequences of segments and pass the segments to the web service as byte arrays. Several issues remained:
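As a minimal sketch of the segmenting step (not the code from the proof-of-concept), the helper below reads any stream in fixed-size segments and yields each one as a byte array. The names `FileChunker` and `ReadChunks` are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class FileChunker
{
    // Validates arguments eagerly, then returns a lazy sequence of segments.
    public static IEnumerable<byte[]> ReadChunks(Stream input, int chunkSize)
    {
        if (input == null) throw new ArgumentNullException("input");
        if (chunkSize <= 0) throw new ArgumentOutOfRangeException("chunkSize");
        return ReadChunksIterator(input, chunkSize);
    }

    private static IEnumerable<byte[]> ReadChunksIterator(Stream input, int chunkSize)
    {
        var buffer = new byte[chunkSize];
        while (true)
        {
            // Stream.Read may return fewer bytes than requested,
            // so keep reading until the buffer is full or the stream ends.
            int filled = 0;
            while (filled < chunkSize)
            {
                int read = input.Read(buffer, filled, chunkSize - filled);
                if (read == 0) break;
                filled += read;
            }

            if (filled == 0) yield break;

            // Copy into a right-sized array; the last segment may be shorter.
            var chunk = new byte[filled];
            Buffer.BlockCopy(buffer, 0, chunk, 0, filled);
            yield return chunk;

            if (filled < chunkSize) yield break; // end of stream reached
        }
    }
}
```

A client would open the file with `File.OpenRead`, loop over `FileChunker.ReadChunks(stream, chunkSize)`, and pass each segment to the service, for example through a hypothetical operation such as `AppendChunk(fileId, offset, data)` that the service contract would have to define.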

Firstly, by default, WCF encodes byte arrays as Base64 text, which inflates the payload by a 4:3 ratio. To avoid this overhead, WCF supports both MTOM and binary encoding. MTOM is a widely supported format that sends large binary data as MIME attachments after the SOAP message. Binary encoding is a WCF-proprietary format. MTOM is extremely easy to enable: just change the messageEncoding attribute of the binding element in the configuration from Text to Mtom. The messageEncoding attribute of the standard HTTP bindings accepts only Text and Mtom, so binary encoding over HTTP requires a customBinding that contains a binaryMessageEncoding element.
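The fragment below is a sketch of how the two encodings might look in configuration. The binding names are hypothetical, and the rest of the system.serviceModel section (services, endpoints) is omitted. The first binding switches a basicHttpBinding to MTOM; the second shows one way to get binary encoding over HTTP, through a customBinding.

```xml
<system.serviceModel>
  <bindings>
    <basicHttpBinding>
      <!-- Hypothetical binding name; MTOM sends byte arrays as MIME parts -->
      <binding name="MtomUploadBinding" messageEncoding="Mtom" />
    </basicHttpBinding>
    <customBinding>
      <!-- Hypothetical binding name; WCF binary encoding over HTTP -->
      <binding name="BinaryUploadBinding">
        <binaryMessageEncoding />
        <httpTransport />
      </binding>
    </customBinding>
  </bindings>
</system.serviceModel>
```

The client and the server must use the same encoding, so the same change has to be made in both configuration files.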

Secondly, experiments showed that each round trip through the web service is fairly slow, so better performance can be achieved by using a larger chunk size. By default, the maximum message size is 65536 bytes and the maximum array length is 16384 bytes. To transmit larger chunks, we need to increase both limits. This can be done by increasing the maxBufferSize and maxReceivedMessageSize attributes of the binding element and the maxArrayLength attribute of the readerQuotas element. Note that increasing the maximum sizes also increases the risk of denial-of-service attacks, so select the sizes carefully.

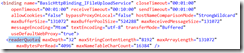
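In text form, such a binding might look like the sketch below. The binding name and the sizes are assumptions chosen for illustration: 1 MB segments, with the message limit set slightly higher to leave room for the SOAP envelope. In buffered transfer mode, maxBufferSize has to match maxReceivedMessageSize.

```xml
<basicHttpBinding>
  <!-- Hypothetical binding name; sizes are illustrative, not recommendations -->
  <binding name="LargeUploadBinding"
           messageEncoding="Mtom"
           maxBufferSize="1114112"
           maxReceivedMessageSize="1114112">
    <!-- Allows byte arrays of up to 1 MB (1048576 bytes) per message -->
    <readerQuotas maxArrayLength="1048576" />
  </binding>
</basicHttpBinding>
```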

Thirdly, although IIS can be configured to compress HTTP responses automatically, HTTP clients cannot be configured to compress HTTP requests automatically. WCF ships a sample custom compression encoder that is fairly easy to use: just reference GZipEncoder.dll on both the server and the client side. The configuration settings can be extracted from the sample. The only thing that is not apparent from the sample is how to increase the message size. The maxReceivedMessageSize attribute can be configured on the httpTransport element:
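A sketch of that setting, assuming a customBinding like the one in the sample, is shown below. The binding name and the size are illustrative, and the encoder element is deliberately left as a comment because its exact name and attributes should be copied from the sample's own configuration file.

```xml
<customBinding>
  <!-- Hypothetical binding name -->
  <binding name="GZipUploadBinding">
    <!-- The sample's GZip message encoding element goes here,
         copied from the sample's configuration. -->
    <httpTransport maxReceivedMessageSize="1114112"
                   maxBufferSize="1114112" />
  </binding>
</customBinding>
```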

To change the maxArrayLength in readerQuotas, I actually had to make a modification to the sample code:

The finished proof-of-concept application can be downloaded here.

-

LINQ and IEnumerable may surprise you

Let us examine the following code snippet:

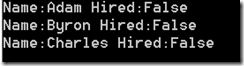

    using System;
    using System.Linq;

    class Candidate
    {
        public string Name { get; set; }
        public bool Hired { get; set; }
    }

    class Program
    {
        static void Main(string[] args)
        {
            string[] names = { "Adam", "Byron", "Charles" };
            var candidates = names.Select(n => new Candidate() { Name = n, Hired = false });

            // now modify the value of each object
            foreach (var candidate in candidates)
            {
                candidate.Hired = true;
            }

            // now print the value of each object
            foreach (var candidate in candidates)
            {
                Console.WriteLine(string.Format("Name:{0} Hired:{1}", candidate.Name, candidate.Hired));
            }

            Console.Read();
        }
    }

Basically, I create an IEnumerable<Candidate> from LINQ and then loop through the results to modify each object. At the end, you might expect each candidate to be hired. Wrong! Every candidate is still printed as not hired:

    Name:Adam Hired:False
    Name:Byron Hired:False
    Name:Charles Hired:False

What happened is that LINQ deferred creating the objects until enumeration. The first loop modified one set of objects; when we enumerated again to print them, LINQ ran the query again and generated a new set of objects. If you use ToList() to convert the IEnumerable<Candidate> to a List<Candidate>, you will get the expected result:

    var candidates = names.Select(n => new Candidate() { Name = n, Hired = false }).ToList();

ToList() instantiates the objects immediately, so every subsequent enumeration works on the same objects. This may also be one reason why there is no ForEach() extension method on IEnumerable<T>. ForEach() is an instance method of List<T>, so you have to call ToList() before you can use it.
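To make the deferred execution visible, the following sketch counts how many times the Select() lambda runs when the same query is enumerated twice. The class and method names are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredExecutionDemo
{
    // Enumerates the same query twice and returns how many times
    // the Select() lambda was invoked in total.
    public static int CountSelectorCalls(bool materialize)
    {
        string[] names = { "Adam", "Byron", "Charles" };
        int calls = 0;

        // Nothing runs here; Select() only describes the projection.
        IEnumerable<string> query = names.Select(n => { calls++; return n.ToUpperInvariant(); });

        if (materialize)
        {
            // ToList() runs the lambda once per element and stores the results.
            query = query.ToList();
        }

        foreach (var item in query) { } // first enumeration
        foreach (var item in query) { } // second enumeration

        return calls;
    }
}
```

`DeferredExecutionDemo.CountSelectorCalls(false)` returns 6 because each enumeration re-runs the lambda for all three names, while `CountSelectorCalls(true)` returns 3 because the list is built once and then reused.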