Contents tagged with LINQ

-

Missing methods in LINQ: MaxWithIndex and MinWithIndex

The LINQ library has Max methods and Min methods. However, sometimes we are interested in the index location in the IEnumerable<T> rather than the actual values. Hence the MaxWithIndex and MinWithIndex methods.

These methods return a Tuple. The first item of the Tuple is the maximum or minimum value just the the Max and Min methods. The second item of the Tuple is the index location.

As usually, you might get my LINQ extension from NuGet:

PM>Install-Package SkyLinq.Linq

Usage examples in the unit test.

-

SkyLinq binaries are available on NuGet

After much hesitate, I finally published my SkyLinq binaries on NuGet. My main hesitation was that this is my playground so I am changing things at will. The main reason to publish is that I want to use these works myself so I need an easy way to get the latest binaries into my projects. NuGet is the easiest way to distribute and get updates, including my own projects. There are 3 packages:

- SkyLinq.Linq is a portal library that contains some LINQ extensions.

- SkyLinq.Composition contains my duck-typing implementation. It is similar to Impromptu-Interface but it is much simpler and it uses il.emit instead of LINQ Expressions to generate code. It also contains a LINQ query rewriting example.

- LINQPadHost is a simple hosting and executing environment for LINQPad queries. Live demo at http://skylinq.azurewebsites.net/SkyLINQPad.

-

Sky LINQPad, a minimum viable clone of LINQPad in the cloud

A while ago, I blogged about a simple LINQPad query host. It is fairly easy to put a web face on it. The only change that I had to make is to set the ApplicationBase for the AppDomains that I create as asp.net is quite different to an .exe app. A playground is now running at http://skylinq.azurewebsites.net/SkyLINQPad. One can upload an existing .linq files designed in LINQPad or type some queries directly into the page:

-

A simple LINQPad query host

I am a big fan of LINQPad. I use LINQPad routinely during my work to test small, incremental ideas. I used it so much so that I bough myself a premium license.

I always wish I can run queries designed in LINQPad in my own program. Before 4.52.1 beta, there was only a command line interface. In LINQPad v4.52.1 beta, there is finally a Util.Run method that allows me to run LINQPad queries in my own process. However, I felt that I did not have sufficient control on how I can dump the results. So I decided to write a simple host myself.

As in the example below, a .linq file starts with an xml meta data section followed by a blank line and then the query or the statements.

<Query Kind="Expression"> <Reference><RuntimeDirectory>\System.Web.dll</Reference> <Reference><ProgramFilesX86>\Microsoft ASP.NET\ASP.NET MVC 4\Assemblies\System.Web.Mvc.dll</Reference> <Namespace>System.Web</Namespace> <Namespace>System.Web.Mvc</Namespace> </Query> HttpUtility.UrlEncode("\"'a,b;c.d'\"")

The article “http://www.linqpad.net/HowLINQPadWorks.aspx” on the LINQPad website gives me good information on how to compile and execute queries. LINQPad uses CSharpCodeProvider (or VBCodeProvider) to compile queries. Although I was tempted to use Roslyn like ScriptCS, I decided to use CSharpCodeProvider to ensure compatible with LINQPad.

We only need 3 lines of code to the LINQPad host:

using LINQPadHost; ... string file = @"C:\Users\lichen\Documents\LINQPad Queries\ServerUtility.linq"; Host host = new Host(); host.Run<JsonTextSerializer>

(file); As I mentioned at the beginning. I would like to control the dumping of the results. JsonTextSerializer is one of the three serializers that I supplied. The other two serializers are IndentTextSerializer and XmlTextSerializer. Personally, I found that the JsonTextSerializer and IndentTextSerializer the most useful.

The source code could be found here.

Examples could be found here.

-

LINQ query optimization by query rewriting using a custom IQueryable LINQ provider

In my SkyLinq open source project so far, I tried to make LINQ better and created several extension methods (for example, see GroupBy and TopK) that are more memory efficient than the standard methods in some scenarios. However, I do not necessarily want people to bind into these extensions methods directly for the following reasons:

- Good ideas evolve. New methods may come and existing methods may change.

- There is a parallel set of methods in IEnumerable<T>, IQueryable<T> and to some degree, in IObservable<T>. Using custom LINQ extension breaks the parallelism.

A better approach would be to write the LINQ queries normally using the standard library methods and then optimize the queries using a custom query provider. Query rewriting is not a new idea. SQL server has been doing this for years. I would like to bring this idea into LINQ.

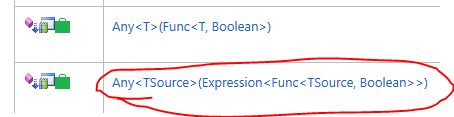

If we examine the IQueryable<T> interface, we will find there is a parallel set of LINQ methods that accepts Expression<Func> instead of <Func>.

There is a little C# compiler trick here. If C# see a method that accepts Expression<Func>, it would create Expressions of Lamda instead of compiled Lambda expression. At run time, these expressions are passed to an IQueryable implementation and then passed to the underlying IQueryProvider implementation. The query provider is responsible for executing the expression tree and return the results. This is how the magic of LINQ to SQL and Entity Frameworks works.

The .net framework already has a class called EnumerableQuery<T>. It is a query provider than turns IQueryable class into LINQ to objects queries. In this work, I am going one step further by creating an optimizing query provider.

A common perception of writing a custom LINQ provider is that it requires a lot of code. The reason is that even for the most trivial provider we need to visit the every possible expressions in the System.Linq.Expressions namespace. (Note that we only need to handle expressions as of .net framework 3.5 but do not need to handle the new expressions added as part of dynamic language runtime in .net 4.x). There are reusable framework such as IQToolkit project that makes it easier to create a custom LINQ provider.

In contrast, creating an optimizing query provider is fairly easy. The ExpressionVistor class already has the framework that I need. I only needed to create a subclass of ExpressionVisitor called SkyLinqRewriter to override a few methods. The query rewriter basically replaces all call to the Queryable class with equivalent calls to the Enumerable class and rewrite queries for optimization when an opportunity presents.

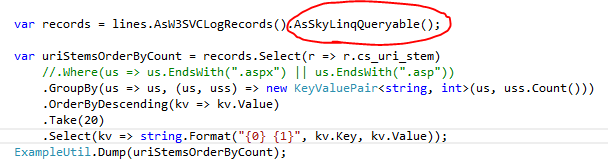

It is fairly easy to consume the optimizing query provider. All we need is to call AsSkyLinqQueryable() to convert IEnumerable<T> to IQueryable<T> and remaining code can stay intact:

An end-to-end example can be found at https://skylinq.codeplex.com/SourceControl/latest#SkyLinq.Example/LinqToW3SVCLogExample.cs.

To conclude this post, I would recommend that we always code to IQueryable<T> instead of IEnumerable<T>. This way, the code has the flexibility to be optimized by an optimizing query provider, or be converted from pull based query to push based query using Reactive Extension without rewriting any code.

-

An efficient Top K algorithm with implementation in C# for LINQ

The LINQ library currently does not have a dedicated top K implementation. Programs usually use the OrderBy function followed by the Take function. For N items, an efficient sort algorithm would scale O(n) in space and O(n log n) in time. Since we are counting the top K, I believe that we could devise an algorithm that scales O(K) in space.

Among all the sorting algorithms that I am familiar, the heapsort algorithm came to my mind first. A Heap has a tree-like data structure that can often be implemented using an array. A heap can either be a max-heap or a min-heap. In the case of min-heap, the minimum element of at the root of the tree and all the child elements are greater than their parents.

Binary heap is a special case of heap. A binary min-heap has the following characteristics:

- find-min takes O(1) time.

- delete-min takes O(log n) time.

- insert takes O(log n) time.

So here is how I implement my top K algorithm:

- Create a heap with an array of size K.

- Insert items into the heap until the heap reaches its capacity. This takes K O(log K) time.

- For each the remaining elements, if an element is greater than find-min of the heap, do a delete-min and then insert the element into the heap.

- Then we repeated delete-min until the heap is empty and arrange the deleted element in reverse order and we get our top 10 list.

The time it takes to find top K items from an N item list is:

O(N) * t1 + O((K + log N - log K) * log K) * t2

Here t1 is the time to compare an element in step 3 to find-min and t2 is the combined time of delete-min and the subsequent insert. So this algorithm is much more efficient that a call to OrderBy followed by a call to Take.

I have checked-in my implementation to my Sky LINQ project. The LINQ Top and Bottom is at in LinqExt.cs. The internal binary heap implementation is in BinaryHeap.cs. The LINQ example can be found in HeapSort.cs. The Sky Log example has also been updated to use the efficient Top K algorithm.

Note that this algorithm combined OrderBy and Take by GroupBy itself still uses O(N) splace where N is number of groups. There are probabilistic approximation algorithms that can be used to further alleviate memory foot print. That is something I could try in the future.

-

My first impression on Tx (LINQ to Logs and Traces)

Yesterday, I learnt about Tx (LINQ to Logs and Traces) from This Week On Channel 9. The name suggest that it has overlap with my end-to-end example on LINQ to W3SVC log files. It turns out that the overlap is minimal.

Tx is a Microsoft Open Technologies project. The source code commit history suggest it was originally part Reactive Extension (Rx) developed by the Rx team and became an independent project a year ago. Tx heavily uses Rx.

The Tx project has a page on “When to use Tx?” and I will quote a portion of it below.

When NOT to use Tx

Use only LINQ-to-Objects when:- There are no real-time feeds involved

- The data is already in memory (e.g. List <T>), or you are dealing with single file that is easy to parse - e.g. a text file, each line representing event of the same type.

Use only Reactive Extensions (Rx) when:- There are real-time feeds (e.g. mouse moves)

- You need the same query to work on past history (e.g. file(s)) and real-time

- Each feed/file contains a single type of events, like T for IObservable<T>

When to use Tx

Tx.Core adds the following new features to Rx:- Support for Multiplexed sequences (single sequence containing events of different types in order of occurence). The simplest example of turning multiplexd sequence into type-specific Obseravable-s is the Demultiplexor

- Merging multiple input files in order of occurence - e.g. two log files

- Hereogeneous Inputs - e.g. queries across some log files (.evtx) and some traces (.etl)

- Single-pass-read to answer multiple queries on file(s)

- Scale in # of queries. This is side effect of the above, which applies to both real-time and single-read of past history

- Providing virtual time as per event timestamps. See TimeSource

- Support for both "Structured" (like database, or Rx) and "Timeline" mode (like most event-viewing tools)

The Tx W3CEnumerable.cs does have overlap with my implementation of log parser. I wish I had noticed the project earlier. In the future, I will try to align my project with Tx to make it easier to use them together. I just had an implementation of duct-typing so interoperating different types from the same idea should be easy.

-

A c# implementation of duck typing

Eric Lippert’s blogs (and his old blogs) have been my source to the inner working of VBScript and C# in the past decade. Recently, his blog on “What is ‘duck typing’?” has drawn lots of discussions and generated additional blogs from Phil Haack and Glenn Block. The discussion injects new ideas into my thoughts on programming by composition. I previously argued that interfaces are too big a unit for composition because they often live in different namespaces and only a few of the well-known interfaces are widely supported. I was in favor of lambdas (especially those that consume or return IEnumerable<T>) as composition unit. However, as I worked through my first end-to-end example, I found that lambdas are often too small (I will blog about this in details later).

Duck typing may solve the interface incompatibility problem in many situations. As described in Wikipedia, The name came from the following phrase:

“When I see a bird that walks like a duck and swims like a duck and quacks like a duck, I call that bird a duck.”

If I would translate it into software engineering terms, I would translate it as:

The client expects certain behavior. If the server class has the behavior, then the client can call the server object even though the client does not explicitly know the type of the server objects.

That leads to the following implementation in a strongly-typed language like c#, consisting of:

- An interface defined by me to express my expected behaviors of duck, IMyDuck.

- An object from others that I want to consume. Let’s call its type OtherDuck.

- A function that checks if Duck has all the members of IMyDuck.

- A function (a proxy factory) that generates a proxy that implements IMyDuck and wrap around OtherDuck.

- In my software, I only bind my code to IMyDuck. The proxy factory is responsible for bridging IMyDuck and OtherDuck.

This implementation takes the expected behaviors as a whole. Except for the one time code generation for the proxy, everything else is strongly-typed at compile time. It is different to the “dynamic” in c# which implements late binding at the callsite.

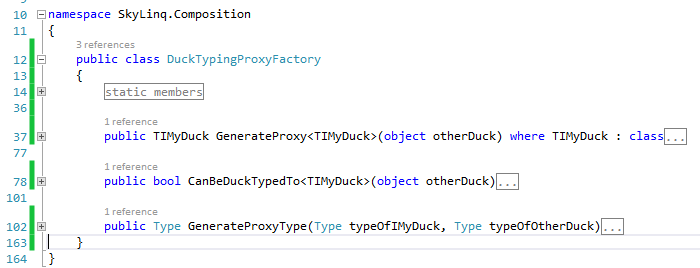

I have checked my implementation into my Sky LINQ project under the c# project of SkyLinq.Composition. The name of the class is DuckTypingProxyFactory. The methods look like:

I have also provided an example called DuckTypingSample.cs in the SkyLinq.Sample project.

So when would duck-typing be useful? Here are a few:

- When consuming libraries from companies that implement the same standard.

- When different teams in a large enterprise each implement the company’s entities in their own namespace.

- When a company refactors software (see the evolution of asp.net for example).

- When calling multiple web services.

Note that:

- I noticed the impromptu-interface project that does the same thing after I completed by project. Also, someone mentioned TypeMock and Castle in the comment of Eric’s log. So I am not claiming to be the first person with the idea. I am still glad to have my own code generator because I am going to use it to extend the idea of strongly-typed wrapper as comparing to data-transfer-objects.

- Also, nearly all Aspect-Oriented-Programming (AOP) and many Object-Relational-Mapping (ORM) frameworks have the similar proxy generator. In general, those in AOP frameworks are more complete because ORM frameworks primary concern with properties. I did looked at the Unity implementation when I implemented mine (thanks to the Patterns & Practices team!). My implementation is very bare-metal and thus easy to understand.

-

SkyLog: My first end-to-end example on programming by composition

I have completed my first end-to-end example as I am exploring the idea of programming by composition. SkyLog is an example on querying IIS Log files like what LogParser do, except:

- It is built on LINQ and thus can use the entire .net framework, not just the built-in functions of LogParser.

- It can use Parallel LINQ and thus uses multiple cores effectively.

- Any part of a query can be refactored into a function and thus reused.

- We just need to write the queries once and the queries can be used against one log file, a whole directory of log files, several servers, and in any storage, such as Azure Blog Storage.

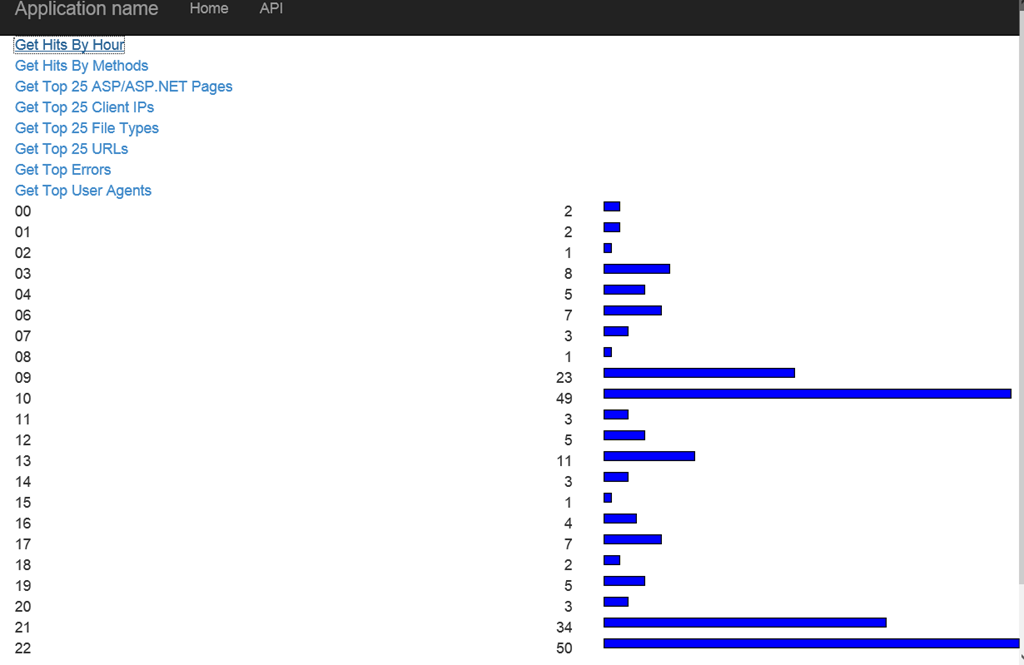

The running example are available at http://skylinq.azurewebsites.net/skylog. The example runs the query against some log files stored in Azure Blog Storage.

The queries in the example are from http://blogs.msdn.com/b/carlosag/archive/2010/03/25/analyze-your-iis-log-files-favorite-log-parser-queries.aspx.

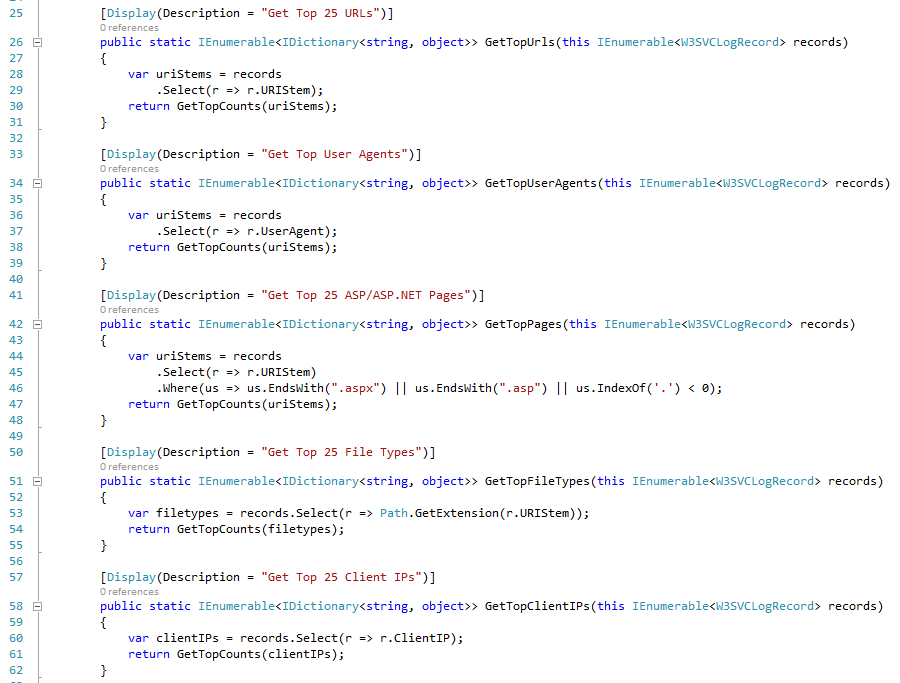

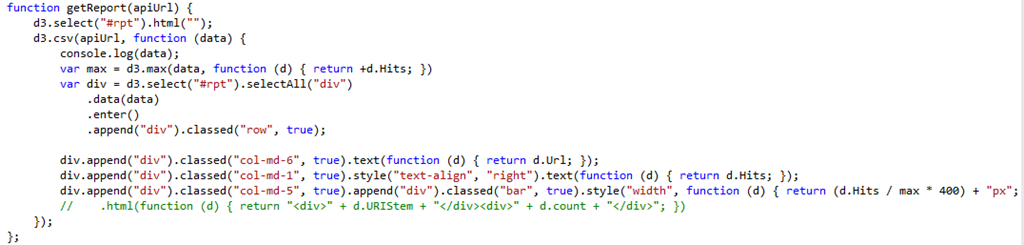

The queries look even simpler than what you normally write against LogParser or SQL Server:

That is because part of the queries were refactored into two reusable functions below. We cannot reuse part of query with LogParser. With SQL Server, we can create reusable units with View. With LINQ, we can reuse any part of the LINQ query.

Note that I used the memory efficient version of GroupBy written by me previously. The equivalent code using the GroupBy in LINQ is commented out.

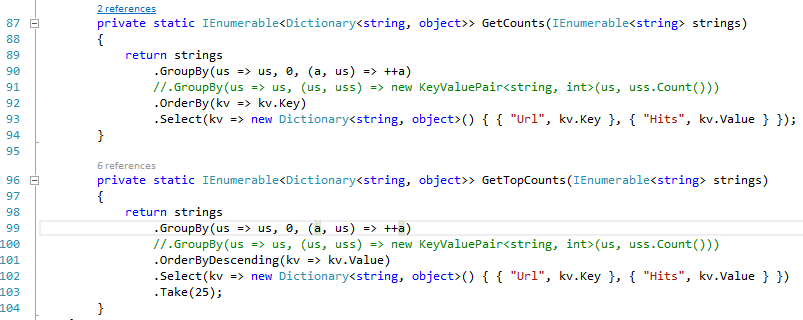

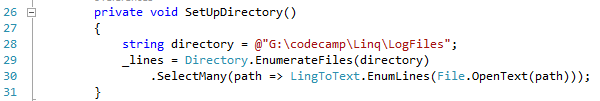

To run these reports, we just need records from the log files as IEnumerable<string>. To get them from a directory, the code would look like:

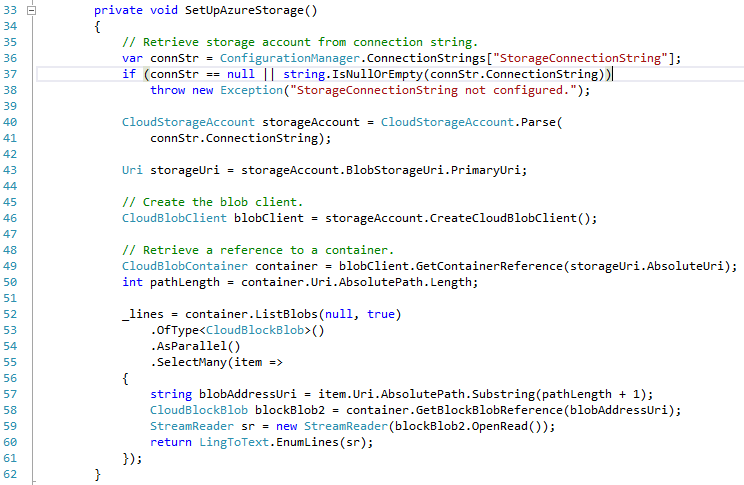

To get records from Windows Azure Blob Storage, the could would look like:

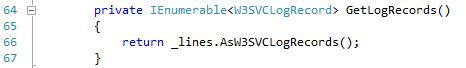

Lastly, we need to convert IEnumberable<string> to strongly-typed wrapper, IEnumerable<W3SVCLogRecord>, to be consumed by the reports:

I touched upon the idea of strongly-typed wrapper previously. The purpose of strongly-typed wrapper is to allow intelligence while minimize the garbage collection. The wrapper provides access to underlying data, but does not convert the data to a data-transfer-object.

The front-end is a simple ASP.NET MVC page. Reports are exposed through ASP.NET Web API using the CSV formatter that I built previously. The visualization was built using D3.js. The code is so simple that we could think each line of the code as Javascript based widgets. If we desire more sophisticated UI, we could compose the UI with a different widget. We could even introduce a client-side template engine such as Mustache.js.

Even with simple example, there are enough benefits in it so that I probably would never need to use LogParser again. The example is fully built on LINQ to IEnumerable. I have not used any cool feature from IQueryable and Rx yet. As usually, the latest code is in http://skylinq.codeplex.com. Stay tuned for more fun stuff.

-

Custom Web API Message Formatter

ASP.NET Web API supports multiple message formats. The framework uses the “Accept” request header from the client to determine the message format. If you visit a Web API URL with internet explorer, you will get a JSON message by default; if you use Chrome, which sends “application/xml;” in the accept header, you will get a XML message.

Message format is an extensivity point in Web API. There is a pretty good example on ASP.NET web site. Recently, when I worked on my SkyLinq open source cloud analytic project, I needed a way to expose data to the client in a very lean manner. I would like it to leaner than both XML and JSON, so I used csv. The example provided on the ASP.NET website is actually a CSV example. The article was lastly updated on March 8, 2012. Since ASP.NET is rapidly advancing, I found that I need to make the following modifications to make the example working correctly with Web API 2.0.

- CanReadType is now a public function instead of a proetected function.

- The signature of WriteToStream has changed. The current signature is

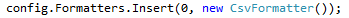

- When addition the Media Formatter to the Configuration, I have to insert my custom formatter into the formatters collection as the first. This is because the JSON Formatter actually recognizes the “application/csv” header and send JSON back instead. Example below: