Understanding Thread Local Storage(TLS) in TPL

.Net 4.0 simplified writing parallel code by introducing the parallel variant of For/ForEach loop constructs. Most often the code that we write using Parallel.For/ForEach looks something like this :

Parallel.ForEach(<some collection>,(item) =>

{

// do some work corresponding to each item and

// store the result set in a common collection

});

Parallelizing the input data, performing some action corresponding to each item is the easy part. Things get interesting when we have to store/combine the output of each action into a common collection. Say for e.g. storing the result in the form of key-value pair in a common dictionary class where key being the input item.

When we parallelize a collection, the framework partitions the data into various chunks and then invokes multiple threads in order to process these partitioned data(I know I am over simplifying things here). It could very well happen that multiple threads will finish their task at the same time(almost) and they will try to add the result-set into the common collection. Since adding items to a collection is not inherently thread-safe, we will have to provide some sort of locking. Take a look at the following code :

private static void TestLockPerformance()

{

var collection = new Dictionary<int, int>();

Stopwatch watch = Stopwatch.StartNew();

Parallel.ForEach(Enumerable.Range(1, 10000000),

(i) =>

{

int sum = i + i;

lock (collection) //explicit locking

{

collection.Add(i, sum);

}

});

int count = collection.Count;

Console.WriteLine("Time : " + watch.ElapsedMilliseconds);

}

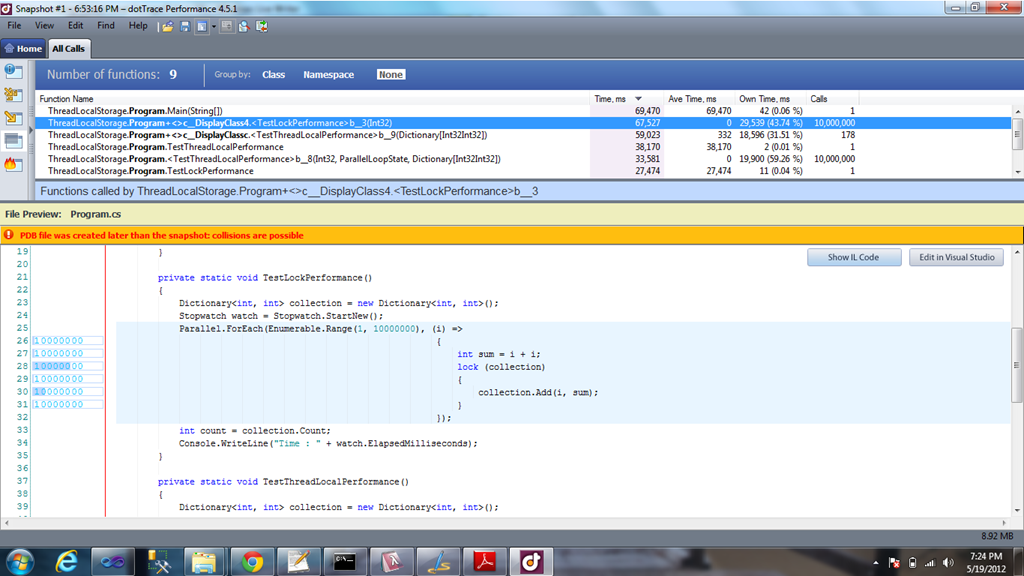

We will have to have explicit locking in place in order to avoid any locking kind of scenario. I profiled this code snippet via dotTrace and this is the what the result looked like.

As we can see in the highlighted section of the code that the lock statement is executed 10 million times. Similarly the width of the shaded area in the horizontal bar(line number 28) tells us that this is where the threads spent of most of their time. Threads spent most of their time waiting to acquire the lock, so that they can add the result-set to the common collection. This explicit locking hurts application performance and prevents a parallel program from truly utilizing the system resources.

Thread Local Storage

In the previous implementation, a given thread, picks up an item, process it and then wait/acquire the lock and then adds the item to the collection. The same is true for all the other threads which are processing the items in parallel. What if we can provide a mechanism wherein all the threads will have their own local collection classes in which they can add the results of the items which they have processed and finally once all the threads are done, we will combine the data of these “thread specific collection” into one giant collection. In this way we will be able to drastically reduce the contingency introduced via the lock statement. The thread specific collection/placeholder/<it could be anything> is known as thread local storage.

Good thing is that the Parallel.For/ForEach construct inherently support the concept of thread local storage via one of their method overloads. The method signature in case of Parallel.Foreach looks something like this :

public static ParallelLoopResult ForEach<TSource, TLocal>(

Partitioner<TSource> source,

Func<TLocal> localInit,

Func<TSource, ParallelLoopState, TLocal, TLocal> body,

Action<TLocal> localFinally

)

Note: For a full list of method overloads of Parallel.ForEach check this out : http://msdn.microsoft.com/en-us/library/system.threading.tasks.parallel.foreach.aspx

- Partitioner<TSource> source : First parameter is the collection that we need to parallelize

- Func<TLocal> localInit : As each thread is going to have its own collection, the initialization logic of that collection is defined via this system delegate.

- Func<TSource, ParallelLoopState, TLocal, TLocal> body : Body of the ForEach loop. The second last parameter in Func<> delegate i.e. TLocal signifies the thread local object. Similarly the last parameter signifies the return type of the Func<> delegate. We will see this in detail in the later section of this article. ParallelLoopState is not in the scope of this article, so just ignore it.

- Action<TLocal> localFinally : In the body of this delegate, we merge the data from each thread specific collection into one large collection. As we can see the type of the input parameter of the Action<> delegate is same as the return type of the Func<> delegate which signifies the body of the loop.

Code

1: private static void TestThreadLocalPerformance()

2: {

3: Dictionary<int, int> collection = new Dictionary<int, int>();

4: Stopwatch watch = Stopwatch.StartNew();

5: Parallel.ForEach(Enumerable.Range(1, 10000000),

6: () => new Dictionary<int, int>(),

7: (i, loopBody, localState) =>

8: {

9: int sum = i + i;

10: localState.Add(i, sum);

11: return localState;

12: },

13: (localstate) =>

14: {

15: lock (collection)

16: {

17: foreach (KeyValuePair<int, int> item in localstate)

18: {

19: collection.Add(item.Key, item.Value);

20: }

21: }

22: });

23:

24: int count = collection.Count;

25: Console.WriteLine("Time : " + watch.ElapsedMilliseconds);

26: }

In line number 6, we are initializing the thread specific dictionary class. In the body of the loop(line number between 7 & 12), we are accessing the thread specific object via “localState” object. Finally in the code block(line number between 13 & 22), we are adding the items from each of the thread specific collection into one common collection. In general the code flows in the following manner :

- Input data is partitioned

- Partitioned data is distributed among multiple threads

- Each thread process its own work item

- After processing, it enter the last Action block, where we merge the result-set into one common collection. In here, since multiple threads can come in at the same time, we need to explicit lock the collection object.

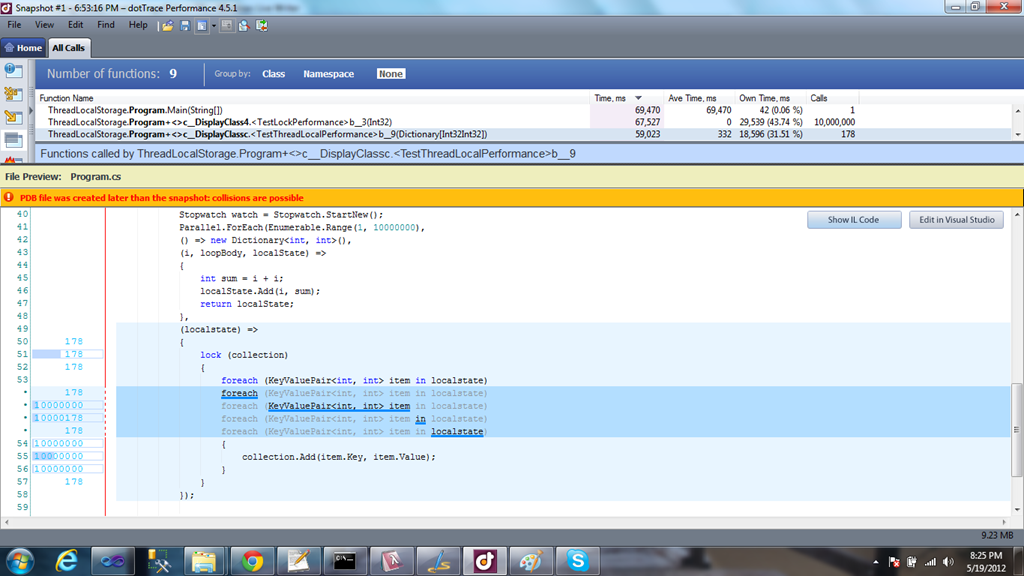

So, is this extra amount of code to increase the performance of the parallel block really worth? Are we sure that this code is going to perform much better that the previous one? Let’s see what the profiler has to say.

We can see here that there is absolutely no contingency in the body of the loop. The total number of time, the lock statement has been invoked is just 178(this number can vary depending upon the number of threads created by the framework).

In my machine, which is a dual core machine only two threads can run at a time. Given that there are other process running in parallel(e.g. VS 2010, Outlook etc) chances are high that the parallel versions of the code(with & without using thread local storage) might not differ a lot in terms of time taken to execute the code. But in the production environment wherein we have lots of cores(8,16 or even more) the code which uses “Thread Local Storage” will scale up much more that the other parallel version of the code.

Thread local storage concept is not something that is introduced in the new TPL framework. It has existed for quiet some time. Its just that the new Parallel API makes it really easy to use. While using the thread local storage concept do keep in mind that it will work only in those scenarios, wherein you have one-to-one relationship between the input & the output result-set. Finally, always analyze the performance of the sequential code before switching over too the parallel version of it.

I hope you enjoyed reading this article. Have a nice day :).