Contents tagged with Azure

-

I’m speaking at a Free AzureCraft event in London on June 3rd

I’ll be in the UK next week presenting at the free AzureCraft event being held on June 3rd and 4th. This event was created by the UK Azure User Group and is a great way to learn about Azure as well as engage with the Azure community in the UK.

-

Welcoming the Xamarin team to Microsoft

As the role of mobile devices in people's lives expands even further, mobile app developers have become a driving force for software innovation. At Microsoft, we are working to enable even greater developer innovation by providing the best experiences to all developers, on any device, with powerful tools, an open platform and a global cloud.

As part of this commitment I am pleased to announce today that Microsoft has signed an agreement to acquire Xamarin, a leading platform provider for mobile app development.

In conjunction with Visual Studio, Xamarin provides a rich mobile development offering that enables developers to build mobile apps using C# and deliver fully native mobile app experiences to all major devices – including iOS, Android, and Windows. Xamarin’s approach enables developers to take advantage of the productivity and power of .NET to build mobile apps, and to use C# to write to the full set of native APIs and mobile capabilities provided by each device platform. This enables developers to easily share common app code across their iOS, Android and Windows apps while still delivering fully native experiences for each of the platforms. Xamarin’s unique solution has fueled amazing growth for more than four years.

Xamarin has more than 15,000 customers in 120 countries, including more than one hundred Fortune 500 companies - and more than 1.3 million unique developers have taken advantage of their offering. Top enterprises such as Alaska Airlines, Coca-Cola Bottling, Thermo Fisher, Honeywell and JetBlue use Xamarin, as do gaming companies like SuperGiant Games and Gummy Drop. Through Xamarin Test Cloud, all types of mobile developers (C#, Objective-C, Java and hybrid app builders) can also test and improve the quality of apps using thousands of cloud-hosted phones and devices. Xamarin was recently named one of the top startups that help run the Internet.

Microsoft has had a longstanding partnership with Xamarin, and we have jointly built Xamarin integration into Visual Studio, Microsoft Azure, Office 365 and our Enterprise Mobility Suite to provide developers with an end-to-end workflow for native, secure apps across platforms. We have also worked closely together to offer the training, tools, services and workflows developers need to succeed.

With today’s acquisition announcement we will be taking this work much further to make our world class developer tools and services even better with deeper integration and enable seamless mobile app dev experiences. The combination of Xamarin, Visual Studio, Visual Studio Team Services, and Azure delivers a complete mobile app dev solution that provides everything a developer needs to develop, test, deliver and instrument mobile apps for every device. We are really excited to see what you build with it.

We are looking forward to providing more information about our plans in the near future – starting at the Microsoft //Build conference coming up in a few weeks, followed by Xamarin Evolve in late April. Be sure to watch my Build keynote and get a front row seat at Evolve to learn more!

Thanks,

Scott

-

AzureCon Keynote Announcements: India Regions, GPU Support, IoT Suite, Container Service, and Security Center

Yesterday we held our AzureCon event and were fortunate to have tens of thousands of developers around the world participate. During the event we announced several great new enhancements to Microsoft Azure including:

- General Availability of 3 new Azure regions in India

- Announcing new N-series of Virtual Machines with GPU capabilities

- Announcing Azure IoT Suite available to purchase

- Announcing Azure Container Service

- Announcing Azure Security Center

We were also fortunate to be joined on stage by several great Azure customers who talked about their experiences using Azure including: Jet.com, Nascar, Alaska Airlines, Walmart, and ThyssenKrupp.

Watching the Videos

All of the talks presented at AzureCon (including the 60 breakout talks) are now available to watch online. You can browse and watch all of the sessions here.

My keynote to kick off the event was an hour long and provided an end-to-end look at Azure and some of the big new announcements of the day. You can watch it here.

-

Announcing General Availability of HDInsight on Linux + new Data Lake Services and Language

Today, I’m happy to announce several key additions to our big data services in Azure, including the General Availability of HDInsight on Linux, as well as the introduction of our new Azure Data Lake and Language services.

General Availability of HDInsight on Linux

Today we are announcing general availability of our HDInsight service on Ubuntu Linux. HDInsight enables you to easily run managed Hadoop clusters in the cloud. With today’s release we now allow you to configure these clusters to run using either a Windows Server operating system or an Ubuntu-based Linux operating system.

HDInsight on Linux enables even broader support for Hadoop ecosystem partners to run in HDInsight providing you even greater choice of preferred tools and applications for running Hadoop workloads. Both Linux and Windows clusters in HDInsight are built on the same standard Hadoop distribution and offer the same set of rich capabilities.

Today’s new release also enables additional capabilities such as cluster scaling, virtual network integration and script action support. Furthermore, in addition to the Hadoop cluster type, you can now create HBase and Storm clusters on Linux for your NoSQL and real-time processing needs, such as building an IoT application.

Create a cluster

-

Online AzureCon Conference this Tuesday

This Tuesday, Sept 29th, we are hosting our online AzureCon event – a free online event with 60 technical sessions on Azure, presented by the Azure engineering team as well as MVPs and customers who use Azure today and will share their best practices.

-

Better Density and Lower Prices for Azure’s SQL Elastic Database Pools

A few weeks ago, we announced the preview availability of the new Basic and Premium Elastic Database Pools Tiers with our Azure SQL Database service. Elastic Database Pools enable you to run multiple, isolated and independent databases that can be auto-scaled across a private pool of resources dedicated to just you and your apps. This provides a great way for software-as-a-service (SaaS) developers to better isolate their individual customers in an economical way.

Today, we are announcing some nice changes to the pricing structure of Elastic Database Pools as well as changes to the density of elastic databases within a pool. These changes make it even more attractive to use Elastic Database Pools to build your applications.

Specifically, we are making the following changes:

- Finalizing the eDTU price – With Elastic Database Pools you purchase units of capacity that we call eDTUs – which you can then use to run multiple databases within a pool. We have decided not to increase the price of eDTUs as we go from preview to GA. This means that you’ll be able to pay a much lower price (about 50% less) for eDTUs than many developers expected.

- Eliminating the per-database fee – In addition to lower eDTU prices, we are also eliminating the fee per database that we have had with the preview. This means you no longer need to pay a per-database charge to use an Elastic Database Pool, which makes the pricing much more attractive for scenarios where you want to have lots of small databases.

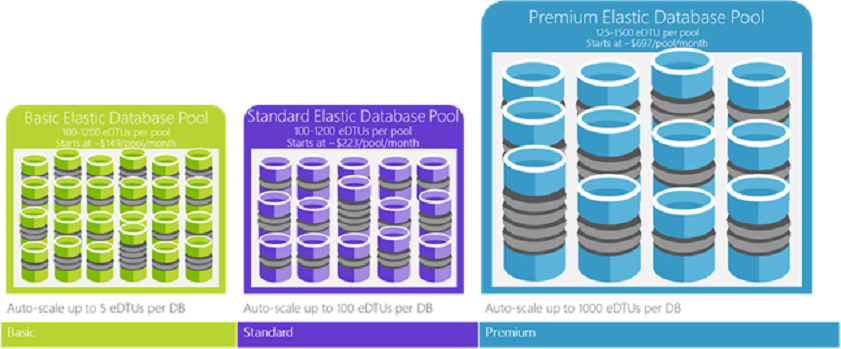

- Pool density – We are announcing increased density limits that enable you to run many more databases per Elastic Database Pool. See the chart below under “Maximum databases per pool” for specifics. This change will take effect at the time of general availability, but you can design your apps around these numbers. The increased pool density limits will make Elastic Database Pools even more attractive.

-

Announcing the Biggest VM Sizes Available in the Cloud: New Azure GS-VM Series

Today, we’re announcing the release of the new Azure GS-series of Virtual Machine sizes, which enable Azure Premium Storage to be used with Azure G-series VM sizes. These VM sizes are now available to use in both our US and Europe regions.

Earlier this year we released the G-series of Azure Virtual Machines – which provide the largest VM size provided by any public cloud provider. They provide up to 32 cores of CPU, 448 GB of memory and 6.59 TB of local SSD-based storage. Today’s release of the GS-series of Azure Virtual Machines enables you to now use these large VMs with Azure Premium Storage – and enables you to achieve up to 2,000 MB/sec of storage throughput, more than double any other public cloud provider. Using the G5/GS5 VM size now also offers more than 20 Gbps of network bandwidth, also more than double the network throughput provided by any other public cloud provider.

These new VM offerings provide an ideal solution to your most demanding cloud-based workloads, and are great for relational databases like SQL Server, MySQL, PostgreSQL and other large data warehouse solutions. You can also use the GS-series to significantly scale up the performance of enterprise applications like Dynamics AX.

The G and GS-series of VM sizes are available to use now in our West US, East US-2, and West Europe Azure regions. You’ll see us continue to expand availability around the world in more regions in the coming months.

GS Series Size Details

The below table provides more details on the exact capabilities of the new GS-series of VM sizes:

-

Announcing Great New SQL Database Capabilities in Azure

Today we are making available several new SQL Database capabilities in Azure that enable you to build even better cloud applications. In particular:

- We are introducing two new pricing tiers for our Elastic Database Pool capability. Elastic Database Pools enable you to run multiple, isolated and independent databases on a private pool of resources dedicated to just you and your apps. This provides a great way for software-as-a-service (SaaS) developers to better isolate their individual customers in an economical way.

- We are also introducing new higher-end scale options for SQL Databases that enable you to run even larger databases with significantly more compute + storage + networking resources.

Both of these additions are available to start using immediately.

Elastic Database Pools

If you are a SaaS developer with tens, hundreds, or even thousands of databases, an elastic database pool dramatically simplifies the process of creating, maintaining, and managing performance across these databases within a budget that you control.

A common SaaS application pattern (especially for B2B SaaS apps) is for the SaaS app to use a different database to store data for each customer. This has the benefit of isolating the data for each customer separately (and enables each customer’s data to be encrypted separately, backed-up separately, etc). While this pattern is great from an isolation and security perspective, each database can end up having varying and unpredictable resource consumption (CPU/IO/Memory patterns), and because the peaks and valleys for each customer might be difficult to predict, it is hard to know how many resources to provision. Developers were previously faced with two options: either over-provision database resources based on peak usage (and overpay), or under-provision to save cost at the expense of performance and customer satisfaction during peaks.

Microsoft created elastic database pools specifically to help developers solve this problem. With Elastic Database Pools you can allocate a shared pool of database resources (CPU/IO/Memory), and then create and run multiple isolated databases on top of this pool. You can set minimum and maximum performance SLA limits of your choosing for each database you add into the pool (ensuring that none of the databases unfairly impacts other databases in your pool). Our management APIs also make it much easier to script and manage these multiple databases together, as well as optionally execute queries that span across them (useful for a variety of operations). And best of all, when you add multiple databases to an Elastic Database Pool, you are able to average out the typical utilization load (because each of your customers tends to have different peaks and valleys) and end up requiring far fewer database resources (and spending less money as a result) than you would if you ran each database separately.
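To make the "averaging out" point concrete, here is a minimal Python sketch. It is only an illustration of the reasoning, not how the service sizes pools: the customer names and usage numbers are made up, and the resource units are arbitrary rather than real eDTU figures. It compares the capacity you would need if every database were provisioned for its own peak with the capacity needed when the databases share one pool.

```python
def provisioning_comparison(loads):
    """Compare capacity needed when databases are sized separately vs. pooled.

    loads maps a database name to a list of resource usage values, one per
    time interval (all lists must be the same length).
    Returns (separate_capacity, pooled_capacity).
    """
    # Sized separately: each database must be provisioned for its own peak.
    separate = sum(max(series) for series in loads.values())
    # Pooled: the pool only needs to cover the highest combined usage
    # observed in any single interval.
    pooled = max(sum(interval) for interval in zip(*loads.values()))
    return separate, pooled


# Hypothetical usage for three customer databases over four intervals
# (arbitrary resource units, invented for illustration).
hourly_usage = {
    "customer_a": [80, 10, 10, 10],
    "customer_b": [10, 70, 10, 10],
    "customer_c": [10, 10, 90, 10],
}

separate, pooled = provisioning_comparison(hourly_usage)
print(f"Provisioned separately: {separate} units")
print(f"Provisioned as a pool:  {pooled} units")
```

Because the three hypothetical customers peak at different times, the pooled figure comes out well below the sum of the individual peaks. If all customers peaked in the same interval, the two numbers would be identical and pooling would offer no capacity saving.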

-

Announcing Windows Server 2016 Containers Preview

At DockerCon this year, Mark Russinovich, CTO of Microsoft Azure, demonstrated the first ever application built using code running in both a Windows Server Container and a Linux container connected together. This demo helped demonstrate Microsoft's vision that in partnership with Docker, we can help bring the Windows and Linux ecosystems together by enabling developers to build container-based distributed applications using the tools and platforms of their choice.

Today we are excited to release the first preview of Windows Server Containers as part of our Windows Server 2016 Technical Preview 3 release. We’re also announcing great updates from our close collaboration with Docker, including enabling support for the Windows platform in the Docker Engine and a preview of the Docker Engine for Windows. Our Visual Studio Tools for Docker, which we previewed earlier this year, have also been updated to support Windows Server Containers, providing you a seamless end-to-end experience straight from Visual Studio to develop and deploy code to both Windows Server and Linux containers. Last but not least, we’ve made it easy to get started with Windows Server Containers in Azure via a dedicated virtual machine image.

Windows Server Containers

Windows Server Containers create a highly agile Windows Server environment, enabling you to accelerate the DevOps process to efficiently build and deploy modern applications. With today’s preview release, millions of Windows developers will be able to experience the benefits of containers for the first time using the languages of their choice – whether .NET, ASP.NET, PowerShell or Python, Ruby on Rails, Java and many others.

Today’s announcement delivers on the promise we made in partnership with Docker, the fast-growing open platform for distributed applications, to offer container and DevOps benefits to Linux and Windows Server users alike. Windows Server Containers are now part of the Docker open source project, and Microsoft is a founding member of the Open Container Initiative. Windows Server Containers can be deployed and managed either using the Docker client or PowerShell.

Getting Started using Visual Studio

The preview of our Visual Studio Tools for Docker, which enables developers to build and publish ASP.NET 5 Web Apps or console applications directly to a Docker container, has been updated to include support for today’s preview of Windows Server Containers. The extension automates creating and configuring your container host in Azure, building a container image which includes your application, and publishing it directly to your container host. You can download and install this extension, and read more about it, at the Visual Studio Gallery here: http://aka.ms/vslovesdocker.

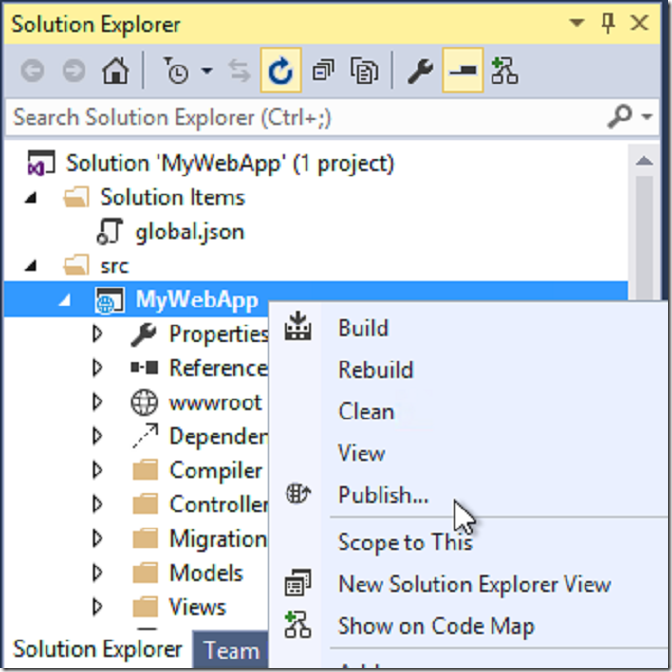

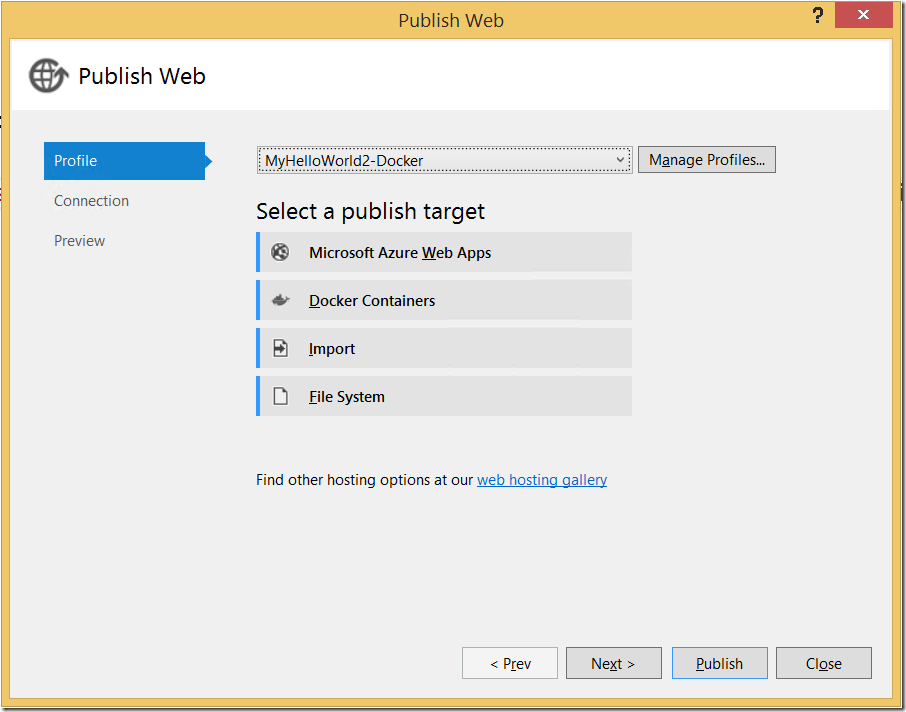

Once installed, developers can right-click on their projects within Visual Studio and select “Publish”:

Doing so will display a Publish dialog which will now include the ability to deploy to a Docker Container (on either a Windows Server or Linux machine):

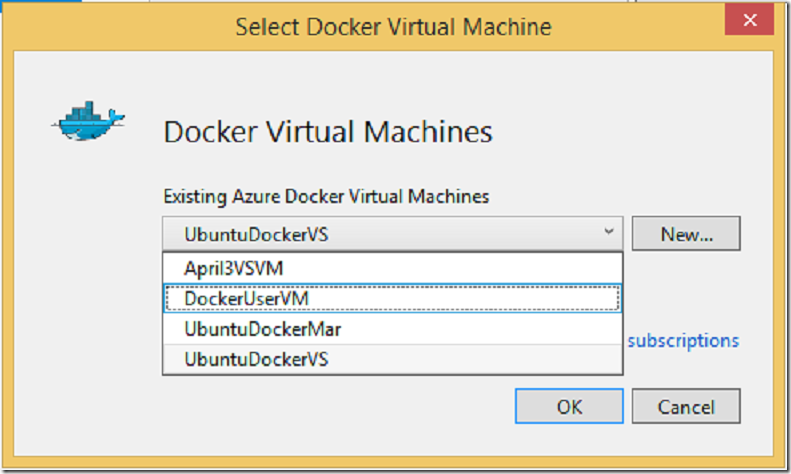

You can choose to deploy to any existing Docker host you already have running:

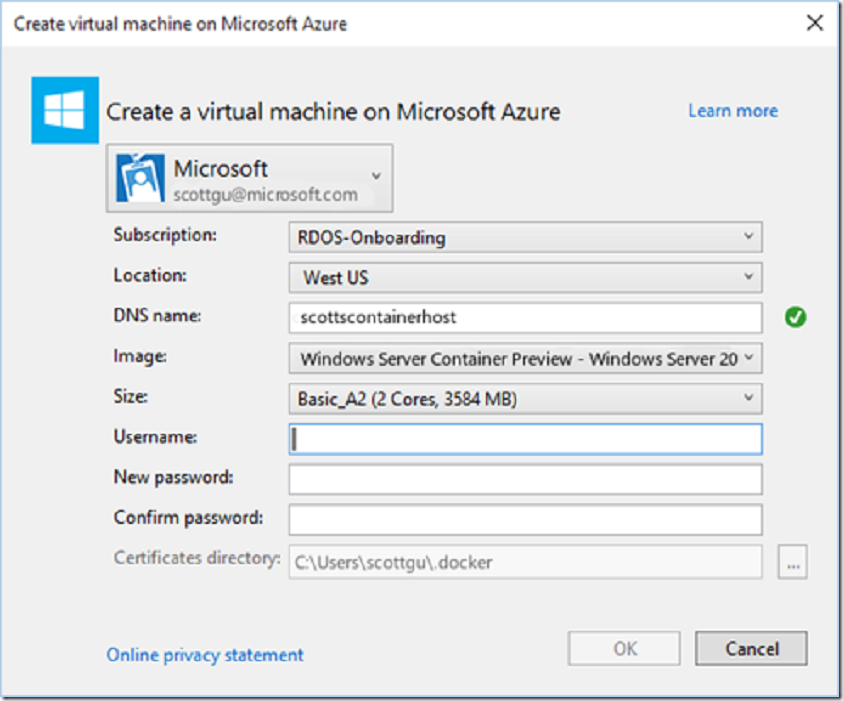

Or use the dialog to create a new Virtual Machine running either Windows Server or Linux with containers enabled. The below screen-shot shows how easy it is to create a new VM hosted on Azure that runs today’s Windows Server 2016 TP3 preview that supports Containers – you can do all of this (and deploy your apps to it) easily without ever having to leave the Visual Studio IDE:

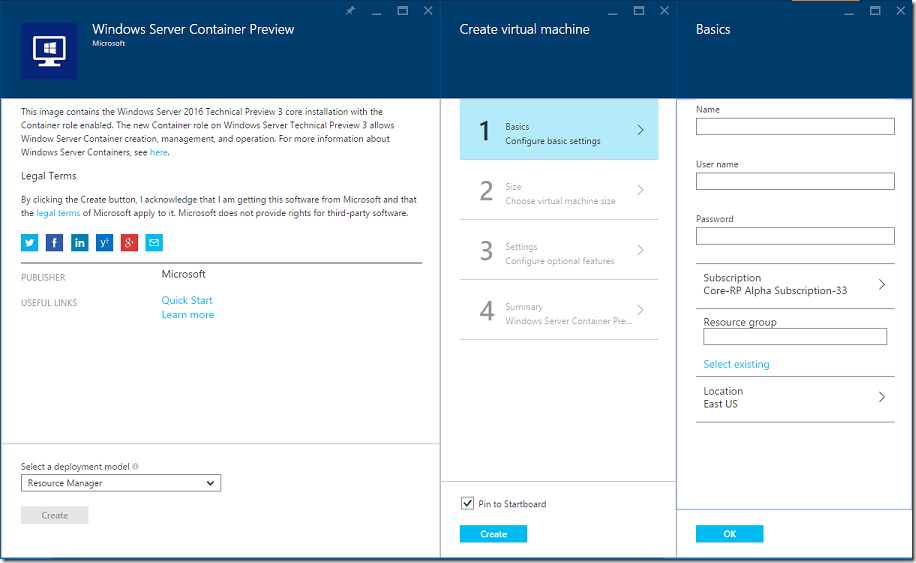

Getting Started Using Azure

In June of last year, at the first DockerCon, we enabled a streamlined Azure experience for creating and managing Docker hosts in the cloud. Up until now these hosts have only run on Linux. With the new preview of Windows Server 2016 supporting Windows Server Containers, we have enabled a parallel experience for Windows users.

Directly from the Azure Marketplace, users can now deploy a Windows Server 2016 virtual machine pre-configured with the container feature enabled and Docker Engine installed. Our quick start guide has all of the details including screen shots and a walkthrough video so take a look here https://msdn.microsoft.com/en-us/virtualization/windowscontainers/quick_start/azure_setup.

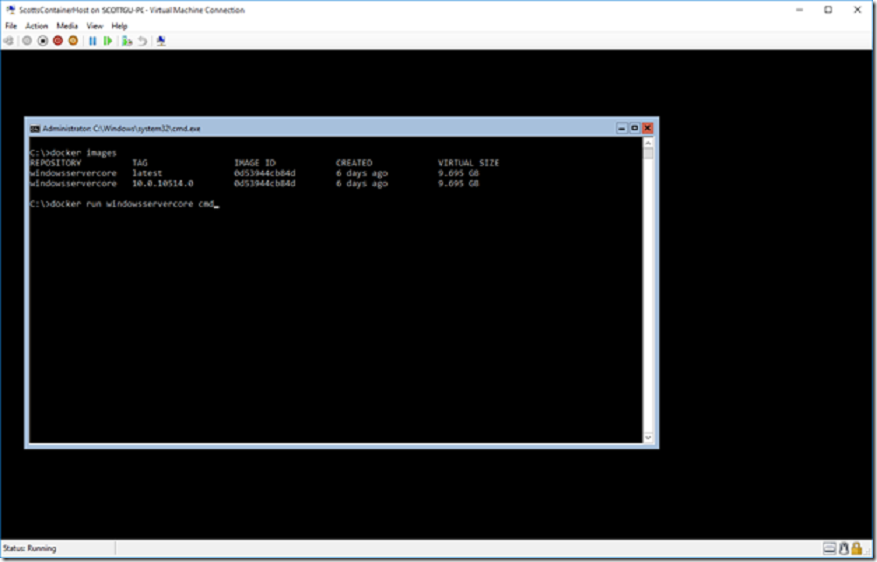

Once your container host is up and running, the quick start guide includes step by step guides for creating and managing containers using both Docker and PowerShell.

Getting Started Locally Using Hyper-V

Creating a virtual machine on your local machine using Hyper-V to act as your container host is now really easy. We’ve published some PowerShell scripts to GitHub that automate nearly the whole process so that you can get started experimenting with Windows Server Containers as quickly as possible. The quick start guide has all of the details at https://msdn.microsoft.com/en-us/virtualization/windowscontainers/quick_start/container_setup.

Once your container host is up and running the quick start guide includes step by step guides for creating and managing containers using both Docker and PowerShell.

Additional Information and Resources

A great list of resources including links to past presentations on containers, blogs and samples can be found in the community section of our documentation. We have also setup a dedicated Windows containers forum where you can provide feedback, ask questions and report bugs. If you want to learn more about the technology behind containers I would highly recommend reading Mark Russinovich’s blog on “Containers: Docker, Windows and Trends” that was published earlier this week.

Summary

At the //Build conference earlier this year we talked about our plan to make containers a fundamental part of our application platform, and today’s releases are a set of significant steps in making this a reality. The decision we made to embrace Docker and the Docker ecosystem to enable this in both Azure and Windows Server has generated a lot of positive feedback and we are just getting started.

While there is still more work to be done, users in the Windows Server ecosystem can now begin experiencing the world of containers. I highly recommend you download the Visual Studio Tools for Docker, create a Windows Container host in Azure or locally, and try out our PowerShell and Docker support. Most importantly, we look forward to hearing feedback on your experience.

Hope this helps,

Scott

-

New Azure Billing APIs Available

Organizations moving to the cloud can achieve significant cost savings. But to achieve the maximum benefit you need to be able to accurately track your cloud spend in order to monitor and predict your costs. Enterprises need to be able to get detailed, granular consumption data and derive insights to effectively manage their cloud consumption.

I’m excited to announce the public preview release of two new Azure Billing APIs today: the Azure Usage API and Azure RateCard API which provide customers and partners programmatic access to their Azure consumption and pricing details:

Azure Usage API – A REST API that customers and partners can use to get their usage data for an Azure subscription. As part of this new Billing API we now correlate the usage/costs by the resource tags you can now set on your Azure resources (for example: you could assign a tag “Department abc” or “Project X” to a VM or Database in order to better track spend on a resource and charge it back to an internal group within your company). To get more details, please read the MSDN page on the Usage API. Enterprise Agreement (EA) customers can also use this API to get a more granular view into their consumption data, and to complement what they get from the EA Billing CSV.
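As a rough illustration of the chargeback scenario, the Python sketch below totals cost per tag value once usage data has been retrieved. Everything about the record shape is an assumption made for this example: the field names (`resource`, `tags`, `quantity`, `rate`) and the sample values are hypothetical and are not the actual Usage API or RateCard API response schema, so consult the MSDN documentation for the real format.

```python
from collections import defaultdict


def cost_by_tag(records, tag_name):
    """Total cost per value of a given resource tag.

    records is a list of dicts in a hypothetical, simplified shape
    (NOT the real Usage API schema): each has an optional "tags" dict,
    a "quantity" consumed and a "rate" per unit.
    Resources without the tag are grouped under "untagged".
    """
    totals = defaultdict(float)
    for record in records:
        tag_value = record.get("tags", {}).get(tag_name, "untagged")
        totals[tag_value] += record["quantity"] * record["rate"]
    return dict(totals)


# Invented sample data for illustration only.
sample_records = [
    {"resource": "vm-1", "tags": {"Department": "abc"}, "quantity": 10.0, "rate": 0.5},
    {"resource": "db-1", "tags": {"Department": "abc"}, "quantity": 4.0, "rate": 2.0},
    {"resource": "vm-2", "tags": {"Project": "X"}, "quantity": 3.0, "rate": 1.0},
]

print(cost_by_tag(sample_records, "Department"))
```

Grouping untagged resources into their own bucket, rather than dropping them, keeps the totals reconcilable with the overall bill and highlights resources that still need a tag.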