Li Chen's Blog

-

Sky LINQPad, a minimum viable clone of LINQPad in the cloud

A while ago, I blogged about a simple LINQPad query host. It is fairly easy to put a web front end on it. The only change I had to make was to set the ApplicationBase for the AppDomains that I create, because an ASP.NET application is laid out quite differently from an .exe app. A playground is now running at http://skylinq.azurewebsites.net/SkyLINQPad. One can upload existing .linq files designed in LINQPad or type queries directly into the page.

-

A simple LINQPad query host

I am a big fan of LINQPad. I use LINQPad routinely during my work to test small, incremental ideas. I used it so much that I bought myself a premium license.

I always wished I could run queries designed in LINQPad in my own program. Before v4.52.1 beta, there was only a command-line interface. In LINQPad v4.52.1 beta, there is finally a Util.Run method that allows me to run LINQPad queries in my own process. However, I felt that I did not have sufficient control over how the results are dumped. So I decided to write a simple host myself.

As in the example below, a .linq file starts with an XML metadata section, followed by a blank line and then the query or statements.

```xml
<Query Kind="Expression">
  <Reference>&lt;RuntimeDirectory&gt;\System.Web.dll</Reference>
  <Reference>&lt;ProgramFilesX86&gt;\Microsoft ASP.NET\ASP.NET MVC 4\Assemblies\System.Web.Mvc.dll</Reference>
  <Namespace>System.Web</Namespace>
  <Namespace>System.Web.Mvc</Namespace>
</Query>

HttpUtility.UrlEncode("\"'a,b;c.d'\"")
```
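To make that layout concrete, here is a minimal sketch of splitting a .linq file into its header and its query text with System.Xml.Linq. It assumes, as described above, that the header is a single well-formed XML element ending at the first blank line (so placeholders such as <RuntimeDirectory> appear escaped inside it). LinqFile and its members are hypothetical names, not part of LINQPad or of my host.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text;
using System.Xml.Linq;

// Hypothetical helper: splits a .linq file into its XML header and its query text.
// Assumes the header is one well-formed <Query> element that ends at the first blank line.
public sealed class LinqFile
{
    public string Kind { get; private set; }
    public List<string> References { get; } = new List<string>();
    public List<string> Namespaces { get; } = new List<string>();
    public string QueryText { get; private set; }

    public static LinqFile Parse(string text)
    {
        using (var reader = new StringReader(text))
        {
            // Collect header lines until the first blank line (the blank line itself is consumed).
            var header = new StringBuilder();
            string line;
            while ((line = reader.ReadLine()) != null && line.Trim().Length > 0)
                header.AppendLine(line);

            XElement query = XElement.Parse(header.ToString());
            var file = new LinqFile
            {
                Kind = (string)query.Attribute("Kind"),
                // Everything after the blank line is the query or statements.
                QueryText = reader.ReadToEnd().Trim()
            };
            // XElement.Value decodes entities, so &lt;RuntimeDirectory&gt; becomes <RuntimeDirectory>.
            file.References.AddRange(query.Elements("Reference").Select(e => e.Value));
            file.Namespaces.AddRange(query.Elements("Namespace").Select(e => e.Value));
            return file;
        }
    }
}
```

A host would then use Kind to decide how to wrap QueryText before compiling it.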

The article “http://www.linqpad.net/HowLINQPadWorks.aspx” on the LINQPad website gives good information on how to compile and execute queries. LINQPad uses CSharpCodeProvider (or VBCodeProvider) to compile queries. Although I was tempted to use Roslyn, as ScriptCS does, I decided to use CSharpCodeProvider to ensure compatibility with LINQPad.
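Before compilation, a query has to be turned into a complete C# source file. Here is a rough sketch of that step for an Expression-kind query. The UserQuery/Run template is a hypothetical illustration, not LINQPad's actual generated code, and the compiler call itself (for example CSharpCodeProvider.CompileAssemblyFromSource on the .NET Framework) is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical template: wraps an "Expression" query so that it becomes the return
// value of a method. The class and method names are illustrative only.
public static class QueryWrapper
{
    public static string WrapExpression(string expression, IEnumerable<string> namespaces)
    {
        // Default namespaces plus the <Namespace> entries from the .linq header, without duplicates.
        string usingBlock = string.Join(Environment.NewLine,
            new[] { "System", "System.Collections.Generic", "System.Linq" }
                .Concat(namespaces)
                .Distinct()
                .Select(ns => "using " + ns + ";"));

        return usingBlock + Environment.NewLine +
               "public class UserQuery" + Environment.NewLine +
               "{" + Environment.NewLine +
               "    public object Run() { return " + expression + "; }" + Environment.NewLine +
               "}" + Environment.NewLine;
    }
}
```

Statements- and Program-kind queries would need different templates, since their text is not a single expression.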

We only need 3 lines of code to use the LINQPad host:

```csharp
using LINQPadHost;
...
string file = @"C:\Users\lichen\Documents\LINQPad Queries\ServerUtility.linq";
Host host = new Host();
host.Run<JsonTextSerializer>(file);
```

As I mentioned at the beginning, I would like to control the dumping of the results. JsonTextSerializer is one of the three serializers that I supplied; the other two are IndentTextSerializer and XmlTextSerializer. Personally, I found JsonTextSerializer and IndentTextSerializer the most useful.

The source code could be found here.

Examples could be found here.

-

Why every .net developer should learn some PowerShell

It has been 8 years since PowerShell v1.0 shipped in 2006. I had not looked into PowerShell closely except for using it in the NuGet console. Recently, I was forced to take a much closer look at PowerShell because we use a product that exposes its only interface in PowerShell.

Then I realized that PowerShell is such a wonderful product that every .NET developer should learn some of it. Here are some reasons:

- PowerShell is a much better language than the DOS batch language. PowerShell is a real language with variables, conditions, loops and function calls.

- According to Douglas Finke in Windows PowerShell for Developers (O’Reilly), PowerShell is a “stop ship” requirement, meaning no Microsoft server product ships without a PowerShell interface.

- PowerShell now has a pretty good Integrated Scripting Environment (ISE) in which we can create, edit, run and debug PowerShell scripts. Microsoft has also released OneScript, a script browser and analyzer that can be run from the PowerShell ISE.

- We can call .NET and COM objects from PowerShell. That is an advantage over VBScript.

- PowerShell has a wonderful pipeline model with which we can filter, sort and convert results. If you love LINQ, you would love PowerShell.

- It is possible to call PowerShell scripts from .NET, even ones on a remote machine.
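To make the LINQ comparison concrete, here is a rough C# analogy of a typical pipeline. The PowerShell line in the comment uses standard cmdlets (Get-ChildItem, Where-Object, Sort-Object, Select-Object); FileEntry and PipelineAnalogy are hypothetical names, and FileEntry stands in for System.IO.FileInfo so the sketch runs without touching the file system.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for System.IO.FileInfo.
public sealed class FileEntry
{
    public FileEntry(string name, long length) { Name = name; Length = length; }
    public string Name { get; }
    public long Length { get; }
}

public static class PipelineAnalogy
{
    // PowerShell:
    //   Get-ChildItem | Where-Object { $_.Length -gt 1MB } |
    //       Sort-Object Length -Descending | Select-Object -First 5 -ExpandProperty Name
    public static List<string> LargestFiles(IEnumerable<FileEntry> files) =>
        files.Where(f => f.Length > 1024L * 1024)   // Where-Object { $_.Length -gt 1MB }
             .OrderByDescending(f => f.Length)     // Sort-Object Length -Descending
             .Take(5)                              // Select-Object -First 5
             .Select(f => f.Name)                  // -ExpandProperty Name
             .ToList();
}
```

Each pipeline stage maps to one LINQ operator, and in both cases objects (not text) flow from stage to stage.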

Recently, I had to call some PowerShell scripts on a remote server. There is a lot of piecemeal information on the internet, but not many good examples. So I put a few pointers here:

- When connecting to remote PowerShell, the URI is: http://SERVERNAME:5985/wsman.

- It is possible to run PowerShell under different credentials by passing an optional PSCredential.

- PowerShell remoting only runs in PowerShell 2.0 or later, so download the Windows PowerShell 2.0 SDK (http://www.microsoft.com/en-us/download/details.aspx?id=2560). When installed, it actually updates the 1.0 reference assemblies. On my machine, they are in: C:\Program Files (x86)\Reference Assemblies\Microsoft\WindowsPowerShell\v1.0

So the complete code looks like this:

```csharp
using System;
using System.Collections.ObjectModel;           // Collection<PSObject>
using System.Management.Automation;             // Windows PowerShell namespace
using System.Management.Automation.Runspaces;   // Windows PowerShell namespace
using System.Security;                          // For the secure password
using Microsoft.PowerShell;

// To connect under different credentials, build a PSCredential instead:
//SecureString password = new SecureString();
//foreach (char c in livePass.ToCharArray())
//{
//    password.AppendChar(c);
//}
//PSCredential psc = new PSCredential(username, password);
//WSManConnectionInfo rri = new WSManConnectionInfo(new Uri(uri), schema, psc);
WSManConnectionInfo rri = new WSManConnectionInfo(new Uri("http://SERVERNAME:5985/wsman"));
//rri.AuthenticationMechanism = AuthenticationMechanism.Kerberos;
//rri.ProxyAuthentication = AuthenticationMechanism.Negotiate;

Runspace remoteRunspace = RunspaceFactory.CreateRunspace(rri);
remoteRunspace.Open();
try
{
    using (PowerShell powershell = PowerShell.Create())
    {
        powershell.Runspace = remoteRunspace;
        // scriptText holds the script to run; AddScript accepts script text,
        // whereas AddCommand expects a single command name.
        powershell.AddScript(scriptText);
        Collection<PSObject> results = powershell.Invoke();

        foreach (PSObject obj in results)
        {
            foreach (PSPropertyInfo psPropertyInfo in obj.Properties)
            {
                Console.Write("name: " + psPropertyInfo.Name);
                Console.Write("\tvalue: " + psPropertyInfo.Value);
                Console.WriteLine("\tmemberType: " + psPropertyInfo.MemberType);
            }
        }
    }
}
finally
{
    // Close the remote runspace even if the script throws.
    remoteRunspace.Close();
}
```
-

Implement a simple priority queue

The .NET Framework does not have a built-in priority queue. There are several open source implementations. If you do not want to reference an entire library, it is fairly easy to implement one yourself. Many priority queue implementations use a heap. However, if the number of priority levels is small, it is actually very easy and efficient to implement a priority queue using an array of queues, and the .NET Framework already provides a Queue<T> class.

My implementation is in the code below. The enum QueuePriorityEnum defines the priority levels, and the PriorityQueue class picks up the number of levels automatically. The queue supports three operations: Enqueue, Dequeue and Count. Their behavior is modeled after the Queue class in the .NET Framework.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

namespace MyNamespace
{
    // Modify this enum to change the number of levels. It will be picked up automatically.
    // The values must be contiguous and start at 0 because they are used as array indices.
    enum QueuePriorityEnum
    {
        Low = 0,
        High = 1
    }

    class PriorityQueue<T>
    {
        // One FIFO queue per priority level; the index is (int)priority.
        Queue<T>[] _queues;

        public PriorityQueue()
        {
            int levels = Enum.GetValues(typeof(QueuePriorityEnum)).Length;
            _queues = new Queue<T>[levels];
            for (int i = 0; i < levels; i++)
            {
                _queues[i] = new Queue<T>();
            }
        }

        public void Enqueue(QueuePriorityEnum priority, T item)
        {
            _queues[(int)priority].Enqueue(item);
        }

        public int Count
        {
            get { return _queues.Sum(q => q.Count); }
        }

        public T Dequeue()
        {
            // Scan from the highest priority level down to the lowest.
            for (int i = _queues.Length - 1; i > -1; i--)
            {
                if (_queues[i].Count > 0)
                {
                    return _queues[i].Dequeue();
                }
            }
            throw new InvalidOperationException("The Queue is empty.");
        }
    }
}
```
-
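As a point of comparison, here is a hypothetical variant of the same array-of-queues idea. LevelQueue is an illustrative name, not part of the code above: it takes the number of levels as a constructor argument instead of reading it from an enum, and keeps a running count so that Count is O(1) instead of summing every queue. Dequeue still scans from the highest level down, so it costs O(levels), which is cheap when the number of levels is small.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical variant: levels passed to the constructor, O(1) Count via a running counter.
public sealed class LevelQueue<T>
{
    private readonly Queue<T>[] _queues;
    private int _count;

    public LevelQueue(int levels)
    {
        if (levels <= 0) throw new ArgumentOutOfRangeException(nameof(levels));
        _queues = new Queue<T>[levels];
        for (int i = 0; i < levels; i++) _queues[i] = new Queue<T>();
    }

    public int Count => _count;

    // Higher numbers mean higher priority; an invalid level throws IndexOutOfRangeException.
    public void Enqueue(int priority, T item)
    {
        _queues[priority].Enqueue(item);
        _count++;
    }

    // Returns the oldest item of the highest non-empty level (FIFO within a level).
    public T Dequeue()
    {
        for (int i = _queues.Length - 1; i >= 0; i--)
        {
            if (_queues[i].Count > 0)
            {
                _count--;
                return _queues[i].Dequeue();
            }
        }
        throw new InvalidOperationException("The queue is empty.");
    }
}
```

Enqueuing "a" (level 0), "b" (level 1), "c" (level 0) and "d" (level 1) dequeues in the order b, d, a, c: higher levels first, first-in first-out within a level.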

Integrating external systems with TFS

Recently, we needed to integrate external systems with TFS. TFS is a feature-rich system with a large API. The TFS samples on MSDN only scratch the surface. Fortunately, a couple of good blog posts got me going in the right direction:

- Team Foundation Version Control client API example for TFS 2010 and newer

- Team Foundation Server API: Programmatically Downloading Files From Source Control

The key is to use the VersionControlServer class. We need to reference the following assemblies:

Microsoft.TeamFoundation.Client.dll

Microsoft.TeamFoundation.Common.dll

Microsoft.TeamFoundation.VersionControl.Client.dll

Microsoft.TeamFoundation.VersionControl.Common.dll

The code would be something like:

```csharp
using Microsoft.TeamFoundation.Client;
using Microsoft.TeamFoundation.Framework.Common;
using Microsoft.TeamFoundation.Framework.Client;
using Microsoft.TeamFoundation.VersionControl.Client;
...
TfsTeamProjectCollection pc = TfsTeamProjectCollectionFactory.GetTeamProjectCollection(tfsUri);
VersionControlServer vcs = pc.GetService<VersionControlServer>();
```

Then, to check whether a file exists, we can use:

```csharp
vcs.ServerItemExists(myPath, ItemType.File)
```

Or check if a directory exists:

```csharp
vcs.ServerItemExists(myPath, ItemType.Folder)
```

To get a list of directories or files, we can use the GetItems method. TFS is far more complicated than a file system: we can get a file, get the history of a file, get a changeset, etc. Therefore, the GetItems method has many overloads. To get a list of files, we can use:

```csharp
var fileSet = vcs.GetItems(myPath, VersionSpec.Latest, RecursionType.OneLevel, DeletedState.NonDeleted, ItemType.File);
foreach (Item f in fileSet.Items)
{
    Console.WriteLine(f.ServerItem);
}
```

Or get a list of directories:

```csharp
var dirSet = vcs.GetItems(myPath, VersionSpec.Latest, RecursionType.OneLevel, DeletedState.NonDeleted, ItemType.Folder);
foreach (Item d in dirSet.Items)
{
    Console.WriteLine(d.ServerItem);
}
```
-

Converted ASP Classic Compiler project from Mercurial to Git

Like some other open source project developers, I picked Mercurial as my version control system. Unfortunately, Git is winning in the Visual Studio ecosystem. Fortunately, it is possible to contact the CodePlex admins for a manual conversion from Mercurial to Git. I have done exactly that for my open source ASP Classic Compiler project. Now I can add new examples in response to forum questions and check them in using Visual Studio 2013. Now I am all happy.

-

Video review: RESTful Services with ASP.NET Web API from PACKT Publishing

Disclaimer: I was provided this video for free by PACKT Publishing. However, that does not affect my opinion about this video.

At the request of PACKT Publishing, I agreed to watch and review the video “RESTful Services with ASP.NET Web API” by Fanie Reynders. Before this review, I already had an e-book called “Designing Evolvable Web APIs with ASP.NET - Harnessing the power of the web” by Glenn Block, Pablo Cibraro, Pedro Felix, Howard Dierking and Darrel Miller from O’Reilly. I also have access to several videos on Pluralsight.com on the same subject, so I will put my review in perspective with those other materials.

The video from Packt is 2 hours 4 minutes long. It gives a nice overview of ASP.NET Web API. The video is available to watch online or to download for offline viewing. It has 8 chapters and covers ASP.NET Web API clearly and accurately; it is surprisingly complete for its short length.

Compared with the other resources, I would recommend this video if you have never worked with ASP.NET Web API before and want to get a complete overview in the shortest time possible.

If you love videos, Pluralsight offers the equally good and slightly longer (3h 15m) “Introduction to the ASP.NET Web API” by Jon Flanders. You would need a subscription to access the exercise materials, though. If you do subscribe, Pluralsight also has a couple of intermediate-level videos by Shawn Wildermuth.

Lastly, if you want an in-depth book that you can use for an extended period of time, it is hard to beat the O’Reilly book by Glenn Block et al.

-

LINQ query optimization by query rewriting using a custom IQueryable LINQ provider

In my SkyLinq open source project, I have so far tried to make LINQ better by creating several extension methods (for example, see GroupBy and TopK) that are more memory efficient than the standard methods in some scenarios. However, I do not necessarily want people to bind to these extension methods directly, for the following reasons:

- Good ideas evolve. New methods may come and existing methods may change.

- There is a parallel set of methods for IEnumerable<T>, IQueryable<T> and, to some degree, IObservable<T>. Using custom LINQ extensions breaks this parallelism.

A better approach would be to write the LINQ queries normally using the standard library methods and then optimize the queries using a custom query provider. Query rewriting is not a new idea; SQL Server has been doing it for years. I would like to bring this idea into LINQ.

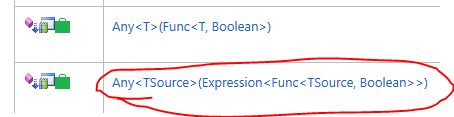

If we examine the IQueryable<T> interface, we will find that there is a parallel set of LINQ methods (the Queryable extension methods) that accept Expression<Func<...>> instead of Func<...>.

There is a little C# compiler trick here. If the C# compiler sees a method that accepts Expression<Func<...>>, it creates an expression tree for the lambda instead of a compiled delegate. At run time, these expressions are passed to an IQueryable implementation and then to the underlying IQueryProvider implementation. The query provider is responsible for executing the expression tree and returning the results. This is how the magic of LINQ to SQL and Entity Framework works.
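The compiler trick can be seen directly in a few lines. In this small sketch (LambdaForms is a hypothetical name), the same lambda text becomes either a delegate or an expression tree depending on its target type, and a query built with Queryable.Where carries its predicate as a quoted tree inside the query's Expression.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

public static class LambdaForms
{
    // Target type Func<...>: the compiler emits an ordinary compiled delegate.
    public static Func<int, bool> AsDelegate() => x => x > 10;

    // Target type Expression<Func<...>>: the compiler emits code that builds
    // a data structure (an expression tree) describing the lambda.
    public static Expression<Func<int, bool>> AsTree() => x => x > 10;

    // Queryable.Where accepts Expression<Func<...>>, so the predicate ends up inside the
    // query's expression tree (wrapped in a Quote node) instead of being called directly.
    public static MethodCallExpression QueryableWhere(int[] data) =>
        (MethodCallExpression)data.AsQueryable().Where(x => x > 10).Expression;
}
```

A provider can inspect AsTree().Body (a GreaterThan node) and decide how to execute it, or simply call Compile() to get a delegate back.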

The .NET Framework already has a class called EnumerableQuery<T>. It is a query provider that turns IQueryable queries into LINQ to Objects queries. In this work, I am going one step further by creating an optimizing query provider.

A common perception is that writing a custom LINQ provider requires a lot of code. The reason is that even the most trivial provider needs to visit every possible expression type in the System.Linq.Expressions namespace. (Note that we only need to handle the expressions that existed in .NET Framework 3.5; we do not need to handle the new expressions added as part of the Dynamic Language Runtime in .NET 4.x.) There are reusable frameworks, such as the IQToolkit project, that make it easier to create a custom LINQ provider.

In contrast, creating an optimizing query provider is fairly easy. The ExpressionVisitor class already provides the framework that I need. I only needed to create a subclass of ExpressionVisitor called SkyLinqRewriter and override a few methods. The query rewriter basically replaces all calls to the Queryable class with equivalent calls to the Enumerable class, and rewrites queries for optimization when an opportunity presents itself.
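Here is a minimal sketch of the Queryable-to-Enumerable part of such a rewriter. It is not the SkyLinqRewriter source: the class name is hypothetical, it performs no optimizations (a real rewriter would additionally pattern-match shapes such as OrderBy(...).Take(k)), and it assumes every Queryable method in the tree has an Enumerable overload with the same name and generic arguments.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;
using System.Reflection;

// Hypothetical sketch: replaces calls to System.Linq.Queryable with the matching
// System.Linq.Enumerable overloads so the tree can be compiled as LINQ to Objects.
public sealed class QueryableToEnumerableRewriter : ExpressionVisitor
{
    protected override Expression VisitMethodCall(MethodCallExpression node)
    {
        if (node.Method.DeclaringType != typeof(Queryable))
            return base.VisitMethodCall(node);

        // Rewrite the arguments first. Lambdas arrive wrapped in Quote nodes; removing the
        // quote makes them compile to delegates instead of expression trees.
        Expression[] args = node.Arguments.Select(a => StripQuotes(Visit(a))).ToArray();
        Type[] typeArgs = node.Method.IsGenericMethod ? node.Method.GetGenericArguments() : Type.EmptyTypes;

        MethodInfo target = FindEnumerableOverload(node.Method.Name, typeArgs, args)
            ?? throw new NotSupportedException("No Enumerable counterpart for Queryable." + node.Method.Name);
        return Expression.Call(target, args);
    }

    private static Expression StripQuotes(Expression e)
    {
        while (e.NodeType == ExpressionType.Quote)
            e = ((UnaryExpression)e).Operand;
        return e;
    }

    // Picks the Enumerable method with the same name, generic arity and parameter count
    // whose closed parameter types accept the rewritten arguments.
    private static MethodInfo FindEnumerableOverload(string name, Type[] typeArgs, Expression[] args)
    {
        foreach (MethodInfo m in typeof(Enumerable).GetMethods(BindingFlags.Public | BindingFlags.Static))
        {
            if (m.Name != name || m.GetParameters().Length != args.Length) continue;
            if (m.IsGenericMethodDefinition != (typeArgs.Length > 0)) continue;
            if (m.IsGenericMethodDefinition && m.GetGenericArguments().Length != typeArgs.Length) continue;

            MethodInfo closed;
            try { closed = m.IsGenericMethodDefinition ? m.MakeGenericMethod(typeArgs) : m; }
            catch (ArgumentException) { continue; } // generic constraint not satisfied

            ParameterInfo[] ps = closed.GetParameters();
            bool ok = true;
            for (int i = 0; i < ps.Length && ok; i++)
                ok = ps[i].ParameterType.IsAssignableFrom(args[i].Type);
            if (ok) return closed;
        }
        return null;
    }
}
```

The rewritten tree can be compiled and run directly. Note that the root node's type changes from IQueryable<T> to IEnumerable<T>, which is why a real provider would wrap the result back into an IQueryable<T>.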

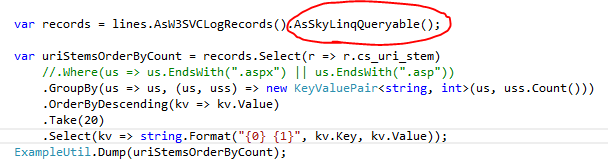

It is fairly easy to consume the optimizing query provider. All we need to do is call AsSkyLinqQueryable() to convert an IEnumerable<T> into an IQueryable<T>; the remaining code can stay intact.

An end-to-end example can be found at https://skylinq.codeplex.com/SourceControl/latest#SkyLinq.Example/LinqToW3SVCLogExample.cs.

To conclude this post, I would recommend that we always code against IQueryable<T> instead of IEnumerable<T>. This way, the code has the flexibility to be optimized by an optimizing query provider, or to be converted from a pull-based query to a push-based query using Reactive Extensions, without rewriting any code.

-

Expression tree visualizer for Visual Studio 2013

Expression Tree Visualizer, as the name indicates, is a Visual Studio debugger visualizer for expression trees. It is a must if you work with expressions frequently. It started as a Visual Studio 2008 sample, and there is a Visual Studio 2010 port available on CodePlex, but none is available for later versions of Visual Studio. Fortunately, porting it to another version of Visual Studio is fairly simple:

- Download the original source code from http://exprtreevisualizer.codeplex.com/.

- Replace the existing reference to the Microsoft.VisualStudio.DebuggerVisualizers assembly with the version from the Visual Studio you want to work with. For Visual Studio 2013, I found it in C:\Program Files (x86)\Microsoft Visual Studio 12.0\Common7\IDE\ReferenceAssemblies\v2.0\Microsoft.VisualStudio.DebuggerVisualizers.dll on my computer.

- Compile the ExpressionTreeVisualizer project and copy ExpressionTreeVisualizer.dll to the visualizer directory. To make it usable by one user, just copy it to My Documents\VisualStudioVersion\Visualizers. To make it usable by all users of a machine, copy it to VisualStudioInstallPath\Common7\Packages\Debugger\Visualizers.

If you do not want to go through the process, I have one readily available at http://weblogs.asp.net/blogs/lichen/ExpressionTreeVisualizer.Vs2013.zip. Just do not sue me if it does not work as expected.

-

An efficient Top K algorithm with implementation in C# for LINQ

The LINQ library currently does not have a dedicated top K implementation. Programs usually use the OrderBy function followed by the Take function. For N items, an efficient sort algorithm scales O(N) in space and O(N log N) in time. Since we only need the top K items, I believe we can devise an algorithm that scales O(K) in space.

Among all the sorting algorithms that I am familiar with, heapsort came to mind first. A heap is a tree-like data structure that can often be implemented using an array. A heap can be either a max-heap or a min-heap. In a min-heap, the minimum element is at the root of the tree, and every child element is greater than or equal to its parent.

A binary heap is a special case of a heap. A binary min-heap has the following characteristics:

- find-min takes O(1) time.

- delete-min takes O(log n) time.

- insert takes O(log n) time.

So here is how I implement my top K algorithm:

- Create a heap with an array of size K.

- Insert items into the heap until it reaches its capacity. This takes O(K log K) time.

- For each of the remaining elements, if the element is greater than find-min of the heap, do a delete-min and then insert the element into the heap.

- Then repeatedly delete-min until the heap is empty, and arrange the deleted elements in reverse order to get the top K list.
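The four steps can be sketched in C# as follows. This is not the SkyLinq LinqExt.cs/BinaryHeap.cs code: TopKSketch is a hypothetical name, the heap is a small hand-rolled array-based binary min-heap, and step 3's delete-min plus insert is merged into a single "overwrite the root and sift down", which has the same effect.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TopKSketch
{
    // Returns the k largest items in descending order using O(k) extra space.
    public static List<T> TopK<T>(IEnumerable<T> source, int k, IComparer<T> comparer = null)
    {
        if (source == null) throw new ArgumentNullException(nameof(source));
        if (k < 0) throw new ArgumentOutOfRangeException(nameof(k));
        if (k == 0) return new List<T>();
        comparer = comparer ?? Comparer<T>.Default;

        var heap = new T[k];   // step 1: a min-heap backed by an array of size K
        int count = 0;
        foreach (T item in source)
        {
            if (count < k)
            {
                // step 2: fill the heap; each insert sifts up in O(log K)
                heap[count] = item;
                SiftUp(heap, count, comparer);
                count++;
            }
            else if (comparer.Compare(item, heap[0]) > 0)
            {
                // step 3: the item beats find-min, so it replaces the root (delete-min + insert)
                heap[0] = item;
                SiftDown(heap, 0, count, comparer);
            }
        }

        // step 4: repeated delete-min yields ascending order, so fill the result from the back
        var result = new T[count];
        for (int size = count; size > 0; size--)
        {
            result[size - 1] = heap[0];
            heap[0] = heap[size - 1];              // move the last element to the root
            SiftDown(heap, 0, size - 1, comparer);
        }
        return new List<T>(result);
    }

    private static void SiftUp<T>(T[] heap, int i, IComparer<T> cmp)
    {
        while (i > 0)
        {
            int parent = (i - 1) / 2;
            if (cmp.Compare(heap[i], heap[parent]) >= 0) break;
            (heap[i], heap[parent]) = (heap[parent], heap[i]);
            i = parent;
        }
    }

    private static void SiftDown<T>(T[] heap, int i, int size, IComparer<T> cmp)
    {
        while (true)
        {
            int left = 2 * i + 1, right = left + 1, smallest = i;
            if (left < size && cmp.Compare(heap[left], heap[smallest]) < 0) smallest = left;
            if (right < size && cmp.Compare(heap[right], heap[smallest]) < 0) smallest = right;
            if (smallest == i) return;
            (heap[i], heap[smallest]) = (heap[smallest], heap[i]);
            i = smallest;
        }
    }
}
```

For example, TopKSketch.TopK(new[] { 5, 1, 9, 3, 7, 2, 8 }, 3) returns 9, 8, 7, the same as OrderByDescending followed by Take(3), while never holding more than three items in the heap.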

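For randomly ordered input, the time it takes to find the top K items in an N-item list is roughly:

O(N) * t1 + O(K * (1 + log N - log K) * log K) * t2

Here t1 is the time to compare an element with find-min in step 3, and t2 is the per-level cost of a delete-min followed by an insert, each of which walks O(log K) levels of the heap. The second term holds because the i-th element only enters the heap if it is among the K largest of the first i elements, which happens with probability about K/i, so only about K(1 + ln(N/K)) elements cause a replacement. In the worst case (input in ascending order), every element causes a replacement and the bound becomes O(N log K), which is still better than the O(N log N) of a full sort. So this algorithm is much more efficient than a call to OrderBy followed by a call to Take.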

I have checked in my implementation to my Sky LINQ project. The LINQ Top and Bottom methods are in LinqExt.cs. The internal binary heap implementation is in BinaryHeap.cs. The LINQ example can be found in HeapSort.cs. The Sky Log example has also been updated to use the efficient top K algorithm.

Note that although this algorithm replaces OrderBy followed by Take, a preceding GroupBy itself still uses O(N) space, where N is the number of groups. There are probabilistic approximation algorithms that can be used to further reduce the memory footprint. That is something I could try in the future.