Li Chen's Blog

-

My first impression on Tx (LINQ to Logs and Traces)

Yesterday, I learned about Tx (LINQ to Logs and Traces) from This Week On Channel 9. The name suggests that it overlaps with my end-to-end example on LINQ to W3SVC log files. It turns out that the overlap is minimal.

Tx is a Microsoft Open Technologies project. The source code commit history suggests that it was originally part of Reactive Extensions (Rx), developed by the Rx team, and became an independent project a year ago. Tx uses Rx heavily.

The Tx project has a page on “When to use Tx?” and I will quote a portion of it below.

When NOT to use Tx

Use only LINQ-to-Objects when:

- There are no real-time feeds involved

- The data is already in memory (e.g. List <T>), or you are dealing with single file that is easy to parse - e.g. a text file, each line representing event of the same type.

Use only Reactive Extensions (Rx) when:

- There are real-time feeds (e.g. mouse moves)

- You need the same query to work on past history (e.g. file(s)) and real-time

- Each feed/file contains a single type of events, like T for IObservable<T>

When to use Tx

Tx.Core adds the following new features to Rx:

- Support for Multiplexed sequences (single sequence containing events of different types in order of occurrence). The simplest example of turning multiplexed sequence into type-specific Observable-s is the Demultiplexor

- Merging multiple input files in order of occurrence - e.g. two log files

- Heterogeneous Inputs - e.g. queries across some log files (.evtx) and some traces (.etl)

- Single-pass-read to answer multiple queries on file(s)

- Scale in # of queries. This is side effect of the above, which applies to both real-time and single-read of past history

- Providing virtual time as per event timestamps. See TimeSource

- Support for both "Structured" (like database, or Rx) and "Timeline" mode (like most event-viewing tools)

The Tx W3CEnumerable.cs does overlap with my implementation of the log parser. I wish I had noticed the project earlier. In the future, I will try to align my project with Tx to make it easier to use them together. I just finished an implementation of duck typing, so interoperating between different types that express the same idea should be easy.

-

A C# implementation of duck typing

Eric Lippert’s blogs (and his old blogs) have been my source for the inner workings of VBScript and C# over the past decade. Recently, his post on “What is ‘duck typing’?” has drawn a lot of discussion and prompted additional posts from Phil Haack and Glenn Block. The discussion injected new ideas into my thoughts on programming by composition. I previously argued that interfaces are too big a unit for composition because they often live in different namespaces and only a few well-known interfaces are widely supported. I was in favor of lambdas (especially those that consume or return IEnumerable<T>) as the unit of composition. However, as I worked through my first end-to-end example, I found that lambdas are often too small (I will blog about this in detail later).

Duck typing may solve the interface incompatibility problem in many situations. As described in Wikipedia, the name comes from the following phrase:

“When I see a bird that walks like a duck and swims like a duck and quacks like a duck, I call that bird a duck.”

If I were to translate it into software engineering terms, I would say:

The client expects certain behavior. If the server class has the behavior, then the client can call the server object even though the client does not explicitly know the type of the server objects.

That leads to the following implementation in a strongly-typed language like C#, consisting of:

- An interface defined by me to express the behaviors I expect of a duck, IMyDuck.

- An object from others that I want to consume. Let’s call its type OtherDuck.

- A function that checks if OtherDuck has all the members of IMyDuck.

- A function (a proxy factory) that generates a proxy that implements IMyDuck and wraps OtherDuck.

- In my software, I only bind my code to IMyDuck. The proxy factory is responsible for bridging IMyDuck and OtherDuck.

This implementation takes the expected behaviors as a whole. Except for the one-time code generation for the proxy, everything else is strongly typed at compile time. It differs from “dynamic” in C#, which implements late binding at the call site.
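The pieces listed above can be sketched with the runtime's built-in System.Reflection.DispatchProxy standing in for a code-generating proxy factory. This is not the DuckTypingProxyFactory from SkyLinq: it forwards each call through reflection instead of emitting a proxy class, and it assumes a runtime where DispatchProxy is available (.NET Core or later). The names DuckProxy, CanCast and Cast, and the members of IMyDuck and OtherDuck, are made up for illustration.

```csharp
using System;
using System.Linq;
using System.Reflection;

// The behavior my code expects (hypothetical members, for illustration only).
public interface IMyDuck
{
    string Quack();
    int Legs { get; }
}

// Someone else's type: same members, but it does not implement IMyDuck.
public class OtherDuck
{
    public string Quack() => "Quack!";
    public int Legs => 2;
}

// Hypothetical proxy factory built on DispatchProxy (reflection-based, not code generation).
public class DuckProxy : DispatchProxy
{
    private object _target;

    // Finds a public method on the target with the same name, parameter types
    // and a compatible return type. Property accessors are matched as get_X/set_X.
    private static MethodInfo FindMatch(Type targetType, MethodInfo wanted)
    {
        var parameterTypes = wanted.GetParameters().Select(p => p.ParameterType).ToArray();
        var found = targetType.GetMethod(wanted.Name, parameterTypes);
        return found != null && wanted.ReturnType.IsAssignableFrom(found.ReturnType) ? found : null;
    }

    // The "does it walk and quack like a duck" check.
    public static bool CanCast<TInterface>(object target) =>
        typeof(TInterface).GetMethods().All(m => FindMatch(target.GetType(), m) != null);

    // The proxy factory: returns an object that implements TInterface and wraps the target.
    public static TInterface Cast<TInterface>(object target) where TInterface : class
    {
        if (!CanCast<TInterface>(target))
            throw new InvalidCastException(
                target.GetType().Name + " does not have all members of " + typeof(TInterface).Name);

        TInterface proxy = Create<TInterface, DuckProxy>();
        ((DuckProxy)(object)proxy)._target = target;
        return proxy;
    }

    // Every call made through the interface lands here and is forwarded to the target.
    protected override object Invoke(MethodInfo targetMethod, object[] args) =>
        FindMatch(_target.GetType(), targetMethod).Invoke(_target, args);
}
```

With this in place, client code binds only to the interface, for example `IMyDuck duck = DuckProxy.Cast<IMyDuck>(new OtherDuck());`. A generated proxy avoids the per-call reflection cost that this sketch pays.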

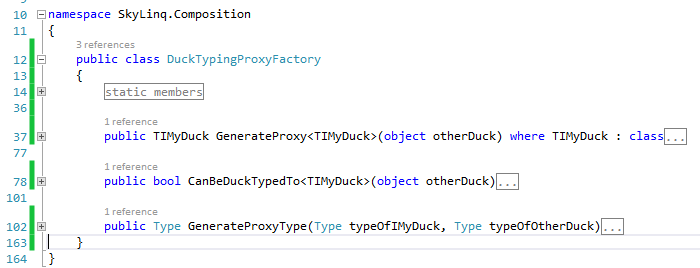

I have checked my implementation into my Sky LINQ project under the C# project SkyLinq.Composition. The name of the class is DuckTypingProxyFactory. The methods look like:

I have also provided an example called DuckTypingSample.cs in the SkyLinq.Sample project.

So when would duck-typing be useful? Here are a few:

- When consuming libraries from companies that implement the same standard.

- When different teams in a large enterprise each implement the company’s entities in their own namespace.

- When a company refactors software (see the evolution of ASP.NET for example).

- When calling multiple web services.

Note that:

- I noticed the impromptu-interface project, which does the same thing, after I completed my project. Also, someone mentioned TypeMock and Castle in the comments on Eric’s blog. So I am not claiming to be the first person with the idea. I am still glad to have my own code generator because I am going to use it to extend the idea of strongly-typed wrappers as compared to data-transfer objects.

- Also, nearly all Aspect-Oriented Programming (AOP) and many Object-Relational Mapping (ORM) frameworks have a similar proxy generator. In general, those in AOP frameworks are more complete because ORM frameworks are primarily concerned with properties. I did look at the Unity implementation when I implemented mine (thanks to the Patterns & Practices team!). My implementation is very bare-metal and thus easy to understand.

-

SkyLog: My first end-to-end example on programming by composition

I have completed my first end-to-end example as I explore the idea of programming by composition. SkyLog is an example of querying IIS log files the way LogParser does, except:

- It is built on LINQ and thus can use the entire .NET Framework, not just the built-in functions of LogParser.

- It can use Parallel LINQ and thus uses multiple cores effectively.

- Any part of a query can be refactored into a function and thus reused.

- We only need to write the queries once, and they can be run against one log file, a whole directory of log files, or several servers, in any storage, such as Azure Blob Storage.

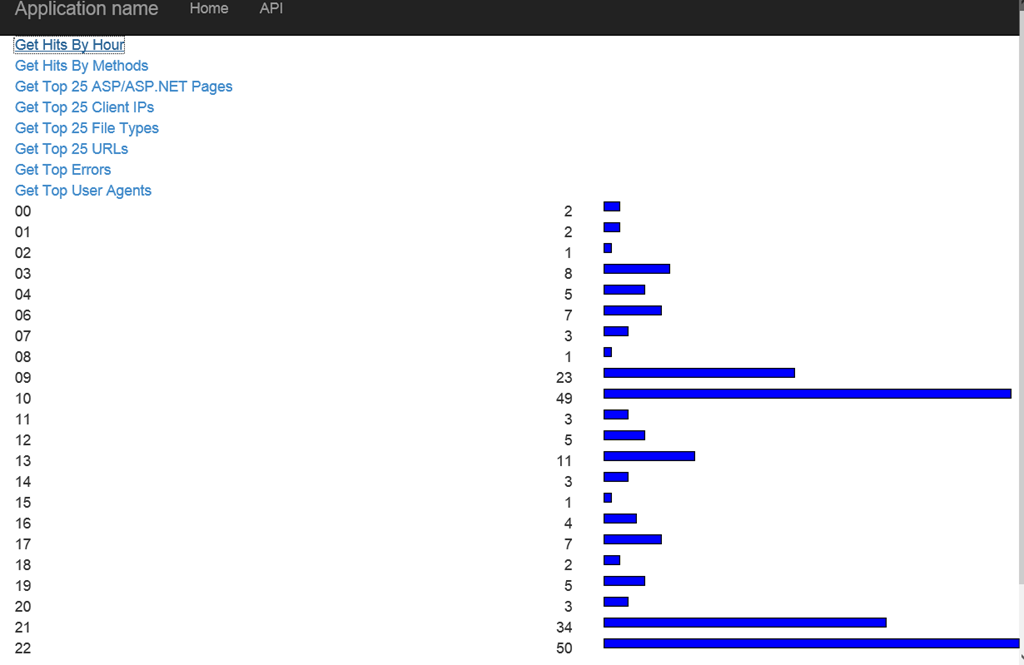

The running example is available at http://skylinq.azurewebsites.net/skylog. The example runs the queries against some log files stored in Azure Blob Storage.

The queries in the example are from http://blogs.msdn.com/b/carlosag/archive/2010/03/25/analyze-your-iis-log-files-favorite-log-parser-queries.aspx.

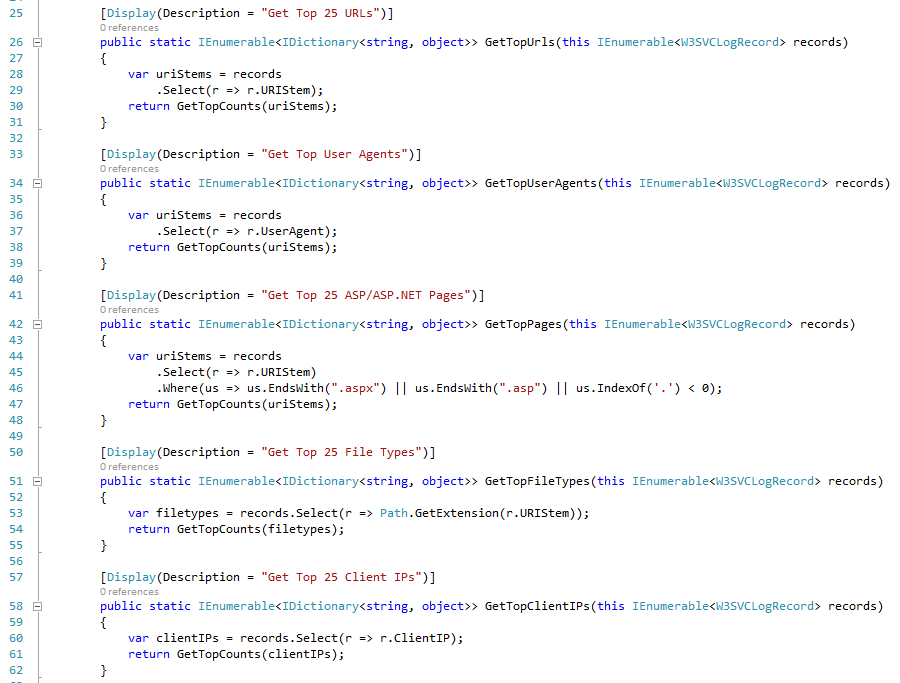

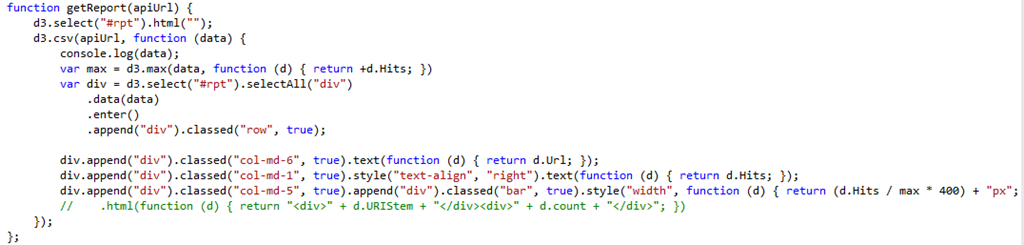

The queries look even simpler than what you normally write against LogParser or SQL Server:

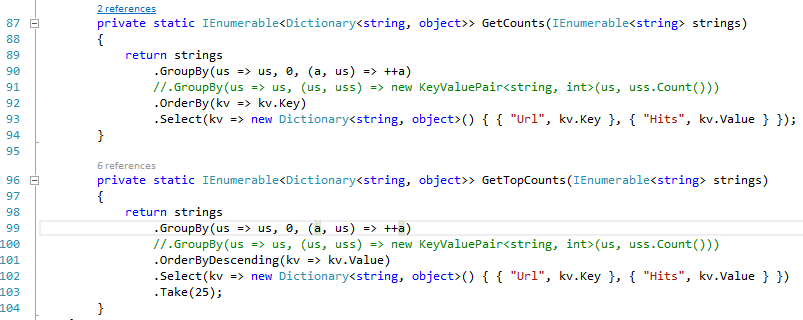

That is because parts of the queries were refactored into the two reusable functions below. We cannot reuse part of a query with LogParser. With SQL Server, we can create reusable units with views. With LINQ, we can reuse any part of a LINQ query.

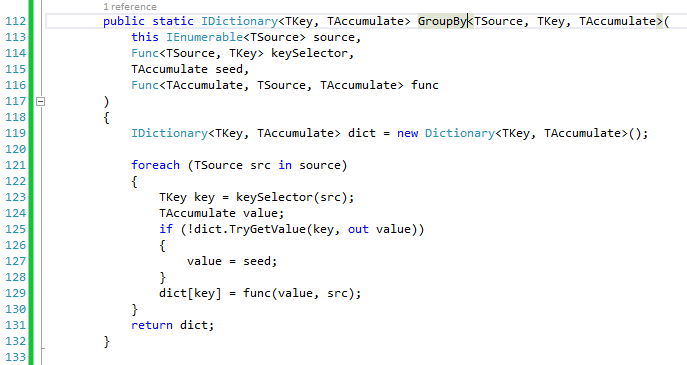

Note that I used the memory-efficient version of GroupBy that I wrote previously. The equivalent code using the GroupBy in LINQ is commented out.

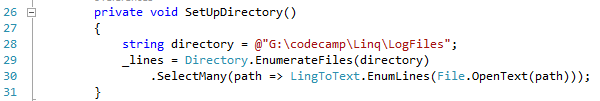

To run these reports, we just need records from the log files as IEnumerable<string>. To get them from a directory, the code would look like:

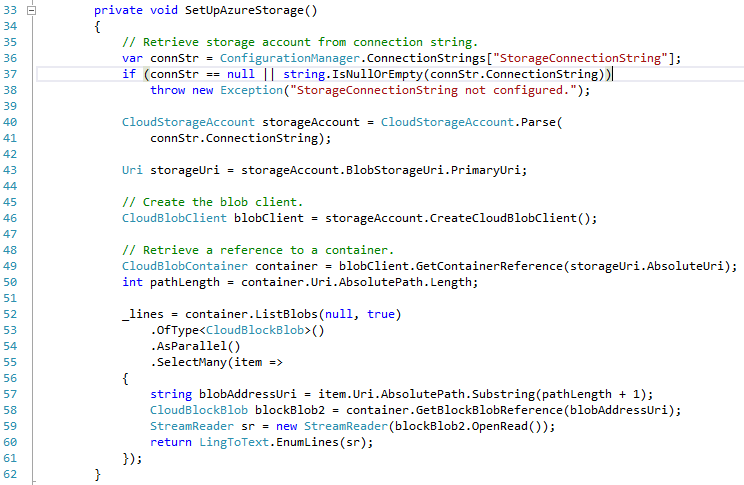
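As a rough sketch of the idea, reading one file or a whole directory can both be exposed as the same IEnumerable<string>, so the reports do not care where the lines come from. The class name LogSource, its method names, and the "*.log" pattern are assumptions made for this example and are not taken from SkyLinq.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical helper: both methods return a lazily evaluated sequence of raw log lines.
public static class LogSource
{
    // File.ReadLines streams the file line by line instead of loading it all at once.
    public static IEnumerable<string> FromFile(string path) =>
        File.ReadLines(path);

    // Directory.EnumerateFiles is lazy as well, so files are opened one after another
    // only as the consumer iterates.
    public static IEnumerable<string> FromDirectory(string directory, string pattern = "*.log") =>
        Directory.EnumerateFiles(directory, pattern)
                 .SelectMany(path => File.ReadLines(path));
}
```

Because both methods return the same type, switching a report from one file to a directory only changes the line that creates the source.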

To get records from Windows Azure Blob Storage, the code would look like:

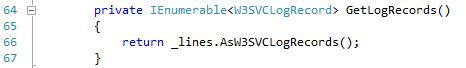

Lastly, we need to convert IEnumerable<string> to a strongly-typed wrapper, IEnumerable<W3SVCLogRecord>, to be consumed by the reports:

I touched upon the idea of a strongly-typed wrapper previously. The purpose of a strongly-typed wrapper is to enable IntelliSense while minimizing garbage collection. The wrapper provides access to the underlying data, but does not convert the data to a data-transfer object.

The front end is a simple ASP.NET MVC page. Reports are exposed through ASP.NET Web API using the CSV formatter that I built previously. The visualization was built using D3.js. The code is so simple that we could think of each line of the code as a JavaScript-based widget. If we desire a more sophisticated UI, we could compose the UI with a different widget. We could even introduce a client-side template engine such as Mustache.js.

Even with this simple example, there are enough benefits that I will probably never need to use LogParser again. The example is built entirely on LINQ to IEnumerable. I have not used any of the cool features of IQueryable and Rx yet. As usual, the latest code is at http://skylinq.codeplex.com. Stay tuned for more fun stuff.

-

Review the WPF ICommand interface

I have not worked with WPF for a while. Recently, I needed to make enhancements to a WPF project that I did 20 months ago. It is time to refresh my memory on several things unique to WPF. The first thing that came to my mind is WPF Commanding.

In the old days, when we created VB 1-6 or Windows Forms applications, we just double-clicked a button in the designer to create an event handler. With WPF, we can still do it the old way, but there is a new way: we bind the Command property of the control to a property of the view model that implements ICommand. So what is ICommand?

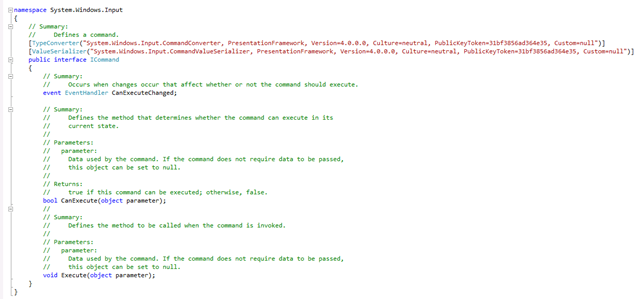

The ICommand interface has 3 members: Execute, CanExecute and CanExecuteChanged.

Why the complication? Because WPF Commanding allows enabling and disabling command sources such as buttons and menu items without resorting to spaghetti code. The basic workflow is simple:

- Whenever we change a state in the view model that might affect the CanExecute state of the command, we raise the command's CanExecuteChanged event (for example, by calling RaiseCanExecuteChanged() on Prism's DelegateCommand). CanExecuteChanged is an event, so it cannot be called directly like a method.

- This triggers the view to call the CanExecute method of the command object to determine whether the command source should be enabled.

- When the command source is enabled and clicked, the Execute method of the command object is called; this is where the event handler would be implemented.

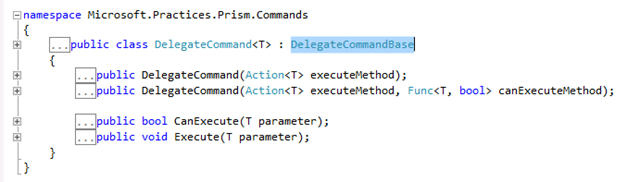

The Prism project has a nice implementation of ICommand called DelegateCommand. Its declaration is fairly simple:

All we need to do is instantiate it and give it an executeMethod callback and, optionally, a canExecuteMethod callback.

Notice that both the CanExecute and Execute functions accept one argument? We can use this mechanism to bind multiple controls to a single command on the view model, and use the CommandParameter of each control to differentiate the controls.
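To make the three members concrete, here is a minimal hand-rolled command in the spirit of a delegate-based command. It is a sketch for illustration, not Prism's DelegateCommand; the class name SimpleCommand and its RaiseCanExecuteChanged method are names chosen for this example.

```csharp
using System;
using System.Windows.Input;

// Hypothetical minimal ICommand implementation that delegates to two callbacks.
public sealed class SimpleCommand : ICommand
{
    private readonly Action<object> _execute;
    private readonly Func<object, bool> _canExecute;

    public SimpleCommand(Action<object> execute, Func<object, bool> canExecute = null)
    {
        _execute = execute ?? throw new ArgumentNullException(nameof(execute));
        _canExecute = canExecute; // optional: null means "always enabled"
    }

    // The view subscribes to this event and re-queries CanExecute when it fires.
    public event EventHandler CanExecuteChanged;

    // The parameter is whatever the control supplies through CommandParameter.
    public bool CanExecute(object parameter) =>
        _canExecute == null || _canExecute(parameter);

    public void Execute(object parameter) => _execute(parameter);

    // Called by the view model after changing state that affects CanExecute.
    public void RaiseCanExecuteChanged() =>
        CanExecuteChanged?.Invoke(this, EventArgs.Empty);
}
```

A view model could expose one SimpleCommand, bind several buttons to it, and switch on the parameter (for example "Save" versus "Delete") inside the execute callback.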

-

Custom Web API Message Formatter

ASP.NET Web API supports multiple message formats. The framework uses the “Accept” request header from the client to determine the message format. If you visit a Web API URL with Internet Explorer, you will get a JSON message by default; if you use Chrome, which sends “application/xml;” in the Accept header, you will get an XML message.

Message format is an extensibility point in Web API. There is a pretty good example on the ASP.NET web site. Recently, when I worked on my SkyLinq open source cloud analytics project, I needed a way to expose data to the client in a very lean manner. I wanted it to be leaner than both XML and JSON, so I used CSV. The example provided on the ASP.NET web site is actually a CSV example. The article was last updated on March 8, 2012. Since ASP.NET is advancing rapidly, I found that I needed to make the following modifications to make the example work correctly with Web API 2.0.

- CanReadType is now a public function instead of a protected function.

- The signature of WriteToStream has changed. The current signature is

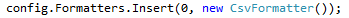

- When adding the media formatter to the configuration, I have to insert my custom formatter into the formatters collection as the first item. This is because the JSON formatter actually recognizes the “application/csv” header and sends JSON back instead. Example below:

-

Thoughts on programming by composition

Programming by composition is about putting together a large, complex program from small pieces. In the past, when we talked about composition in the object-oriented world, we declared interfaces. We then passed objects from classes that implement the interfaces to any place that accepts these interfaces. However, there are some limitations in this approach:

- Interfaces live in namespaces. Except for a few well-known interfaces, most interfaces live in their own private namespaces, so components have to be explicitly aware of each other to compose.

- Interfaces with multiple members are often too big a unit for composition. We often desire smaller composition units.

Composition is much easier in the functional world because the basic units are functions (or lambdas). Functions do not have namespaces, and functions are the smallest units possible. As long as the function signatures match, we can fit them together. In addition, some functional languages rely on a remarkably small number of types, for example, cons in Scheme. Is there a middle ground where we can do the same in C#, which is an object-oriented language with some functional features?

As the Linq library has demonstrated, types implementing IEnumerable<T> can be easily composed in a fluent style. In addition, my blog on Linq to graph shows that some more complicated data structures can be flattened to IEnumerable<T> and thus consumed by Linq. This takes care of the cases where T is a simple type or a well-known type. For more complicated data types, a type that implements IDictionary<string, object> can store arbitrarily complex data structures, as scripting languages like JavaScript demonstrate. Many data sources, such as a record from a comma-separated file or an IDataReader, are naturally an IDictionary<string, object>. Many program outputs, such as JSON and XML, also map onto IDictionary. The remaining question is whether we can use IDictionary at the heart of our programs.

Unfortunately, C# code to access a dictionary is ugly; there is currently no syntactic sugar to make it pretty (while VB.NET has some). A combination of the C# dynamic keyword and DynamicObject is the closest thing to making IDictionary access pretty, but DynamicObject carries a huge overhead. So the solution that I propose is to create a strongly-typed wrapper over the underlying dictionary when strong typing is needed. This is not a new idea; strongly-typed datasets already use it. We can in fact use C# dynamic to duck-type against strongly-typed wrappers from any namespace. The duck-typing code generated by the C# compiler is far more efficient than DynamicObject. It is fairly easy to generate strongly-typed wrapper code from metadata and templates, and I intend to demonstrate that in later blog posts.
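A strongly-typed wrapper of the kind proposed here might look like the sketch below. It is only an illustration of the idea: the class name LogRecordView and its two properties are invented for this example (the keys follow W3C log field names), and it is not the W3SVCLogRecord class from SkyLinq.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical wrapper: typed, IntelliSense-friendly access over a dictionary.
// It keeps a reference to the underlying data and does not copy it into fields.
public sealed class LogRecordView
{
    private readonly IDictionary<string, object> _data;

    public LogRecordView(IDictionary<string, object> data)
    {
        _data = data ?? throw new ArgumentNullException(nameof(data));
    }

    // Each property converts on demand, so unused fields are never converted.
    public string UriStem => (string)_data["cs-uri-stem"];

    public int Status => Convert.ToInt32(_data["sc-status"]);
}
```

Code in the middle of the program can then be written against LogRecordView while the data itself stays in whatever dictionary the source produced.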

For an example of an IDictionary implementation over a delimited file record, see the Record class in SkyLinq. For an example of a strongly-typed wrapper, see the W3SVCLogRecord class. I do not have many composition examples yet, but I promise that as the SkyLinq project unfolds, the entire system will be built on composition.

-

Be aware of the memory implication of GroupBy in LINQ

LINQ functions generally have a very small memory footprint. They use lazy evaluation whenever possible: an item is not evaluated until MoveNext() and Current of the enumerator are called, so only one element needs to be held in memory at a time.

GroupBy is an exception. An IEnumerable<T> is often split into one IEnumerable<T> per group before it is processed further. LINQ often has no choice but to accumulate each group in a List<T>-like structure, resulting in the entire IEnumerable<T> being loaded into memory at once.

If we are only interested in the aggregated results of each group, we can optimize GroupBy with the following implementation:

We use a dictionary to hold the accumulated value for each group. Each element from the IEnumerable<T> is immediately consumed by the accumulator as it is read in. This way, we only need enough memory to hold one accumulated value per group, far less than the entire IEnumerable<T> if each group has a large number of elements.
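A minimal sketch of this dictionary-based approach follows. The extension method name GroupAggregate and its parameter names are assumptions for this example and may differ from the SkyLinq implementation.

```csharp
using System;
using System.Collections.Generic;

public static class GroupAggregateExtensions
{
    // Hypothetical helper: folds each element into a per-key accumulator as it is read,
    // so memory grows with the number of groups rather than the number of elements.
    public static IEnumerable<KeyValuePair<TKey, TAcc>> GroupAggregate<T, TKey, TAcc>(
        this IEnumerable<T> source,
        Func<T, TKey> keySelector,
        TAcc seed,
        Func<TAcc, T, TAcc> accumulate)
    {
        var totals = new Dictionary<TKey, TAcc>();
        foreach (var item in source)
        {
            var key = keySelector(item);
            // Start from the seed the first time a key is seen.
            var current = totals.TryGetValue(key, out var existing) ? existing : seed;
            totals[key] = accumulate(current, item);
        }
        return totals;
    }
}
```

For example, `urls.GroupAggregate(u => u, 0, (count, u) => count + 1)` counts hits per URL. Unlike most LINQ operators, this sketch reads the whole source as soon as it is called.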

If the IEnumerable<T> is already ordered by the key, we can further optimize the code. We would need memory to hold only one group at a time.

As usual, all LINQ samples are available in the CodePlex SkyLinq project.
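For input that is already sorted by key, a sketch along the following lines keeps a single accumulator and emits each group as soon as the key changes. The name GroupAggregateSorted is hypothetical, and the method assumes that equal keys are adjacent in the input; it does not verify this.

```csharp
using System;
using System.Collections.Generic;

public static class SortedGroupAggregateExtensions
{
    // Hypothetical helper: assumes elements with equal keys are adjacent in the source.
    public static IEnumerable<KeyValuePair<TKey, TAcc>> GroupAggregateSorted<T, TKey, TAcc>(
        this IEnumerable<T> source,
        Func<T, TKey> keySelector,
        TAcc seed,
        Func<TAcc, T, TAcc> accumulate)
    {
        var comparer = EqualityComparer<TKey>.Default;
        bool hasGroup = false;
        TKey currentKey = default;
        TAcc acc = seed;

        foreach (var item in source)
        {
            var key = keySelector(item);
            if (hasGroup && !comparer.Equals(key, currentKey))
            {
                // The key changed: the previous group is complete, so emit it and reset.
                yield return new KeyValuePair<TKey, TAcc>(currentKey, acc);
                acc = seed;
            }
            currentKey = key;
            hasGroup = true;
            acc = accumulate(acc, item);
        }

        if (hasGroup)
            yield return new KeyValuePair<TKey, TAcc>(currentKey, acc);
    }
}
```

Because it uses yield return, this version is lazy and holds only the current key and its accumulated value.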

-

LINQ to Graph

As promised in my previous post, I will now provide some details about an example I gave during my talk at SoCal Code Camp titled “LINQ to Objects A-Z”. In this post, I will discuss LINQ to Graph.

LINQ to Objects has a large number of functions to query IEnumerable<T>. What if we are dealing with a hierarchy? It turns out LINQ can flatten one-level-down hierarchies with one of the SelectMany methods. For deeper hierarchies, we need to do either a depth-first search (DFS) or a breadth-first search (BFS). In this post, I will provide generic implementations of BFS and DFS. These functions return an IEnumerable<T> that can subsequently be processed with LINQ.

With DFS, we recursively place exposed nodes on a stack and search each node until nothing is left on the stack. With BFS, we use a queue instead of a stack to store unsearched nodes. The implementation of DFS is relatively simple, as recursive function calls already provide a stack implicitly. The following code snippets show the DFS implementation.

The DFS function accepts three arguments. The first argument is the starting node. The second argument, getInners, is a lambda function that returns the child inner nodes of a parent node. The third argument, getLeafs, is a lambda function that returns the leaf nodes of a parent node.

The following code snippets demonstrate using DFS to search a directory for files recursively. We pass the path of the starting directory as the first argument. We use Directory.EnumerateDirectories to implement the getInners function and use Directory.EnumerateFiles to implement the getLeafs function.
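A recursive DFS with this three-argument shape could be sketched as follows. This is a reconstruction from the description above rather than the code in SkyLinq; the class name GraphDfs and the choice to return only leaf nodes are assumptions.

```csharp
using System;
using System.Collections.Generic;

public static class GraphDfs
{
    // Hypothetical sketch: yields the leaves of the start node, then descends into
    // each inner node in turn. The call stack plays the role of the explicit stack.
    public static IEnumerable<TLeaf> DFS<TInner, TLeaf>(
        TInner start,
        Func<TInner, IEnumerable<TInner>> getInners,
        Func<TInner, IEnumerable<TLeaf>> getLeafs)
    {
        foreach (var leaf in getLeafs(start))
            yield return leaf;

        foreach (var inner in getInners(start))
            foreach (var leaf in DFS(inner, getInners, getLeafs))
                yield return leaf;
    }
}
```

Since the result is a lazy IEnumerable<TLeaf>, a caller can chain Where, Take and other LINQ operators and stop the traversal early.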

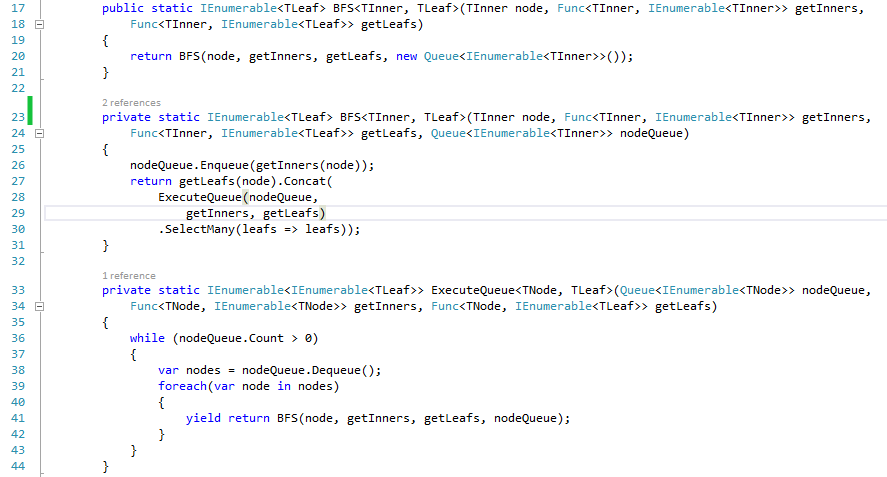

The implementation of BFS is longer. We need to implement a queue explicitly. Two helper functions are defined to help manage the queue. The BFS function can be used in place of DFS for the same recursive problem; only the order of the outputs will change.

The source code for this post can be found at http://skylinq.codeplex.com. In future posts, I will continue discussing applications of LINQ to combinatorial problems.
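For comparison, a compact BFS with the same argument shape can be sketched with Queue<T>. This simplified version does not use the two helper functions mentioned above, and the class name GraphBfs is an assumption for this example.

```csharp
using System;
using System.Collections.Generic;

public static class GraphBfs
{
    // Hypothetical sketch: inner nodes wait in a queue, so all nodes at one depth
    // are visited before any node at the next depth.
    public static IEnumerable<TLeaf> BFS<TInner, TLeaf>(
        TInner start,
        Func<TInner, IEnumerable<TInner>> getInners,
        Func<TInner, IEnumerable<TLeaf>> getLeafs)
    {
        var queue = new Queue<TInner>();
        queue.Enqueue(start);

        while (queue.Count > 0)
        {
            var node = queue.Dequeue();

            foreach (var leaf in getLeafs(node))
                yield return leaf;

            foreach (var inner in getInners(node))
                queue.Enqueue(inner);
        }
    }
}
```

On a directory tree, this returns the files of the starting directory first, then the files of its immediate subdirectories, and so on level by level.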

-

Is it time for cloud-based ASP.NET IDE? (round 2)

Eight months ago, I asked whether it is time for a cloud-based ASP.NET IDE. I have long been dreaming of being able to create a web application on the spot while talking to users. I was able to do that 20 years ago with VB3. Today, the closest thing I can do with a web application is with a CMS like Orchard. To work on a live website, we need an editor that can access the live site. We also need a tool that shows the link between the HTML in the browser and the code that generates it.

A lot has happened in the past eight months. For the cloud-based editor, I first saw Scott Hanselman’s blog about Microsoft’s own cloud editor. Then this editor appeared in Visual Studio Online.

For the tools that link HTML with source code, I first saw the very impressive shape tracing tool in Orchard. Then we saw the Browser Link and remote debugging features in Visual Studio 2013.

So whether the IDE itself is in the cloud or not, the new VS2013 features, together with the Azure feature of deploying directly from a repository, bring us ever closer to being able to work on a live web application in front of a customer.

-

Gave a talk at SoCal Code Camp at USC today titled “Linq to Objects A-Z”

I gave a talk at SoCal Code Camp on Linq to Objects. With careful categorization of Linq functions, I was able to cover the entire set of Linq functions in only 35 minutes. I was able to spend the rest of the time on demos.

In my first demo, I showed that I was able to write a top 20 URL type of query using 4 lines of library code and 9 lines of Linq code, without tools like Log Parser. I also demonstrated that I only need to change 2 lines of code to go from querying a single log file to querying a whole directory of log files. It would be just as simple to run the query against multiple servers in parallel.

In my second demo, I discussed how to turn graph depth-first search (DFS) and breadth-first search (BFS) into a Linq-queryable problem. The class LinqToGraph contains the only DFS and BFS code I will ever have to write; the rest can be done in the lambdas passed to the DFS or BFS calls.

In future blogs, I will provide more detailed explanations of the code.
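A query of the "top N URLs" kind could look roughly like the sketch below. It is not the code from the talk: the class name TopUrls is made up, and the caller is assumed to know the position of the URL column (uriIndex) in the space-separated W3C log line. Lines starting with "#" are skipped because W3C log files use them for directives.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TopUrls
{
    // Hypothetical sketch: counts hits per URL and returns the n most requested URLs.
    public static IEnumerable<KeyValuePair<string, int>> Top(
        IEnumerable<string> lines, int uriIndex, int n)
    {
        return lines
            .Where(line => line.Length > 0 && !line.StartsWith("#")) // skip directives and blanks
            .Select(line => line.Split(' '))
            .Where(fields => fields.Length > uriIndex)                // skip malformed lines
            .GroupBy(fields => fields[uriIndex])
            .Select(g => new KeyValuePair<string, int>(g.Key, g.Count()))
            .OrderByDescending(pair => pair.Value)
            .Take(n);
    }
}
```

Because the query only depends on IEnumerable<string>, moving from one file to a directory means changing only the expression that produces the lines, for example from `File.ReadLines(path)` to `Directory.EnumerateFiles(dir).SelectMany(f => File.ReadLines(f))`.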

Links:

Link to Powerpoint slides.

![image[2] image[2]](https://aspblogs.blob.core.windows.net/media/lichen/Media/image2_0D6BC28B.png)

![image[5] image[5]](https://aspblogs.blob.core.windows.net/media/lichen/Media/image5_7CF3518F.png)