Speech Recognition in ASP.NET

Speech synthesis and recognition were both introduced in .NET 3.0, and both live in System.Speech.dll. In the past, I talked about speech synthesis in the context of ASP.NET Web Forms applications; this time, I’m going to talk about speech recognition.

.NET has in fact two APIs for that:

- System.Speech, which comes with the core framework;
- Microsoft.Speech, offering a somewhat similar API, but requiring a separate download: Microsoft Speech Platform Software Development Kit.

I am going to demonstrate a technique that makes use of HTML5 features, namely Data URIs and the getUserMedia API, and also ASP.NET Client Callbacks, which, if you have been following my blog, you should know I am a big fan of.

First, because we have two APIs that we can use, let’s start by creating an abstract base provider class:

    public abstract class SpeechRecognitionProvider : IDisposable
    {
        protected SpeechRecognitionProvider(SpeechRecognition recognition)
        {
            this.Recognition = recognition;
        }

        ~SpeechRecognitionProvider()
        {
            this.Dispose(false);
        }

        public abstract SpeechRecognitionResult RecognizeFromWav(String filename);

        protected SpeechRecognition Recognition
        {
            get;
            private set;
        }

        protected virtual void Dispose(Boolean disposing)
        {
        }

        void IDisposable.Dispose()
        {
            GC.SuppressFinalize(this);
            this.Dispose(true);
        }
    }

It basically features one method, RecognizeFromWav, which takes the physical path of a .WAV file and returns a SpeechRecognitionResult (code coming next). For completeness, it also implements the Dispose Pattern, because some providers may require it.

In a moment we will be creating implementations for the built-in .NET provider as well as Microsoft Speech Platform.

The Recognition property refers to our Web Forms control, SpeechRecognition, which inherits from HtmlGenericControl and is the one that knows how to instantiate one provider or the other:

    using System;
    using System.ComponentModel;
    using System.IO;
    using System.Threading;
    using System.Web.Script.Serialization;
    using System.Web.UI;
    using System.Web.UI.HtmlControls;
    using System.Web.UI.WebControls;

    [ConstructorNeedsTag(false)]
    public class SpeechRecognition : HtmlGenericControl, ICallbackEventHandler
    {
        public SpeechRecognition() : base("span")
        {
            this.OnClientSpeechRecognized = String.Empty;
            this.Mode = SpeechRecognitionMode.Desktop;
            this.Culture = String.Empty;
            this.SampleRate = 44100;
            this.Grammars = new String[0];
            this.Choices = new String[0];
        }

        public event EventHandler<SpeechRecognizedEventArgs> SpeechRecognized;

        [DefaultValue("")]
        public String Culture
        {
            get;
            set;
        }

        [DefaultValue(SpeechRecognitionMode.Desktop)]
        public SpeechRecognitionMode Mode
        {
            get;
            set;
        }

        [DefaultValue("")]
        public String OnClientSpeechRecognized
        {
            get;
            set;
        }

        [DefaultValue(44100)]
        public UInt32 SampleRate
        {
            get;
            set;
        }

        // the setter is public so that the Grammars attribute can be set in markup
        [TypeConverter(typeof(StringArrayConverter))]
        [DefaultValue("")]
        public String[] Grammars
        {
            get;
            set;
        }

        [TypeConverter(typeof(StringArrayConverter))]
        [DefaultValue("")]
        public String[] Choices
        {
            get;
            set;
        }

        protected override void OnInit(EventArgs e)
        {
            if (this.Page.Items.Contains(typeof(SpeechRecognition)))
            {
                throw (new InvalidOperationException("There can be only one SpeechRecognition control on a page."));
            }

            var sm = ScriptManager.GetCurrent(this.Page);
            // client-side call that posts the recorded sound and hands the parsed JSON result to the callback
            var reference = this.Page.ClientScript.GetCallbackEventReference(this, "sound", String.Format("function(result){{ {0}(JSON.parse(result)); }}", String.IsNullOrWhiteSpace(this.OnClientSpeechRecognized) ? "void" : this.OnClientSpeechRecognized), String.Empty, true);
            // adds startRecording, stopRecording and process to the control's DOM element
            var script = String.Format("\nvar processor = document.getElementById('{0}'); processor.stopRecording = function(sampleRate) {{ window.stopRecording(processor, sampleRate ? sampleRate : 44100); }}; processor.startRecording = function() {{ window.startRecording(); }}; processor.process = function(sound){{ {1} }};\n", this.ClientID, reference);

            if (sm != null)
            {
                this.Page.ClientScript.RegisterStartupScript(this.GetType(), String.Concat("process", this.ClientID), String.Format("Sys.WebForms.PageRequestManager.getInstance().add_pageLoaded(function() {{ {0} }});\n", script), true);
            }
            else
            {
                this.Page.ClientScript.RegisterStartupScript(this.GetType(), String.Concat("process", this.ClientID), script, true);
            }

            this.Page.ClientScript.RegisterClientScriptResource(this.GetType(), String.Concat(this.GetType().Namespace, ".Script.js"));
            this.Page.Items[typeof(SpeechRecognition)] = this;

            base.OnInit(e);
        }

        protected virtual void OnSpeechRecognized(SpeechRecognizedEventArgs e)
        {
            var handler = this.SpeechRecognized;

            if (handler != null)
            {
                handler(this, e);
            }
        }

        protected SpeechRecognitionProvider GetProvider()
        {
            switch (this.Mode)
            {
                case SpeechRecognitionMode.Desktop:
                    return (new DesktopSpeechRecognitionProvider(this));

                case SpeechRecognitionMode.Server:
                    return (new ServerSpeechRecognitionProvider(this));
            }

            return (null);
        }

        #region ICallbackEventHandler Members

        String ICallbackEventHandler.GetCallbackResult()
        {
            AsyncOperationManager.SynchronizationContext = new SynchronizationContext();

            var filename = Path.GetTempFileName();
            var result = null as SpeechRecognitionResult;

            try
            {
                using (var engine = this.GetProvider())
                {
                    var data = this.Context.Items["data"].ToString();

                    // the client sends the .WAV file as a base64 string
                    using (var file = File.OpenWrite(filename))
                    {
                        var bytes = Convert.FromBase64String(data);
                        file.Write(bytes, 0, bytes.Length);
                    }

                    result = engine.RecognizeFromWav(filename) ?? new SpeechRecognitionResult(String.Empty);
                }
            }
            finally
            {
                // always remove the temporary file, even if recognition fails
                File.Delete(filename);
            }

            var args = new SpeechRecognizedEventArgs(result);

            this.OnSpeechRecognized(args);

            var json = new JavaScriptSerializer().Serialize(result);

            return (json);
        }

        void ICallbackEventHandler.RaiseCallbackEvent(String eventArgument)
        {
            this.Context.Items["data"] = eventArgument;
        }

        #endregion
    }

SpeechRecognition implements ICallbackEventHandler for a self-contained AJAX experience; it registers a couple of JavaScript functions and also an embedded JavaScript file with some useful sound manipulation and conversion functions. Only one instance is allowed on a page. On the client side, this JavaScript uses getUserMedia to access an audio source, packs the recorded samples as a .WAV file and encodes that file as a Data URI, whose base64 payload is what gets sent to the server. I got these functions from http://typedarray.org/from-microphone-to-wav-with-getusermedia-and-web-audio/ and made some changes to them. I like them because they don’t require any external library, which makes all this pretty much self-contained.
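
To make the Data URI step a bit more concrete, here is a minimal sketch of what travels over the callback. The helper name dataUriToBase64 is hypothetical (it is not part of the control's script), and Node's Buffer is used only as a stand-in for the browser's FileReader and for the server-side Convert.FromBase64String call:

```javascript
// Hypothetical helper: returns the base64 payload of a Data URI,
// that is, everything after the first comma.
function dataUriToBase64(dataUri) {
    var comma = dataUri.indexOf(',');
    if (dataUri.indexOf('data:') !== 0 || comma < 0) {
        throw new Error('Not a Data URI');
    }
    return dataUri.substring(comma + 1);
}

// Build a tiny Data URI by hand: the four bytes 'RIFF' that start every .WAV file.
var payload = Buffer.from('RIFF', 'ascii').toString('base64');
var dataUri = 'data:audio/wav;base64,' + payload;

// This is the string the client would hand to the callback...
var sent = dataUriToBase64(dataUri);

// ...and this mimics the server turning it back into bytes.
var bytes = Buffer.from(sent, 'base64');
console.log(sent, bytes.toString('ascii'));
```

Only the base64 part is sent, which is why the server can decode the callback argument directly without parsing the Data URI prefix.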

The control exposes some custom properties:

- Culture: an optional culture name, such as “pt-PT” or “en-US”; if not specified, it defaults to the current culture in the server machine;
- Mode: one of the two providers: Desktop (for System.Speech) or Server (for Microsoft.Speech, of Microsoft Speech Platform);
- OnClientSpeechRecognized: the name of a JavaScript callback function that will be called when there are results (more on this later);
- SampleRate: the sample rate of the recording; by default, it is 44100;
- Grammars: an optional collection of additional grammar files, with extension .grxml (Speech Recognition Grammar Specification), to add to the engine;
- Choices: an optional collection of choices to recognize, if we want to restrict the scope, such as “yes”/“no”, “red”/“green”, etc.

The mode enumeration looks like this:

    public enum SpeechRecognitionMode
    {
        Desktop,
        Server
    }

The SpeechRecognition control also has an event, SpeechRecognized, which allows overriding the detected phrases. Its argument is this simple class that follows the regular .NET event pattern:

    [Serializable]
    public sealed class SpeechRecognizedEventArgs : EventArgs
    {
        public SpeechRecognizedEventArgs(SpeechRecognitionResult result)
        {
            this.Result = result;
        }

        public SpeechRecognitionResult Result
        {
            get;
            private set;
        }
    }

Which in turn holds a SpeechRecognitionResult:

    public class SpeechRecognitionResult
    {
        public SpeechRecognitionResult(String text, params String[] alternates)
        {
            this.Text = text;
            this.Alternates = alternates.ToList();
        }

        public String Text
        {
            get;
            set;
        }

        public List<String> Alternates
        {
            get;
            private set;
        }
    }

This class receives the phrase that the speech recognition engine understood plus an array of additional alternatives, in descending order of confidence.
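
On the client, this object arrives as JSON. A minimal sketch of its shape, assuming JavaScriptSerializer's default behaviour of emitting public properties under their own names; the sample values and the describe function are made up for illustration:

```javascript
// Made-up payload in the shape JavaScriptSerializer would produce
// for a SpeechRecognitionResult (values are illustrative only).
var json = '{"Text":"red","Alternates":["red","read","bread"]}';

var result = JSON.parse(json);

// Hypothetical formatter for displaying a result.
function describe(result) {
    var alternates = (result.Alternates || []).join(', ');
    return 'Recognized: ' + result.Text + ' (alternates: ' + alternates + ')';
}

console.log(describe(result));
```

Note that the property names keep their .NET casing, so client code reads result.Text and result.Alternates.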

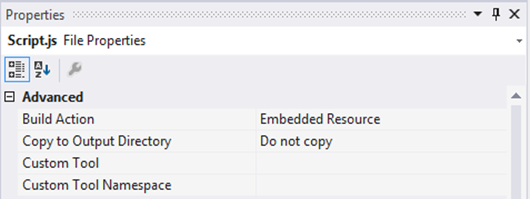

The JavaScript file containing the utility functions is embedded in the assembly:

You need to add an assembly-level attribute, WebResourceAttribute, possibly in AssemblyInfo.cs, replacing, of course, MyNamespace with your assembly’s default namespace:

    [assembly: WebResource("MyNamespace.Script.js", "text/javascript")]

This attribute registers a script file with some content-type so that it can be included in a page by the RegisterClientScriptResource method.

And here it is:

    // variables
    var leftchannel = [];
    var rightchannel = [];
    var recorder = null;
    var recording = false;
    var recordingLength = 0;
    var volume = null;
    var audioInput = null;
    var audioContext = null;
    var context = null;

    // feature detection
    navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia || navigator.mozGetUserMedia || navigator.msGetUserMedia;

    if (navigator.getUserMedia)
    {
        navigator.getUserMedia({ audio: true }, onSuccess, onFailure);
    }
    else
    {
        alert('getUserMedia not supported in this browser.');
    }

    function startRecording()
    {
        recording = true;
        // reset the buffers for the new recording
        recordingLength = 0;
        leftchannel = [];
        rightchannel = [];
    }

    function stopRecording(elm, sampleRate)
    {
        recording = false;

        // we flatten the left and right channels down
        var leftBuffer = mergeBuffers(leftchannel, recordingLength);
        var rightBuffer = mergeBuffers(rightchannel, recordingLength);
        // we interleave both channels together
        var interleaved = interleave(leftBuffer, rightBuffer);

        // we create our wav file: 44 bytes of header plus 2 bytes per sample
        var buffer = new ArrayBuffer(44 + interleaved.length * 2);
        var view = new DataView(buffer);

        // RIFF chunk descriptor
        writeUTFBytes(view, 0, 'RIFF');
        // chunk size is the file size minus the 8 bytes of 'RIFF' and this field
        view.setUint32(4, 36 + interleaved.length * 2, true);
        writeUTFBytes(view, 8, 'WAVE');
        // FMT sub-chunk
        writeUTFBytes(view, 12, 'fmt ');
        view.setUint32(16, 16, true);
        // audio format 1 = PCM
        view.setUint16(20, 1, true);
        // stereo (2 channels)
        view.setUint16(22, 2, true);
        view.setUint32(24, sampleRate, true);
        // byte rate: sample rate * 2 channels * 2 bytes per sample
        view.setUint32(28, sampleRate * 4, true);
        // block align: 2 channels * 2 bytes per sample
        view.setUint16(32, 4, true);
        // bits per sample
        view.setUint16(34, 16, true);
        // data sub-chunk
        writeUTFBytes(view, 36, 'data');
        view.setUint32(40, interleaved.length * 2, true);

        // write the PCM samples
        var index = 44;
        var volume = 1;

        for (var i = 0; i < interleaved.length; i++)
        {
            view.setInt16(index, interleaved[i] * (0x7FFF * volume), true);
            index += 2;
        }

        // our final binary blob
        var blob = new Blob([view], { type: 'audio/wav' });

        var reader = new FileReader();
        reader.onloadend = function ()
        {
            // strip the Data URI prefix and send only the base64 payload
            var url = reader.result.replace('data:audio/wav;base64,', '');
            elm.process(url);
        };
        reader.readAsDataURL(blob);
    }

    function interleave(leftChannel, rightChannel)
    {
        var length = leftChannel.length + rightChannel.length;
        var result = new Float32Array(length);
        var inputIndex = 0;

        for (var index = 0; index < length;)
        {
            result[index++] = leftChannel[inputIndex];
            result[index++] = rightChannel[inputIndex];
            inputIndex++;
        }

        return result;
    }

    function mergeBuffers(channelBuffer, recordingLength)
    {
        var result = new Float32Array(recordingLength);
        var offset = 0;

        for (var i = 0; i < channelBuffer.length; i++)
        {
            var buffer = channelBuffer[i];
            result.set(buffer, offset);
            offset += buffer.length;
        }

        return result;
    }

    function writeUTFBytes(view, offset, string)
    {
        for (var i = 0; i < string.length; i++)
        {
            view.setUint8(offset + i, string.charCodeAt(i));
        }
    }

    function onFailure(e)
    {
        alert('Error capturing audio.');
    }

    function onSuccess(e)
    {
        // creates the audio context
        audioContext = (window.AudioContext || window.webkitAudioContext);
        context = new audioContext();

        // creates a gain node
        volume = context.createGain();

        // creates an audio node from the microphone incoming stream
        audioInput = context.createMediaStreamSource(e);

        // connect the stream to the gain node
        audioInput.connect(volume);

        /* From the spec: This value controls how frequently the audioprocess event is
        dispatched and how many sample-frames need to be processed each call.
        Lower values for buffer size will result in a lower (better) latency.
        Higher values will be necessary to avoid audio breakup and glitches */
        var bufferSize = 2048;

        recorder = context.createScriptProcessor(bufferSize, 2, 2);
        recorder.onaudioprocess = function (e)
        {
            if (recording == false)
            {
                return;
            }

            var left = e.inputBuffer.getChannelData(0);
            var right = e.inputBuffer.getChannelData(1);

            // we clone the samples
            leftchannel.push(new Float32Array(left));
            rightchannel.push(new Float32Array(right));

            recordingLength += bufferSize;
        };

        // we connect the recorder
        volume.connect(recorder);
        recorder.connect(context.destination);
    }
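
The 44-byte header written by stopRecording follows the canonical PCM .WAV layout. As a rough, standalone sketch of that arithmetic, the function below builds only the header so the field values can be inspected. The name buildWavHeader and its parameters are my own and not part of the script above, and it assumes 16-bit PCM samples:

```javascript
// Hypothetical helper: builds only the 44-byte header of a 16-bit PCM .WAV file.
// sampleCount is the total number of samples across all channels.
function buildWavHeader(sampleCount, sampleRate, channels) {
    var bytesPerSample = 2;                            // 16-bit PCM
    var blockAlign = channels * bytesPerSample;        // bytes per sample frame
    var dataLength = sampleCount * bytesPerSample;     // size of the audio data
    var view = new DataView(new ArrayBuffer(44));

    function writeAscii(offset, text) {
        for (var i = 0; i < text.length; i++) {
            view.setUint8(offset + i, text.charCodeAt(i));
        }
    }

    writeAscii(0, 'RIFF');
    view.setUint32(4, 36 + dataLength, true);          // file size minus the first 8 bytes
    writeAscii(8, 'WAVE');
    writeAscii(12, 'fmt ');
    view.setUint32(16, 16, true);                      // size of the fmt sub-chunk
    view.setUint16(20, 1, true);                       // audio format 1 = PCM
    view.setUint16(22, channels, true);
    view.setUint32(24, sampleRate, true);
    view.setUint32(28, sampleRate * blockAlign, true); // byte rate
    view.setUint16(32, blockAlign, true);
    view.setUint16(34, 8 * bytesPerSample, true);      // bits per sample
    writeAscii(36, 'data');
    view.setUint32(40, dataLength, true);
    return view;
}

// One second of interleaved stereo audio at 44100 Hz is 88200 samples.
var header = buildWavHeader(88200, 44100, 2);
console.log(header.getUint32(28, true), header.getUint32(40, true));
```

All multi-byte fields are little-endian, hence the true passed as the last argument of each DataView setter.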

OK, let’s move on to the provider implementations; first, Desktop:

    using System;
    using System.Globalization;
    using System.IO;
    using System.Linq;
    using System.Speech.Recognition;
    using System.Speech.Recognition.SrgsGrammar;
    using System.Web;

    public class DesktopSpeechRecognitionProvider : SpeechRecognitionProvider
    {
        public DesktopSpeechRecognitionProvider(SpeechRecognition recognition) : base(recognition)
        {
        }

        public override SpeechRecognitionResult RecognizeFromWav(String filename)
        {
            var engine = null as SpeechRecognitionEngine;

            if (String.IsNullOrWhiteSpace(this.Recognition.Culture) == true)
            {
                engine = new SpeechRecognitionEngine();
            }
            else
            {
                engine = new SpeechRecognitionEngine(CultureInfo.CreateSpecificCulture(this.Recognition.Culture));
            }

            using (engine)
            {
                // with no grammars or choices, fall back to free dictation
                if ((this.Recognition.Grammars.Any() == false) && (this.Recognition.Choices.Any() == false))
                {
                    engine.LoadGrammar(new DictationGrammar());
                }

                // grammar paths are relative to the application root
                foreach (var grammar in this.Recognition.Grammars)
                {
                    var doc = new SrgsDocument(Path.Combine(HttpRuntime.AppDomainAppPath, grammar));
                    engine.LoadGrammar(new Grammar(doc));
                }

                if (this.Recognition.Choices.Any() == true)
                {
                    var choices = new Choices(this.Recognition.Choices.ToArray());
                    engine.LoadGrammar(new Grammar(choices));
                }

                engine.SetInputToWaveFile(filename);

                var result = engine.Recognize();

                return ((result != null) ? new SpeechRecognitionResult(result.Text, result.Alternates.Select(x => x.Text).ToArray()) : null);
            }
        }
    }

What this provider does is simply receive the location of a .WAV file and feed it to SpeechRecognitionEngine, together with some parameters of SpeechRecognition (Culture, Grammars and Choices).

Finally, the code for the Server (Microsoft Speech Platform Software Development Kit) version:

    using System;
    using System.Globalization;
    using System.IO;
    using System.Linq;
    using System.Web;
    using Microsoft.Speech.Recognition;
    using Microsoft.Speech.Recognition.SrgsGrammar;

    public class ServerSpeechRecognitionProvider : SpeechRecognitionProvider
    {
        public ServerSpeechRecognitionProvider(SpeechRecognition recognition) : base(recognition)
        {
        }

        public override SpeechRecognitionResult RecognizeFromWav(String filename)
        {
            var engine = null as SpeechRecognitionEngine;

            if (String.IsNullOrWhiteSpace(this.Recognition.Culture) == true)
            {
                engine = new SpeechRecognitionEngine();
            }
            else
            {
                engine = new SpeechRecognitionEngine(CultureInfo.CreateSpecificCulture(this.Recognition.Culture));
            }

            using (engine)
            {
                // grammar paths are relative to the application root
                foreach (var grammar in this.Recognition.Grammars)
                {
                    var doc = new SrgsDocument(Path.Combine(HttpRuntime.AppDomainAppPath, grammar));
                    engine.LoadGrammar(new Grammar(doc));
                }

                if (this.Recognition.Choices.Any() == true)
                {
                    var choices = new Choices(this.Recognition.Choices.ToArray());
                    engine.LoadGrammar(new Grammar(choices));
                }

                engine.SetInputToWaveFile(filename);

                var result = engine.Recognize();

                return ((result != null) ? new SpeechRecognitionResult(result.Text, result.Alternates.Select(x => x.Text).ToArray()) : null);
            }
        }
    }

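As you can see, it is very similar to the Desktop one. The differences are that its types come from Microsoft.Speech.Recognition instead of System.Speech.Recognition and that it does not load a DictationGrammar, which the Microsoft Speech Platform does not offer, so this provider needs at least one grammar or choice. Keep in mind, also, that for this provider to work you will have to download the Microsoft Speech Platform SDK, the Microsoft Speech Platform Runtime and at least one language from the Language Pack.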

Here is a sample markup declaration:

    <web:SpeechRecognition runat="server" ID="processor" ClientIDMode="Static" Mode="Desktop" Culture="en-US" OnSpeechRecognized="OnSpeechRecognized" OnClientSpeechRecognized="onSpeechRecognized" />

If you want to add specific choices, add the Choices attribute to the control declaration and separate the values by commas:

    Choices="One, Two, Three"

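Or add a grammar file, using a path relative to the application root, since the providers combine it with HttpRuntime.AppDomainAppPath:

    Grammars="MyGrammar.grxml"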

By the way, grammars are not so difficult to create; you can find good documentation on MSDN: http://msdn.microsoft.com/en-us/library/ee800145.aspx.

To finish, here is some sample JavaScript for starting recognition and receiving the results:

    <script type="text/javascript">

    function onSpeechRecognized(result)
    {
        // result is the deserialized SpeechRecognitionResult (Text and Alternates)
        window.alert('Recognized: ' + result.Text + '\nAlternatives: ' + result.Alternates.join(', '));
    }

    function start()
    {
        document.getElementById('processor').startRecording();
    }

    function stop()
    {
        document.getElementById('processor').stopRecording();
    }

    </script>

And that’s it. You start recognition by calling startRecording(), get results in onSpeechRecognized() (or any other function set in the OnClientSpeechRecognized property) and stop recording with stopRecording(). The values passed to onSpeechRecognized() are those that may have been filtered by the server-side SpeechRecognized event handler.

A final word of advice: because generated sound files may become very large, do keep the recordings as short as possible.
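
To get a feel for the numbers, here is a back-of-the-envelope sketch. It assumes the format produced by the script in this post (16-bit stereo PCM with a 44-byte header) and standard base64 encoding, which emits 4 characters for every 3 bytes; the function name estimateUploadSize is hypothetical:

```javascript
// Hypothetical estimator for the size of one recording.
function estimateUploadSize(seconds, sampleRate) {
    var channels = 2;        // the script records stereo
    var bytesPerSample = 2;  // 16-bit PCM
    var wavBytes = 44 + Math.round(seconds * sampleRate) * channels * bytesPerSample;
    // base64 encodes every 3 bytes as 4 characters, padding the last group
    var base64Chars = Math.ceil(wavBytes / 3) * 4;
    return { wavBytes: wavBytes, base64Chars: base64Chars };
}

var tenSeconds = estimateUploadSize(10, 44100);
console.log(tenSeconds.wavBytes, tenSeconds.base64Chars);
```

At 44100 Hz, ten seconds of speech already comes to about 1.7 MB of audio and roughly 2.35 million characters of callback data, so a few seconds per recording is a sensible target.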

Of course, this offers several different possibilities, and I am looking forward to hearing them from you!