Blazor discovery part 1: You can't entirely escape Javascript

(This is the first of a series of reposts from my personal blog, which may contain politics and things you don't care about. If you're just here for the .NET and Blazor content, read on!)

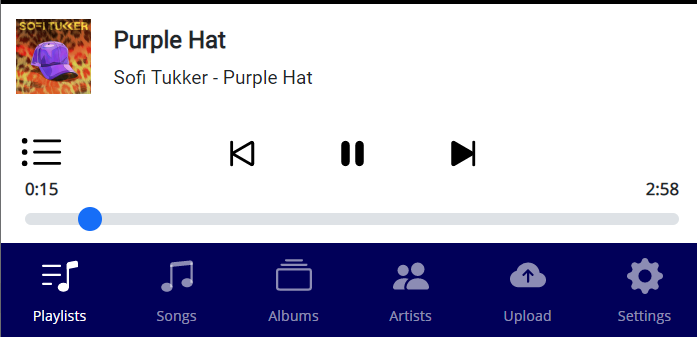

Way back in July, I thought I was mostly joking to myself when I said, "I should build my own cloud music locker service!" But Google's dire warnings about the forthcoming end of Google Music were alarming. They were doubly so when I saw that migrating my stuff to YouTube Music led to a pretty horrible experience, with videos and ads, just to listen to music I already owned. A few weeks later, I messed around to see how hard it was to extract the metadata from MP3s with various .NET libraries, and of course that was easy enough. A month and a half later, something convinced me I should look harder at Blazor as a viable alternative to Vue.js or some other front-end framework. Now, a few weekends later, I have something totally usable running on my phone as a progressive web app, and I use it every single day.

I put the project on GitHub because I felt like someone else might get some use out of it, even if it was just an experiment. I called it "MLocker," as in "music locker," the term used to describe services where you upload your tunes and listen to them anywhere. By early October, I had proven that I could extract song data, persist it, and save the file in Azure storage. So on October 11, I went into extreme prototyping mode to see if I could actually play some music back in the browser. I had never touched an HTML audio tag in my life, so it was new territory. I made some totally amazing discoveries at this point. Among them, APIs in .NET already supported byte ranges and HTTP 206 (Partial Content) responses, and the audio tag in every modern browser already knew how to quasi-stream a song by requesting it in chunks instead of downloading the whole thing up front. Neat!
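MLocker leans on .NET's built-in range support for this, so the following is not code from the project. It is a rough illustration of what a server does when the audio tag asks for a chunk. This minimal sketch resolves a single-range Range header (for example bytes=0-1023) against a file size and produces the 206 details. The function name resolveRange is hypothetical. Multi-range and malformed headers are simply ignored, which HTTP permits; in those cases the server sends the whole file with a 200.

```javascript
// Hypothetical helper: resolve a single "Range: bytes=..." header against a file size.
// Returns null to mean "ignore the header and send the whole file with 200".
function resolveRange(rangeHeader, size) {
  if (!rangeHeader) return null;
  const match = /^bytes=(\d*)-(\d*)$/.exec(rangeHeader.trim());
  // Malformed or multi-range requests are ignored, which HTTP allows
  if (!match || (match[1] === '' && match[2] === '')) return null;

  const unsatisfiable = { status: 416, contentRange: `bytes */${size}` };
  let start;
  let end;
  if (match[1] === '') {
    // Suffix form "bytes=-N": the last N bytes of the file
    const suffixLength = Number(match[2]);
    if (suffixLength === 0 || size === 0) return unsatisfiable;
    start = Math.max(size - suffixLength, 0);
    end = size - 1;
  } else {
    start = Number(match[1]);
    // A last byte before the first byte is an invalid spec, so ignore it
    if (match[2] !== '' && Number(match[2]) < start) return null;
    if (start >= size) return unsatisfiable;
    // Open-ended "bytes=N-" or an end past EOF both clamp to the last byte
    end = match[2] === '' ? size - 1 : Math.min(Number(match[2]), size - 1);
  }
  return {
    status: 206,
    start,
    end,
    contentLength: end - start + 1,
    contentRange: `bytes ${start}-${end}/${size}`,
  };
}
```

The server would then send bytes start through end (inclusive) with the Content-Range header. That is the chunking the audio tag relies on to start playback before the whole song has arrived.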

Before I get to the meat of this post, here's the high-level architecture. An API acts as the plumbing between the front-end Blazor WASM app, a database, and Azure blob storage. Blazor on the server seems like a terrible idea for anything beyond pre-rendering, so these posts are just about WASM. For simplicity, I have the API project serving up the Blazor front-end, but you could serve it from literally any static location, if that's your thing. Please don't consider anything here a "best practice." This discovery process has been fun, and this stuff is way easier than I expected, but the result thus far is not necessarily well-factored or battle-tested.

I learned that I would have to get to know Javascript interop a bit. The audio element has some events that Blazor doesn't surface, and controlling it would mostly require Javascript too. In this post, I'll go into how I arrived at the current design of the player component.

While I've never gone hardcore into any of the modern front-end frameworks (because enormous npm dependency trees give me anxiety), I have gone deep enough into Vue.js and been around React enough to understand the rich component model and the use of shared state machines. Blazor works in largely the same way, only with C#. You break the user interface down into reusable components and hook them up to code that responds to events or supplies their data. The player for an app like this gets hit from a lot of different angles. Fortunately, there's only ever one instance of it, so after some iteration, I decided to break it up like this (links go to the source code):

- Player.razor: This is the component with the HTML markup and the code that ties it to a service.
- PlayerService: This is where the real playback logic happens. It's a C# class that lets callers know what song is playing, where in the queue the player is, etc.
- m.js: This is the fairly simple library of Javascript functions that support the things Blazor can't do directly.

The markup in Player.razor is really simple, with a few lines to display the current song and some spans that act as buttons. C# code in this file responds to button clicks and manipulates CSS classes on those elements. For example, the play/pause button calls a simple method:

```razor
@inject IPlayerService PlayerService

...

<span class="@_playClass playerButton" @onclick="PlayPause"></span>

...

@code {
    string _playClass;

    private async Task PlayPause()
    {
        var isPlaying = await PlayerService.TogglePlayer();
        UpdatePlayerButton(isPlaying);
    }

    private void UpdatePlayerButton(bool isPlaying)
    {
        _playClass = isPlaying ? "pauseButton" : "activePlayButton";
        // Required when this runs outside a Blazor event handler (e.g. from JS interop),
        // because only event handlers trigger a re-render automatically
        StateHasChanged();
    }
}
```

PlayerService.TogglePlayer() returns a boolean telling you whether you're now playing or paused. The second method then sets the matching class on the button, which shows either a play or a pause icon. Easy, right? The service isn't doing anything exotic in this case. In fact, it's just a pass-through to the Javascript that does the actual interaction with the audio tag. This works because PlayerService receives an instance of IJSRuntime through dependency injection, and Blazor registers that for you automatically.

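```csharp
// Task<bool>, not Task, so callers can await the new playing state
public async Task<bool> TogglePlayer()
{
    // Invokes window.TogglePlayer from m.js and unwraps its boolean result
    var isPlaying = await _jsRuntime.InvokeAsync<bool>("TogglePlayer");
    return isPlaying;
}
```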

Moving down the stack, here's a straightforward function that finds the audio element and checks whether it's paused. It then either plays or pauses the element and sends the new state back up the chain.

```javascript
window.TogglePlayer = () => {
    var player = document.getElementById('player');
    if (player.paused) {
        player.play();
        return true;
    } else {
        player.pause();
        return false;
    }
};
```

If your Javascript returns promises, make sure every link in the chain returns its value (I learned this the hard way). Blazor will unwrap the promise and give you the resolved result.
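For example, in current browsers player.play() itself returns a promise, and that promise rejects if the browser blocks playback (say, under an autoplay policy without a user gesture). The TogglePlayer above returns true without waiting for it. Below is a minimal sketch of an async variant that awaits play() and only reports true once playback has actually started. Because the function is async, it returns a promise that Blazor's InvokeAsync<bool> can unwrap. createTogglePlayer is a hypothetical helper that takes the element lookup as a parameter so the logic can run outside a browser.

```javascript
// Hypothetical helper: wraps any object shaped like an HTMLMediaElement
// (paused, play(), pause()) so the toggle logic can be tested on its own.
function createTogglePlayer(getPlayer) {
  return async function togglePlayer() {
    const player = getPlayer();
    if (player.paused) {
      try {
        // play() returns a promise in modern browsers; awaiting it means the
        // value Blazor receives reflects whether playback actually started.
        await player.play();
        return true;
      } catch (err) {
        // e.g. NotAllowedError when playback is blocked without a user gesture
        console.warn('Playback did not start:', err);
        return false;
      }
    }
    player.pause();
    return false;
  };
}

// In the browser, register it under the same name the C# side invokes.
if (typeof window !== 'undefined') {
  window.TogglePlayer = createTogglePlayer(() => document.getElementById('player'));
}
```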

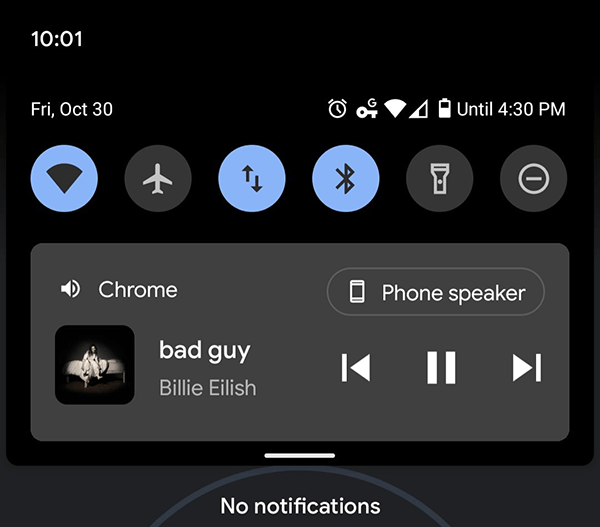

There are certainly times, though, when you need to go in the opposite direction. For example, Android and Windows fully support the MediaSession API, which is what shows playback controls and album covers on your lock screen (Android) or next to the volume control on the desktop (Windows). If someone presses the play/pause button there, I want to respond to it. In Javascript, I have to wire up an event handler and then call into the interop library that Blazor loads (the DotNet object):

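```javascript
// Only register handlers where the browser supports the MediaSession API
if ('mediaSession' in navigator) {
    const player = document.getElementById('player');

    navigator.mediaSession.setActionHandler('play', () => {
        player.play();
        // Assembly name, [JSInvokable] method name, then the argument
        DotNet.invokeMethodAsync('MLocker.WebApp', 'IsPlaying', true);
    });

    navigator.mediaSession.setActionHandler('pause', () => {
        player.pause();
        DotNet.invokeMethodAsync('MLocker.WebApp', 'IsPlaying', false);
    });
}
```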
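The lock screen and desktop overlay also show the song title and album cover, which come from navigator.mediaSession.metadata. Here is a minimal sketch of setting it. It assumes a song object with title, artist, album and artUrl properties (hypothetical, not necessarily MLocker's model) and assumes the cover is a 512x512 JPEG. The navigator and MediaMetadata constructor are parameters only so the sketch can run outside a browser.

```javascript
// Hypothetical helper: song is assumed to look like
// { title, artist, album, artUrl }, which may not match MLocker's model.
function setNowPlaying(song, nav = globalThis.navigator, Metadata = globalThis.MediaMetadata) {
  // Bail out where MediaSession isn't available (older browsers, Node)
  if (!nav || !nav.mediaSession || typeof Metadata !== 'function') {
    return false;
  }
  nav.mediaSession.metadata = new Metadata({
    title: song.title,
    artist: song.artist,
    album: song.album,
    // Assumes a 512x512 JPEG cover; list whatever sizes you actually have
    artwork: song.artUrl
      ? [{ src: song.artUrl, sizes: '512x512', type: 'image/jpeg' }]
      : [],
  });
  return true;
}
```

Calling something like this whenever the queue advances keeps the lock screen in sync with the song that is actually playing.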

These calls take two or more parameters: the compiled assembly name of your app, the name of the static method being called, and then any arguments to pass in. As far as I can tell, the static methods can live in any class or component, but in my case I've put it in the Player.razor component. The trick is that the static method has to hand off to a static delegate, and the component instance attaches its own handler to that delegate. Let's walk through that. The player component has the static method, marked with the JSInvokable attribute (important!), and all it does is invoke the static action.

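```csharp
private static Action<bool> _isPlayingAction;

[JSInvokable]
public static void IsPlaying(bool isPlaying)
{
    // Null-conditional: avoids a NullReferenceException if JS calls in
    // before a component instance has attached its method
    _isPlayingAction?.Invoke(isPlaying);
}
```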

Right now nothing useful will happen, because we haven't pointed the Action at any instance method yet. We can do that in the component's OnInitialized() or OnInitializedAsync() override, as simply as this:

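```csharp
protected override void OnInitialized()
{
    // Point the static Action at this component instance's method
    _isPlayingAction = UpdatePlayerButton;
}
```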

Wait, UpdatePlayerButton, haven't we seen that? Yes. Yes we have. We used that above to change the CSS class on the button. The Javascript did the work of making the audio element play or pause, so we just needed to let Blazor know and change the look of the button. We already had some bits for that, so we're done.

A number of other events are wired up the same way, with Javascript calling into the Blazor bits, and you can check the source code for those. Specifically, we want to react when a song ends so we can play the next one in the queue. We also want to react to the next/previous buttons from the MediaSession API.
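Those handlers follow the same shape as the play and pause handlers above. This sketch assumes hypothetical [JSInvokable] static methods named SongEnded, NextTrack and PreviousTrack on the .NET side, and the real project may name or structure them differently. The audio element raises the standard ended event when a song finishes, and nexttrack and previoustrack are MediaSession action names. The player, media session and DotNet object are passed in so the wiring can be exercised outside a browser.

```javascript
// Sketch only: 'SongEnded', 'NextTrack' and 'PreviousTrack' are hypothetical
// [JSInvokable] static methods; the project's real names may differ.
function wireQueueEvents(player, mediaSession, dotNet, assemblyName = 'MLocker.WebApp') {
  // The audio element fires 'ended' when the current song finishes
  player.addEventListener('ended', () => {
    dotNet.invokeMethodAsync(assemblyName, 'SongEnded');
  });

  // Next/previous buttons on the lock screen or desktop media overlay
  if (mediaSession) {
    mediaSession.setActionHandler('nexttrack', () => {
      dotNet.invokeMethodAsync(assemblyName, 'NextTrack');
    });
    mediaSession.setActionHandler('previoustrack', () => {
      dotNet.invokeMethodAsync(assemblyName, 'PreviousTrack');
    });
  }
}

// In the browser, once the audio element exists:
// wireQueueEvents(document.getElementById('player'), navigator.mediaSession, DotNet);
```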

This wasn't the only thing I needed JS interop for. I'm using context menus on songs to add them to playlists, add them to the queue, go to the artist, etc., and I'm using the awesome Popper.js library for that. It lets you attach one DOM element to another while keeping it visible in the window. I'm not going to get deep into that implementation. Basically, there's a click handler in the ListRow.razor component that passes the element ID of the button to a JS interop call. That call uses Popper.js to attach the context menu (already in the markup, but hidden) to the button. Sure, you could render a context menu for each song in the list, but that would mean thousands of them!
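Here is a sketch of what that interop call could look like. It assumes the Popper 2 API, where createPopper(reference, popper, options) returns an instance with destroy(); Popper 1.x used new Popper(...) instead, and I'm not asserting which version MLocker uses. The menu ID "contextMenu", the helper name and the ShowContextMenu registration are hypothetical. The document and createPopper are passed in so the logic can run outside a browser.

```javascript
// Hypothetical helper assuming the Popper 2 API: createPopper(reference, popper, options)
// returns an instance with destroy(). The menu ID is also an assumption.
function createContextMenuAttacher(doc, createPopper, menuId = 'contextMenu') {
  let instance = null; // the single shared menu is attached to one button at a time

  return function showContextMenu(buttonId) {
    const button = doc.getElementById(buttonId);
    const menu = doc.getElementById(menuId);
    if (!button || !menu) {
      return false;
    }
    // Detach from the previously clicked row before re-anchoring
    if (instance) {
      instance.destroy();
    }
    // Unhide first so Popper can measure the menu's size
    menu.style.display = 'block';
    instance = createPopper(button, menu, { placement: 'bottom-end' });
    return true;
  };
}

// In the browser, with Popper's UMD build loaded:
// window.ShowContextMenu = createContextMenuAttacher(document, Popper.createPopper);
```

Because one hidden menu is reused, the only per-row cost is the button and its element ID. That is what keeps a list of thousands of songs from carrying thousands of menus.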

There's more interop to talk about, but it gets into caching the songs and album covers using a service worker, which I'll cover in a later post. Next time, I want to talk about navigation and about hiding and showing markup based on state.