Building a live blog app in Windows Azure

If you're a technology nerd, then you've probably seen one technology news site or another do a "live blog" at some product announcement. This is basically a page on the Web where text and photo updates stream into the page as you sit there and soak it in. I don't remember which year these started to appear, but you may recall how frequently they failed. The traffic would overwhelm the site, and down it would go.

So I got to thinking, how would I build something like this? We've got a pretty big media day at Cedar Point coming up for GateKeeper, and it would be fun to post updates in real time. Ars Technica posted an article about how they tackled the problem a couple of months ago, and while elegant, it wasn't how I would do it.

My traffic expectations are lower. I don't expect to get tens of thousands of simultaneous visitors, but a couple thousand is possible. The last time we even had the chance to publish in real time from an event was 2006, for Skyhawk. Maverick got delayed the next year, and that event was scaled back to a few hours in the morning. Still, the server got stressed enough back in 2006 with a lot of open connections, in that case because I was serving video myself, and I wasn't writing the kind of code that I write today. Regardless, I still wanted to build this using cloud services, as if I were expecting insane traffic. The resulting story, from a development standpoint, is wholly unremarkable, but I'll get to why that's important.

So the design criteria went something like this:

- Be able to add instances on the fly to address rising traffic conditions.

- Update in real-time with open connections, not a browser polling mechanism.

- Keep as much stuff in memory as possible.

- Serve media from a CDN or something not going through the Web site itself.

- Not spend a ton of time on it.

The first thing I did was wire up the bits on the server and client to fire up a SignalR connection, and have an admin push content to the browsers. I won't go deeper into that, because there are plenty of examples around the Internets showing how to do it with a few lines of code. Later in the process, I added the extra line of code and downloaded the package to make SignalR work through Azure Service Bus. This means that if I run three instances of the app and the admin pushes content out from one of them, the content goes via the service bus to the other instances, where other users are connected via SignalR. It's stupid easy. Add instances on the fly and make it real-timey, check.
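To make the fan-out idea concrete, here's a rough conceptual model in Python. It is not SignalR or Service Bus code, and every name in it (`Backplane`, `WebInstance`, `admin_post`) is made up for illustration. It only shows the shape of the thing: the admin talks to one instance, that instance publishes to a shared bus, and every instance relays the message to its own connected browsers.

```python
class Backplane:
    """Stand-in for the service bus: relays each message to every subscribed instance."""

    def __init__(self):
        self.instances = []

    def subscribe(self, instance):
        self.instances.append(instance)

    def publish(self, message):
        for instance in self.instances:
            instance.deliver(message)


class WebInstance:
    """One app instance holding its own set of connected browsers."""

    def __init__(self, name, backplane):
        self.name = name
        self.backplane = backplane
        self.clients = {}  # client id -> list of messages that client received
        backplane.subscribe(self)

    def connect(self, client_id):
        self.clients[client_id] = []

    def admin_post(self, message):
        # The admin is connected to this instance only. Publishing to the
        # backplane is what gets the update to the other instances.
        self.backplane.publish(message)

    def deliver(self, message):
        # Push the message to every browser connected to this instance.
        for inbox in self.clients.values():
            inbox.append(message)


bus = Backplane()
a, b, c = (WebInstance(name, bus) for name in "ABC")
a.connect("admin")
b.connect("reader-1")
c.connect("reader-2")

a.admin_post("Gates are open!")
```

After the `admin_post` call, the readers on instances B and C each have the message in their inbox, even though the admin only ever talked to instance A.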

Next, I needed a way to persist the content. Originally I toyed with using table storage for this, because it's cheaper than SQL. However, ordering in a range becomes a problem. You can take a number of entities in a timestamp range and then order them in code, but there's no guarantee you'll get that number of entities. After thinking about it, SQL is $5/month for 100 MB, and I was only going to be using it for a few days. Performance differences would likely be negligible, and since I was going to cache the heck out of everything, that mattered even less. I used SQL Azure instead.
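The thing SQL buys you here is that "give me the next n items after this time, in order" is a single query. Here's a minimal sketch of that, using Python's built-in `sqlite3` as a stand-in for SQL Azure. The table name, columns, and sample rows are all assumptions for illustration, not the app's actual schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE posts ("
    "id INTEGER PRIMARY KEY, posted_at TEXT NOT NULL, body TEXT NOT NULL)"
)
conn.execute("CREATE INDEX ix_posts_posted_at ON posts (posted_at)")

# Made-up sample rows, inserted out of order on purpose.
# ISO 8601 timestamps compare correctly as plain text.
conn.executemany(
    "INSERT INTO posts (posted_at, body) VALUES (?, ?)",
    [
        ("2013-05-09T09:10:00", "Ribbon cut"),
        ("2013-05-09T09:00:00", "Gates open"),
        ("2013-05-09T09:15:00", "First riders return"),
        ("2013-05-09T09:05:00", "First train dispatched"),
    ],
)


def page_after(conn, after, count):
    """Return up to `count` posts newer than `after`, oldest first."""
    rows = conn.execute(
        "SELECT posted_at, body FROM posts "
        "WHERE posted_at > ? ORDER BY posted_at LIMIT ?",
        (after, count),
    )
    return rows.fetchall()


page = page_after(conn, "2013-05-09T09:00:00", 2)
```

The database does both the ordering and the counting, so the caller gets exactly `count` rows whenever that many exist.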

Instead of using Azure Web Sites, I used Web roles, or "cloud services" as they're labeled in the Azure portal. These are the PaaS things that originally drew me to Azure. Sure, they're technically full-blown VMs, and you can even RDP into them, but I like the way they're intended to exist for a single purpose, maintained and updated for you. More to the point, they have Azure Cache available, which is essentially AppFabric spun up across every instance you have. So if you have two instances up, and each gives 30% of its memory to the cache, that adds up to around a gigabyte of distributed cache on these small instances, for free. Yes, please! I had my data repositories use this cache liberally. The infinite scroll functionality takes n content items after a certain date, which means different people starting up the page at different times will have different "pages" of data, but I cache those pages despite the overlap. Why not? It's cheap! Keep stuff in memory, check.
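Here's a small sketch of what caching those overlapping pages might look like. A plain dictionary stands in for the distributed cache, and `PageCache`, `load_from_database`, and the sample posts are hypothetical names and data, not the app's real repository code. Expiry and invalidation when new content arrives are left out to keep it short.

```python
class PageCache:
    """Caches each "page" of posts under the (after, count) pair that requested it."""

    def __init__(self, load_page):
        self._load_page = load_page
        self._pages = {}  # stand-in for the distributed cache
        self.misses = 0

    def get_page(self, after, count):
        key = (after, count)
        if key not in self._pages:
            # Cache miss: hit the data store once, then remember the result.
            self.misses += 1
            self._pages[key] = self._load_page(after, count)
        return self._pages[key]


# Made-up posts, already in time order.
POSTS = [
    ("09:00", "Gates open"),
    ("09:05", "First train dispatched"),
    ("09:10", "Ribbon cut"),
    ("09:15", "First riders return"),
]


def load_from_database(after, count):
    """Pretend database call: the next `count` posts after `after`."""
    newer = [post for post in POSTS if post[0] > after]
    return newer[:count]


cache = PageCache(load_from_database)
first = cache.get_page("09:00", 2)        # miss, loads two posts
again = cache.get_page("09:00", 2)        # hit, same cached page
overlapping = cache.get_page("09:05", 2)  # different key, so another miss
```

The "Ribbon cut" post ends up stored twice, once in each page. That's the overlap, and with memory this cheap it isn't worth being clever about.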

The CDN functionality is pretty easy too. I probably didn't need this at all, but why not? Again, for a day or two, given the amount of content, it's not an expensive endeavor. The Azure CDN is simply an extension of your blob storage, so there's little more to do beyond turning it on, adding a CNAME to my DNS, and off we go. CDN, check.
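On the app side, about the only change is which host name ends up in the image URLs. A minimal sketch, assuming the CDN serves blobs under the same path as the storage account does. Both host names below are placeholders, and `to_cdn_url` is a made-up helper.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical CNAME pointed at the CDN endpoint.
CDN_HOST = "media.example.com"


def to_cdn_url(blob_url, cdn_host=CDN_HOST):
    """Swap the storage host for the CDN host, keeping the rest of the URL."""
    parts = urlsplit(blob_url)
    return urlunsplit(
        (parts.scheme, cdn_host, parts.path, parts.query, parts.fragment)
    )


cdn_url = to_cdn_url("http://example.blob.core.windows.net/photos/train.jpg")
# cdn_url == "http://media.example.com/photos/train.jpg"
```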

I stole a bunch of stuff from POP Forums, too. Image resizing, the infinite scrolling, the date formatting, the time updating... it was all already there, so it was copy and paste with a few tweaks. I didn't do the page design either. My PointBuzz partner Walt did that, though granted, most of it wasn't used. Total time into this endeavor was around 10 hours. Not spend a ton of time, check.

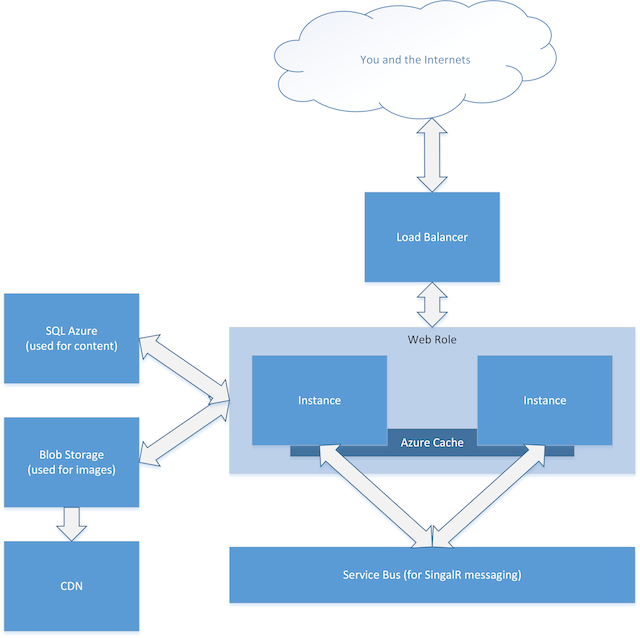

Here's the Visio diagram:

As I said, if this sounds unremarkable from a development standpoint, it is, and that's really the point. I'm whipping up and provisioning a long list of technologies without having to buy a rack full of equipment. That's awesome. Think about what this app is using:

- Two Web servers

- Distributed cache (on the Web servers, in this case)

- Database server

- A service bus

- External storage

- A CDN

For the four days or so that I'll use this stuff, it's going to cost me less than twenty bucks. This, my friends, is why cloud infrastructure and platform services get me so excited. We can build these enterprisey things with practically no money at all. Compare this to 2000, when the most cost-effective way to run a couple of quasi-popular Web sites was to get a T-1 to my house, where I ran my own box, and paid $1,200 a month for 1.5 Mbps. Things are so awesome now.
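For a rough sense of scale, here's the back-of-the-envelope math on those numbers. The 30-day month is an assumption, and it's not an apples-to-apples comparison (nobody would sell you a T-1 for four days), so treat it as illustration only.

```python
T1_MONTHLY_COST = 1200.0   # dollars per month, from the 2000 setup
T1_MBPS = 1.5              # T-1 bandwidth
EVENT_DAYS = 4             # "four days or so"
CLOUD_COST_CEILING = 20.0  # "less than twenty bucks"
DAYS_PER_MONTH = 30        # assumption for prorating

# What each Mbps of that T-1 cost per month.
cost_per_mbps = T1_MONTHLY_COST / T1_MBPS

# The T-1 bill prorated to the length of the event.
t1_cost_for_event = T1_MONTHLY_COST * EVENT_DAYS / DAYS_PER_MONTH

# How many times more the prorated T-1 costs than the cloud ceiling.
ratio = t1_cost_for_event / CLOUD_COST_CEILING

print(f"${cost_per_mbps:.0f} per Mbps per month")
print(f"${t1_cost_for_event:.0f} of T-1 for {EVENT_DAYS} days")
print(f"at least {ratio:.0f}x the cloud bill")
```

That works out to $800 per Mbps per month, about $160 of T-1 for the same four days, and at least eight times the cloud bill, before counting the box, the power, or any of the other services in the list.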

I'll let you know how it goes after the live event on May 9!