Azure: 99.95% SQL Database SLA, 500 GB DB Size, Improved Performance Self-Service Restore, and Business Continuity

Earlier this month at the Build conference, we announced a number of great new improvements coming to SQL Databases on Azure including: an improved 99.95% SLA, support for databases up to 500GB in size, self-service restore capability, and new Active Geo Replication support. This 3 minute video shows a segment of my keynote where I walked through the new capabilities:

Last week we made these new capabilities available in preview form, and also introduced new SQL Database service tiers that make it easy to take advantage of them.

New SQL Database Service Tiers

Last week we introduced new Basic and Standard tier options for SQL Databases – which are additions to the existing Premium tier we previously announced. Collectively these tiers provide a flexible set of offerings that enable you to cost-effectively deploy and host SQL Databases on Azure:

- Basic Tier: Designed for applications with a light transactional workload. Performance objectives for Basic provide a predictable hourly transaction rate.

- Standard Tier: Standard is the go-to option for cloud-designed business applications. It offers mid-level performance and business continuity features. Performance objectives for Standard deliver predictable per-minute transaction rates.

- Premium Tier: Premium is designed for mission-critical databases. It offers the highest performance levels and access to advanced business continuity features. Performance objectives for Premium deliver predictable per-second transaction rates.

You do not need to buy a SQL Server license in order to use any of these pricing tiers – all of the licensing and runtime costs are built into the price, and the databases are automatically managed (high availability, auto-patching and backups are all built-in). We also now provide you the ability to pay for the database at per-day granularity (meaning if you only run the database for a few days you only pay for the days you had it – not the entire month).

The price for the new SQL Database Basic tier starts as low as $0.16/day ($4.96 per month) for a 2 GB SQL Database. During the preview period we are providing an additional 50% discount on top of these prices. You can learn more about the pricing of the new tiers here.
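
The per-day billing arithmetic above can be sketched in a few lines of Python. This is only an illustration: the $0.16/day rate and the 50% preview discount come from this post, while the function name `database_cost`, the 31-day month (which is what the $4.96 figure implies), and the rounding to whole cents are assumptions rather than a description of how Azure actually bills.

```python
from decimal import Decimal

BASIC_DAILY_RATE = Decimal("0.16")  # USD per day for a 2 GB Basic database (from the post)
PREVIEW_DISCOUNT = Decimal("0.50")  # additional 50% off during the preview (from the post)


def database_cost(days_running, daily_rate=BASIC_DAILY_RATE, discount=Decimal("0")):
    """Hypothetical helper: cost in USD for a database that existed for `days_running` days."""
    if days_running < 0:
        raise ValueError("days_running must not be negative")
    total = daily_rate * days_running * (1 - discount)
    # Rounding to cents is an assumption made for readability.
    return total.quantize(Decimal("0.01"))


full_month = database_cost(31)                                # 31 days at the list price
three_days = database_cost(3)                                 # only pay for the days used
preview_month = database_cost(31, discount=PREVIEW_DISCOUNT)  # list price with the preview discount

print(full_month, three_days, preview_month)
```

Using `Decimal` instead of floats keeps the currency math exact, which matters once the per-day amounts are summed over many databases.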

Improved 99.95% SLA and Larger Database Sizes

We are extending the availability SLA of all of the new SQL Database tiers to be 99.95%. This SLA applies to the Basic, Standard and Premium tier options – enabling you to deploy and run SQL Databases on Azure with even more confidence.

We are also increasing the maximum sizes of databases that are supported:

- Basic Tier: Supports databases up to 2 GB in size.

- Standard Tier: Supports databases up to 250 GB in size.

- Premium Tier: Supports databases up to 500 GB in size.
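
As a rough illustration of how these limits might feed into a capacity-planning script, the sketch below picks the smallest tier whose size limit fits a given database. The limits are the ones listed above; the helper name `smallest_tier_for` is hypothetical, and the sketch deliberately ignores performance requirements, which in practice also influence the choice of tier.

```python
# Maximum database size per tier in GB, as listed in this post.
MAX_SIZE_GB = {"Basic": 2, "Standard": 250, "Premium": 500}


def smallest_tier_for(size_gb):
    """Hypothetical helper: return the smallest tier whose size limit fits `size_gb`."""
    if size_gb < 0:
        raise ValueError("size_gb must not be negative")
    # Walk the tiers from the smallest limit to the largest.
    for tier, limit in sorted(MAX_SIZE_GB.items(), key=lambda item: item[1]):
        if size_gb <= limit:
            return tier
    raise ValueError(f"{size_gb} GB exceeds the largest supported size")


print(smallest_tier_for(1.5))  # fits within the Basic limit
print(smallest_tier_for(100))  # too large for Basic, fits Standard
print(smallest_tier_for(300))  # too large for Standard, fits Premium
```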

Note that the pricing model for our service tiers has also changed so that you no longer need to pay a per-database size fee (previously we charged a per-GB rate) - instead we now charge a flat rate per service tier.

Predictable Performance Levels with Built-in Usage Reports

Within the new service tiers, we are also introducing the concept of performance levels, which are a defined level of database resources that you can depend on when choosing a tier. This enables us to provide a much more consistent performance experience that you can design your application around.

The resources of each service tier and performance level are expressed in terms of Database Throughput Units (DTUs). A DTU provides a way to describe the relative capacity of a performance level based on a blended measure of CPU, memory, and read and write rates. Doubling the DTU rating of a database equates to doubling the database resources. You can learn more about the performance levels of each service tier here.
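
To make the "doubling" statement concrete, here is a minimal sketch that compares two performance levels by their DTU ratings. The function name `relative_capacity` and the DTU values used in the example are placeholders chosen for illustration; they are not the actual ratings of any service tier.

```python
def relative_capacity(baseline_dtu, target_dtu):
    """Hypothetical helper: how many times the baseline's resources the target provides."""
    if baseline_dtu <= 0 or target_dtu <= 0:
        raise ValueError("DTU ratings must be positive")
    return target_dtu / baseline_dtu


# Placeholder ratings: a level rated at twice the DTUs offers twice the resources.
print(relative_capacity(10, 20))
```

Because a DTU is a blended, relative measure, the ratio between two ratings is the meaningful number, not the absolute value of either one.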

Monitoring your resource usage

You can now monitor the resource usage of your SQL Databases via both an API and the Azure Management Portal. Metrics include CPU, reads/writes, and memory (not available this week but coming soon). You can also track your usage as a percentage of the available DTU resources within your service tier level:

Dynamically Adjusting your Service Tier

One of the benefits of the new SQL Database Service Tiers is that you can dynamically increase or decrease them depending on the needs of your application. For example, you can start off on a lower service tier/performance level and then gradually increase the service tier levels as your application becomes popular and you need more resources.

It is quick and easy to change between service tiers or performance levels – it’s a simple online operation. Because you now pay for SQL Databases by the day (as opposed to the month), this ability to dynamically adjust your service tier up or down also enables you to leverage the elastic nature of the cloud and save money.

Read this article to learn more about how performance works in the new system and the benchmarks for each service tier.

New Self-Service Restore Support

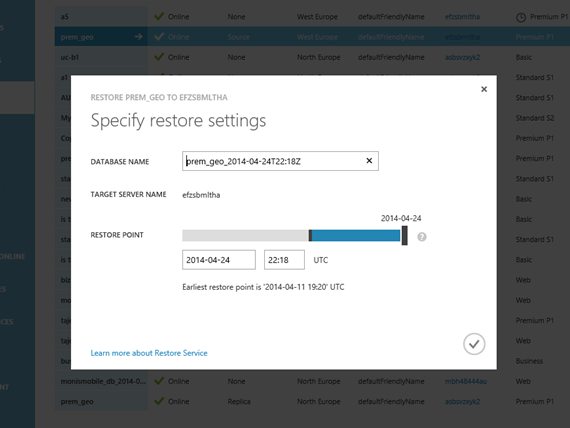

Have you ever had that sickening feeling when you’ve realized that you inadvertently deleted data within a database and might not have a backup? We now have built-in Self-Service Restore support with SQL Databases that helps you protect against this. This support is available in all service tiers (even the Basic Tier).

SQL Database now automatically takes database backups daily and log backups every 5 minutes. The daily backups are also stored in geo-replicated Azure Storage (which will store a copy of them at least 500 miles away from your primary region).

Using the new self-service restore functionality, you can now restore your database to a point in time in the past as defined by the specified backup retention policies of your service tier:

- Basic Tier: Restore from most recent daily backup

- Standard Tier: Restore to any point in last 7 days

- Premium Tier: Restore to any point in last 35 days
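
The retention rules above can be sketched as a simple check of whether a requested restore point falls inside a tier's window. The 7-day and 35-day windows come from the list above; the function name `is_point_in_time_restorable` is hypothetical, and the sketch ignores the 5-minute log backup interval, which may mean the most recent few minutes are not yet restorable.

```python
from datetime import datetime, timedelta

# Point-in-time restore windows per tier, from this post.
# Basic restores only from the most recent daily backup, so it has no window.
POINT_IN_TIME_WINDOW = {
    "Basic": None,
    "Standard": timedelta(days=7),
    "Premium": timedelta(days=35),
}


def is_point_in_time_restorable(tier, target, now):
    """Hypothetical helper: can `tier` restore to the arbitrary moment `target`?"""
    if tier not in POINT_IN_TIME_WINDOW:
        raise ValueError(f"unknown tier: {tier}")
    window = POINT_IN_TIME_WINDOW[tier]
    if window is None:
        return False  # only the latest daily backup is available
    return now - window <= target <= now


now = datetime(2014, 5, 1, 12, 0)
ten_days_ago = now - timedelta(days=10)
print(is_point_in_time_restorable("Standard", ten_days_ago, now))  # outside the 7-day window
print(is_point_in_time_restorable("Premium", ten_days_ago, now))   # inside the 35-day window
```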

Restores can be accomplished using either an API we provide or via the Azure Management Portal:

New Active Geo-replication Support

For Premium Tier databases, we are also adding support that enables you to create up to 4 readable secondary databases in any Azure region. When active geo-replication is enabled, we will ensure that all transactions committed to the database in your primary region are continuously replicated to the databases in the other regions as well:

One of the primary benefits of active geo-replication is that it provides application control over disaster recovery at a database level. Having cross-region redundancy enables your applications to recover in the event of a disaster (e.g. a natural disaster).

The new active geo-replication support enables you to initiate/control any failovers – allowing you to shift the primary database to any of your secondary regions:

This provides a robust business continuity offering, and enables you to run mission critical solutions in the cloud with confidence. You can learn more about this support here.

Start Using the Preview of All of the Above Features Today!

All of the above features are now available to start using in preview form.

You can sign up for the preview by visiting our Preview center and clicking the “Try Now” button on the “New Service Tiers for SQL Databases” option. You can then choose which Azure subscription you wish to enable them for. Once enabled, you can immediately start creating new Basic, Standard or Premium SQL Databases.

Summary

This update of SQL Database support on Azure provides some great new features that enable you to build even better cloud solutions. If you don’t already have an Azure account, you can sign up for a free trial and start using all of the above features today. Then visit the Azure Developer Center to learn more about how to build apps with it.

Hope this helps,

Scott

P.S. In addition to blogging, I am also now using Twitter for quick updates and to share links. Follow me at: twitter.com/scottgu