Azure: Machine Learning Service, Hadoop Storm, Cluster Scaling, Linux Support, Site Recovery and More

Today we released a number of great enhancements to Microsoft Azure. These include:

- Machine Learning: General Availability of the Azure Machine Learning Service

- Hadoop: General Availability of Apache Storm Support, Hadoop 2.6 support, Cluster Scaling, Node Size Selection and a preview of Linux OS support

- Site Recovery: General Availability of DR capabilities with SAN arrays

I've also included details in this blog post of other great Azure features that went live earlier this month:

- SQL Database: General Availability of SQL Database (V12)

- Web Sites: Support for Slot Settings

- API Management: New Premium Tier

- DocumentDB: New Asia and US Regions, SQL Parameterization and Increased Account Limits

- Search: Portal Enhancements, Suggestions & Scoring, New Regions

- Media: General Availability of Content Protection Service for Azure Media Services

- Management: General Availability of the Azure Resource Manager

All of these improvements are now available to use immediately (note that some features are still in preview). Below are more details about them:

Machine Learning: General Availability of Azure ML Service

Today, I’m excited to announce the General Availability of our Azure Machine Learning service. The Azure Machine Learning Service is a powerful cloud-based predictive analytics service that makes it possible to quickly create analytics solutions. It is a fully managed service - which means you do not need to buy any hardware or manage VMs manually.

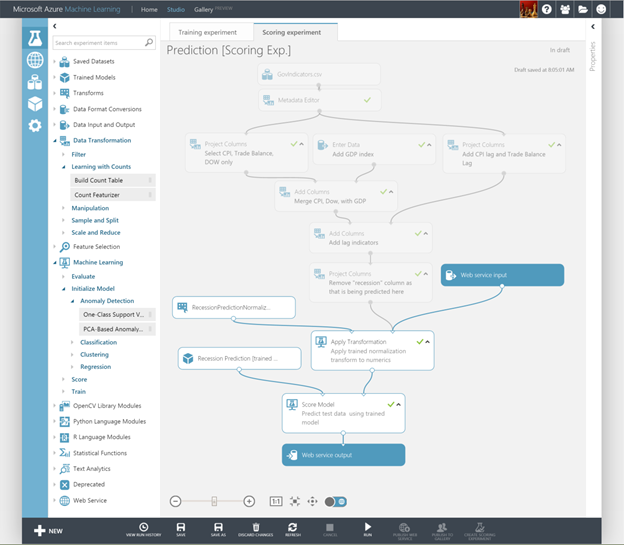

Data Scientists and Developers can use our innovative browser-based machine learning IDE to quickly create and automate machine learning workflows. You can literally drag/drop hundreds of existing ML libraries to jump-start your predictive analytics solutions, and then optionally add your own custom R and Python scripts to extend them. Our Machine Learning IDE works in any browser and enables you to rapidly develop and iterate on solutions.

With today's General Availability release you can easily discover and create web services, train/retrain your models through APIs, manage endpoints and scale web services on a per customer basis, and configure diagnostics for service monitoring and debugging. Additional new capabilities with today's release include:

- The ability to create a configurable custom R module, incorporate your own train/predict R scripts, and add Python scripts using a large ecosystem of libraries such as numpy, scipy, pandas, and scikit-learn. You can now train on terabytes of data using “Learning with Counts”, use PCA or one-class SVM for anomaly detection, and easily modify, filter, and clean data using familiar SQLite.

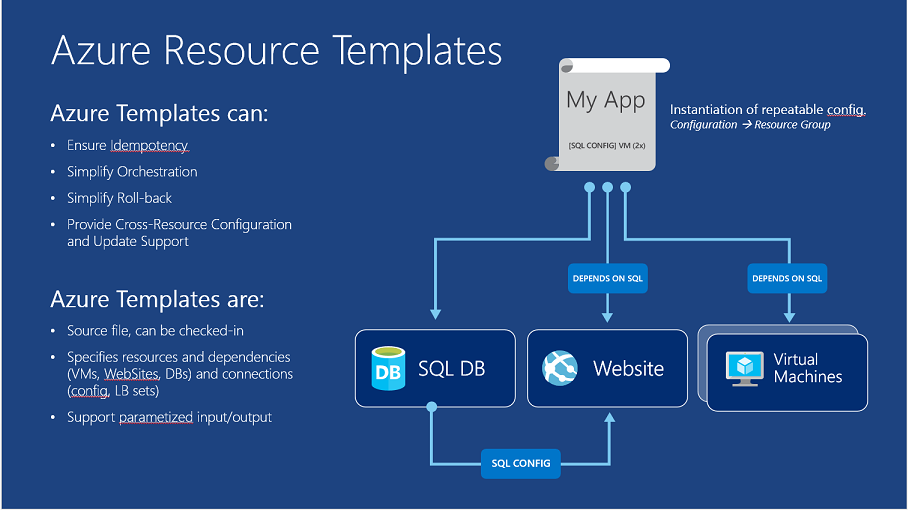

- An Azure ML Community Gallery that allows you to discover and learn from experiments, and share them through Twitter and LinkedIn. You can purchase marketplace apps through an Azure subscription and consume finished web services for Recommendation, Text Analytics, and Anomaly Detection directly from the Azure Marketplace.

- A step-by-step guide for the Data Science journey from raw data to a consumable web service to ease the path for cloud-based data science. We have added the ability to use popular tools such as IPython Notebook and Python Tools for Visual Studio along with Azure ML.

Get Started

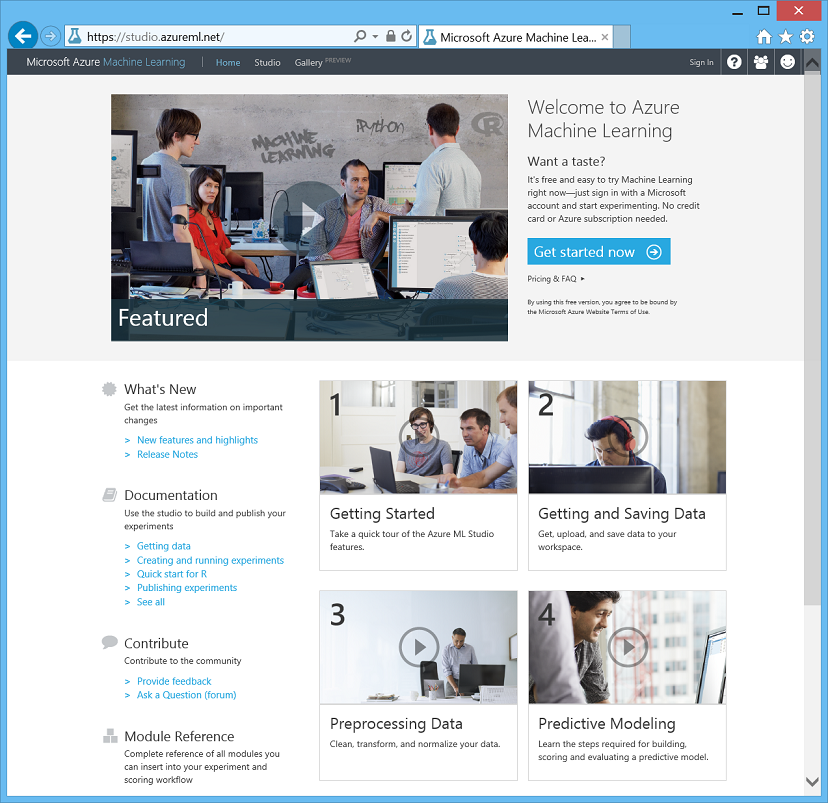

You can learn the basics of predictive analytics and machine learning using our step-by-step data science guide and tutorials. No sign-up or credit card is required to get started using Azure Machine Learning (you can use the machine learning IDE and try experiments for free).

Also browse our machine learning gallery to run existing machine learning experiments others have already built - and optionally publish your own experiments for others to learn from.

Machine Learning and predictive analytics will fundamentally change the way all applications are built in the future. The new Azure Machine Learning service provides an incredibly powerful and easy way to achieve this. Start using it for production apps today!

HDInsight: General Availability of Apache Storm, Cluster Scaling, Hadoop 2.6, Node Sizes, and Preview of HDInsight on Linux

Today I’m happy to also announce several major enhancements to HDInsight, our managed Hadoop service for powering Big Data workloads in Azure.

General Availability of Apache Storm support

With today's release, we are making it easy for you to do real-time streaming analytics using Hadoop by providing Apache Storm as a fully managed service and making it generally available on HDInsight. This makes it incredibly easy to stand up and manage Storm clusters. As part of the Storm service on HDInsight we have improved productivity by enabling some key features:

- Integration with our Azure Event Hubs service - which allows you to easily process any data that is collected via Event Hubs

- First class .NET experience on top of Apache Storm giving you the option to use both Java and .NET with it

- A library of spouts and bolts that lets you easily integrate with other Azure services like SQL, HBase and DocumentDB

- Visual Studio integration that makes it easy for developers to do full project management from within the Visual Studio environment

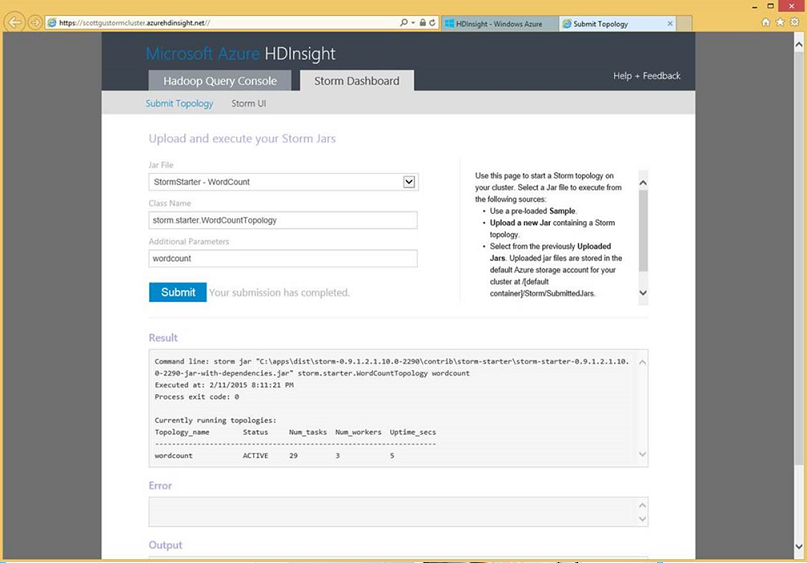

Creating a Storm cluster and running a sample topology

You can easily spin up a new Storm cluster from the Azure management portal. The Storm Dashboard allows you to either upload an existing Storm topology or pick one of the sample topologies from the dropdown. Topologies can be authored in code, or higher level programming models like Trident can be used. You can also monitor and manage all the topologies that are currently on your cluster via the Storm Dashboard.

.NET Topologies and a Visual Studio Experience

One of the big improvements we have made on top of Storm is to enable developers to write Storm topologies in .NET. One of the things I am particularly excited about with the Storm release is the Visual Studio experience that we have enabled for Storm on HDInsight. With the latest version of the Azure SDK, you will get Storm project templates under HDInsight. This will quickly get you started with writing Storm topologies without having to worry about setting up the right references or writing the skeleton code that is needed for every Storm topology.

Since Storm is available as part of the HDInsight service, all HDInsight features also apply to Storm clusters. For example, you can easily scale up or scale down a Storm cluster with no impact to the existing running topologies. This will enable you to easily grow or shrink Storm clusters depending on the data ingestion rate and latency requirements with no impact on the data which is being processed. At the time of the cluster creation you have the choice to pick from a long list of available VMs to use for your Storm cluster on HDInsight.

HDInsight 3.2 Support

I’m pleased to announce the availability of the next major version of Hadoop in HDInsight clusters for Windows and Linux. This includes Hadoop 2.6, Hive 0.14, and substantial updates to all of the components in the stack. Hive 0.14 contains work to improve performance and scalability through Tez, adds a powerful cost based optimizer, and introduces capabilities for handling UPDATE, INSERT and DELETE SQL statements, temporary tables which live for the duration of a development session and more. You can find more details on the Hive 0.14 release here. Pig 0.14 adds support for ORC, allowing a single high performance format to be leveraged across Pig and Hive. Additionally Pig can now target Tez instead of Map/Reduce, resulting in substantial performance improvements by changing the execution engine. Details on the Pig 0.14 release are here. These bring the latest improvements in the open source ecosystem to HDInsight.

To get started with a 3.2 cluster, use the Azure Management portal or the command-line. In addition to the VS tools for Storm, we've also updated the VS tools to include Hive query authoring. We've also added improved statement completion, local validation, access in Visual Studio to the YARN task logs, and support for HDInsight clusters on Linux. In order to get these, you just need to install the Azure SDK for Visual Studio which contains the latest HDInsight tooling.

Cluster Scaling

Many of our customers have asked for the ability to change HDInsight cluster sizes on the fly. This capability is now accessible both in the Azure portal and through the command line and SDKs. You can grow or shrink a Hadoop cluster to fit your workload by simply dragging the sizing slider. We'll add more nodes to your cluster while it is processing and when your larger jobs are done, you can reduce the size of the cluster. If you need more cores available in your subscription, you can open a Billing support ticket to request a larger quota.

Node Size Selection

Finally, you can also now specify the VM sizes for the nodes within your HDInsight cluster. This lets you optimize your cluster's resources to fit your workload. We've made the entire A and D series of VM sizes available. For each of the different types of roles within a cluster, we'll let you specify the machine type. This allows you to tune the amount of CPU, RAM and SSD available to your jobs.

HDInsight on Linux

Today we are also releasing a preview version of our HDInsight service that allows you to deploy HDInsight clusters using Ubuntu Linux containers. This expands the operating system options you can use when running managed Hadoop workloads on Azure (previously HDInsight only supported Windows Server containers).

The new Linux support enables you to easily use familiar tools like SSH and Ambari to build Big Data workloads in Azure. HDInsight on Linux clusters are built on the same Hadoop distribution as the Windows clusters, are fully integrated with Azure storage, and make it easy for customers leveraging Hadoop to take advantage of the SLA, management and support that HDInsight offers. To get started, sign up for the preview here. You can then easily create Linux clusters using the Azure Management Portal or via our command-line interfaces.

SSH connectivity to your HDInsight clusters is enabled by default for all HDInsight on Linux clusters. You can use an SSH client of your choice to connect to the cluster. Additionally, SSH tunneling can be leveraged for forwarding traffic from your browser to all of the Hadoop web applications.

Learn More

For more information about Azure HDInsight, see the Azure HDInsight documentation.

Site Recovery: General Availability of Enterprise DR with SANs

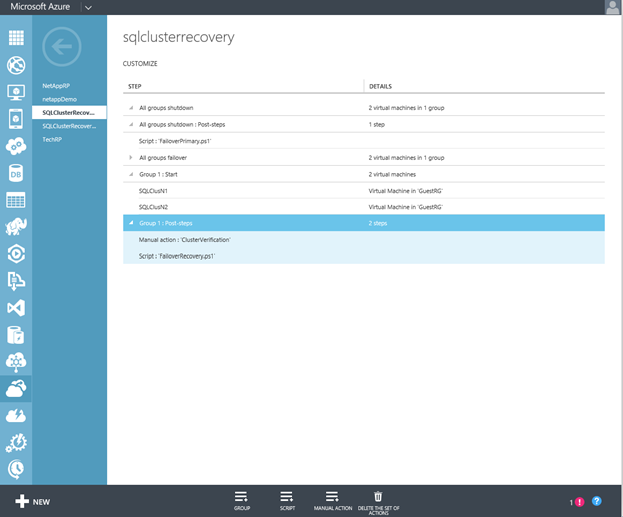

With today’s Azure release, we are also adding another significant capability to Azure Site Recovery’s disaster recovery and replication portfolio. Enterprises that seek to leverage their Storage Area Network (SAN) Arrays to enable high performance synchronous and asynchronous replication across their on-premises Hyper-V private clouds can now orchestrate end-to-end storage array-based replication and disaster recovery with Azure Site Recovery and System Center Virtual Machine Manager (SCVMM).

The addition of SAN as a replication channel enables key scenarios such as Synchronous Replication, Multi-VM Consistency, and support for Guest Clusters with Azure Site Recovery. With support for Shared VHDX and iSCSI Target LUNs, ASR will now be able to better meet the needs of enterprise-class applications such as SQL Server, SharePoint, and SAP.

To enable SAN Replication, in the Azure Management Portal select SAN when configuring SCVMM clouds in ASR. ASR in turn validates that the cloud being configured has host clusters that have been correctly zoned to a Storage Array, either via Fibre Channel or iSCSI. Once the cloud configuration is complete and the storage pools have been mapped, Replication Groups (groups of storage LUNs that replicate together and thereby enable multi-VM replication consistency) can be enabled for replication. ASR automates the creation of target LUNs and target Replication Groups, and starts the array-based replication.

Here’s an example of a Recovery Plan that can fail over a SQL Guest Cluster deployed on a Replication Group:

Learn More

Visit the Azure Site Recovery forum on MSDN for additional information.

Getting started with Azure Site Recovery is easy - all you need to do is sign up for a free Microsoft Azure trial.

SQL Database: General Availability of SQL Database (V12)

Earlier this month we released the general availability version of our SQL Database (V12) service. We introduced a preview of this new release last December, and it includes a ton of new capabilities. These include:

- Better management of large databases: We now support heavier database workloads with parallel queries, table partitioning, online indexing, worry-free large index rebuilds with the previous 2GB size limit removed, and more ALTER DATABASE commands.

- Support for more programmability capabilities: You can now build even more robust applications with CLR, T-SQL window functions, XML indexes, and change tracking support.

- Up to 100x performance improvements with support for in-memory columnstore queries for data mart and analytic workloads.

- Improved monitoring and troubleshooting: Extended Events (XEvents) and visibility into over 100 new table views via an expanded set of Dynamic Management Views (DMVs).

- New S3 performance level: This release introduces a new pricing option for SQL Databases. The new "S3" performance tier delivers 100 DTU of performance (twice the DTU level of the existing S2 tier) and all of the features available in the Standard tier. It enables an even more cost effective way to run applications with higher performance needs.

You can now take advantage of all of these features in general availability - with all databases backed by an enterprise grade SLA.

Upcoming Security Features

I'm also excited to announce a number of new security features that will start rolling out this month and this spring. These features will help customers better protect their cloud data and help further meet corporate and industry compliance policies. These security enhancements include:

- Row-Level Security

- Dynamic Data Masking

- Transparent Data Encryption

Row-Level Security is available in preview today. It lets you implement fine-grained access control over rows in a database table, giving you greater control over which users can access which data.

Coming soon, SQL Database will introduce Dynamic Data Masking which is a policy-based security feature that helps limit the exposure of data in a database by returning masked data to non-privileged users who run queries over designated database fields, like credit card numbers, without changing data on the database. Finally, Transparent Data Encryption is coming soon to SQL Database V12 databases for encryption at rest on all databases.

Stay tuned over the coming months for details as we continue to roll out the V12 service general availability and upcoming security features.

Web Sites: Support for Slot Settings

The Azure Web Sites service has always provided the ability to store application settings and connection strings as a part of your Web Site’s metadata. Those settings become available at runtime via environment variables and, if you use .NET, the standard configuration manager API. This feature has now been updated to work better with another Web Sites feature: deployment slots.

Deployment slots provide an easy way for you to safely deploy and test new releases of your web applications prior to swapping them live into production. Let’s say you have a website called mysite.azurewebsites.net with a deployment slot at mysite-staging.azurewebsites.net. You can swap these slots at any given time, and with no downtime. This provides a nice infrastructure for upgrading your website. Until now, when you swapped the staging slot with the production site, all settings and connection strings would swap as well. Sometimes that’s exactly what you want and it works great.

But what if, for testing purposes, your site uses a database and you explicitly want each slot to have its own database (e.g. a production database and a testing database)? Prior to this month's release that would have been difficult to automate since the swap operation would move the staging connection string to the production site and vice versa. You would have to do something unnatural like going to the staging slot and manually updating the settings to the production values before performing the swap operation. Then, you would execute the swap, and finally manually update the staging settings to point to the staging database. That workflow is very complicated and error prone.

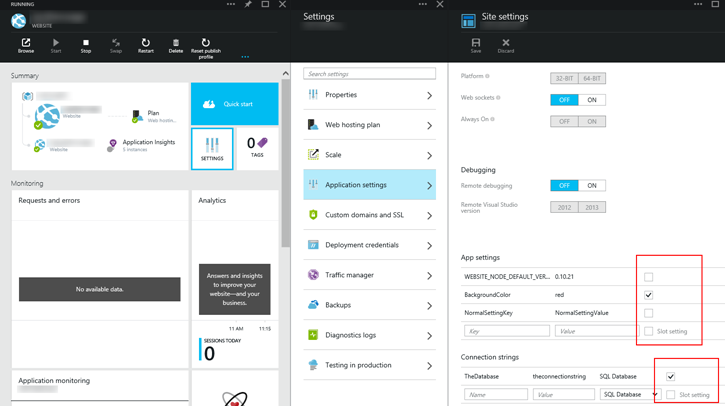

New Slot Settings Support

Slot specific settings are the solution to this problem. Simply go to the Azure Preview Portal, navigate to your Web Site’s Settings page, and you’ll see a new checkbox next to each app setting and connection string. Check the box next to each app setting and/or connection string that should not participate in swap operations. Each deployment slot has its own version of this settings page where you can go and enter the slot specific setting values. You now have a lot more flexibility when it comes to managing deployment slots and flowing configuration between them during swaps.
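Because slot settings surface at runtime like any other app setting or connection string, application code can stay identical across slots and simply read whatever value the current slot provides. Here is a minimal Python sketch of that idea. The `FeatureFlag` and `AppDb` names are hypothetical, and the `APPSETTING_` and `*CONNSTR_` environment variable prefixes are an assumption about how the platform exposes these values, so check them against your own site before relying on them.

```python
import os

# Assumed prefixes under which connection strings are exposed as environment variables.
CONNECTION_PREFIXES = ("SQLAZURECONNSTR_", "SQLCONNSTR_", "MYSQLCONNSTR_", "CUSTOMCONNSTR_")


def read_app_setting(name, environ=os.environ, default=None):
    """Return an app setting, preferring the prefixed variable, then the bare name."""
    return environ.get("APPSETTING_" + name, environ.get(name, default))


def read_connection_string(name, environ=os.environ):
    """Return the first connection string found under any of the assumed prefixes."""
    for prefix in CONNECTION_PREFIXES:
        value = environ.get(prefix + name)
        if value is not None:
            return value
    return None


if __name__ == "__main__":
    # Hypothetical names; each slot would return its own slot specific value.
    print(read_app_setting("FeatureFlag", default="off"))
    print(read_connection_string("AppDb"))
```

With the connection string marked as a slot setting, the staging slot keeps returning the staging database value from `read_connection_string` before and after a swap, and production keeps its own.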

API Management: New Premium Tier

Earlier this month we released a preview of our new Premium Tier for our API Management Service. The Azure API Management Service provides a great offering that helps customers expose web-based APIs to customers - and provides support for API protection via rate-limiting, quotas and keys, detailed analytics, easy developer on-boarding and much more.

As the strategic value of APIs increases, customers are demanding even more performance, higher availability and more enterprise-grade features. In response we're delighted to introduce a new Premium tier of API Management which will offer a 99.95% SLA after preview and includes a number of key new features:

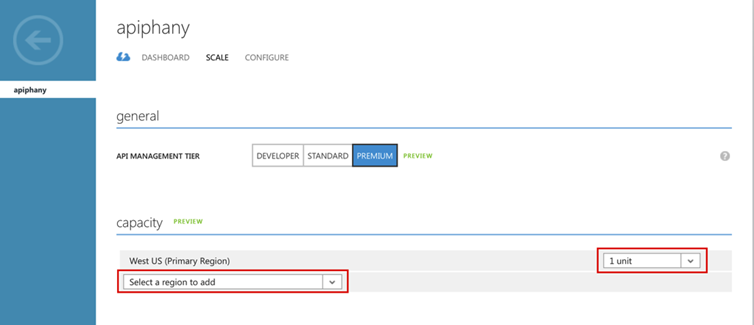

Multiple Geography Deployment

Until now each API Management service resided in a single region selected when the service is created. I’m pleased to announce the introduction of a new multi-region deployment feature that allows API publishers to easily distribute a single API Management service across any number of Azure regions. Customers who want to reduce latency for distributed API consumers and require extremely high availability can now enable multi-geo with minimal configuration.

Premium tier customers will now see an updated capacity section on the scale tab of the Azure Management portal. Additional units and regions can be added with a few clicks of the relevant dropdown controls and API Management will provision additional proxies beyond the primary region in a matter of minutes.

Multi-geo is particularly effective when combined with the API Management caching policy, which can provide a CDN-like capability for your mission critical and performance sensitive APIs. For more information on multiple-geography deployment, check out the documentation.

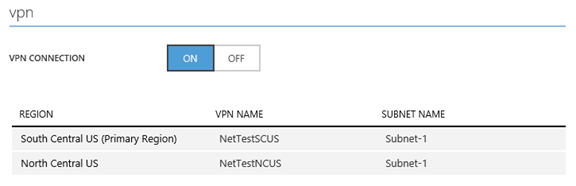

Azure Virtual Network / VPN integration

Many customers are already managing their on-premises APIs using API Management's mutual certificate authentication to secure their backend. The new Premium offering introduces a great new capability for organizations that prefer to use a VPN solution or want to leverage their Azure ExpressRoute connection. Available in the Premium Tier, VPN connectivity settings are available on the configure tab of the Azure Management Portal and can even be combined with multi-geo, with a separate VPN for each region. More information is available in the documentation.

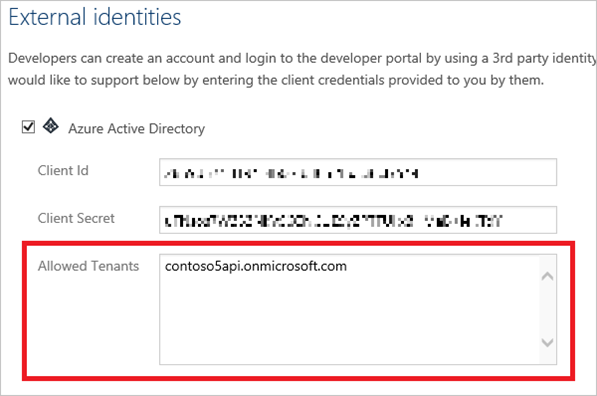

Active Directory Integration

Prior to today’s release, API Management's developer portal allowed developers to self-serve sign up using a custom account created with their e-mail address or using popular social identity providers like Facebook, Twitter, Google and Microsoft account. Sometimes businesses and enterprises want more control and would like to restrict sign in options, often preferring Azure Active Directory.

With our latest release, we now allow you to configure Azure Active Directory as an identity provider for Azure API Management. Administrators can disable all other identity providers and restrict access to APIs and documentation based on AD group membership. What's more, access can be extended to allow multiple AAD tenants to access your developer portal, making it even easier to share your APIs with business partners.

Learning More

Check out the Azure Active Directory documentation for more information on the integration, and the pricing page for more information on the new premium tier.

DocumentDB: New Asia and US Regions, SQL Parameterization and Increased Account Limits

Earlier this month we released the following new features and capabilities in our Azure DocumentDB service - which provides a fully managed NoSQL JSON database service:

- New regional availability

- Larger accounts and documents: Increased the number of capacity units per account and doubled the supported document size

- SQL parameterization: Support for handling and escaping user input, preventing accidental exposure of data

New Regions

We have added new support for provisioning DocumentDB accounts in the East Asia, Southeast Asia, and US East Azure regions (in addition to our existing US West, North Europe and West Europe regions). We’ll continue to invest in regional expansion in order to give you the flexibility and choice you need when deciding where to locate your DocumentDB data.

Larger Accounts and Documents

Throughout the preview process we’ve steadily increased the maximum document and database sizes. With this month's release we've increased the maximum size of an individual document from 256KB to 512KB. The Capacity Unit (CU) limit per DocumentDB Account has also been raised from 5 to 50, which means you can now scale a single DocumentDB account to 500GB of storage and 100,000 Request Units of provisioned throughput. As always, our preview quotas can be adjusted on a per account basis - contact us if you have a need for increased capacity.

SQL Parameterization

Instead of inventing a new query language, DocumentDB supports querying documents using SQL (Structured Query Language) over hierarchical JSON documents. We are pleased to announce that we have extended our SQL query capabilities by adding support for parameterized SQL queries in the Azure DocumentDB REST API and SDKs. Parameterized SQL provides robust handling and escaping of user input, preventing accidental exposure of data through “SQL injection”.

Let’s take a look at a sample using the .NET SDK. In addition to plain SQL strings and LINQ expressions, we’ve added a new SqlQuerySpec class that can be used to build parameterized queries. Here’s a sample that queries a “Books” collection with a single user supplied parameter for author name:

    IQueryable<Book> queryable = client.CreateDocumentQuery<Book>(
        collectionSelfLink,
        new SqlQuerySpec {
            QueryText = "SELECT * FROM books b WHERE (b.Author.Name = @name)",
            Parameters = new SqlParameterCollection() {
                new SqlParameter("@name", "Herman Melville")
            }
        });

Note:

- SQL parameters in DocumentDB use the familiar @ notation borrowed from T-SQL

- Parameter values can be any valid JSON (strings, numbers, Booleans, null, even arrays or nested JSON)

- Since DocumentDB is schema-less, parameters are not validated against any type

- You could just as easily supply additional parameters by adding additional SqlParameters to the SqlParameterCollection

The DocumentDB REST API also natively supports parameterization. The .NET sample shown above translates to the following REST API call. To use parameterized queries, you need to specify the Content-Type Header as application/query+json and the query as JSON in the body, as shown below.

    POST https://contosomarketing.documents.azure.com/dbs/XP0mAA==/colls/XP0mAJ3H-AA=/docs HTTP/1.1
    x-ms-documentdb-isquery: True
    x-ms-date: Mon, 18 Aug 2014 13:05:49 GMT
    authorization: type%3dmaster%26ver%3d1.0%26sig%3dkOU%2bBn2vkvIlHypfE8AA5fulpn8zKjLwdrxBqyg0YGQ%3d
    x-ms-version: 2014-08-21
    Accept: application/json
    Content-Type: application/query+json
    Host: contosomarketing.documents.azure.com
    Content-Length: 50

    {
        "query": "SELECT * FROM books b WHERE (b.Author.Name = @name)",
        "parameters": [
            {"name": "@name", "value": "Herman Melville"}
        ]
    }
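If you are calling the REST API from a script, the request body can be assembled with nothing more than a JSON serializer. The Python sketch below is one way to do it, using only the headers and body shape from the sample request above. The helper names are hypothetical, and the `authorization` and `x-ms-date` headers are deliberately left out because computing them is outside the scope of this sample.

```python
import json


def build_query_body(query_text, parameters):
    """Serialize a parameterized query into the JSON shape used by the REST sample."""
    return json.dumps({
        "query": query_text,
        "parameters": [
            {"name": name, "value": value} for name, value in parameters.items()
        ],
    })


def build_query_headers(body):
    """Return the query-specific headers; auth and date headers are not included here."""
    return {
        "x-ms-documentdb-isquery": "True",
        "Accept": "application/json",
        "Content-Type": "application/query+json",
        "Content-Length": str(len(body.encode("utf-8"))),
    }


QUERY = "SELECT * FROM books b WHERE (b.Author.Name = @name)"

# User input travels only as a parameter value and is never spliced into the query text.
user_input = "Melville' OR 1=1"
body = build_query_body(QUERY, {"@name": user_input})
headers = build_query_headers(body)
print(body)
```

The point of the design is that the query text stays constant no matter what the user types, so quotes in the input cannot change the meaning of the query.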

Queries can be issued against document collections, as well as system metadata collections like Databases, DocumentCollections, and Attachments using the approach shown above. To try this out, download the latest build of the DocumentDB SDK on any of the supported platforms (.NET, Java, Node.js, JavaScript, or Python).

As always, we’d love to hear from you about the DocumentDB features and experiences you would find most valuable. Submit your suggestions on the Microsoft Azure DocumentDB feedback forum.

Search: Portal Enhancements, Suggestions & Scoring, New Regions

Earlier this month we released a bunch of great enhancements to our Azure Search service. Azure Search provides developers with all of the features needed to build out search experiences for web and mobile applications without having to deal with the typical complexities that come with managing, tuning and scaling a large search service.

Azure Portal Enhancements

Last month we added the ability to create and manage your search indexes from the Azure Preview Portal. Since then, you have told us that this has really helped to speed up development as it greatly reduced the amount of code required, but we also heard that you needed more. As a result, we extended the portal by adding the ability to add Scoring Profiles as well as configure Cross Origin Resource Sharing from the portal.

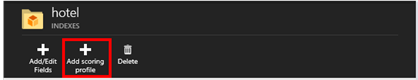

Portal Support of Scoring Profiles

Scoring Profiles boost items up in the search results based on different factors that you control. For example, I have a hotels index and, all other things being equal, I want highly rated hotels close to the user’s current location to appear at the top of the user’s search results. To do this, in the Azure Preview Portal, choose Add Scoring Profile and provide a name for it. In this case I am going to call it “closeToUser”. You can create one or more scoring profiles and refer to them by name in the search request, allowing you to provide different search results based on different use cases.

Once closeToUser has been created, I can start adding weights and functions. For example, in this scoring profile, I chose to add:

- Weighting: Use hotelName as a weighted field, such that if the search term is found in the hotelName, it gets a weighted boost

- Distance: Leverage the spatial capabilities of Azure Search to boost a hotel if it is found to be closer to the user’s specified location

- Magnitude: Provide a boost to the hotels that have higher ratings

All of these functions and weights are then combined into a final score that is used to rank documents.
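For readers who would rather script this than click through the portal, here is a rough Python sketch of how a profile like closeToUser might be expressed as part of an index definition. The property names follow the scoring profile schema of the Azure Search REST API as best understood at the time of writing and should be checked against the MSDN documentation. The `location` and `rating` field names, the `currentLocation` parameter name, and every boost value are hypothetical; only `hotelName` and `closeToUser` come from the walkthrough above.

```python
import json

# Hypothetical values throughout, apart from the profile name and hotelName.
close_to_user = {
    "name": "closeToUser",
    # Weighting: a match in hotelName counts more than a match elsewhere.
    "text": {"weights": {"hotelName": 5}},
    "functions": [
        {
            # Distance: boost hotels near a location supplied with the search request.
            "type": "distance",
            "fieldName": "location",
            "boost": 2,
            "interpolation": "linear",
            "distance": {
                "referencePointParameter": "currentLocation",
                "boostingDistance": 10,
            },
        },
        {
            # Magnitude: boost hotels with higher ratings.
            "type": "magnitude",
            "fieldName": "rating",
            "boost": 2,
            "interpolation": "linear",
            "magnitude": {"boostingRangeStart": 1, "boostingRangeEnd": 5},
        },
    ],
}


def scoring_profiles_fragment(*profiles):
    """Return the scoringProfiles portion of an index definition as JSON text."""
    return json.dumps({"scoringProfiles": list(profiles)}, indent=2)


print(scoring_profiles_fragment(close_to_user))
```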

Scoring can often be tricky, and it tends to be mixed in with the rest of the query. With Azure Search, the scoring profiles experience has been simplified and profiles are separated from search queries, so the scoring model stays outside of application code and can be updated independently. In addition, these scoring profiles are modeled as a set of high-level scoring functions combined with a way to do the typical field weights, making editing and maintenance of scoring much simpler.

As demonstrated above, this user experience requires no coding and you can simply choose the fields that are important and apply the function or weight that makes the most sense. It is important to note that scoring profiles are a method of boosting the relevance of a document and should not be confused with sorting. There are a number of other functions available which you can learn more about in the MSDN documentation.

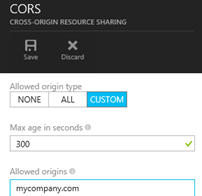

Cross Origin Resource Sharing (CORS)

Web Browsers commonly apply a same-origin restriction policy to network requests, preventing client-side web applications from issuing requests to another domain for security reasons. For example, JavaScript code that came from http://www.contoso.com could not issue a request to another domain such as http://www.northwindtraders.com. For Azure Search developers, this is important in cases where all the data is already publicly accessible and they want to save on latency by going straight to the search index from mobile devices or a browser.

CORS is a method that allows you to relax this restriction in a controlled way so you don’t compromise security. Azure Search uses CORS to allow JavaScript code inside browsers to make search requests directly to the Azure Search service and eliminate the need to proxy all requests through the originating server. We now offer the ability to configure CORS from the Azure Preview Portal, allowing you to easily enable cross-domain access and limit it to specific origins. This can be done from the index management portion of your search service.
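To make the mechanics a little more concrete, here is a conceptual Python sketch of the decision a CORS-enabled service makes for each request: compare the browser's `Origin` header against the configured list and, only if it matches, return a header telling the browser the response may be read. This illustrates the general CORS mechanism and is not the Azure Search implementation; the function name and the origins are made up for the example.

```python
def cors_headers(request_origin, allowed_origins):
    """Return the CORS response headers for a request, or {} if the origin is not allowed."""
    if "*" in allowed_origins:
        # Wildcard: any origin may read the response.
        return {"Access-Control-Allow-Origin": "*"}
    if request_origin in allowed_origins:
        # Echo the specific origin and tell caches the response varies by Origin.
        return {"Access-Control-Allow-Origin": request_origin, "Vary": "Origin"}
    # No header means the browser will block the calling script from reading the response.
    return {}


allowed = ["http://www.contoso.com"]
print(cors_headers("http://www.contoso.com", allowed))
print(cors_headers("http://www.northwindtraders.com", allowed))
```

Limiting the list to the specific origins that host your pages, rather than using a wildcard, is what keeps the relaxation controlled.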

Tag Boosting

As discussed with Scoring Profiles, there are many examples of where you may want to boost certain relevant items. To this end, we have also introduced a new and highly requested function to our set of scoring profile functions called Tag Boosting. This feature is currently part of our experimental API version, made available to you so you can test and provide feedback on these potential new features.

Tag Boosting allows you to boost documents that have tags in common with the search query. The tags for the search query are provided as a scoring parameter in each search request, and any document that contains these terms gets a boost. This capability is not only helpful for search result customization, but can also be used for cases where you have specific items you want to promote. As an example, during a sporting event, a retailer might want to promote items that are related to the teams participating in that sporting event.
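The idea is easy to picture with a toy model. The Python sketch below multiplies a document's base relevance score by a boost factor when the document shares at least one tag with the request. It is purely illustrative: the actual scoring math used by Azure Search is not described here, and the field names, scores and boost factor are all invented for the example.

```python
def tag_boost(doc_tags, query_tags, boost=2.0):
    """Return the boost factor if the document shares any tag with the request, else 1.0."""
    return boost if set(doc_tags) & set(query_tags) else 1.0


def rank(documents, query_tags, boost=2.0):
    """Order documents by base score multiplied by their tag boost, highest first."""
    return sorted(
        documents,
        key=lambda doc: doc["base_score"] * tag_boost(doc["tags"], query_tags, boost),
        reverse=True,
    )


catalog = [
    {"id": "running-shoes", "base_score": 1.0, "tags": ["shoes"]},
    {"id": "team-jersey", "base_score": 0.8, "tags": ["teamA", "jersey"]},
]

# During the event the retailer passes the participating team as a tag.
for doc in rank(catalog, query_tags=["teamA"]):
    print(doc["id"])
```

Without the tag the running shoes rank first on base score alone; with it, the jersey moves to the top.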

Improved Suggestions

Suggestions (auto-complete) is a feature that allows you to provide type-ahead suggestions as the user types. Just like scoring profiles, this is a great way to allow your users to find the content they are looking for quickly. When we first implemented search suggestions in Azure Search, we heard a number of requests to extend the capabilities of this feature to better suit your requirements. As a result, we have an entirely new implementation of suggestions to address these items. In particular, it will do infix matching for suggestions and if fuzzy matching is enabled, it’ll show more flexibility for spelling mistakes. It also allows up to 100 suggestions per result, has no limit in length other than field limits and doesn’t have the 3-character minimum length.
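To see why infix matching matters, compare it with matching only at the start of each word. The small Python sketch below does exactly that. It illustrates the concept only and is not how Azure Search implements suggestions; the function names and sample hotel names are hypothetical.

```python
def prefix_suggestions(term, candidates):
    """Suggest candidates in which some word starts with the typed term."""
    term = term.lower()
    return [c for c in candidates if any(w.startswith(term) for w in c.lower().split())]


def infix_suggestions(term, candidates):
    """Suggest candidates in which the typed term appears anywhere in the text."""
    term = term.lower()
    return [c for c in candidates if term in c.lower()]


hotels = ["Grand Hotel", "Telford Inn", "Seaside Motel"]

print(prefix_suggestions("tel", hotels))
print(infix_suggestions("tel", hotels))
```

Word-start matching only finds "Telford Inn" for the input "tel", while infix matching also surfaces "Grand Hotel" and "Seaside Motel".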

This enhancement is still under the experimental API version as we are continuing to gather feedback. For more information on this and to see a more detailed example of suggestions, please see the post on Suggestions on the Azure Blog.

New Regions

As a final note, I wanted to point out that we are continuing to expand the global footprint of Azure Search. With the addition of East Asia and West Europe you can now provision Azure Search services in 8 regions across the globe.

Media: General Availability of Content Protection Service

Earlier this month we released the general availability of our new Content Protection service for Azure Media Services. This is backed by an enterprise grade SLA for all customers.

We understand the importance of protecting your premium media content, and our robust new DRM offering features both static and dynamic encryption with first party PlayReady license delivery and an AES 128-bit key delivery service. You can either dynamically encrypt during delivery of your media or statically encrypt during the content processing workflow, and our content protection options are available for both live and on-demand workflows.

For more information on functionality and pricing, visit the Media Services Content Protection blog post, the Media Services Pricing webpage, or this Securing Media article.

Management: General Availability of the Azure Resource Manager

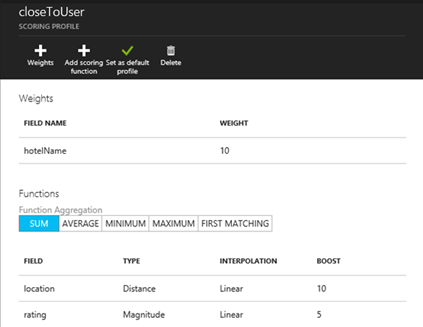

Earlier this month we reached general availability of the new Azure Resource Manager, and we now provide a world-wide SLA for the service. The Azure Resource Manager provides a core set of management capabilities that are fundamental to the Microsoft Azure Platform and form the basis of our new deployment and management model for all Azure services. You can use the Azure Resource Manager to deploy and manage your Azure solutions at no cost.

The Azure Resource Manager provides a simple and customizable experience to manage your applications running in Azure, along with enterprise-grade authentication and authorization capabilities. Benefits include:

Application Lifecycle Boundaries: Azure Resource Manager provides a deployment container called a Resource Group that serves as the lifecycle boundary of the resources/services deployed in it - making it easy for you to deploy, manage and visualize the services contained within it. You no longer have to deploy parts of your application à la carte and then stitch them together manually. A Resource Group container supports one-click deployment and teardown of the entire application in a single operation.

Enterprise Grade Access Control: OAuth and Role-Based Access Control (RBAC) are now natively integrated into Azure Management and consistently apply to all services supported by the Resource Manager. Access and operations performed on these services are also logged automatically to enable you to audit them later. You can now use a rich set of platform and resource specific roles that can be applied at the subscription, resource group, or resource level - giving you granular control over who has access to what operation within your organization.
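As a rough illustration of role assignments at the subscription, resource group, or resource level, the Python sketch below checks whether a user may perform an action on a resource. It is a simplified model under stated assumptions, not the Azure authorization engine: the `is_allowed` function, the coarse action sets in `ROLE_ACTIONS`, and the sample scope paths are hypothetical, and it assumes an assignment at a broader scope also applies to everything beneath it.

```python
# Simplified, hypothetical action sets; real role definitions are far more granular.
ROLE_ACTIONS = {
    "Reader": {"read"},
    "Contributor": {"read", "write", "delete"},
}


def is_allowed(assignments, user, action, scope):
    """Return True if one of the user's role assignments covers `scope`.

    Each assignment is a (user, role, scope) tuple. An assignment covers its
    own scope and, by assumption, every scope nested beneath it.
    """
    for who, role, assigned_scope in assignments:
        if who != user or action not in ROLE_ACTIONS[role]:
            continue
        prefix = assigned_scope.rstrip("/") + "/"
        if scope == assigned_scope or scope.startswith(prefix):
            return True
    return False


# Hypothetical scopes: subscription > resource group > resource.
SUB = "/subscriptions/sub-1"
RG = SUB + "/resourceGroups/shop-rg"
OTHER_RG = SUB + "/resourceGroups/other-rg"
SITE = RG + "/providers/Microsoft.Web/sites/shop-web"

assignments = [
    ("alice", "Reader", SUB),      # read-only across the whole subscription
    ("bob", "Contributor", RG),    # full control inside one resource group
]

print(is_allowed(assignments, "alice", "read", SITE))
print(is_allowed(assignments, "alice", "write", SITE))
print(is_allowed(assignments, "bob", "delete", SITE))
print(is_allowed(assignments, "bob", "read", OTHER_RG))
```

Here alice can read everything in the subscription but change nothing, while bob can modify resources only inside his own resource group.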

Rich Tagging and Categorization: The Azure Resource Manager supports metadata tagging of resource groups and contained resources, and you can use this tagging support to group objects in ways suitable to your own needs such as management, billing or monitoring. For example, you could mark certain resources or resource groups as being "Dev/Test" and use that to help filter your resources or charge back their bills differently to internal groups in your organization. This provides the power needed to manage and monitor departmental applications, subscriptions, and billing data in a more streamlined fashion, especially for larger organizations.

Declarative Deployment Templates: The new Azure Resource Manager supports both an imperative API and a declarative template model that you can use to deploy rich multi-tier applications on Azure. These applications can be composed from multiple Azure services (including both IaaS and PaaS based services) and support the ability for you to pass parameters and connection-strings across them. For example, you could declaratively create a SQL DB, Web Site and VM using a single template and automatically wire up the connection-string details between them.
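The declarative idea can be sketched in Python: you describe what you want and which resources depend on each other, and a small engine works out the order and wires values between them. This toy does not use the real Resource Manager template language or API. The template shape, the `dependsOn` key, the `ref:` notation, and the `deploy` function are hypothetical, and nothing is actually provisioned.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def deploy(template):
    """Pretend-deploy resources in dependency order and wire up references.

    A setting written as "ref:<name>" is replaced with the output of the
    already-deployed resource <name> (here, a fake connection string).
    Returns a list of (resource name, resolved settings) in deployment order.
    """
    graph = {name: set(spec.get("dependsOn", [])) for name, spec in template.items()}
    outputs = {}
    deployed = []
    for name in TopologicalSorter(graph).static_order():
        spec = template[name]
        settings = {}
        for key, value in spec.get("settings", {}).items():
            if isinstance(value, str) and value.startswith("ref:"):
                value = outputs[value[len("ref:"):]]
            settings[key] = value
        # Stand-in for real provisioning: record a fake output for this resource.
        outputs[name] = f"{spec['type']}://{name}"
        deployed.append((name, settings))
    return deployed


# One template describing a SQL DB, a Web Site and a VM.
template = {
    "webSite": {
        "type": "website",
        "dependsOn": ["sqlDb"],
        "settings": {"connectionString": "ref:sqlDb"},
    },
    "sqlDb": {"type": "sql"},
    "vm": {"type": "vm", "settings": {"size": "small"}},
}

for name, settings in deploy(template):
    print(name, settings)
```

Although the web site is listed first, the database is created before it, and the web site receives the database's connection string without any manual stitching.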

Learn More

Check out the following resources to learn more about the Azure Resource Manager, and start using it today:

- Azure Resource Manager REST API Reference

- Azure Resource Manager Template Language

- Using Windows PowerShell with Resource Manager and Azure Resource Manager Cmdlets

- Using the Azure Cross-Platform Command-Line Interface with the Resource Manager

Summary

Today’s Microsoft Azure release enables a ton of great new scenarios, and makes building applications hosted in the cloud even easier.

If you don’t already have an Azure account, you can sign up for a free trial and start using all of the above features today. Then visit the Microsoft Azure Developer Center to learn more about how to build apps with it.

Hope this helps,

Scott

P.S. In addition to blogging, I am also now using Twitter for quick updates and to share links. Follow me at: twitter.com/scottgu