Lightweight Containers and Plugin Architectures: Dependency Injection and Dynamic Service Locators in .NET


Required reading: Inversion of Control Containers and the Dependency Injection pattern by Martin Fowler. If you haven't read it, you will not understand what I'm talking about, and I'm not fond of reproducing others' work here. It's better if you just read it; it's a very interesting article.

I'd like to analyze Fowler's article in the light of .NET and what we have now in v1.x. His article seems to imply that lightweight containers are a new concept, mainly fuelled by a Java community unsatisfied with heavyweight EJB containers. It turns out that .NET has supported and heavily used this approach since its very early bits, released back at PDC'00 (July 14th 2000).

The basic building blocks for lightweight containers in .NET live in the System.ComponentModel namespace. The core interfaces are:

- IContainer: the main interface implemented by containers in .NET.
- IComponent: provides a very concrete definition of what a component is in this context: any class implementing this interface can participate in .NET containers.
- ISite/IServiceProvider: the former inherits the latter. ISite provides the vital link between a component and the container it lives in (its site), which enables service retrieval by the component. IComponent has a Site property of this type.
- IServiceContainer: a default container for services, which IComponents can access through the ISite. It's not actually required, as the IContainer can store available services through other means.

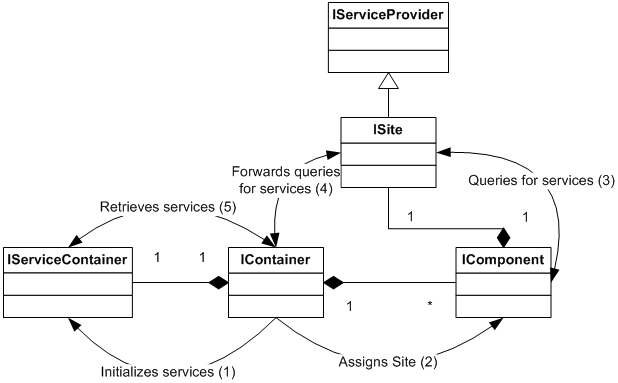

The relationship between these clases can be depicted as follows:

A typical container/component/service interaction is:

- Client code creates a specific container class.
- The container initializes all the services that components will have access to (optionally through an internal IServiceContainer). If the container exposes its own IServiceContainer, client code can add further services at will.
- Either the container or client code adds components.
- The container "sites" these components by setting their IComponent::Site property to an ISite implementation that offers services retrieved from the optional internal IServiceContainer or from another implementation.
- Client code can access components by name or index through the indexers of the IContainer::Components collection.
- Components perform actions requiring services, which they retrieve through IComponent::Site::GetService(Type). This method is actually inherited from IServiceProvider.
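The interaction above can be sketched as follows, in current C# syntax. Container, Component and ServiceContainer are the real System.ComponentModel types; IGreeter, PoliteGreeter, ServicedContainer and GreetingComponent are hypothetical names made up for this illustration. The sketch relies on the default ISite created by Container.Add forwarding GetService to the container's protected, overridable GetService(Type) method.

```csharp
using System;
using System.ComponentModel;
using System.ComponentModel.Design;

// Hypothetical service contract and implementation, for illustration only.
public interface IGreeter
{
    string Greet(string name);
}

public sealed class PoliteGreeter : IGreeter
{
    public string Greet(string name) => "Hello, " + name;
}

// A specific container class, created by client code. Its services live in an
// internal ServiceContainer that is exposed so client code can add more services.
public class ServicedContainer : Container
{
    private readonly ServiceContainer _services = new ServiceContainer();

    public IServiceContainer Services => _services;

    // The ISite that Container.Add creates forwards its GetService calls here.
    protected override object GetService(Type service)
        => base.GetService(service) ?? _services.GetService(service);
}

// A component that retrieves its dependency through its site.
public class GreetingComponent : Component
{
    public string SayHello(string name)
    {
        // Component.GetService returns null while the component is not sited.
        var greeter = (IGreeter)GetService(typeof(IGreeter));
        return greeter == null ? "(not sited)" : greeter.Greet(name);
    }
}
```

Client code would create a ServicedContainer, register a PoliteGreeter under typeof(IGreeter), and then Add a GreetingComponent under some name; from then on the component finds its greeter through its site, and client code can fetch the component back through container.Components["name"].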

So, in Fowler's terms, the IContainer implementation performs Interface Dependency Injection (through IComponent) of a single dependency, the Dynamic Service Locator (ISite : IServiceProvider). The former happens because the IContainer automatically sets the IComponent::Site property when the component is added through its IContainer::Add(IComponent) method. The latter is the implementation of the IServiceProvider::GetService(Type) method, which allows dynamic retrieval of services from the container.

Fowler dislikes dynamic service locators because, he says, they rely on string keys and are loosely typed. With the .NET IServiceProvider, you don't pass a string key but the actual Type of the service you request. What's more, the default implementation of the interface, System.ComponentModel.Design.ServiceContainer, checks that services published with a certain Type key are actually assignable to that type. Therefore, with a ServiceContainer it's safe to cast them back upon retrieval: at most you get a null value from the provider, but never an InvalidCastException.
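A small sketch of that type check, assuming a hypothetical IMovieFinder contract and ArrayMovieFinder implementation: ServiceContainer.AddService throws an ArgumentException when the instance is not assignable to the Type key, and a lookup for a service that was never published simply returns null.

```csharp
using System;
using System.ComponentModel.Design;

// Hypothetical service contract and implementation.
public interface IMovieFinder
{
    string[] FindAll();
}

public sealed class ArrayMovieFinder : IMovieFinder
{
    public string[] FindAll() => new[] { "Once Upon a Time in the West" };
}

public static class TypedLookupDemo
{
    // Returns true when ServiceContainer refuses an instance of the wrong type.
    public static bool RejectsWrongInstance()
    {
        var services = new ServiceContainer();
        try
        {
            // A string is not an IMovieFinder, so AddService throws.
            services.AddService(typeof(IMovieFinder), "not a finder");
            return false;
        }
        catch (ArgumentException)
        {
            return true;
        }
    }

    // The key is a Type, so the cast back is safe; a missing service yields null.
    public static IMovieFinder Lookup(IServiceProvider provider)
        => (IMovieFinder)provider.GetService(typeof(IMovieFinder));
}
```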

Following his example, so that this is a natural adaptation to the .NET world, his MovieLister becomes a .NET component that obtains its movie finder from its site.
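The original listing is not reproduced here. As a minimal sketch, assuming Fowler's Movie and MovieFinder types adapted to hypothetical C# Movie and IMovieFinder types, a MovieLister that implements IComponent directly might look like this: the container injects the site, and the lister uses it as a dynamic service locator.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

// Fowler's example types, adapted; the names are assumptions.
public sealed class Movie
{
    public Movie(string title, string director) { Title = title; Director = director; }
    public string Title { get; }
    public string Director { get; }
}

public interface IMovieFinder
{
    IList<Movie> FindAll();
}

// MovieLister implementing IComponent directly, without the Component base class.
public class MovieLister : IComponent
{
    private ISite _site;

    public event EventHandler Disposed;

    // The container injects the site here when the component is added.
    public ISite Site
    {
        get { return _site; }
        set { _site = value; }
    }

    public Movie[] MoviesDirectedBy(string director)
    {
        // Dynamic service location through the site.
        var finder = _site == null
            ? null
            : (IMovieFinder)_site.GetService(typeof(IMovieFinder));
        if (finder == null)
            throw new InvalidOperationException("Not sited, or no IMovieFinder is available.");

        var result = new List<Movie>();
        foreach (var movie in finder.FindAll())
            if (movie.Director == director)
                result.Add(movie);
        return result.ToArray();
    }

    public void Dispose()
    {
        // Leave the container (which also resets Site) and notify listeners.
        _site?.Container?.Remove(this);
        Disposed?.Invoke(this, EventArgs.Empty);
    }
}
```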

It's common for concrete components, instead of implementing IComponent directly, to inherit from its built-in default implementation, Component, which provides a GetService shortcut method that also checks that the Site property is set before requesting a service from it.

The lifecycle of a component goes through three stages:

- IComponent::ctor: at construction time, the component is not yet ready for work, as it can't access services.
- IComponent::Site { set; }: the component is "sited", so it's now fully functional. At this (overridable) point, components can further configure themselves, for example by caching a reference to a service they use frequently:

```csharp
public class MovieLister : Component, IMovieLister
{
    private IMovieFinder _finder;

    public override ISite Site
    {
        get { return base.Site; }
        set
        {
            base.Site = value;
            // Cache the finder (null when the component is being unsited).
            this._finder = (IMovieFinder) GetService( typeof(IMovieFinder) );
        }
    }
}
```

- IComponent::Dispose: when it's no longer needed, a component may be disposed through the IDisposable interface inherited by IComponent.
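To make the three stages visible, here is a hypothetical TracingComponent (an illustration, not a framework type) that records each transition. It relies on Container.Add setting Site, on Component.Dispose(bool) removing a sited component from its container, and on Container.Remove resetting Site to null.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

// Hypothetical component that logs its lifecycle transitions.
public class TracingComponent : Component
{
    public List<string> Log { get; } = new List<string>();

    public TracingComponent()
    {
        // Construction: not sited yet, so GetService would return null here.
        Log.Add("ctor: sited=" + (Site != null));
    }

    public override ISite Site
    {
        get { return base.Site; }
        set
        {
            base.Site = value;
            // Set with a site by Container.Add, and with null by Container.Remove.
            Log.Add(value != null ? "sited as " + value.Name : "unsited");
        }
    }

    protected override void Dispose(bool disposing)
    {
        if (disposing)
            Log.Add("disposing");
        // Component.Dispose(bool) removes the component from its container
        // and raises the Disposed event.
        base.Dispose(disposing);
    }
}
```

Adding it to a Container under the name "lister" and then disposing it should leave four entries in the log: constructed while unsited, sited as "lister", disposing, and finally unsited by the container.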

Fowler notes some general drawbacks with regard to the service locator approach:

So the primary issue is for people who are writing code that expects to be used in applications outside of the control of the writer. In these cases even a minimal assumption about a Service Locator is a problem.

By standardizing on IComponent and ISite from System.ComponentModel, this isn't a problem anymore in .NET. Any component that uses these interfaces can be hooked into any container and query services. This doesn't require dependencies on external products or unproven approaches: .NET uses this feature extensively.

Since with an injector you don't have a dependency from a component to the injector, the component cannot obtain further services from the injector once it's been configured.

As the injection is done for the service locator itself (the ISite : IServiceProvider instance), this isn't a problem anymore either: further services can easily be requested from it.

A common reason people give for preferring dependency injection is that it makes testing easier. The point here is that to do testing, you need to easily replace real service implementations with stubs or mocks. However there is really no difference here between dependency injection and service locator: both are very amenable to stubbing. I suspect this observation comes from projects where people don't make the effort to ensure that their service locator can be easily substituted.

I agree completely with this view. Aren't these architectures all about the ability to remove dependencies and to hook or replace implementations dynamically? To me, if that objective is not achieved, it's clearly not because of the choice between injection and service locator, but because of an implementation bug. Testing and stubbing with .NET containers is straightforward, as components retrieve services by interface type, so stub implementations of those interfaces can be plugged into a testing IContainer implementation without problems.
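A sketch of such a test setup, with hypothetical names throughout: TestContainer answers site requests from a ServiceContainer that the test fills with a StubMovieFinder, so the MovieLister under test never touches a real data source.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.ComponentModel.Design;

public sealed class Movie
{
    public Movie(string title, string director) { Title = title; Director = director; }
    public string Title { get; }
    public string Director { get; }
}

public interface IMovieFinder
{
    IList<Movie> FindAll();
}

// Component under test: it locates its finder through its site on each call.
public class MovieLister : Component
{
    public List<Movie> MoviesDirectedBy(string director)
    {
        var finder = (IMovieFinder)GetService(typeof(IMovieFinder));
        var result = new List<Movie>();
        if (finder == null) return result;
        foreach (var movie in finder.FindAll())
            if (movie.Director == director) result.Add(movie);
        return result;
    }
}

// Stub finder with canned data; no file or database access.
public sealed class StubMovieFinder : IMovieFinder
{
    public IList<Movie> FindAll() => new List<Movie>
    {
        new Movie("Once Upon a Time in the West", "Sergio Leone"),
        new Movie("Vertigo", "Alfred Hitchcock"),
    };
}

// Testing container: service requests are answered from a ServiceContainer
// that the test fills with stubs.
public class TestContainer : Container
{
    public ServiceContainer Services { get; } = new ServiceContainer();

    protected override object GetService(Type service)
        => base.GetService(service) ?? Services.GetService(service);
}
```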

Note that, additionally, the IContainer can expose its internal IServiceContainer as yet another service, so that a component can publish a new service for consumption by others.
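A minimal sketch of that arrangement, assuming a hypothetical IClock service. It relies on ServiceContainer answering requests for IServiceContainer with itself, so a container whose GetService delegates to a ServiceContainer exposes its service store to sited components automatically. One component publishes an IClock when it is sited; any other component in the same container can then retrieve it.

```csharp
using System;
using System.ComponentModel;
using System.ComponentModel.Design;

// Hypothetical service published at run time by a component.
public interface IClock
{
    DateTime Now { get; }
}

public sealed class FixedClock : IClock
{
    public FixedClock(DateTime now) { Now = now; }
    public DateTime Now { get; }
}

// Because ServiceContainer returns itself for IServiceContainer requests,
// sited components can reach this container's service store.
public class PublishingContainer : Container
{
    private readonly ServiceContainer _services = new ServiceContainer();

    protected override object GetService(Type service)
        => base.GetService(service) ?? _services.GetService(service);
}

// Publishes an IClock as soon as it is sited.
public class ClockProvider : Component
{
    public override ISite Site
    {
        get { return base.Site; }
        set
        {
            base.Site = value;
            if (value == null) return;
            var store = (IServiceContainer)GetService(typeof(IServiceContainer));
            // Skip publishing if another component already did (AddService would throw).
            if (store != null && store.GetService(typeof(IClock)) == null)
                store.AddService(typeof(IClock), new FixedClock(new DateTime(2004, 1, 1)));
        }
    }
}

// Consumes whatever IClock has been published, if any.
public class ClockConsumer : Component
{
    public DateTime? CurrentTime()
    {
        var clock = (IClock)GetService(typeof(IClock));
        return clock?.Now;
    }
}
```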

This combination of IServiceProvider, IContainer and IComponent is in broad use TODAY in the Windows Forms and Web Forms platforms, as well as at design time and throughout the IDE infrastructure. You're using them every time you create a Windows Form, Windows User Control, Web Form, etc.

Layering Service Containers

One scenario that .NET System.ComponentModel supports, and that Fowler doesn't even discuss, is that of chained service containers. Let's say you have a component, sited in a container, that performs some quite complex functionality. Now, let's say this complex functionality requires additional services that are provided by a specialized container and further components. In this case, the "main" component needs to instantiate a new container and execute further components. Needless to say, these components may require services not only from this new "child" container but also from the parent one, where the "main" component lives.

Stacking service providers at this point is extremely useful. What you actually need is a Chain of Responsibility pattern, where the service implementation is returned by the first provider in the chain that can respond to the request for it. This allows you not only to chain different sets of services, but also to override implementations from a parent service provider. This is supported natively in .NET through the ServiceContainer implementation, whose constructor accepts a parent IServiceProvider, and it's heavily used in the Visual Studio .NET IDE: some services are offered to components by a specific designer, by a VS package, or by the IDE itself. Most requests for services propagate up the chain, if necessary, until they reach the IDE main container.
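The chaining behaviour can be sketched with ServiceContainer alone, using hypothetical ILogger and IOutputWindow services: the child container is constructed with the parent as its parent IServiceProvider, overrides ILogger, and falls back to the parent for IOutputWindow.

```csharp
using System;
using System.ComponentModel.Design;

// Hypothetical services.
public interface ILogger { string Name { get; } }

public sealed class NamedLogger : ILogger
{
    public NamedLogger(string name) { Name = name; }
    public string Name { get; }
}

public interface IOutputWindow { }
public sealed class OutputWindow : IOutputWindow { }

public static class ChainDemo
{
    // Builds a two-link chain: the child answers what it knows and
    // delegates everything else to its parent provider.
    public static ServiceContainer BuildChain(out ServiceContainer parent)
    {
        parent = new ServiceContainer();
        parent.AddService(typeof(ILogger), new NamedLogger("ide"));
        parent.AddService(typeof(IOutputWindow), new OutputWindow());

        var child = new ServiceContainer(parent);                       // next link in the chain
        child.AddService(typeof(ILogger), new NamedLogger("designer")); // overrides the parent's logger
        return child;
    }
}
```

ServiceContainer also offers an AddService(Type, object, bool promote) overload; with promote set to true, the registration is forwarded to the parent's IServiceContainer instead of being kept in the child.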

I've used exactly the same architecture for an upcoming automatic wizard framework for Shadowfax that acts as a child container inside the IDE. Some of its components need to execute yet another, lower layer: a transformation engine that works with code templates to generate code (among other things), which is also a child container. At this point, the three layers (IDE, wizard and transformation engine) are chained together, so any component in the transformation engine, for example, can query services offered by the IDE itself.
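The same idea with full containers, as a sketch: LayerContainer, WizardComponent and TemplateStep are hypothetical names that loosely mirror the IDE, wizard and transformation-engine layers. A component sited in the outer layer creates a child container whose ServiceContainer uses the component's own ISite as its parent provider, so a request from the innermost component climbs the chain until some layer answers it.

```csharp
using System;
using System.ComponentModel;
using System.ComponentModel.Design;

// Hypothetical services offered at two different layers.
public interface IIdeService { }
public sealed class IdeService : IIdeService { }
public interface ITemplateService { }
public sealed class TemplateService : ITemplateService { }

// A layer: its own services first, then the parent provider (the next layer up).
public class LayerContainer : Container
{
    public LayerContainer(IServiceProvider parent)
    {
        Services = new ServiceContainer(parent);
    }

    public ServiceContainer Services { get; }

    // base.GetService first, so IContainer resolves to this layer, not an outer one.
    protected override object GetService(Type service)
        => base.GetService(service) ?? Services.GetService(service);
}

// Sited in an outer layer; spins up a child layer chained to its own site.
public class WizardComponent : Component
{
    public LayerContainer CreateChildLayer()
    {
        if (Site == null)
            throw new InvalidOperationException("The wizard must be sited first.");
        var child = new LayerContainer(Site);
        child.Services.AddService(typeof(ITemplateService), new TemplateService());
        return child;
    }
}

// Innermost component: may query services from any layer.
public class TemplateStep : Component
{
    public object Find(Type serviceType) => GetService(serviceType);
}
```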

This is an extremely powerful and flexible approach, as you don't have to build a monolithic container but can instead rely on components instantiating more specialized child containers to perform specific work.

Container Configuration

Of course, any good container should be configurable both programmatically and through configuration files. Fowler discusses the following with regard to configuration:

I often think that people are over-eager to define configuration files. Often a programming language makes a straightforward and powerful configuration mechanism. Modern languages can easily compile small assemblers that can be used to assemble plugins for larger systems. If compilation is a pain, then there are scripting languages that can work well also.

I agree absolutely. One common dual configuration mechanism (XML + API) in .NET is to create an XSD for the file, generate classes from it that are ready for XML serialization, and support configuration either through a file reference, which is simply deserialized into the object model generated from the XSD, or through this object model directly.
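As a sketch, the classes below stand in for what a schema code generator such as xsd.exe might emit for a hypothetical container-configuration schema; the element and attribute names, and the MyApp type names, are assumptions. The same object model is then filled either by XmlSerializer from a file or directly in code.

```csharp
using System.IO;
using System.Xml.Serialization;

// Stand-ins for generated classes: public fields, parameterless constructors, arrays.
[XmlRoot("container")]
public class ContainerConfig
{
    [XmlElement("service")]
    public ServiceEntry[] Services;

    [XmlElement("component")]
    public ComponentEntry[] Components;
}

public class ServiceEntry
{
    [XmlAttribute("contract")] public string Contract;
    [XmlAttribute("type")] public string Type;
}

public class ComponentEntry
{
    [XmlAttribute("name")] public string Name;
    [XmlAttribute("type")] public string Type;
}

public static class ConfigLoader
{
    // Path 1: configuration from XML, deserialized into the object model.
    public static ContainerConfig FromXml(TextReader reader)
        => (ContainerConfig)new XmlSerializer(typeof(ContainerConfig)).Deserialize(reader);

    // Path 2: the same object model built in code (hypothetical type names).
    public static ContainerConfig InCode() => new ContainerConfig
    {
        Services = new[] { new ServiceEntry { Contract = "MyApp.IMovieFinder", Type = "MyApp.ColonMovieFinder" } },
        Components = new[] { new ComponentEntry { Name = "lister", Type = "MyApp.MovieLister" } },
    };
}
```

The in-code path shows the weakness described next: everything is set through fields or setters, and nothing tells the caller which entries are required.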

However, unless codegen customization is used, this raw XML serialization model is very poor when it comes to programmatic configuration, as classes only have parameterless ctors (so all initialization has to be done through property setters), there's no way to know which properties are required or optional, by default multi-value properties are arrays instead of typed collections, and so on.

Non-language configuration files work well only to the extent they are simple. If they become complex then it's time to think about using a proper programming language.

It's very interesting how most people nowadays perceive programmatic configuration APIs as a drawback compared to XML config files. I can't really understand why. With dynamic compilation becoming almost commonplace (e.g. the ASP.NET v2 model, the upcoming XAML, and so on), having a good programmatic API coupled with full programming language IntelliSense surely surpasses XML files in usability and productivity, especially for complex stuff.

It's usually the case (e.g. most of .NET) that after inventing a huge, daunting configuration file format, admin UIs are created to manipulate it (e.g. .NET Framework Configuration, the upcoming ASP.NET v2 admin console, etc.). At this point you start wondering: if nobody is ever going to touch those files except through those UIs, what is the advantage of having them in XML? Why not just have those UIs generate compiled controllers that programmatically hook and configure everything? Just imagine the savings in parsing, validation and loading time... After all, you have to XCopy-deploy those configs, just as you would the "assemblers"...
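A sketch of such a compiled "assembler", with hypothetical names: the wiring that an XML file would describe is plain code, and the finder is registered through a ServiceCreatorCallback so that it is only created on first request (ServiceContainer then caches the created instance).

```csharp
using System;
using System.ComponentModel;
using System.ComponentModel.Design;

public interface IMovieFinder { string[] Titles(); }

public sealed class InMemoryMovieFinder : IMovieFinder
{
    public string[] Titles() => new[] { "Vertigo", "Rear Window" };
}

public class MovieLister : Component
{
    public int CountMovies()
    {
        var finder = (IMovieFinder)GetService(typeof(IMovieFinder));
        return finder == null ? 0 : finder.Titles().Length;
    }
}

public class AppContainer : Container
{
    public ServiceContainer Services { get; } = new ServiceContainer();

    protected override object GetService(Type service)
        => base.GetService(service) ?? Services.GetService(service);
}

// The "assembler": a misspelled type here is a compile error, not a load-time failure.
public static class AppAssembler
{
    public static int FindersCreated { get; private set; }

    public static AppContainer Assemble()
    {
        var container = new AppContainer();
        // Lazy registration: CreateFinder runs only when the service is first requested.
        container.Services.AddService(typeof(IMovieFinder), new ServiceCreatorCallback(CreateFinder));
        container.Add(new MovieLister(), "lister");
        return container;
    }

    private static object CreateFinder(IServiceContainer container, Type serviceType)
    {
        FindersCreated++;
        return new InMemoryMovieFinder();
    }
}
```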

The missing feature?

So, all the plumbing and required interfaces for implementing lightweight containers in .NET are already in place. The framework doesn't contain any class to perform configuration of a container, though. This is not necessarily a bad thing, as it doesn't force any concrete file format or configuration API, leaving that to implementers. Creating such a feature for a specific container (such as the Shadowfax Wizard container, or the transformation engine, code-named T3 for Templated Text Transformations) is almost trivial: read the config, load the types, hook up services and components, and that's it.
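As a rough sketch of those steps, the hypothetical ConfigurableContainer below takes assembly-qualified type names (from whatever configuration source is chosen), loads them with Type.GetType, creates instances with Activator.CreateInstance, and registers or sites them. It assumes every configured type has a public parameterless constructor.

```csharp
using System;
using System.ComponentModel;
using System.ComponentModel.Design;

// Hypothetical container that wires itself from type names read from config.
public class ConfigurableContainer : Container
{
    public ServiceContainer Services { get; } = new ServiceContainer();

    protected override object GetService(Type service)
        => base.GetService(service) ?? Services.GetService(service);

    // Loads the contract and implementation types, then publishes an instance.
    public void AddService(string contractTypeName, string implementationTypeName)
    {
        Type contract = Type.GetType(contractTypeName, throwOnError: true);
        Type implementation = Type.GetType(implementationTypeName, throwOnError: true);
        // ServiceContainer.AddService rejects instances not assignable to the contract.
        Services.AddService(contract, Activator.CreateInstance(implementation));
    }

    // Loads, instantiates and sites a component; the type must implement IComponent.
    public IComponent AddComponent(string name, string componentTypeName)
    {
        Type type = Type.GetType(componentTypeName, throwOnError: true);
        var component = (IComponent)Activator.CreateInstance(type);
        Add(component, name);
        return component;
    }
}
```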

So, once more, we can see that .NET was a pioneer of supposedly "new" patterns. It's true that this pattern (like many others found throughout the .NET Framework) doesn't get enough advertising, and that may be the cause of its scarce use in .NET application architectures.

In a future post I'll discuss Apache Avalon and the Spring Framework, and how they compare to what's built into .NET.

Update: maybe I should also mention that I've been using this technique with excellent results since the initial release of an open-source XML-based code generator back in November 2002.