Fear and Loathing

Gonzo blogging from the Annie Leibovitz of the software development world.


Power Outage Maps from Around the World

Whew. It's been a long time since I last blogged, but I think it's high time to get back into it, and this seemed like a useful start.

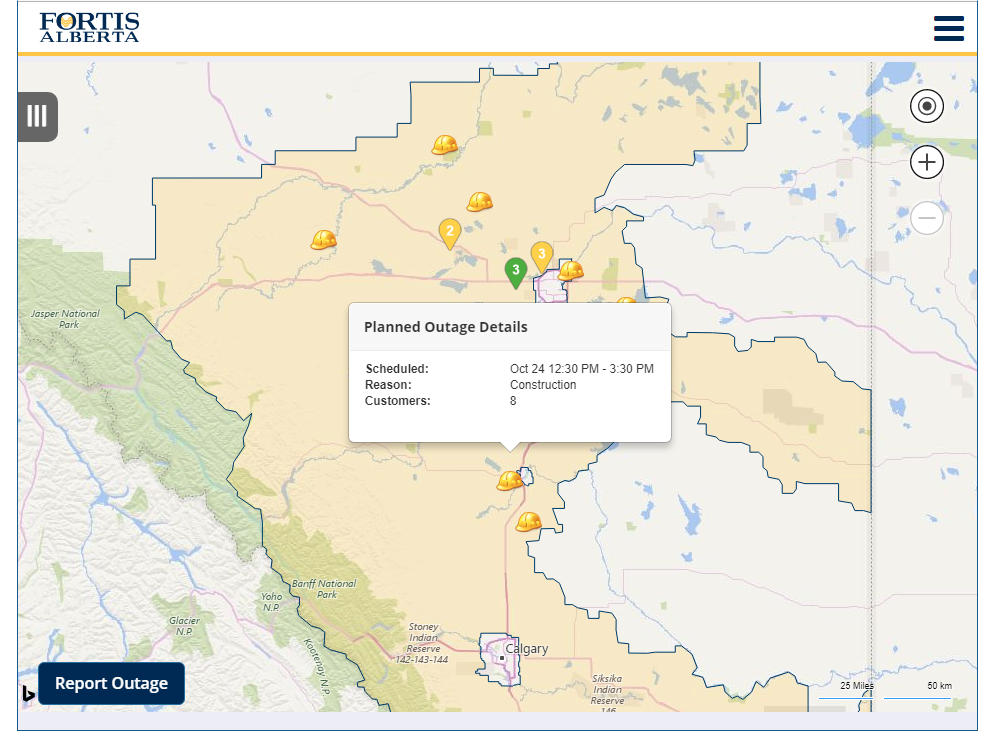

My job for the last few years has been in the electrical industry, specifically electrical distribution in Alberta, Canada. A few years back I came across a list published by Edward Vielmetti of power outage maps for all 50 states and other countries. Since I had built the FortisAlberta outage map, I contributed it to the list. The original list has since gone by the wayside, so I rescued it from the Wayback Machine and will continue to maintain it here. Feel free to email me with updates, questions, ideas, small marsupials, credit card numbers, etc. As I find time, I will update the images and information as best I can.

IMPORTANT!! The last version of this content I could retrieve from the Internet was from May 2016, and the last update to that version of the list was from May 2015. So this information is terribly out of date. The phone numbers, links, and information here have not been updated yet. Please do not use this for emergency reference. Always refer to your local authorities and resources for the latest information during a power outage.

The list is known to be incomplete, but so is the list on Wikipedia (under "outage management system"). In the interest of being overly comprehensive, I will gather screen captures wherever I can; they will not be current when you look at them, but I will time- and date-stamp them as best I can.

Wherever possible, I'm also capturing service area or service territory maps, mobile device apps, high capacity transmission interconnection maps, media contact information, regulatory agencies, and regional coordinating bodies.

Updates

May 7, 2015. Updates to Texas, including CoServ (Denton County TX).

November 2, 2014. Updates to Maine, due to winter storms there; added Maine Public Utilities Commission map. Added load shedding pages for Eskom (South Africa) and PGCB (Bangladesh) after large-scale power outages in both countries. Added NB Power (New Brunswick, Canada), no maps.

August 1, 2014. Updated FortisAlberta.

March 13-14, 2014. Updated Maine, Maryland, Virginia.

February 11, 2014. Added Sawnee EMC in Georgia north of Atlanta.

December 21, 2013. Reviewing Texas. Added load graph from ERCOT, map for San Bernard Electric Cooperative, map for AEP Texas, entry for Sam Houston Electric Cooperative, map for Austin Energy.

October 4, 2013. Added South Dakota Rural Electric Association map during the early-season winter storm Atlas. Updated Progress Energy Florida to reflect its merger with Duke Energy. Added maps for Gulf Power (Florida) and Mississippi Power. Still no map for Alabama Power. Preparations for TS Karen in the Gulf. Updates to Arizona, Saskatchewan, PEI.

July 10, 2013. Updated Twitter account for Arizona Public Service, added central Arizona's Salt River Project; also updates for the Toronto, Ontario, Canada area.

June 13, 2013. Added new maps from the Southern Company for Georgia Power. No maps are available for Alabama Power and Gulf Power, which are also Southern Company utilities.

October 26-29, 2012. Updates for Hurricane Sandy. Con Edison (NYC) has a new link to their storm center. Updated PEPCO media relations contact. Added Delmarva Power mobile apps. Updated PSEG Twitter. Added NSTAR (Massachusetts).

August 28, 2012 updates several Louisiana electric cooperatives in advance of Hurricane Isaac, as well as details for Mississippi Power. Quick link: Entergy Storm Center (New Orleans + much of Louisiana). News coverage: WWL-TV.

July 10, 2012 updates Alberta after AESO initiates rolling blackouts on EPCOR in response to extreme heat and power plants going offline.

June 25, 2012 has additional Florida updates for Tropical Storm Debby.

May 27, 2012 has updates from Florida for Tropical Storm Beryl, including new information for Jacksonville, Florida (JEA) and Tampa, Florida (TECO / Tampa Electric), plus an update for Clay Electric (Gainesville).

May 14-16, 2012 notes visits from SurvivalBlog (thanks for the link) and an update of Progress Energy's map for North and South Carolina; maps also added for Vermont (CVPS), eastern Washington (Avista), metro Atlanta (GreyStone), Colorado Springs Utilities, Duke Energy (Indiana) and an entry for Wyoming (Rocky Mountain Power).

April 30, 2012 adds a Twitter feed for Oklahoma Gas and Electric (@OGandE). Some of the OG&E maps render at weird sizes in the Chrome browser I'm using.

April 15, 2012 adds Kansas City Power and Light's map, powered by Obvient Strategies (now a division of Ventyx).

January 12, 2012 adds the service alert map from Puget Sound Energy, which serves Washington State. This map is only activated in severe weather; PSE reported 220,000 homes without service due to heavy snowfall.

November 30 - December 1, 2011 looks at the Santa Ana winds that are affecting California (LA Times), causing power outages and a risk of fires. An LADWP storm outage map at 6:00 p.m. December 1, 2011 shows widespread outages affecting about 118,000 of that utility's 1.4 million customers. Outages in Utah (no maps) and New Mexico are also blamed on high winds.

November 15, 2011 looks at Ohio and Texas.

November 11-12, 2011 adds media contacts and maps for Duke Energy in North Carolina. I'm also working to incorporate a set of international outage information for telco and internet services, which doesn't neatly fit the state-by-state organization of the power grid. If you are in western Massachusetts, a WMECO ratepayer has built their own outage page suitable for even very dumb mobile phones.

A November 9, 2011 update starts to collect media contact pages, especially for utility companies that do not currently publish maps, and I'm looking at Alaska, Michigan, Washington, and Wisconsin.

A November 7, 2011 update adds PSE&G in New Jersey and RG&E in Rochester, NY, and updates several maps in Oklahoma.

The October 30, 2011 "Snowtober" update includes hits to the power grid from the snowstorm.

The September 9, 2011 updates focus on southern California and Arizona, where a widespread power outage occurred when the North Gila – Hassayampa 500 kV transmission line near Yuma, Ariz., went offline; maps added include the SDGE outage map, a 2003 map of high-capacity California interconnects, and a map of utility service areas in California.

The September 6, 2011 updates include new KML (GeoRSS) thematic maps for ConEdison (NY), Pepco (DC), Progress Energy (NC/SC), Delmarva (DE/MD) and Atlantic City Electric (NJ), via Google Crisis Response, plus a new map for Allegheny Power.

The August 25 and 29, 2011 updates include nine new maps along the US east coast, covering power outages due to Hurricane Irene.

Warning

Don't touch a downed wire. If you don't know who to call to report an emergency associated with a power outage, call 911. Avoid dire mofo.

United States. Eaton Blackout Tracker. Aggregated information about power outages, their causes, and the relative impact of each one; not real time.

United States (Midwest): Midwest ISO. Regional coordination of wholesale power transfers in Michigan, Indiana, Illinois, Missouri, Iowa, Minnesota and Manitoba. Midwest ISO Real Time Locational Marginal Price Contour Map.

United States (14 western states). Western Electricity Coordinating Council. The Principal Transmission Map and the Planned Facilities Map are available to WECC members only or on receipt of approval from WECC’s Manager of Planning Services.

United States (Northwest). Northwest Council: Power Generation in the Northwest map shows top power producers in each category, or those producing more than 25 MW.

Alabama: Alabama Power storm center (no maps). Alabama Power makes maps for internal use; here's how they do it, in a 2009 ESRI user group presentation. A Southern Company. Twitter: @alabamapower. Facebook: AlabamaPower.

Alabama (Dothan): Wiregrass Electric Cooperative Outage Map. Map requires Silverlight plugin. The WEC Android app also has outage information for mobile devices.

Alabama. CAEC Outage Viewer. Call 1-800-619-5460 to report an outage or dangerous condition.

Alaska (statewide): Alaska Power Association. Statewide electric utility trade association. Member and public relations: 907-771-5711.

Alaska (Juneau): Alaska Electric Light and Power outage log. Detailed reporting of each outage incident, with the cause, location and duration noted. Juneau is not connected to a larger power grid. No map. Please call 1-888-434-9844 if you experience an outage.

Alaska (Anchorage): Municipal Light and Power (no maps). To report an outage, call 907-279-7671.

Arizona (Phoenix). Arizona Public Service (APS) Outage Center (no maps). Twitter: @apsFYI. Facebook: apsfyi. Call 855-OUTAGES to report an outage.


Arizona (Central Arizona). Salt River Project Public Outage Map. (requires Silverlight) Twitter: @srpconnect.

Arizona (Tucson). Tucson Electric Power (no outage center, no maps). Twitter: @TEPOutageInfo. Updated October 5, 2013.

Arizona (Southern - Pima, Santa Cruz counties, excluding Tucson): TRICO Electric Coop. No maps.

Arizona (Southern, Western - Santa Cruz, Mohave County): Unisource Energy (No maps). Twitter: @UESPowerOutages. Updated October 5, 2013.

Arkansas (Little Rock). Entergy Storm Center. In addition to showing customer level problems, Entergy Arkansas also shows lines that are de-energized in its distribution system. Mobile access via the Entergy App (iPhone).

Arkansas (Fayetteville, Texarkana). SWEPCO Outages and Problems. Twitter: @swepconews. Facebook: Southwestern Electric Power Company - SWEPCO. Please report safety hazards by calling 1-888-218-3919.

Arkansas (Northwest): Empire District Electric Company Power Outage Map. See the Missouri entry for Empire for a map; only a few customers are served in Arkansas.

California (entire state). California Independent System Operator (no maps). Power grid coordination among utilities at the wholesale level. Graph of aggregate California actual and forecast electric supply and demand.

California (statewide). California Energy Commission Energy Maps of California. Detailed statewide maps, including this map of California electric utility service areas.

California. Consortium of Electric Reliability Technology Solutions. Research into grid reliability and stability, hosted at Lawrence Berkeley National Laboratory. 2003 California power grid study includes this map.

California (far north). Pacific Power Outage Information For California (no maps). "To report an outage or if you do not see your outage listed and would like an update, call us anytime toll free at 1-877-508-5088. To improve service to you, we are working to enhance our online outage information to include interactive maps in the future." (as of December 2011)

California (San Francisco). PG&E Outage Map. Twitter: @PGE4Me. If you need to report an outage, please call 1-800-743-5002.

California (Los Angeles). Los Angeles Department of Water and Power. For real-time outage information, please call us anytime at 1-800-DIAL-DWP. Twitter: @LADWP. News updates: LADWPNews.com. No interactive map tracker for the general public. The map below was produced in response to the December 1, 2011 Santa Ana winds and shows over 114,000 customers without power in LA.

California. Southern California Edison Outage Center, Outage Maps (new), Outage List View (suitable for mobile use). Twitter: @SCE. Facebook: SCE. If your electricity stays off for longer than a few minutes, call 800-611-1911. [Updated 3/12/14.]

California (San Diego). SDGE Outage Map. Twitter: @SDGE. Call 800-411-7343. Area code 619.

Colorado (Denver). Xcel Energy Outage Map. To report an outage, call 1-800-895-1999. Twitter: @XcelEnergyCO.

Colorado (Colorado Springs). Colorado Springs Utilities Electric Outage Map.

Connecticut. Connecticut Light and Power Outage Map. Table of Connecticut Light and Power Outages (text, formatted for mobile phone). Twitter: @CTLightandPower. Call 800-286-2000. [Confirmed 10/27/12]

Connecticut (New Haven). United Illuminating Outage Map. Call 800.722.5584. Twitter: @unitedillum [Confirmed 10/27/12]

Delaware. Delmarva Power Outage Map. Delmarva Thematic Areas Map (KML). Twitter: DelmarvaConnect. The Delmarva Power mobile apps include support for iOS, Android, and BlackBerry. [Updated 10/27/12]

Florida (Southern, Miami, Tampa). Florida Power and Light FPL Power Tracker. Twitter: @insidefpl. Report an outage: 1-800-468-8243.

Florida. Progress Energy Florida Outage Map. Twitter: @progressenergy, @ProgEnergyFL. Facebook: Progress Energy Florida. Call (800) 228-8485 with outage reports, or use the Progress Energy outage reporting tool to report online. Map below shows damage due to Tropical Storm Debby; it is updated 4 times a day. Progress Energy merged with Duke Energy in 2012; though the Twitter account is still listed on the Duke Energy site, it appears to be down. Twitter: @dukeenergy, @dukeenergystorm.

Florida (Orlando). Orlando Utilities Commission Outage Map. If you need to report an outage, call the Emergency Service Hotline at 407.823.9150 (24 hours) in Orlando/Orange County and 407.892.2210 (24 hours) in St. Cloud/Osceola County.

Florida (Northern, Gainesville). Clay Electric Cooperative Outage Map. A Touchstone Energy Cooperative. Outage map shows diamond-shaped outage grid, plus a per-county report showing the percentage of customers out in each area. Call 1-888-434-9844 to report an outage.

Florida (Jacksonville). JEA Outage Info. To report a problem, call (800) 683-5542. Twitter: @NewsfromJEA. News media coverage includes WOKV News/Talk 690 AM (Facebook), WOKV.COM, and the Florida Times-Union newspaper at Jacksonville.com.

Florida (Tampa). Tampa Electric Outage Map. Tampa Electric is part of TECO. Call 877-588-1010.

Florida (Pensacola to Panama City). Gulf Power, a Southern Company. Facebook: Gulf Power Company; used in times of emergency to distribute updates from their Distribution Control Center. New map: Gulf Power Outage Center. Updated October 4, 2013.

Florida (Panhandle). Talquin Electric Cooperative Outage Status Map. Facebook: Talquin Electric Cooperative. "Powered by DataVoice". Updated October 4, 2013.

Florida (Gainesville). Gainesville Regional Utilities (GRU). No maps. Twitter: @GRUStormCentral. Call 352-334-3434.

Georgia (Atlanta, statewide). Georgia Power Outage Center. Twitter: @georgiapower. Call 888-891-0938 to report an outage. Southern Company service area map. Georgia Power uses NaviGate from Gatekeeper Systems for visualization of their distribution network (case study). New: Georgia Power Outage Map. Map credit: iFactor.

Georgia (NE of Atlanta, Athens). Jackson EMC Outage Map. Map hosted by Sienatech.

Georgia (NW of Atlanta, Cobb County). GreyStone Power Outage Status Map. Map powered by dataVoice. Unusually, this map reports outages by substation as well as by city and county.

Georgia (NE of Atlanta). Sawnee EMC Current Outages. The Twitter account @SawneeAssist is protected. A mobile outage map is set up for smart phones and tablets. Service area map.

Hawaii. Hawaiian Electric Company (HECO). No maps.

Idaho. Idaho Power Service Area Map (no outage information). Follow @idahopower on Twitter or the Idaho Power Facebook page for information during severe power outage incidents.

Illinois. Ameren Illinois Outage Center; Ameren Service Territory Map (includes Missouri).

Illinois (Chicago area, northeast): ComEd Outage Map. Call 1-800-Edison-1 to report a downed power line. For News Media Inquiries, contact ComEd Media Relations at +1 312 394 3500. ComEd is an Exelon company. Exelon Corporation: Contact Us.

Indiana. Duke Energy Current Outage Map. If the power goes out, call 1-800-343-3525. [Updated 10/27/12]

Indiana (northwest). Indiana Michigan Power Outages and Problems map.

Indiana (north). NIPSCO Current Power Outages.

Indiana (Indianapolis). Indianapolis Power and Light Company Outage Map. If you are currently experiencing an outage, please call 317-261-8111.

Iowa. Iowa Association of Electric Cooperatives Outages.

Iowa. MidAmerican Energy MEC Outage Watch.

Kansas (Kansas City). Kansas City Power and Light Powerwatch. Powered by Obvient Strategies.

Kansas (Topeka, Wichita). Westar Energy Outage Map.

Kentucky (Louisville, statewide). LG&E / KU / ODP Outage Map.

Kentucky (eastern). Kentucky Power Outages and Problems.

Kentucky (Lexington). Blue Grass Energy Outage Viewer. To report a power outage please call 1-888-655-4243.

Louisiana. CLECO Current Power Outage Information. Map shows overlay of weather radar, with Hurricane Isaac of 2012 displayed.

Louisiana (Lafayette). SLEMCO Current Outages.

Louisiana (New Orleans). Entergy Storm Center. This screen shows outages reported just after Hurricane Isaac made landfall in 2012. Mobile access via the Entergy App (iPhone).

Louisiana (Slidell). Washington - St Tammany Electric Cooperative Storm Center. Detail view shown; note that the outage reporting lets you gather detail down to the substation and feeder level. A Touchstone Energy partner.

Louisiana (Shreveport): SWEPCO Outages and Problems.

Maine. Transmission and distribution map for utilities statewide, from the Maine Public Utilities Commission. [Updated 11/2/14]

Maine (Augusta): Central Maine Power outage reporting (no maps). CMP Service area map (PDF). To report an outage, call 1-800-696-1000. Twitter: @CMPCo [Updated 11/2/14]

Maine. Bangor Hydro and Maine Public Service merged in January 2014 to form Emera Maine. The Emera Maine outage map also includes detail on every single outage in the service territory, and a total count of outages system wide. Contact 1-855-EMERA-11 for more info. Twitter: @EmeraME. [Updated 11/2/14].

Maine (Bangor): Bangor Hydro outage map. Merged with Maine Public Service to form Emera Maine. [Updated 3/12/14]. Old service territory map.

Maryland. Electric utility service area map, as of 2008; from PPRP.

Maryland (Baltimore area, BWI): Baltimore Gas and Electric power outage map. Power Out or Downed Wire? Call 877-778-2222. Media only: 888.232.1919. An Exelon Company. [Updated 3/12/14]

Maryland (Delmarva): Delmarva Power Outage Map. (See listing under Delaware). Download a mobile app from Delmarva Power. [Updated 3/12/14].

Maryland: Allegheny Power, A FirstEnergy Corporation. Allegheny Power Outages Map.

Maryland (Delmarva): Choptank Electric Cooperative Outages. Choptank serves 9 counties on Maryland's Eastern Shore: Caroline, Cecil, Dorchester, Kent, Queen Anne's, Somerset, Talbot, Wicomico, Worcester. [Updated 3/12/14].

Maryland (DC suburbs): Pepco StormCenter. KML: Pepco Thematic Areas Map. Stay away from downed wires, call 1-877-737-2662 to report an outage. Media relations (reporters only): 202/872-2680 Twitter: @PepcoConnect 8a-5p M-F. [Updated 3/12/14].

Maryland (Southern): Southern Maryland Electric Cooperative. SMECO Outage Map. Report an outage: 1-877-747-6326. [Updated 3/12/14].

Massachusetts (Mass. Electric): National Grid Outage Map.

Massachusetts (WMECO, Springfield, Amherst). Western Massachusetts Electric Outage Map. WMECO delivers power to more than 200,000 customers in 59 towns in western MA. Twitter: @WMECO, monitored during business hours. A Northeast Utilities company. A subscriber has built their own WMECO service outage page suitable for mobile phones.

Massachusetts (Cape Cod, Boston): NSTAR Outage Map. For mobile devices or low bandwidth: NSTAR Outages. Call to report a power outage. 1-800-592-2000. Twitter: @NSTAR_News. [Added 10/29/12]

Michigan (statewide). Michigan Public Service Commission. Statewide energy regulator. Michigan Electric Utility Service Area Map.

Michigan (Central Upper Peninsula, Ishpeming, Negaunee, Escanaba, Houghton, Hancock): Upper Peninsula Power Company Current Electric Outages.

Michigan (City of Detroit municipal system). Detroit Department of Public Lighting (no maps). Contact (313) 267-7202.

Michigan (Detroit metro area, DTW). DTE Energy Outage Map (interactive), DTE Zip Code Outage Map (PDF). iPhone users can download the DTE iPhone outage tracker app for free. Twitter: @DTE_Energy. Call 800-477-4747. Media relations: 313-235-5555.

Michigan (central, western, northern Lower Peninsula). Consumers Energy: Report an Electric Outage (no outage maps). Electric and Gas Service Territories. If you are news media: Consumers Energy Media Contacts. Twitter: @consumersenergy.

Michigan (southwest). Indiana Michigan Power Outage Map.

Minnesota (statewide). Minnesota Geospatial Information Office Public Utilities Infrastructure Information for Minnesota. Electric, natural gas, water and sewer maps. Detailed statewide electric utility service area boundaries are not currently available. A detailed Minnesota electric transmission lines and substations map from 2007 shows service of 60 kV or larger; this map is an excerpt.

Minnesota (Duluth). Minnesota Power Outage Map.

Minnesota (Northeast). Lake Country Power Outage Viewer.

Mississippi (Western). Entergy Mississippi Storm Center. Mobile access via the Entergy App (iPhone). Facebook: Entergy Mississippi. Twitter: @entergyms. For storm alerts, text REG to 368374. To report a power outage, call 1-800-9OUTAGE. Entergy also serves adjacent Louisiana, Arkansas, and Texas customers with outage reporting on the same map.

Mississippi. Mississippi Power. Facebook: Mississippi Power. If you lose power, call 1-800-ITS-DARK (1-800-487-3275). Map: Mississippi Power Outage Map. Updated October 4, 2013.

Mississippi. Coast Electric Power Association (no maps). Report an outage: 877-7MY-CEPA. Facebook: Coast Electric. Serves 77,000 customers in southern Mississippi.

Missouri (Kansas City, Independence). Kansas City Power and Light Powerwatch. "Powered by Obvient Strategies".

Missouri (St Louis area): Ameren Power Outage Map; Ameren Service Territory Map.

Missouri (west of St Louis): Cuivre River Electric Outage Viewer. Please call 1-800-392-3709 to report an outage. Smart phone link.

Missouri (Southwest, Joplin area): Empire District Outage Map.

Montana. Northwestern Energy. (No maps.) In June 2011, a fawn dropped by an eagle cut power to a neighborhood in East Missoula, affecting about 30 homes (Reuters story).

Nebraska. Nebraska Public Power District Storm Center Outage Map. Twitter: @NPPDstormcenter. If you have an outage and get your electric bill from NPPD, call us at (toll free): 1-877-ASK-NPPD (275-6773).

Nebraska. Omaha Public Power District Power Outage Map. Outages are reported on a grid, either as absolute numbers or as % of total subscribers (shown).

Nevada. NV Energy (no maps).

New Hampshire. New Hampshire Electric Co-op Outage Map. About 78,500 customers served.

New Hampshire. Public Service of New Hampshire Outage Map. Mobile site: PSNH Outage List. Call to report a power outage. 1-800-662-7764. Twitter: @PSNH

New Hampshire (Capital, Seacoast regions). Unitil Energy Outage Information (no maps). Twitter: @Unitil.

New Jersey (northern). Orange and Rockland Storm Center.

New Jersey (Jersey Central Power and Light). FirstEnergy Current System Outage Map. Phone 1-888-544-4877.

New Jersey (Bergen, Essex, Hudson, Mercer, Middlesex, Passaic, Somerset and Union counties): PSE&G Outage Center. PSE&G, NJ's largest utility, serves 2.1 million electric customers and 1.7 million gas customers. Call 800-436-PSEG. Twitter: @PSEGdelivers. [Updated 10/29/12]

New Jersey (Atlantic City, southern). Atlantic City Electric Outage Map. KML: Atlantic City Electric Thematic Areas Map. If you see a downed line, call 800-833-7476. Twitter: @ACElecConnect

New Mexico. El Paso Electric Trouble and Outages (no maps).

New Mexico (Albuquerque). PNM Power Outage Map. Call 1-888-DIAL-PNM (888-342-5766) to report your outage. This map is from the windstorms of December 1, 2011.

New York (statewide). New York Independent System Operator. Wholesale power coordination across New York State.

New York/New Jersey (New York City area). NYCPowerStatus.com has consolidated information from Con Edison, LIPA, and PSEG, with graphs that chart system outage and restoration.

New York (New York City). Con Edison Storm Center. KML: ConEdison Power Outages Thematic Areas Map.

New York (Long Island). Long Island Power Authority Storm Center Outage Map.

New York (Poughkeepsie, Kingston). Central Hudson Storm Center, powered by iFactor.

New York (Buffalo, upstate). National Grid Outage Map.

New York (Upstate). NYSEG Outage Central. No maps, but detailed city by city and outage by outage information in table format. Call 1.800.572.1131. Twitter: @NYSEandG.

New York (Rochester). RG&E Outage Central. No maps. Outages are reported by city and by street.

New York (Rochester). RGE Outages plots the approximate locations of power outages in Rochester, New York, and is updated every ten minutes. The source data for this map is published by RG&E, but all map-related blame should go to Ryan Tucker. You can find the source code on GitHub.

North Carolina (Charlotte, Greensboro). Duke Energy Current Outages. Call 1-800-POWERON to report outages and get an estimated time of restoration. Spanish-speaking customers can call 1-866-4-APAGON. Media contact line: 800-559-3853 or 704-382-8333 for news media only.

North Carolina (Raleigh/Durham, Asheville). Progress Energy Outage Map. An option on the map shows an outage history with restoration progress, as well as a breakdown of outages by county. KML: Progress Energy Thematic Areas Map. Powered by iFactor. Progress Energy also serves a part of eastern South Carolina.

North Dakota (Fargo). Xcel Energy Outage Map.

Ohio (statewide). Electric industry maps of Ohio, provided by the Public Utilities Commission of Ohio.

Ohio (City of Cleveland): Cleveland Public Power Outage Information (no maps). In the event of an outage, call 216-664-3156. Dial 811 before digging. CPP serves much of the city of Cleveland.

Ohio: (northern, Toledo, Cleveland suburbs, Youngstown): FirstEnergy Current System Outage Map. Consolidated map for Toledo Edison, Ohio Edison, and Illuminating Company. Media contacts for news media only.

Ohio: (southeast, Columbus, Findlay, Athens, Canton, Chillicothe): AEP Ohio Outages.

Ohio (south central). South Central Power Company Outage Map.

Ohio: (Cincinnati): Duke Energy Power Outage Map

Ohio (Dayton): Dayton Power and Light Outage Map.

Oklahoma (southwestern): Cotton Electric Cooperative Outage Map.

Oklahoma (Tulsa, Lawton, McAlester): PSO Outages and Problems map. Public Service Company of Oklahoma, a unit of AEP, serves over 530,000 customers in 45 Oklahoma counties. Twitter: @publicserviceco

Oklahoma (OKC): OG&E System Watch map. Includes graphs of outages by hour of day and recent historical outage counts, plus a weather overlay. Updated every 15 minutes on the quarter hour. Twitter: @OGandE

Oregon (Portland, Beaverton, Salem). Portland General Electric Outage Map.

Pennsylvania (statewide). Pennsylvania Public Utility Commission. Regulation and reporting on statewide energy utilities, with jurisdiction over 11 electric utility companies that serve most of the commonwealth.

Pennsylvania. Citizens Electric of Lewisburg outage map.

Pennsylvania (Pittsburgh). Duquesne Light Company Storm Center. (no maps) During storms, the Twitter account @DuquesneLight is updated. Area codes: 412, 878.

Pennsylvania (Philadelphia). PECO outage map. Call 800-841-4141 to report an outage. PECO is an Exelon company. Area codes: 215, 267.

Pennsylvania (Penn Power, Penelec, Met-Ed): FirstEnergy Current System Outage Map - Pennsylvania.

Pennsylvania (suburban Pittsburgh, State College). Allegheny Energy Outage Status. Call 1-800-255-3443 to report an outage.

Pennsylvania (PPL; eastern). PPL Outage Map. PPL service territory map. [Updated 11/2/12]

Pennsylvania: Northwestern REC Outage Web Map.

Rhode Island. National Grid outage map. Table of Rhode Island power outages (text, data from National Grid, hosted by WPRI).

South Carolina. Duke Energy Outage Map. Duke Energy Service Area Map.

South Carolina (Columbia, coastal). SCE&G Outage Map. Call 800-251-7234. Twitter: @scegnews

South Carolina (eastern, coastal). Progress Energy outage map. An option on the map shows an outage history with restoration progress, as well as a breakdown of outages by county. KML: Progress Energy Thematic Areas Map. Powered by iFactor. Progress Energy also serves a part of North Carolina. Progress Energy has been acquired by Duke Energy.

South Dakota. Xcel Energy. Call 1-800-895-1999. Xcel Energy Outage Map.

South Dakota. NorthWestern Energy (no maps). Call (800) 245-6977.

South Dakota (Rapid City). Black Hills Power Current Outages. Call 1-800-839-8197 to report an outage.

South Dakota. South Dakota Rural Electric Association Outages. Consolidated mapping, includes Black Hills Electric Coop, Butte Electric Coop, Grand Electric Coop, West River Electric Association, and 20+ other cooperatives.

Tennessee (statewide). Tennessee Valley Authority (no outage maps). A regional map (PDF) shows TVA's distribution to local power companies.

Tennessee (Memphis). MLGW Electric Outage Summary Map. First map I've seen that does outage accounting on a grid rather than by political boundaries, zip codes or internal subdivisions. Twitter: @MLGW.

Tennessee (Nashville). Nashville Electric Service (NES) Outage Map. Mobile version: Estimated customers without power. Facebook: Nashville Electric Service. Customer service: (615) 736-6900.

Tennessee (southern suburbs of Nashville): Middle Tennessee Electric Membership Corporation Outages. Report an outage through MTEMC's automated outage reporting line at 1-877-777-9111.

Texas: ERCOT grid reliability; aggregate demand, pricing. No maps.

Texas: San Bernard Electric Coop (Columbus, Hempstead, Sealy; west of Houston) http://66.63.235.29/Outages/

Texas: Sam Houston Electric Cooperative Storm Central. Call 1-888-444-1207 to report an outage.

Texas: CPS Energy Outage Center (San Antonio)

Texas: AEPTexas.com https://www.aeptexas.com/outages/ Outages by zipcode at the county level.

Texas (Austin): Austin Energy Storm Center.

Texas (southeast of Austin, including Bastrop): Bluebonnet Electric Outage Viewer. The map depicted shows damage to the system from the Bastrop County Complex Fire and was added on September 9, 2011.

Texas (El Paso): El Paso Electric Trouble and Outage (no maps). Call (915) 877-3400 to report an outage. News coverage from the El Paso Times.

Texas (Dallas/Fort Worth, Waco, Odessa, Midland): ONCOR Storm Center. Powered by iFactor, this map includes a weather overlay and detailed zip code and county level outage counts. Review at EPC Updates.

Texas (Houston): CenterPoint Energy Outage Tracker.

Texas: Texas New Mexico Power Service Areas. (no outage map)

Texas (Denton County): CoServ. Map includes weather overlay, powered by iFactor. Twitter: @CoServ_Energy (Updated May 7, 2015.)

Utah. Division of Public Utilities. Map of consumer owned electric utilities.

Utah. Rocky Mountain Power Outages (no map). To report an outage or if you do not see your outage listed and would like an update, call us anytime toll free at 1-877-508-5088. "To improve service to you, we are working to enhance our online outage information to include interactive maps in the future." (December 2011)

Vermont. Vermont Outages. Consolidated information from 20 utilities. The map depicts damage after Hurricane Irene caused widespread flooding.

Vermont. Central Vermont Public Service Outage Map. The map depicts outages as well as the CVPS service territory.

Virginia (State Corporation Commission). Virginia Electric Utility Electric Service Territories map (PDF).Virginia (Norfolk, Richmond, Fairfax). Dominion Electric Outage Map. Call 1-866-366-4357. A Dominion Electric power outage summary breaks down outages by service region. Twitter: @DomVAPower. Facebook: Dominion Virginia Power. The map depicts damage after widespread flooding and high winds from Hurricane Irene. News coverage: NBC12 (WWBT), Richmond Times-Dispatch. [Confirmed 3/12/14]

Virginia (Lynchburg, Roanoke): Appalachian Power Outages and Problems.

Virginia (Blue Ridge, Bowling Green, Culpeper). Rappahannock Electric Cooperative Outage Map. A Touchstone Energy Cooperative; technology by Lockheed Martin.

Virginia (Manassas, Loudoun, Fairfax, Prince William). NOVEC Outage Map. Facebook: NOVEC. Twitter: @NOVEC. [Updated 3/12/14]

Washington. The Washington Utilities and Transportation Commission regulates energy companies in the state. The Access Washington government portal has a comprehensive page of where to report a power outage to your Washington utility company, with over 35 company contact numbers listed.

Washington (Spokane, eastern Washington). Avista Power Outages. Avista serves over 350,000 customers. Call 1-800-227-9187 to report an outage; 24-Hour Media Line: 509-495-4174 for press inquiries only. Maps depict both outages and recent power restorations.

Washington (Seattle): Seattle City Light System Status. Seattle City Light provides power to nearly 1 million Seattle area residents. News release: New Outage Management System Enhances City Light Storm Response, November 2010. Outage Hotline: (206) 684-7400.

Washington. Puget Sound Energy. Call us for assistance any time of day at 1-888-225-5773. Puget Sound Energy serves more than 1.2 million electric & gas customers in Washington State, in Island, Jefferson, Kitsap, King, Pierce, Skagit, Thurston, Whatcom and Kittitas counties. A service alert map is activated only when there are major outages. Twitter: @PSEtalk. Facebook: pugetsoundenergy. The map shown depicts the January 2012 snowstorm.

Washington DC: Pepco StormCenter. KML: Pepco Thematic Areas Map. Stay away from downed wires; call 1-877-737-2662 to report an outage. Media relations (reporters only): 202/872-2680. Twitter: @PepcoConnect

West Virginia (northern). Allegheny Energy Outage Status. Call 1-800-255-3443 to report an outage.

West Virginia (Charleston): Appalachian Power Outage Map.

Wisconsin (Northeast: Green Bay, Waupaca, Stevens Point, Wausau, Antigo, Merrill, Rhinelander): Wisconsin Public Service Current Electric Outages. Two maps here; the first depicts damage from a November 9, 2011 snowstorm. WPS is a subsidiary of Integrys Energy Group. Twitter: @WPSstorm. To report an outage or down power line call 800-450-7240. Media: Integrys Media Contacts. Area media: WSAW (CBS 7).

Wisconsin (Milwaukee): WE Energies Outage Map

Wisconsin (Madison): Madison Gas and Electric Power Outages

Wyoming. Rocky Mountain Power Large-Scale Outage Information for Wyoming. Selective listing of outages affecting more than 500 customers; no maps. Contact: 1-877-508-5088.

Canada:

Alberta: AESO, Alberta Electric System Operator. AESO manages demand among Alberta utilities. Same day and near future records of system load; no maps.

Alberta (Northern, east central, east southern): ATCO Electric Outage Information. Twitter: @ATCOElectric.

Alberta (Edmonton). EPCOR. Twitter: @EPCOR. No maps.

Alberta. Fortis Alberta. The FortisAlberta Outage Map shows both scheduled and unscheduled outages. Twitter: @FortisAlberta. Updated August 1, 2014. Thanks to Bil Simser.

British Columbia: BC Hydro Power Outages Map. Call 1-888-POWERON (1-888-769-3766) to report an outage. RSS outage feeds show outage details broken down by municipality and region, available in standard RSS formats for automated use and updated every 15 minutes.
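For anyone wiring a feed like that into a script, here is a minimal Python sketch that pulls the item titles out of an RSS document. It assumes the standard RSS 2.0 `<channel>`/`<item>`/`<title>` layout; the sample feed and its entries are invented for illustration, and no real BC Hydro feed URL is assumed.

```python
import xml.etree.ElementTree as ET

# Invented sample in standard RSS 2.0 shape; a real feed would be fetched
# (for example with urllib.request) from the utility's published feed URL.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Sample outage feed</title>
    <item>
      <title>Burnaby: 120 customers affected</title>
      <description>Cause: tree on line</description>
    </item>
    <item>
      <title>Surrey: 45 customers affected</title>
      <description>Cause: under investigation</description>
    </item>
  </channel>
</rss>"""


def outage_titles(rss_xml: str) -> list:
    """Return the <title> text of every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    return [item.findtext("title", default="") for item in root.iter("item")]


if __name__ == "__main__":
    for title in outage_titles(SAMPLE_FEED):
        print(title)
```

Since the feeds are refreshed about every 15 minutes, polling more often than that gains nothing.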

New Brunswick: NB Power Current Power Outages. (no map) (updated 11/2/2014)

Newfoundland: Newfoundland Power Outages Map.

Nova Scotia: NS Power Outages is an independent outage tracking log, based on data and reports from the Nova Scotia Power Live Outage and Restoration Map.

Ontario: Hydro One Storm Centre. Call 1-800-434-1235 to Report a Power Problem.

Ontario (Guelph): Guelph Hydro Power Outage Map. Map overlay shows both power outages and recent lightning strikes. Powered by ArcGIS. Twitter: @GuelphHydro.

Ontario (Mississauga): Enersource Power Outage Map. To report outages, please call 905-273-9050.

Ontario (Ottawa): Hydro Ottawa Power Outage Map.

Ontario (Toronto): Toronto Hydro Power Outage Map. To report a power outage, please call 416-542-8000. Twitter: @TorontoHydro. Facebook: TorontoHydro.

Prince Edward Island. Maritime Electric, a Fortis Company. No maps. Call 1-800-670-1012.

Quebec. Hydro Quebec Power Outage Information (no maps). A table of Hydro Quebec power outages is provided (in French only) with detail to service interruption locations.

Saskatchewan. SaskPower Outages (no maps).

Australia (SE Queensland). Energex. Energex Current Interruptions (no maps).

Bangladesh. Power Grid Company of Bangladesh, Ltd (no maps). Hourly generation and load shedding table shows unmet demand. (Updated 11/2/2014).

Kenya. Kenya Power. Facebook: @KenyaPowerLtd. Twitter: @KenyaPower.

Mexico. CFE (Comisión Federal de Electricidad) (no maps). Twitter: @CFEmx

New Zealand: Orion New Zealand Outages. Twitter: @OrionNZ

South Africa: Eskom (no maps). When demand exceeds supply, Eskom does load shedding. Twitter: @Eskom_SA ; Twitter hashtag #eskom . [Updated 11/2/14]

Venezuela: Crisis Eléctrica Venezolana is a weblog by Jose Aller in Venezuela, who tracked the widespread power outages in that country in 2011. Map is based on tweets with the tag #sinluz.

Original content by Edward Vielmetti.

-

Automatically Publishing NuGet packages from GitHub

About an hour ago I didn’t have a MyGet account (although I knew about the service) but did have a repository on GitHub with a package that I was manually updating and pushing to NuGet. Thanks to a recommendation from Phil Haack and an hour of messing around with some files I now have a push to GitHub updating my NuGet package with a click of a button. Dead simple. Read on to get your own setup working.

I’m currently in the midst of a reboot of Terrarium, a .NET learning tool that lets you build creatures that survive in an online ecosystem. More on that later, but right now I’ve set up a NuGet package that you add to your own creation to get all the functionality of a creature in Terrarium. The problem was that I was manually editing a batch file every time I built a new version and pushing that build up to NuGet.

This is 2014. There must be a better way. We have the technology. We have the capability to make this process easy.

Thanks to a few tweets and one suggestion from Phil Haack I checked out MyGet. At first it didn’t look like what I wanted. I didn’t want package hosting; I wanted to build my package from GitHub and publish it to NuGet. After looking at a few pages I realized that MyGet was really a perfect way to set up “test” packages: packages I could push and push and push and never make public, then, with the click of a button, publish the version from MyGet upstream to NuGet. In addition to a few pages of documentation on MyGet, I stumbled over Xavier Decoster’s blog post on how he does it with his ReSharper Razor extension. The post was good and had enough steps to get me going. The MyGet docs are there, but the screenshots and some of the steps are a little obscure (and didn’t always match what I was seeing in my browser), so hopefully this post will clear things up and give you a detailed step-by-step on how to do this (with the current version of MyGet; it may change in the future).

What You’ll Need

You’re going to need a few things set up first for this to work:

- A GitHub repository. BitBucket is also supported as well as others but I’ll document GitHub here. Check out the MyGet documentation on what they support.

- A MyGet account. I hadn’t signed up for one until about an hour ago so this is easy (and free!)

- A NuGet account.

- A .nuspec file for your project (we’ll create it in this post)

- A batch file to build your package (again, we’ll create it later)

MyGet Feed

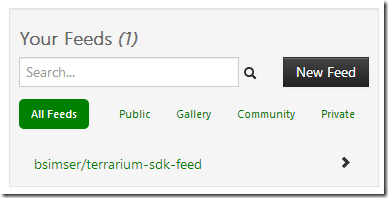

You’re going to need to create a MyGet feed before anything else. Once you have an account you’ll add a feed. There are various places on MyGet to add one (and I think once you verify your account you’re thrown into the screen to create one). In the top login bar next to your name you’ll see a file icon that says “New Feed”.

There’s also a “New Feed” button in the Your Feeds box on your activity stream page.

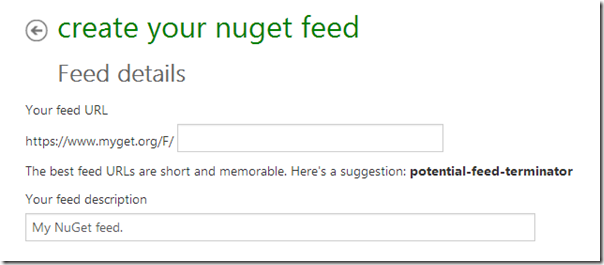

Once you click it you’ll be taken to a page where you enter the details of your new feed.

Enter a name for the feed. The first part of the URL is already filled in for you, but you can specify whatever you want for the rest. This is the URL you’ll enter into the Package Manager to consume your feed. You can also enter a description here.

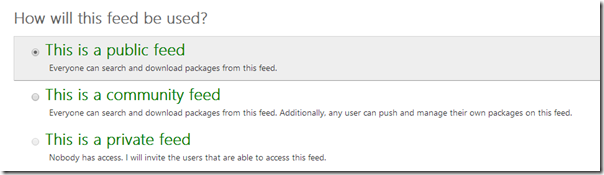

Next you get to determine how your feed is to be used. By default it’s public but you can also create a community feed (where anyone can push and manage their own packages on the feed).

With a paid account you can also have a private feed where you invite users to access it.

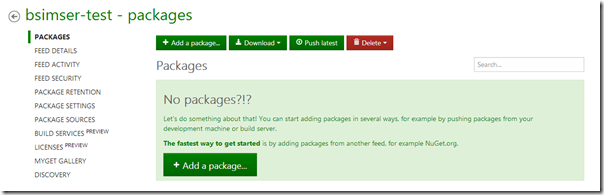

Click the Create feed button to finish the setup. Once you create the feed you’ll be dropped onto the main page of the feed, where you can select various settings to configure it. By default there’s nothing there, so let’s build up our package.

Getting Your Code

First thing you want to do is add a Build Service. A build service is a mechanism to tell MyGet how to build your project. This will clone your GitHub repository on MyGet and build your package. Detailed information about the Build Services can be found here.

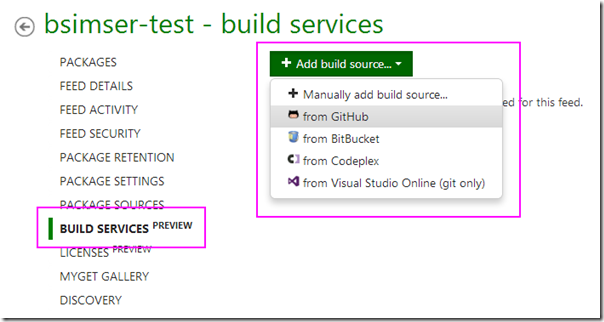

Click on Build Services then click on the Add build source button. This will allow you to select a build source where you’ll pull your code from.

Here you can see you can add a GitHub repository, BitBucket, CodePlex, or Visual Studio Online service (or you can add one manually).

Choose GitHub and you’ll be asked to authorize MyGet to interact with GitHub (along with maybe signing into GitHub). Once you authorize it you’ll be shown a list of repositories on GitHub you can select from. Choose the one you’re going to build the package from by clicking on the Link? checkbox and click Add (some repositories below are obscured but yours won’t be).

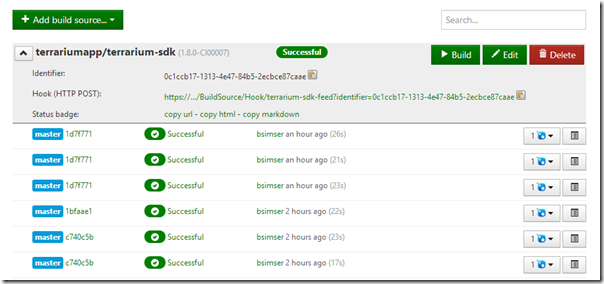

Once you add the build source you have the information you need to set up the GitHub webhook. Expand the build source to show the information MyGet created for it and you’ll see something like this:

Initially you won’t have any builds but this is where you’ll see those builds and their status. What’s important here is the Hook url. This is the value we’re going to use on GitHub to trigger MyGet to fetch the source when a push occurs (and build the package).

Copy the Hook url (there’s a small icon you can click on to copy it to your clipboard, typing is for weenies) and head over to your GitHub project you selected for the build source.

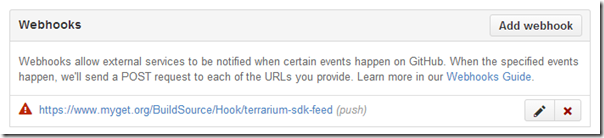

Webhooks

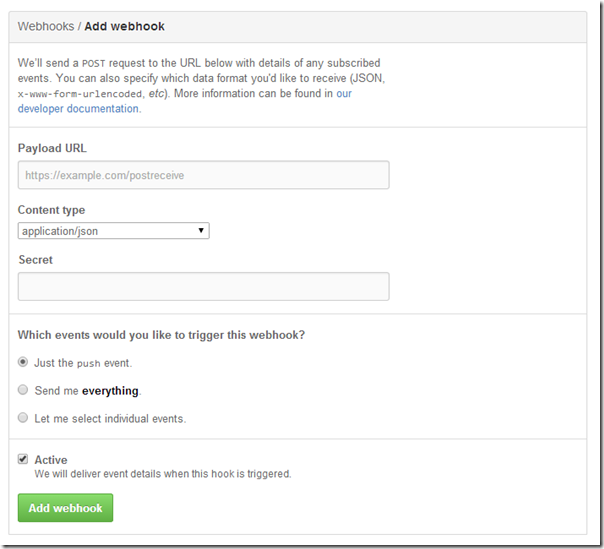

On GitHub in your repository go to Settings then click on Webhooks and Services. Click on Add webhook to show this dialog:

In the Payload URL paste the Hook URL that you got from MyGet. Leave the Content type as application/json and the radio button trigger to just work off the push event then click Add webhook.

This adds the webhook to your GitHub repository, which will trigger MyGet to do its stuff on every push to the repo.
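MyGet does the receiving for you, but it can help to see what arrives at the Hook URL. GitHub names the event in an `X-GitHub-Event` header and, for a push, sends a JSON body whose `ref` field looks like `refs/heads/master`. The sketch below (plain Python, no web framework, function name hypothetical) shows the kind of filtering a receiver might do; it is not MyGet's implementation. GitHub also sends a `ping` event when a webhook is first created, which this sketch ignores.

```python
import json
from typing import Optional

BRANCH_PREFIX = "refs/heads/"


def branch_from_push(event_name: str, body: str) -> Optional[str]:
    """Return the pushed branch name, or None if this delivery isn't a branch push.

    event_name is the value of the X-GitHub-Event header; body is the raw
    application/json payload.
    """
    if event_name != "push":
        return None  # e.g. the "ping" delivery GitHub sends on creation
    payload = json.loads(body)
    ref = payload.get("ref", "")
    if not ref.startswith(BRANCH_PREFIX):
        return None  # tag pushes use refs/tags/...
    return ref[len(BRANCH_PREFIX):]


if __name__ == "__main__":
    print(branch_from_push("push", '{"ref": "refs/heads/master"}'))
```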

Building

Now it’s time to set up your package creation in GitHub and create a package. Remember, MyGet is going to be doing the fetching of the source code from GitHub and compiling and packaging. The trigger will be when you push a change to GitHub (you can also trigger the build manually from MyGet if you need to).

By default MyGet will search for a variety of things to try to figure out what to do with your codebase once it gets it. While it does look for .sln files and .csproj files (and even .nuspec files) I prefer to be specific and tell it what to do. The build service will initially look for a build.bat (or build.cmd or build.ps1) file and run it so let’s give it one to follow.
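The lookup described above amounts to "take the first build script found in the repository root". Here's a small Python sketch of that rule. The order build.bat, build.cmd, build.ps1 is simply the order the names are mentioned here, an assumption rather than MyGet's documented precedence, and the matching ignores case the way Windows file names do.

```python
from typing import Iterable, Optional

# Assumed precedence; check MyGet's build services docs for the real order
# and for its fallbacks (.sln, .csproj, .nuspec) when no script is found.
BUILD_SCRIPT_NAMES = ("build.bat", "build.cmd", "build.ps1")


def choose_build_script(root_file_names: Iterable[str]) -> Optional[str]:
    """Pick the build script to run from the file names in the repo root."""
    present = {name.lower() for name in root_file_names}
    for candidate in BUILD_SCRIPT_NAMES:
        if candidate in present:
            return candidate
    return None  # no script: a build service would fall back to other detection


if __name__ == "__main__":
    print(choose_build_script(["README.md", "build.ps1", "Build.bat"]))
```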

Xavier provides one in his RazorExtensions repository so I (mostly) copied that but he’s doing a few things to support his plugin. Here’s a more generic build.bat you can create that should be fine for your project:

The build.bat is in this gist: https://gist.github.com/8c5d08f0f5fc0b7626d0

- Lines 2-5: These lines set up the configuration we’re going to use. This would be “Release” or “Debug” or whatever you have defined in your solution. If it’s blank it will use “Release”.

- Lines 7-10: This sets up the PackageVersion value that will be passed on to NuGet when creating the package. Again, if it’s blank it will use 1.0.0 as the default.

- Lines 12-15: Here we’re setting the nuget executable. If you have a specific one in your solution you can use it; otherwise it’ll just use the one available on MyGet (which is always the latest version).

- Line 17: This is the msbuild command to build your solution file. My solution file is in a subfolder called “src” so I specify it that way; yours might be at the root, so adjust accordingly. The %config% parameter is used here to select the build configuration from the solution file. The other values are for diagnostic purposes to provide information to MyGet (and you) in case the build fails.

- Lines 19-21: Here I’m just setting up the output directories for NuGet. All packages will get put into a folder called “Build” and follow the standard naming for NuGet packages. Right now I just have a .NET 4.0 library but other files may come later. Build up your package folder structure accordingly (the docs on NuGet describe it here).

- Line 23: Finally we build the NuGet package, specifying our .nuspec file (again, mine is in a subfolder called “src”) and the version number. The “-o Build” option tells NuGet to write the package to the Build directory.
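Since the gist itself isn't reproduced here, the following Python sketch restates the logic those bullets describe: fall back to Release and 1.0.0 when the values are blank, then assemble the msbuild and nuget pack command lines. The environment variable names (`Configuration`, `PackageVersion`, `NuGet`), the file paths, and the extra msbuild switches are assumptions for illustration, not a copy of the original build.bat; the sketch only builds the argument lists and doesn't run anything.

```python
import os
from typing import List, Mapping, Tuple


def resolve_settings(env: Mapping[str, str]) -> Tuple[str, str, str]:
    """Apply the defaults: blank or missing values fall back like the batch file."""
    config = env.get("Configuration") or "Release"
    version = env.get("PackageVersion") or "1.0.0"
    nuget = env.get("NuGet") or "nuget"  # assumed name for a supplied nuget.exe path
    return config, version, nuget


def build_commands(env: Mapping[str, str], solution: str, nuspec: str,
                   output_dir: str = "Build") -> Tuple[List[str], List[str]]:
    config, version, nuget = resolve_settings(env)
    msbuild = [
        "msbuild", solution,
        f"/p:Configuration={config}",
        "/m",         # build projects in parallel
        "/v:M",       # minimal console verbosity
        "/nr:false",  # don't leave msbuild nodes running afterwards
    ]
    pack = [
        nuget, "pack", nuspec,
        "-Version", version,
        "-OutputDirectory", output_dir,
        "-Properties", f"Configuration={config}",  # feeds the $configuration$ token
    ]
    return msbuild, pack


if __name__ == "__main__":
    # Hypothetical paths; adjust to where your .sln and .nuspec live.
    for cmd in build_commands(os.environ, r"src\MySolution.sln", r"src\MyPackage.nuspec"):
        print(" ".join(cmd))
```

Keeping the command lines as lists makes them easy to hand to `subprocess.run` if you ever wanted to drive the build from Python instead of a batch file.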

Okay, now we have a build.bat file that MyGet will run once it grabs the latest code from GitHub. Commit that (but don’t push yet, we’re not ready). Next up is to build the .nuspec file that will tell NuGet what’s in our package. You can create the .nuspec file manually or use NuGet to scaffold it for you (docs here). There are also some GUI tools and whatnot to build it, so choose your weapon.

Here’s my .nuspec for the Terrarium SDK:

The .nuspec is in this gist: https://gist.github.com/37115a0d4d3558d41b7b

Pretty typical for a .nuspec file and pretty simple. It contains a single file, the .dll, that’s in the “lib/net40” folder. A few things to note:

- My assembly is in the project directory but the .nuspec file is in the “src” folder. Paths in the package contents are relative to where the .nuspec file is, not where the system is running it from (which is always the root with MyGet).

- We use the $version$ and $configuration$ tokens to replace paths and names here. These are set in the batch file prior to invoking NuGet.
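To make the token substitution concrete, here's a Python sketch that expands `$name$` tokens in a .nuspec from a dictionary of properties, roughly what NuGet does with the values it is given on the command line. The package id, file path, and case-insensitive matching are assumptions for illustration; NuGet's own handling has more rules than this.

```python
import re
from typing import Mapping

# Hypothetical .nuspec using the same two tokens described above.
NUSPEC_TEMPLATE = r"""<?xml version="1.0"?>
<package>
  <metadata>
    <id>Example.Sdk</id>
    <version>$version$</version>
    <authors>example</authors>
    <description>Hypothetical package for illustration.</description>
  </metadata>
  <files>
    <file src="Example.Sdk\bin\$configuration$\Example.Sdk.dll" target="lib\net40" />
  </files>
</package>"""

TOKEN = re.compile(r"\$(\w+)\$")


def replace_tokens(text: str, properties: Mapping[str, str]) -> str:
    """Replace each $name$ token with its property value (names matched case-insensitively)."""
    lookup = {key.lower(): value for key, value in properties.items()}

    def substitute(match):
        name = match.group(1)
        if name.lower() not in lookup:
            raise KeyError(f"no value supplied for token ${name}$")
        return lookup[name.lower()]

    return TOKEN.sub(substitute, text)


if __name__ == "__main__":
    print(replace_tokens(NUSPEC_TEMPLATE, {"version": "1.9.0", "Configuration": "Release"}))
```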

Okay, now we’ve got the .nuspec to define our package, the source code wired up to notify MyGet, MyGet to get it on a push, and a batch file to create our package.

Push the code changes into GitHub and sit back. You can go to your Build Services page on MyGet for your feed, expand the chevron next to your feed name, and watch an automatically updated display pull your code down and create your package.

If things go wrong feel free to ping me and I can try to help, but realize that everyone’s setup is different. Check the build log for immediate errors. When MyGet fetches your repository it’ll clone it into a temporary directory and then start looking for build.bat and other files to invoke. That’s why we want to put our build.bat file in our root (mine isn’t but I’ll probably change that at some point) so we can get this party started.

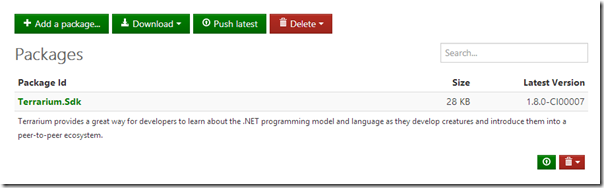

A Package Is Born

Once you have a successful build you’ll see your new package under the Packages section.

Cool. You can click on the Package Id to see more detailed information about the package and also manage old releases. Unlike NuGet, MyGet lets you delete packages rather than just unlist them.

One thing to note here is the version number. Where did that come from? In the screenshot above my version number is 1.8.0-CI0007.

MyGet follows Semantic Versioning (which is a good thing). I recommend you give it a quick read and consider adopting it. It’s easy and lets people know when things are potentially going to break (if you follow the 3 simple rules about major, minor, and patch versions). Semantic Versioning lets you append a label of your choosing after the patch number (pre-release, beta, etc.).
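As a quick illustration of how those rules order versions, here's a small Python comparison function following the Semantic Versioning 2.0.0 precedence rules: compare major.minor.patch numerically, rank a pre-release below the plain release, then compare the dot-separated pre-release identifiers. It's a sketch that doesn't reject every invalid version string, and NuGet's own comparer may differ in edge cases.

```python
import re
from typing import List, Tuple

SEMVER = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:-([0-9A-Za-z.-]+))?(?:\+[0-9A-Za-z.-]+)?$")


def parse(version: str) -> Tuple[Tuple[int, int, int], List[str]]:
    """Split a version into its numeric core and its pre-release identifiers."""
    m = SEMVER.match(version)
    if not m:
        raise ValueError(f"not a semantic version: {version!r}")
    major, minor, patch, pre = m.groups()
    return (int(major), int(minor), int(patch)), (pre.split(".") if pre else [])


def _compare_identifiers(a: str, b: str) -> int:
    """Compare one pre-release identifier pair, returning -1, 0 or 1."""
    a_numeric, b_numeric = a.isdigit(), b.isdigit()
    if a_numeric and b_numeric:
        return (int(a) > int(b)) - (int(a) < int(b))
    if a_numeric != b_numeric:
        # numeric identifiers always rank below alphanumeric ones
        return -1 if a_numeric else 1
    # both alphanumeric: plain character-by-character ASCII ordering
    return (a > b) - (a < b)


def compare(v1: str, v2: str) -> int:
    """Return -1, 0 or 1 as v1 has lower, equal or higher precedence than v2."""
    core1, pre1 = parse(v1)
    core2, pre2 = parse(v2)
    if core1 != core2:
        return -1 if core1 < core2 else 1
    if not pre1 or not pre2:
        # a release outranks any pre-release of the same major.minor.patch
        return (not pre1) - (not pre2)
    for a, b in zip(pre1, pre2):
        result = _compare_identifiers(a, b)
        if result:
            return result
    # all shared identifiers equal: the longer list ranks higher
    return (len(pre1) > len(pre2)) - (len(pre1) < len(pre2))
```

Note that alphanumeric labels compare character by character, so `compare("1.0.0-CI10", "1.0.0-CI9")` returns -1 even though 10 > 9. Padding a build counter with zeros to a fixed width keeps CI builds in order.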

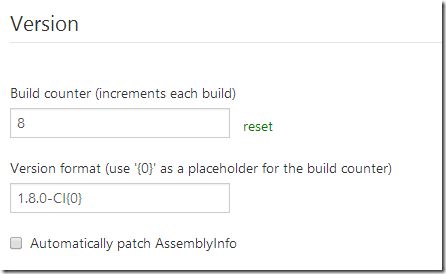

So about that build version. Go back to Build Services in MyGet and for your GitHub source you’ll see a button next to Build called Edit. Click it and it’ll bring up the details about the source that lets you configure how the code is fetched from GitHub.

Scroll down a bit and you’ll see a section called Version. Here you can set (or reset) a build counter. Each build on MyGet increments the counter so you know how many times the system was built (you can also use this to track what version is available, how far it’s drifted from source control, different builds in different environments, etc.). The version format field lets you enter your Semantic Versioning formatted value and use {0} as a placeholder for the counter. By default it's set to 1.0.0-CI{0}, meaning that your first build is going to be labeled 1.0.0-CI000001 (the counter is padded with leading zeros).

That’s where it came from, so anytime you need to bump the major, minor, or patch version just go here to change it. As for the extra bit, we’ll get to it shortly, but the default version works and won’t make its way into the “production” versions of our package.
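The version format is essentially a format string with the build counter dropped into `{0}`. Here's a Python sketch of that expansion; the six-digit zero padding matches the 1.0.0-CI000001 example above but is an assumption about MyGet's exact width, and the function name is hypothetical.

```python
def format_build_version(version_format: str, counter: int, width: int = 6) -> str:
    """Expand a MyGet-style format such as '1.0.0-CI{0}' with a zero-padded counter."""
    if counter < 0:
        raise ValueError("build counter must be non-negative")
    # str.format fills the {0} placeholder; zfill pads the counter with leading zeros.
    return version_format.format(str(counter).zfill(width))


if __name__ == "__main__":
    for build in (1, 2, 108):
        print(format_build_version("1.0.0-CI{0}", build))
```

Bumping a release then just means editing the 1.0.0 part of the format; the counter keeps counting builds.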

Consuming the Package

Now that we have a package we can add it to our package sources in Visual Studio and install it. Again this post is written from the perspective of using MyGet as your own personal repository where you can test out packages before releasing them to the public. While public packages are the default (and free) version on MyGet, you can keep pushing pre-release versions up to MyGet until you’re happy with it then “promote” it to NuGet.

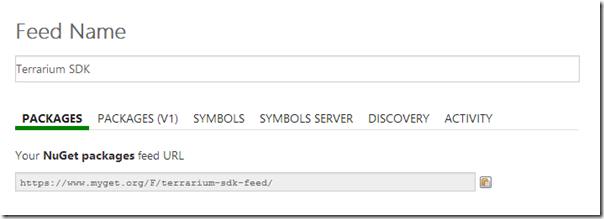

In MyGet click on your package and select Feed Details from the menu. You’ll see something like this:

This is your NuGet feed from MyGet for this package. Copy it (again with the handy icon) and head into Visual Studio.

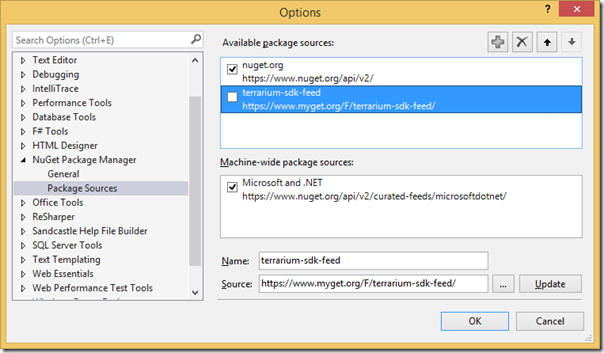

In the package manager settings add this as a package source. I just add it while I’m developing and testing the package and then disable it (so it doesn’t get confused with the “official” NuGet server). You can easily flip back and forth between packages this way.

I just name the MyGet feed after the package because it’s for testing. Activate the MyGet feed (and, to avoid confusion, disable the NuGet one) and then return to managing packages for your solution. Select your MyGet feed from the Online choices in NuGet to see your package.

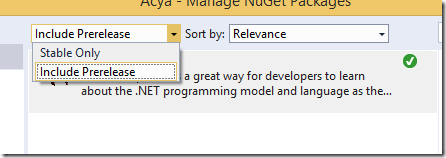

Remember that MyGet is (by default) building a pre-release version (as indicated by the -CI{0} in your version number). This means you need to select “Include Prerelease” in the dropdown in the Package Manager:

You should see your package with the same version as MyGet is showing.

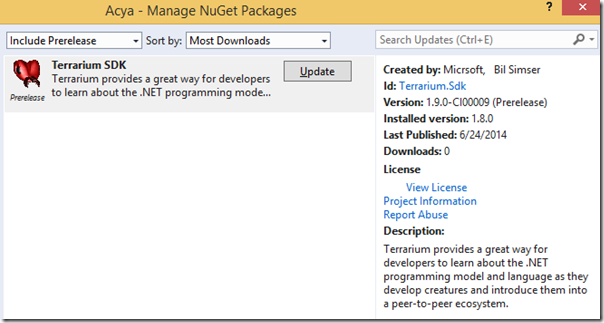

At this point you can install your package into a test or client app or wherever you’re using the package. Make changes to it, push the changes to GitHub and magically MyGet will fetch the changes and republish the package for you. Just go into the Updates section in NuGet to see them. Here’s a new package that was created after pushing a change up to GitHub as it appears in the Package Manager GUI in Visual Studio:

Here you can see we have 1.8.0 installed (from NuGet.org). I changed my package source to MyGet feed and there’s a new version available. It’s labeled as 1.9.0-CI00009 (Prerelease).

Like I said, you can keep pushing more versions up to GitHub and go nuts until you’re happy with the package and are ready to release.

Upstream to NuGet

The last piece in our puzzle is having MyGet push a package up to NuGet. This is a single click operation and while it can be automatic (for example pushing everything from MyGet to NuGet) you might not want that. Users would get the pitchforks out and start camping on your doorstep if you pushed out a new version of your package 10 times in a day.

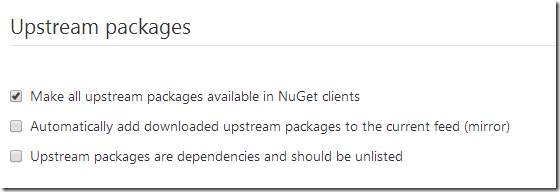

Package Sources are the way to get your feed out. By default one for NuGet.org is even created for you, but it’s not configured or enabled. Click on the Package Sources and you’ll see the NuGet.org one. Click on edit (assuming you have a NuGet.org account and API key).

Enter your information to allow MyGet to talk to NuGet and in the upstream section you’ll see this:

Check the first option. This allows you to push a package from MyGet to NuGet.

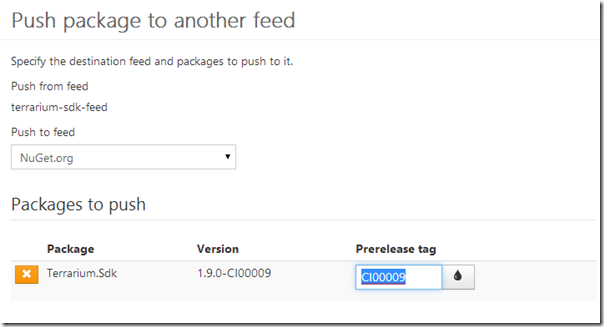

Finally we’re ready to go, but we don’t want version 1.9.0-CI00108 going public. This is where Semantic Versioning comes into play. Back in MyGet, click Packages, select your package, and then click the Push Latest button.

Here’s where the magic happens. MyGet recognizes that “-CI00009” is the prerelease tag in our version. If we leave this in, it’ll push the prerelease up to NuGet. Maybe this is okay, but for my use I only want to push up tested packages when I’m ready to release them.

Clear out the Prerelease tag field. This tells MyGet to ignore the tag in your version number and push only the major.minor.patch version (in this case 1.9.0 will be pushed up to NuGet.org).

Click Push and in a few minutes it’ll be on NuGet with the newest version.
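In effect, clearing that field turns 1.9.0-CI00009 into 1.9.0. A tiny Python sketch of the transformation (a hypothetical helper, not MyGet's code) drops everything from the first `-` or `+` onward and checks that a major.minor.patch triple is left:

```python
def release_version(version: str) -> str:
    """Strip the pre-release label and build metadata from a semantic version."""
    core = version.split("+", 1)[0].split("-", 1)[0]
    parts = core.split(".")
    if len(parts) != 3 or not all(part.isdigit() for part in parts):
        raise ValueError(f"expected major.minor.patch, got {version!r}")
    return core


if __name__ == "__main__":
    print(release_version("1.9.0-CI00009"))
```

One consequence worth keeping in mind: NuGet.org won't accept the same package version twice, so once 1.9.0 is pushed, the next release needs a bumped version format.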

That’s about it. I know this was long, but it was step-by-step and hopefully it clears up some fuzziness with the process of automatically publishing your GitHub repositories as NuGet packages. As usual it actually took me longer to write this up than to actually do it.

I encourage you to check out more documentation on MyGet as this just scratches the surface as to what it can do and even in this project there are additional options you can leverage like automatically patching AssemblyInfo.cs in your build, labeling the source when you push from MyGet to NuGet, etc.

Feel free to leave your comments or questions below and enjoy!

-

Live Kitten Juggling with ASP.NET MVC

Are you as excited as I am about ASP.NET MVC but want to know more? Or have you been struggling all your life in Web Forms Hell, wanting to make the leap to MVC but not knowing where to start or who to turn to?

Jon Galloway and Christopher Harrison are presenting a fast-paced live virtual session (no travel required, except to get from the couch to the computer which I know is a task but we can do it) that walks you through getting introduced to MVC, Visual Studio, Bootstrap, Controllers, Views, and bears oh my!

It’s a free-as-in-beer all-day session. If you know some C#, a little HTML, and some JavaScript and want to amp up your game then I encourage you to drop by. The free Microsoft Virtual Academy event runs from 9:00am – 5:00pm PDT on June 23, 2014. Check out this page for more details where you can also register for the event.

See you there!

-

SPListCollection ContainsList Extension Method

This is such a simple thing but something every SharePoint developer should have in their toolkit (well, actually, this is something Microsoft should put into the product). The SPFieldCollection has a nice method called ContainsField so you can check for the existence of a field (without throwing an exception if you get the spelling wrong). This is my version of the same method for SharePoint Lists.

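Enjoy.

```csharp
using System;
using System.Linq;
using Microsoft.SharePoint;

public static class SPListExtensions
{
    // Returns true if the collection contains a list whose Title matches
    // displayName (ignoring case), without throwing when the list doesn't exist.
    public static bool ContainsList(this SPListCollection lists, string displayName)
    {
        try
        {
            return lists.Cast<SPList>().Any(list =>
                string.Equals(list.Title, displayName, StringComparison.OrdinalIgnoreCase));
        }
        catch (Exception)
        {
            // Treat any failure while enumerating the lists as "not found".
            return false;
        }
    }
}
```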

-

Enhancing your SharePoint Team Site Homepage

A team site can be a boring place. Just a site with some documents, a list or two, maybe a calendar. Here’s a super simple way to make the page a little more interesting looking.

Your team site might look like this right now, with a calendar on the home page:

Simple and effective but bland. Also the title just blends into the background doesn’t it?

Edit the web part and under Advanced settings, set the Title Icon Image URL to the same value as the Calendar Icon Image URL:

Now the calendar shows a small icon next to the title and breaks up the page a little.

Simple and easy to do. By default the Title icon is blank, but IMHO it should default to the type of list that’s being shown (Calendar, Task List, Document Library, etc.). Like I said, it’s simple, but it breaks up the page and is also a visual reminder of what you’re looking at. It also shows where the clickable title is for a list or library that’s been put on a page. When you have a page full of web parts, it’s not immediately obvious where the title is in a sea of text. A little graphic goes a long way for readability.

You can use any icon for any list but I like using the one suited to the task. Here’s a list of the icons to use for each list/library type:

| List/Library Type | Icon |
|---|---|
| Custom List | /_layouts/images/itgen.gif |
| Calendar | /_layouts/images/itevent.png |
| Contact List | /_layouts/images/itcontct.gif |
| Site | /_layouts/images/SharePointFoundation16.png |
| Document Library | /_layouts/images/itdl.png |
| Announcements | /_layouts/images/itann.png |
| Discussion Board | /_layouts/images/itann.png |
| Issue Tracker | /_layouts/images/itissue.png |
| Picture Library | /_layouts/images/itil.png |
| Links | /_layouts/images/itlink.png |
| Tasks | /_layouts/images/ittask.png |
| Survey | /_layouts/images/itsurvey.png |

Enjoy!
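Defaulting the title icon to the list type, as suggested above, boils down to a lookup keyed on the list's template. Here's a Python sketch of that mapping using the icon paths from the table; the numeric keys are the values of SharePoint's SPListTemplateType enumeration for these list types, and falling back to the generic list icon for anything else is an assumption.

```python
# Keys: SPListTemplateType values; icon paths taken from the table above.
ICON_BY_TEMPLATE = {
    100: "/_layouts/images/itgen.gif",      # Custom List (GenericList)
    101: "/_layouts/images/itdl.png",       # Document Library
    102: "/_layouts/images/itsurvey.png",   # Survey
    103: "/_layouts/images/itlink.png",     # Links
    104: "/_layouts/images/itann.png",      # Announcements
    105: "/_layouts/images/itcontct.gif",   # Contacts
    106: "/_layouts/images/itevent.png",    # Calendar (Events)
    107: "/_layouts/images/ittask.png",     # Tasks
    108: "/_layouts/images/itann.png",      # Discussion Board (same icon as in the table)
    109: "/_layouts/images/itil.png",       # Picture Library
    1100: "/_layouts/images/itissue.png",   # Issue Tracking
}
DEFAULT_ICON = "/_layouts/images/itgen.gif"  # assumed fallback for other list types


def title_icon_for(template_id: int) -> str:
    """Return the Title Icon Image URL to use for a list with this template."""
    return ICON_BY_TEMPLATE.get(template_id, DEFAULT_ICON)
```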

-

Virtual Machine Tips for running SharePoint

Just a few simple Virtual Machine tips for developing in SharePoint:

- My VMs are 40GB in size and pre-allocated. After installing SharePoint, SQL Server, Visual Studio, and all the tools I need, I have about 10GB for data, which is more than sufficient for most jobs. Pre-allocation helps performance but needs a lot of disk space, so keep that in mind when planning your VM.

- I run my VMs disconnected with their own Domain Controller (for development I usually just run a single VM with DC, SQL, and SharePoint all in one). I reconnect them via a bridge to the host network adapter when I'm at home off my corporate network to get patches and keep things up to date. Running disconnected is nice because you know when things fail and why. Got a rogue web part that's behaving strangely? Put it in your VM and take a look at it to make sure it's not relying on some external service (which may be throwing proxy errors or something from your corporate network).

- I rely on source control to store my code, only pull down copies of current projects when I'm ready to work on something, and keep things fresh with multiple check-ins per day. With VMware the nice thing is that I can mount the VM as a disk image in case I need to pull something off (without having to fire up the VM). A distributed source control system like Git or Mercurial (instead of Subversion or VSS) lets you do local check-ins and then sync when you're done at the end of the day.

- VMs on the local drive (if you're running an SSD) are waaaaaaaaaaay faster than on an external drive. If you have to use an external drive for your VMs (I've since stopped doing this with the increased size of SSDs these days), go with eSATA for the best performance (although some people will argue USB 3.0 is faster). Pre-allocating the VM on an external drive will help with performance as well.

- Give your VM lots of memory. Any SharePoint developer must be running at *least* 8GB on their host machine. Give your VM 4-5 GB. No watching movies while you work; defer that to another machine. For SharePoint 2013 you really need to run 16GB on your host machine and give your VMs 8-12 GB of RAM.

- Copying large files into a VM (like an installer) and then deleting them causes fragmentation that you might not get back during regular usage. Make sure you use your virtualization tools to defragment your VMs on a regular basis.

- A really great tool to keep your VMs under control size-wise is SpaceSniffer. It visually shows you where things are gobbling up space in the OS so you can pinpoint things that you don't need and zap 'em! Get it. It's free!

That's it for now. Just a few simple tips that might help out. Happy developing!

-


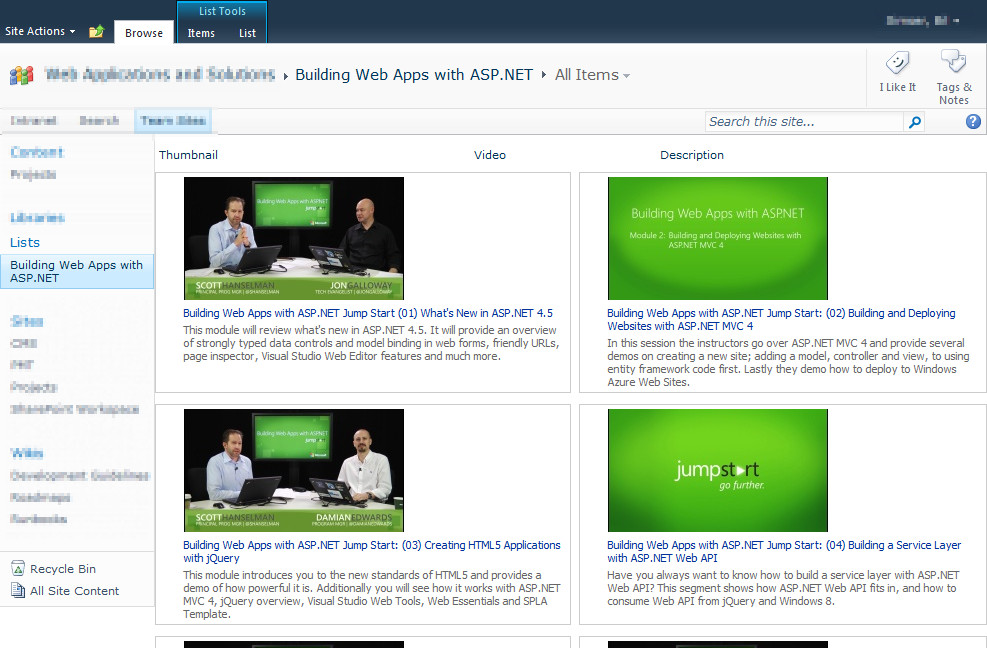

ASP.NET Training Videos Delivered via SharePoint

Recently Scott Hanselman posted on his blog an ASP.NET Jump Start session they had which featured 9 videos (over 8 hours of content) on developing apps with ASP.NET. This is a great resource and I wanted to share it with the rest of my team. The problem is that a) the team generally doesn’t have access to video content on the web as it’s usually blocked by the proxy, b) streaming an hour-long video over the Internet might be okay for one person but not for an entire team, and c) there must be a better way to share this than passing out links to Scott’s blog or the Channel 9 site.

We have a team site in SharePoint so I thought this would be a great opportunity to share the information that way. The problem was that even at medium resolution the files are just too big to host inside of SharePoint.

Sidebar. There have been a lot of discussions about “Large file support” in SharePoint 2010 (and beyond) and that’s great, but for me the bottom line is that extremely large files (over the default 50MB in size) just aren’t meant for an Intranet. I can hear the arguments now, but here are a few things to consider with large files (specifically media files like videos). Uploading a 100MB file to the server means 100MB of memory gets gobbled up by the w3wp.exe process (the process attached to the Application Pool running your site) for the entire time the file is being uploaded. LANs are fast, but even a 100MB file only gets uploaded at a certain speed (regardless of how big your pipe is). Also, uploading the video ties the user to that web front end (usually pegging the server or process), so your load balancing is shot. In short, many people bump up SharePoint’s default 50MB maximum without taking any of these things into consideration, then wonder why their amazing Intranet site is running slow (and usually they toss more memory on the front ends thinking it will help). Basically, 50MB should be the limit for files on any web application when users are uploading.

In any case, an option is to reference the files via UNC paths, which means that a) I can just use the Windows Desktop to copy the files, so there are no size limitations, and b) I can address the files via the file:// protocol. This works well for most Intranets and large files and should be considered over stuffing a file into SharePoint (regardless of how you get it in). Remember that a 100MB file in SharePoint generally takes up at least 150MB of space (and if the file is versioned, eek! just watch your content database explode in size!).
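If you're filling in the Hyperlink fields by hand or from a script, turning a UNC path into a file:// link is mechanical: the server becomes the URL host, backslashes become forward slashes, and characters such as spaces are percent-encoded. Here's a minimal Python sketch (the server and share names are made up). Some browsers prefer other file-URL spellings for UNC paths, so test the links in the browser your users actually have.

```python
from urllib.parse import quote


def unc_to_file_url(unc_path: str) -> str:
    r"""Convert a UNC path such as \\server\share\video.wmv into a file:// URL."""
    if not unc_path.startswith("\\\\"):
        raise ValueError("expected a UNC path like \\\\server\\share\\file")
    host, *segments = unc_path[2:].split("\\")
    if not host or not segments:
        raise ValueError("UNC path needs a server and a share")
    # Percent-encode each path segment (spaces become %20) and join with '/'.
    return "file://" + host + "/" + "/".join(quote(segment) for segment in segments)


if __name__ == "__main__":
    print(unc_to_file_url(r"\\fileserver\training\aspnet\01 Intro.wmv"))
```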

So my first step was to download the files and put them onto a file share. Simple enough. 9 files. I grabbed the medium size files but you can get the HD ones or whatever you want (I just didn’t want to wait around for 500MB per file to download with the high quality WMV files). Medium quality is good enough for training as you can clearly see the code in the IDE and not be annoyed by pixel artifacts on the playback.

Now that I had my 9 videos of content on the corporate LAN it was time to set something up to display them. You can get way fancier than I did here with some jQuery, doing some Client Object Model code to write out a video carousel but this is a simple solution that required no code (and I do mean absolutely zero code) and a few minutes of time. In fact it took longer to copy the files than it did to setup the list.

First, create a Custom List on the site you want to serve your video catalog from. Then add three fields (in addition to the default Title field):

- Video. A Hyperlink field holding the UNC path to the video itself on the file share.

- Thumbnail. A Hyperlink field formatted as a Picture, offering up a snapshot of what the user is going to see.

- Description. A multiline text field set to plain text, holding a brief description of the video.

Here’s the Edit form for a video item:

- The title is whatever you want (it’s not used for the catalog)

- The Video is a hyperlink to the file on the file share. It takes the form “file://” instead of “http://” and points to the UNC path of the file. Put the full title (or whatever the user is going to see and click on) in the hyperlink’s own description box (not the Description column). If you leave a Hyperlink field’s description blank, it gets automatically filled in with the full link (which isn’t very user friendly).

- The Thumbnail is a hyperlink to an image. You can choose to make your own (if your content is your own). I cheated here and just grabbed the image directly off of Scott’s blog (which is up on some Microsoft content delivery network location). As this field is formatted as a Picture it’ll just display the image so make sure it points to an image file SharePoint recognizes (JPEG is probably preferred here).

- The Description field is just copied straight off the blog. Again, change this to whatever you want so users know what the video is about.
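If you have a pile of videos, converting each UNC path to a file:// link by hand gets old fast. Here’s a small sketch that does it. It assumes the common file://server/share/... form of a UNC link (some browsers and SharePoint setups also accept file:////server/share or file://\\server\share, so test against your own environment), and the server and share names in the example are made up.

```javascript
// Turn a UNC path (\\server\share\folder\file.wmv) into a file:// URL
// for a SharePoint Hyperlink field. Assumes the file://server/share/... form.
function uncToFileUrl(uncPath) {
  if (!uncPath.startsWith("\\\\")) {
    throw new Error("Expected a UNC path starting with \\\\server");
  }
  const segments = uncPath
    .slice(2)                   // drop the leading \\
    .split("\\")                // split on backslashes
    .filter(s => s.length > 0)  // ignore trailing or doubled backslashes
    .map(encodeURIComponent);   // encode spaces and other unsafe characters
  return "file://" + segments.join("/");
}

// "fileserver" and "training" are hypothetical names
console.log(uncToFileUrl("\\\\fileserver\\training\\Video 1.wmv"));
// file://fileserver/training/Video%201.wmv
```

Encoding each segment matters because video file names love to have spaces in them.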

Once you populate the list with the videos, you’re almost ready to go. Here’s the default view of the list:

This is okay but not very user friendly for viewing videos. Users might click on the Title field which would open up the List Item instead of launching the video. So instead either create a new view or modify the default one. If you create a new view you might want to set it as a new default so when a user visits the list they see the right view.

In your new view we’ll set a few options:

- Set the first three columns to be Thumbnail, Video, and Description (in this order). This is to create the catalog view of the world so you might want another view for editing content (in case you’re adding something or want to update a value).

- Turn off Tabular View as we don’t need it here

- Under Style choose “Boxed, no labels”

Now here’s the updated view in the browser:

Pretty slick, and it only took 5 minutes to build the view. Users click on the title and the video launches in Windows Media Player (or whatever player is associated with the video format you’re pointing to).

Note that this post talks about a specific set of files for a solution, but the video content is up to you. If you have some high quality/large format audio or video files in your organization and want to surface them in a catalog, this solution might work for you. It’s not about the content, it’s about serving up that content.

Enjoy!

-

Convention over Configuration with MVC and Autofac

One of the key things to wrap your head around when doing good software development using frameworks like ASP.NET MVC is the idea of convention over configuration (or coding by convention).

The idea is that rather than messing around with configuration files that say where to find things, how to register types with IoC containers, and so on (as we used to do), you follow a convention: an agreed-upon way of doing things. The usual example is a class in your model called “Sale” and a database table named “Sales”. If you don’t do anything, that’s how the system will work, but if you decide to rename the table to “Products_Sold”, then you need some kind of configuration to tell the system how to find it. Otherwise it finds it naturally based on the naming strategy of your domain.
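The Sale/Sales example fits in a few lines. This is a toy sketch, not how any particular ORM does it: the naive “append an s” pluralization is an assumption (real frameworks use smarter pluralizers), and the override map stands in for whatever configuration mechanism your framework actually uses.

```javascript
// Convention: a model class maps to a table named with the pluralized class name.
// Configuration: an explicit override map wins when the convention doesn't fit.
function tableNameFor(className, overrides = {}) {
  if (Object.prototype.hasOwnProperty.call(overrides, className)) {
    return overrides[className]; // configuration: someone told us explicitly
  }
  return className + "s"; // convention: naive pluralization, Sale -> Sales
}

console.log(tableNameFor("Sale"));                            // Sales
console.log(tableNameFor("Sale", { Sale: "Products_Sold" })); // Products_Sold
```

The point is that the override map stays empty until you break the convention.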

MVC does this by default. When you create a controller named “Home”, the class is HomeController and its views are found in /Views/Home in your project. With IoC containers there’s the task of registering your types so they resolve correctly. So let’s take a step back and look at a project with several repositories in it (a repository here being some kind of abstraction over your data store).
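Here’s a simplified model of that controller-to-folder convention. It strips the “Controller” suffix and returns the places the Razor view engine would look first, falling back to /Views/Shared. It only covers .cshtml files and ignores areas, .vbhtml/.aspx views, and custom view engines, so treat it as an illustration rather than the full lookup.

```javascript
// Simplified model of MVC's view lookup convention: strip the "Controller"
// suffix, look in /Views/{controller}, then fall back to /Views/Shared.
// Only .cshtml is modelled; areas and other view engines are ignored.
function candidateViewPaths(controllerClassName, actionName) {
  const suffix = "Controller";
  if (!controllerClassName.endsWith(suffix)) {
    throw new Error(`${controllerClassName} doesn't follow the *Controller convention`);
  }
  const controller = controllerClassName.slice(0, -suffix.length);
  return [
    `~/Views/${controller}/${actionName}.cshtml`,
    `~/Views/Shared/${actionName}.cshtml`,
  ];
}

// returns ["~/Views/Home/Index.cshtml", "~/Views/Shared/Index.cshtml"]
console.log(candidateViewPaths("HomeController", "Index"));
```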

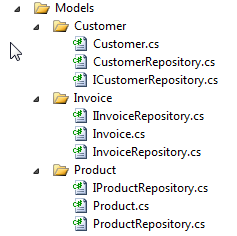

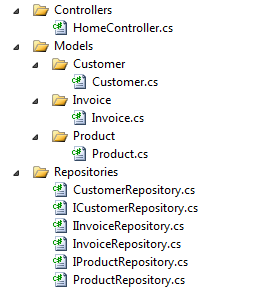

Here we have under our Models: Customer, Invoice, and Product with their respective classes, repositories, and interfaces:

This might be how your project is typically set up. I want to inject my repositories into my controller (via my IoC system) like this:

```csharp
using System.Web.Mvc;
// plus using directives for the namespaces your repository interfaces live in

public class HomeController : Controller
{
    private readonly ICustomerRepository _customerRepository;
    private readonly IInvoiceRepository _invoiceRepository;
    private readonly IProductRepository _productRepository;

    public HomeController(
        ICustomerRepository customerRepository,
        IInvoiceRepository invoiceRepository,
        IProductRepository productRepository)
    {
        _customerRepository = customerRepository;
        _invoiceRepository = invoiceRepository;
        _productRepository = productRepository;
    }

    //
    // GET: /Home/

    public ActionResult Index()
    {
        return View();
    }
}
```

Then somewhere in my controller I’ll use the various repositories to fetch information and present it to the user (or write back values gathered from the user). How does my IoC container know how to resolve an ICustomerRepository object?

Here’s how I have my IoC engine set up for this sample using Autofac. You can use any IoC engine you want, but I find Autofac works well with MVC. First, in Global.asax.cs, I just follow the same pattern the default project template uses and add a call to a new static method, ContainerConfig.RegisterContainer():

```csharp
using System.Web.Http;
using System.Web.Mvc;
using System.Web.Optimization;
using System.Web.Routing;

public class MvcApplication : System.Web.HttpApplication
{
    protected void Application_Start()
    {
        AreaRegistration.RegisterAllAreas();

        WebApiConfig.Register(GlobalConfiguration.Configuration);
        FilterConfig.RegisterGlobalFilters(GlobalFilters.Filters);
        RouteConfig.RegisterRoutes(RouteTable.Routes);
        BundleConfig.RegisterBundles(BundleTable.Bundles);
        ContainerConfig.RegisterContainer();
    }
}
```

Next is setting up Autofac. First add the Autofac MVC4 Integration package, either through the NuGet UI or the Package Manager Console:

```
PM> Install-Package Autofac.Mvc4
```

Next here’s my new ContainerConfig class I created which will register all the types I need:

```csharp
using System.Reflection;
using System.Web.Mvc;
using Autofac;
using Autofac.Integration.Mvc;

public class ContainerConfig
{
    public static void RegisterContainer()
    {
        var builder = new ContainerBuilder();

        builder.RegisterControllers(Assembly.GetExecutingAssembly());
        builder.RegisterType<CustomerRepository>().As<ICustomerRepository>();
        builder.RegisterType<InvoiceRepository>().As<IInvoiceRepository>();
        builder.RegisterType<ProductRepository>().As<IProductRepository>();

        var container = builder.Build();
        DependencyResolver.SetResolver(new AutofacDependencyResolver(container));
    }
}
```

What’s going on here:

- Create a new ContainerBuilder.
- Register all the controllers in the executing assembly (RegisterControllers).
- Register each repository against its interface (the three RegisterType calls).
- Build the container.
- Set MVC’s default dependency resolver to use Autofac.

Pretty straightforward, but here are the issues with this approach:

- I have to keep going back to my ContainerConfig class, adding new repositories as the system evolves. This means not only do I have to add the classes/interfaces to my system, I also have to remember to do this configuration step. New developers on the project might not remember this, and the system will blow up when it can’t figure out how to resolve INewRepository.

- I have to pull in a new namespace (assuming I follow the practice of namespace = folder structure) into the ContainerConfig class and whatever controller I add the new repository to.

- Repositories are scattered all over my solution (and in a big solution this can get a little ugly).
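To see why that first point bites, here’s a toy container with explicit, one-at-a-time registration, similar in spirit to the RegisterType calls above. It isn’t Autofac (JavaScript has no interfaces, so the service names are just strings), and NewRepository is a hypothetical class someone added without registering.

```javascript
// A toy container with explicit registration: nothing resolves
// unless someone remembered to register it.
class ExplicitContainer {
  constructor() {
    this.factories = new Map(); // service name -> factory function
  }
  register(serviceName, factory) {
    this.factories.set(serviceName, factory);
    return this;
  }
  resolve(serviceName) {
    const factory = this.factories.get(serviceName);
    if (!factory) {
      // The "blow up" case: a repository was added but never registered
      throw new Error(`No registration for ${serviceName}`);
    }
    return factory(this);
  }
}

class CustomerRepository {}
class NewRepository {} // exists in the code base, but nobody registered it

const container = new ExplicitContainer()
  .register("ICustomerRepository", () => new CustomerRepository());

container.resolve("ICustomerRepository"); // works
try {
  container.resolve("INewRepository");
} catch (e) {
  console.log(e.message); // No registration for INewRepository
}
```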

A little messy. We can do better with convention over configuration.

The first step is to move all of your repositories, both the classes and the interfaces, into a new folder called Repositories. With ReSharper you can just use F6 to do this and it’ll automatically move the files and fix the namespaces for you; otherwise use whatever add-in you want or move them manually. Here’s what our solution looks like after the move:

Pretty simple, but how does this affect our code? Very little. In the controller(s) you’re injecting the repositories into, you just remove all the old namespaces and replace them with one (whatever namespace your repositories live in).
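The reason the move is enough is the namespace = folder structure practice: a file in the Repositories folder ends up in a namespace ending in “.Repositories”. Here’s a simplified sketch of that mapping (root namespace plus folder names). MvcApp is a hypothetical root namespace, and Visual Studio also cleans up characters that aren’t valid in identifiers, which this ignores.

```javascript
// Approximation of "namespace = folder structure": root namespace plus
// the folders the file lives in, joined with dots.
function namespaceFor(rootNamespace, relativeFilePath) {
  const folders = relativeFilePath
    .split(/[\\/]/)            // accept \ or / as separators
    .slice(0, -1)              // drop the file name
    .filter(f => f.length > 0);
  return [rootNamespace, ...folders].join(".");
}

console.log(namespaceFor("MvcApp", "Models\\Customer\\CustomerRepository.cs")); // MvcApp.Models.Customer
console.log(namespaceFor("MvcApp", "Repositories\\CustomerRepository.cs"));     // MvcApp.Repositories
```

Moving a file doesn’t rewrite its namespace declaration by itself, which is why having ReSharper (or you) fix the namespaces during the move matters.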

The other change is in ContainerConfig, where the repositories get registered. Here’s the updated version:

```csharp
using System.Reflection;
using System.Web.Mvc;
using Autofac;
using Autofac.Integration.Mvc;

public class ContainerConfig
{
    public static void RegisterContainer()
    {
        var builder = new ContainerBuilder();

        builder.RegisterControllers(Assembly.GetExecutingAssembly());

        // Register every class in a *.Repositories namespace as the interfaces it implements.
        // Type.Namespace is null for types outside any namespace, so check before EndsWith.
        builder.RegisterAssemblyTypes(Assembly.GetExecutingAssembly())
            .Where(x => x.Namespace != null && x.Namespace.EndsWith(".Repositories"))
            .AsImplementedInterfaces();

        var container = builder.Build();
        DependencyResolver.SetResolver(new AutofacDependencyResolver(container));
    }
}
```

Note that a) we now have a single statement that registers all the repositories and b) the repository namespaces no longer need to be imported into this file.

The call to RegisterAssemblyTypes above uses a convention: it scans the assembly for classes in any namespace ending with “.Repositories” and registers each one as the interfaces it implements (that’s what AsImplementedInterfaces does).
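Here’s the same convention as a toy sketch, to show the moving parts without Autofac. JavaScript has no reflection over interfaces, so the “assembly” is a hand-written list of type descriptors with hypothetical names, and interface names are plain strings; the real scanning is done by Autofac from the assembly’s metadata.

```javascript
// Toy convention-based registration: register every class whose namespace
// ends with ".Repositories" as each interface it implements.
function registerByConvention(types) {
  const registrations = new Map(); // interface name -> factory
  for (const t of types) {
    // Skip types with no namespace (in .NET, Type.Namespace can be null)
    if (!t.namespace || !t.namespace.endsWith(".Repositories")) continue;
    for (const iface of t.interfaces) {
      registrations.set(iface, () => new t.ctor());
    }
  }
  return registrations;
}

class CustomerRepository {}
class InvoiceRepository {}
class HomeController {}

// Hypothetical "assembly" contents; in .NET these come from reflection
const assemblyTypes = [
  { ctor: CustomerRepository, namespace: "MvcApp.Repositories", interfaces: ["ICustomerRepository"] },
  { ctor: InvoiceRepository, namespace: "MvcApp.Repositories", interfaces: ["IInvoiceRepository"] },
  { ctor: HomeController, namespace: "MvcApp.Controllers", interfaces: [] },
];

const registrations = registerByConvention(assemblyTypes);
console.log([...registrations.keys()]); // [ 'ICustomerRepository', 'IInvoiceRepository' ]
```

Adding a new repository is just another entry in the list (or, in the real project, another class in the Repositories folder); the registration code never changes.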

So for a new developer on the project, the instructions are simply to create new repository classes and interfaces in the Repositories folder. That’s it. No configuration, no mess.

Hope that helps!

-

Defaulting Values in a Multi-Lookup Form in SharePoint

This was a question asked on the MSDN Forums but I thought it was worthy of a blog post as I could get more in depth with the explanation and show some pretty pictures (plus the fact I’ve never done it so thought it would be fun).

The problem was that a user wanted to default multiple values in a lookup field in SharePoint. First problem: there are no defaults in a lookup field. Second problem: how do you default multiple values?

First we’ll start with the setup. Create yourself a list which will hold the lookup values. In this case it’s a list of country names but it can be anything you want. Just a custom list with the Title field is enough.

Now we need a list with a lookup column to select our countries from. Create another custom list and add a column to it that looks something like this. Here’s the name and type:

And here are the additional column settings where we get our information from (MultiLookupDefaultSpikeSource is the name of the list we created to hold our values):

Here’s what our form looks like when we add a new item:

Thinking about the problem, I first thought we could manipulate the form in SharePoint Designer, but realized that the Form Web Part is going to retrieve all of our values from the list, defaults, etc., so what we really need to do is manipulate the list at runtime in the DOM.

It’s jQuery to the RESCUE!

First we take a look at the original state of the form to find our list boxes. Here’s the snippet we’re interested in, the first listbox:

```html
<select name="ctl00$m$g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f$ctl00$ctl05$ctl01$ctl00$ctl00$ctl04$ctl00$ctl00$SelectCandidate"
        title="Country possible values"
        id="ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_SelectCandidate"
        style="width: 143px; height: 125px; overflow: scroll;"
        ondblclick="GipAddSelectedItems(ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_MultiLookupPicker_m); return false"
        onchange="GipSelectCandidateItems(ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_MultiLookupPicker_m);"
        multiple="multiple">
  <OPTION title=Africa selected value=5>Africa</OPTION>
  <OPTION title=Asia value=1>Asia</OPTION>
  <OPTION title=Europe value=3>Europe</OPTION>
  <OPTION title=India value=4>India</OPTION>
  <OPTION title=Ireland value=6>Ireland</OPTION>
  <OPTION title=Singapore value=2>Singapore</OPTION>
</select>
```

We can see that it has an ID that ends in “_SelectCandidate”, so we’ll use this for selection.

Another part of the puzzle is a hidden set of fields that store the actual values used in the list. There are three of them, and they’re well documented in a blog post by Marc Anderson on SharePoint Magazine. In it he talks about multiselect columns and breaks down the three hidden fields used (the current set of values, the complete set of values, and the default values).

The second listbox looks like this:

```html
<select name="ctl00$m$g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f$ctl00$ctl05$ctl01$ctl00$ctl00$ctl04$ctl00$ctl00$SelectResult"
        title="Country selected values"
        id="ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_SelectResult"
        style="width: 143px; height: 125px; overflow: scroll;"
        ondblclick="GipRemoveSelectedItems(ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_MultiLookupPicker_m); return false"
        onchange="GipSelectResultItems(ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_MultiLookupPicker_m);"
        multiple="multiple">
```

Easy enough. It has an ID that ends in “_SelectResult”.

Now a quick jQuery primer on selecting items by attribute:

- `$("[id='foo']")`: id equals 'foo'

- `$("[id!='foo']")`: id does not equal 'foo'

- `$("[id^='foo']")`: id starts with 'foo'

- `$("[id$='foo']")`: id ends with 'foo'

- `$("[id*='foo']")`: id contains 'foo'
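Here’s what each of those operators tests, written as plain string checks so it runs without a DOM. It ignores edge cases such as empty values and elements with no id at all (jQuery’s `!=` also matches elements that lack the attribute), so it’s an illustration of the matching rules, not a reimplementation of jQuery.

```javascript
// What each attribute-selector operator tests, as plain string checks
// against an element's id (edge cases like missing ids are ignored).
const idMatchers = {
  "=": (id, value) => id === value,
  "!=": (id, value) => id !== value,
  "^=": (id, value) => id.startsWith(value),
  "$=": (id, value) => id.endsWith(value),
  "*=": (id, value) => id.includes(value),
};

// The generated ID of the candidate listbox from the form above
const candidateId =
  "ctl00_m_g_478fe6d2_8fdb_48e8_be57_7739de1c3b8f_ctl00_ctl05_ctl01_ctl00_ctl00_ctl04_ctl00_ctl00_SelectCandidate";

console.log(idMatchers["$="](candidateId, "_SelectCandidate")); // true
console.log(idMatchers["*="](candidateId, "_SelectResult"));    // false
```

Ends-with is the useful one here: the long ctl00_m_g_... prefix can change from page to page, but the suffix doesn’t.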

Simple. We want to find the control that ends with “_SelectCandidate” and remove some items, then find the control that ends with “_SelectResult” and append our selected items.
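Before touching the DOM, here’s the plan as plain data: options are `{ value, title }` objects standing in for the `<option>` elements, and the defaults move from the candidate list to the result list. This is only a sketch of the logic; it doesn’t touch the page, and it leaves out the hidden fields Marc Anderson describes, which the real form keeps in step with the listboxes. The default titles in the example are hypothetical.

```javascript
// Plain-data sketch: take the default titles out of the "candidate"
// options and append them, in their original order, to the "result" options.
function applyDefaults(candidate, result, defaultTitles) {
  const wanted = new Set(defaultTitles);
  const moved = candidate.filter(o => wanted.has(o.title));
  return {
    candidate: candidate.filter(o => !wanted.has(o.title)),
    result: [...result, ...moved],
  };
}

// Values taken from the SelectCandidate listbox shown earlier
const countries = [
  { value: 5, title: "Africa" },
  { value: 1, title: "Asia" },
  { value: 3, title: "Europe" },
  { value: 4, title: "India" },
  { value: 6, title: "Ireland" },
  { value: 2, title: "Singapore" },
];

const form = applyDefaults(countries, [], ["Europe", "Ireland"]);
console.log(form.candidate.map(o => o.title)); // [ 'Africa', 'Asia', 'India', 'Singapore' ]
console.log(form.result.map(o => o.title));    // [ 'Europe', 'Ireland' ]
```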

So a few lines of heavily commented JavaScript: