Azure: Redis Cache, Disaster Recovery to Azure, Tagging Support, Elastic Scale for SQLDB, DocDB

Over the last few days we’ve released a number of great enhancements to Microsoft Azure. These include:

- Redis Cache: General Availability of Redis Cache Service

- Site Recovery: General Availability of Disaster Recovery to Azure using Azure Site Recovery

- Management: Tags support in the Azure Preview Portal

- SQL DB: Public preview of Elastic Scale for Azure SQL Database (available through .NET libraries and Azure service templates)

- DocumentDB: Support for Document Explorer, Collection management and new metrics

- Notification Hub: Support for Baidu Push Notification Service

- Virtual Network: Static private IP support in the Azure Preview Portal

- Automation updates: Active Directory authentication, PowerShell script converter, runbook gallery, hourly scheduling support

All of these improvements are now available to use immediately (note that some features are still in preview). Below are more details about them:

Redis Cache: General Availability of Redis Cache Service

I’m excited to announce the General Availability of the Azure Redis Cache. The Azure Redis Cache service provides the ability for you to use a secure/dedicated Redis cache, managed as a service by Microsoft. The Azure Redis Cache is now the recommended distributed cache solution we advocate for Azure applications.

Redis Cache

Unlike traditional caches which deal only with key-value pairs, Redis is popular for its support of high performance data types, on which you can perform atomic operations such as appending to a string, incrementing the value in a hash, pushing to a list, computing set intersection, union and difference, or getting the member with highest ranking in a sorted set. Other features include support for transactions, pub/sub, Lua scripting, keys with a limited time-to-live, and configuration settings to make Redis behave more like a traditional cache.

Finally, Redis has a healthy, vibrant open source ecosystem built around it. This is reflected in the diverse set of Redis clients available across multiple languages. This allows it to be used by nearly any application, running on either Windows or Linux, that you host inside of Azure.

Redis Cache Sizes and Editions

The Azure Redis Cache Service is today offered in the following sizes: 250 MB, 1 GB, 2.8 GB, 6 GB, 13 GB, 26 GB, 53 GB. We plan to support even higher-memory options in the future.

Each Redis cache size option is also offered in two editions:

- Basic – A single cache node, without a formal SLA, recommended for use in dev/test or non-critical workloads.

- Standard – A multi-node, replicated cache configured in a two-node Master/Replica configuration for high-availability, and backed by an enterprise SLA.

With the Standard edition, we manage replication between the two nodes and perform an automatic failover if the Master node fails (whether because of an unplanned server failure or planned patching maintenance). This helps ensure the availability of the cache and the data stored within it.

Details on Azure Redis Cache pricing can be found on the Azure Cache pricing page. Prices start as low as $17 a month.

Create a New Redis Cache and Connect to It

You can create a new instance of a Redis Cache using the Azure Preview Portal. Simply select the New->Redis Cache item to create a new instance.

You can then connect to the Redis Cache you’ve provisioned from a wide variety of programming languages, using their corresponding client packages. These are the same Redis client packages you’d use to connect to your own Redis instance. The API and libraries are exactly the same.

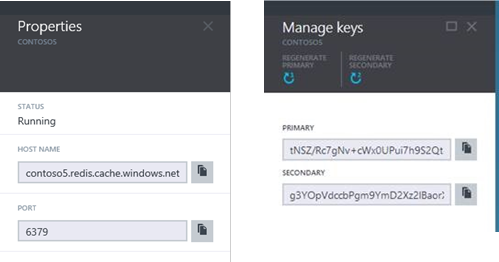

Below we’ll use a .NET Redis client called StackExchange.Redis to connect to our Azure Redis Cache instance. First open any Visual Studio project and add the StackExchange.Redis NuGet package to it, with the NuGet package manager. Then, obtain the cache endpoint and key respectively from the Properties blade and the Keys blade for your cache instance within the Azure Preview Portal.

Once you’ve retrieved these, create a connection instance to the cache with the code below:

var connection = StackExchange.Redis.ConnectionMultiplexer.Connect("contoso5.redis.cache.windows.net,ssl=true,password=...");

Once the connection is established, retrieve a reference to the Redis cache database, by calling the ConnectionMultiplexer.GetDatabase method.

IDatabase cache = connection.GetDatabase();

Items can be stored in and retrieved from a cache by using the StringSet and StringGet methods (or their async counterparts – StringSetAsync and StringGetAsync).

cache.StringSet("Key1", "HelloWorld");

string value = cache.StringGet("Key1");

You have now stored and retrieved a “Hello World” string from a Redis cache instance running on Azure. For an example of an end to end application using Azure Redis Cache, please check out the MVC Movie Application blog post.
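
The richer data types described earlier can be exercised with the same client. Below is a minimal sketch, not a definitive implementation: the key names, the `REDIS_HOST` and `REDIS_KEY` environment variables, and the `BuildConfiguration` helper are all assumptions made for illustration, and running it needs the StackExchange.Redis NuGet package and a reachable cache.

```csharp
using System;
using StackExchange.Redis;

public static class RedisDataTypesSketch
{
    // Hypothetical helper: builds a configuration string in the same
    // "host,ssl=true,password=key" format used in the Connect call above.
    public static string BuildConfiguration(string hostName, string accessKey)
    {
        return hostName + ",ssl=true,password=" + accessKey;
    }

    // All key names below are illustrative only.
    public static void Run(IDatabase cache)
    {
        // String: append to an existing value atomically.
        cache.StringSet("greeting", "Hello");
        cache.StringAppend("greeting", "World");

        // Hash: atomically increment a single field.
        cache.HashIncrement("page:home", "views", 1);

        // List: push a value onto the head of a list.
        cache.ListLeftPush("recent", "item-1");

        // Sets: compute the intersection of two sets on the server.
        cache.SetAdd("tags:a", new RedisValue[] { "x", "y" });
        cache.SetAdd("tags:b", new RedisValue[] { "y", "z" });
        RedisValue[] common = cache.SetCombine(SetOperation.Intersect, "tags:a", "tags:b");
        Console.WriteLine("Members in both sets: " + common.Length);

        // Sorted set: fetch the member with the highest score.
        cache.SortedSetAdd("scores", "alice", 42);
        cache.SortedSetAdd("scores", "bob", 17);
        RedisValue[] top = cache.SortedSetRangeByRank("scores", 0, 0, Order.Descending);
        if (top.Length > 0)
        {
            Console.WriteLine("Highest ranked member: " + top[0].ToString());
        }

        // Key with a limited time-to-live.
        cache.StringSet("session:123", "data", TimeSpan.FromMinutes(20));
    }

    public static void Main()
    {
        // Assumed environment variable names; supply your own endpoint and key.
        string host = Environment.GetEnvironmentVariable("REDIS_HOST");
        string key = Environment.GetEnvironmentVariable("REDIS_KEY");
        if (string.IsNullOrEmpty(host) || string.IsNullOrEmpty(key))
        {
            Console.WriteLine("Set REDIS_HOST and REDIS_KEY before running.");
            return;
        }

        using (var connection = ConnectionMultiplexer.Connect(BuildConfiguration(host, key)))
        {
            Run(connection.GetDatabase());
        }
    }
}
```

Each call maps to a single Redis command, so the operation is applied atomically on the server rather than as a read-modify-write in your application.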

Using Redis for ASP.NET Session State and Output Caching

You can also take advantage of Redis to store out-of-process ASP.NET Session State as well as to share Output Cached content across web server instances.

For more details on using Redis for Session State, check out this blog post: ASP.NET Session State for Redis.

For details on using Redis for Output Caching, check out this MSDN post: ASP.NET Output Cache for Redis.

Monitoring and Alerting

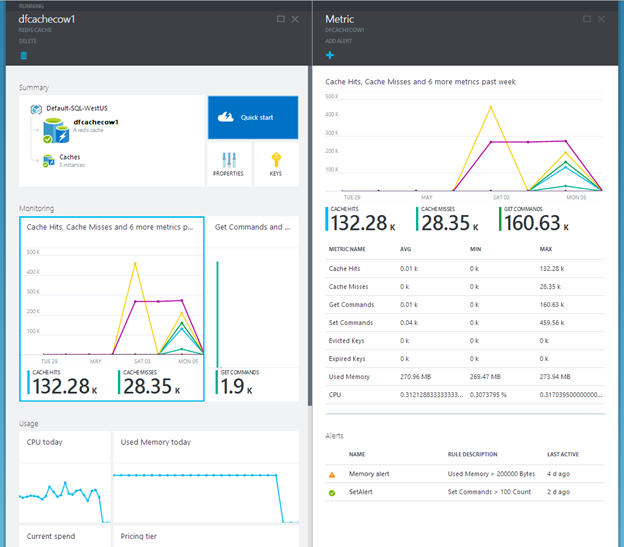

Every Azure Redis cache instance has built-in monitoring support on by default. Currently you can track Cache Hits, Cache Misses, Get/Set Commands, Total Operations, Evicted Keys, Expired Keys, Used Memory, Used Bandwidth and Used CPU. You can easily visualize these using the Azure Preview Portal:

You can also create alerts on metrics or events (just click the “Add Alert” button above). For example, you could create an alert rule to notify the cache administrator when the cache is seeing evictions. This in turn might signal that the cache is running hot and needs to be scaled up with more memory.

Learn more

For more information about the Azure Redis Cache, please visit the following links:

- Azure Blog: Lap around Azure Redis Cache

- Channel 9 Videos: Redis Cache 101, 102, 103

- Home Page: Azure Redis Cache

- MSDN Documentation: Azure Redis Cache

- Questions?: Azure Cache Forum

- Feature requests: Azure Cache UserVoice

Site Recovery: Announcing the General Availability of Disaster Recovery to Azure

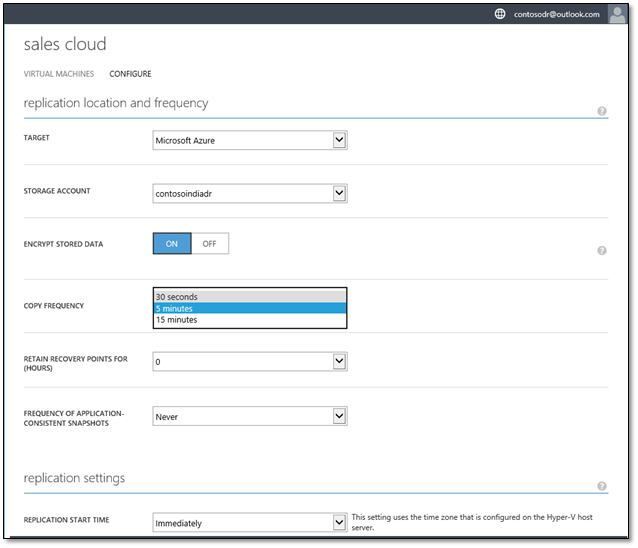

I’m excited to announce the general availability of the Azure Site Recovery Service’s new Disaster Recovery to Azure functionality. The Disaster Recovery to Azure capability enables consistent replication, protection, and recovery of on-premises VMs to Microsoft Azure. With support for both Disaster Recovery and Migration to Azure, the Azure Site Recovery service now provides a simple, reliable, and cost-effective DR solution for enabling Virtual Machine replication and recovery between on-premises private clouds across different enterprise locations, or directly to the cloud with Azure.

This month’s release builds upon our recent InMage acquisition, and the integration of InMage Scout with Azure Site Recovery enables us to provide hybrid cloud business continuity solutions for any customer IT environment – regardless of whether it is Windows or Linux, running on physical servers or virtualized servers using Hyper-V, VMware or other virtualization solutions. Microsoft Azure is now the ideal destination for disaster recovery for virtually every enterprise server in the world.

In addition to enabling replication to and disaster recovery in Azure, the Azure Site Recovery service also enables the automated protection of VMs, remote health monitoring, no-impact disaster recovery plan testing, and single-click orchestrated recovery, all backed by an enterprise-grade SLA. New with this GA release is the ability to invoke Azure Automation runbooks from within Azure Site Recovery Plans, enabling you to further automate your solutions.

Learn More about Azure Site Recovery

For more information on Azure Site Recovery, check out the recording of the Azure Site Recovery session at TechEd 2014 where we discussed the preview. You can also visit the Azure Site Recovery forum on MSDN for additional information and to engage with the engineering team or other customers.

Once you’re ready to get started with Azure Site Recovery, check out additional pricing or product information, and sign up for a free Azure trial.

Beginning this month, Azure Backup and Azure Site Recovery will also be available in a convenient and economical promotional offer available for purchase via a Microsoft Enterprise Agreement. Each unit of the Azure Backup & Site Recovery annual subscription offer covers protection of a single instance to Azure with Site Recovery, as well as backup of data with Azure Backup. You can contact your Microsoft Reseller or Microsoft representative for more information.

Management: Tag Support with Resources

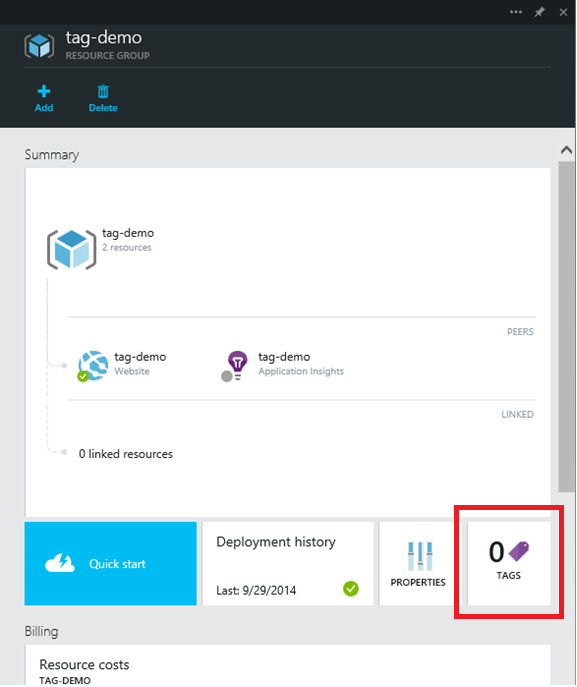

I’m excited to announce the support of tags in the Azure management platform and in the Azure preview portal.

Tags provide an easy way to organize your Azure resources and resource groups, by allowing you to tag your resources with name/value pairs to further categorize and view resources across resource groups and across subscriptions. For example, you could use tags to identify which of your resources are used for “production” versus “dev/test” – and enable easy filtering/searching of the resources based on whichever tag you are interested in – regardless of which application or resource group they are in.
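
To make the name/value idea concrete, here is a small sketch of filtering by tag across resource groups. It is a hypothetical in-memory model written for illustration only, not the Azure management API: the `TaggedResource` type, the `NamesWithTag` helper, and the sample resource names are all assumptions, and tag matching here is a simple exact comparison.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of a resource carrying name/value tags.
public sealed class TaggedResource
{
    public string Name { get; }
    public string ResourceGroup { get; }
    public IReadOnlyDictionary<string, string> Tags { get; }

    public TaggedResource(string name, string resourceGroup, IDictionary<string, string> tags)
    {
        Name = name;
        ResourceGroup = resourceGroup;
        Tags = new Dictionary<string, string>(tags);
    }
}

public static class TagFilterSketch
{
    // Returns the names of all resources carrying the given tag name/value pair,
    // regardless of which resource group they belong to.
    public static List<string> NamesWithTag(
        IEnumerable<TaggedResource> resources, string tagName, string tagValue)
    {
        return resources
            .Where(r => r.Tags.TryGetValue(tagName, out var value) && value == tagValue)
            .Select(r => r.Name)
            .ToList();
    }

    public static void Main()
    {
        var resources = new List<TaggedResource>
        {
            new TaggedResource("web-1", "group-a",
                new Dictionary<string, string> { { "environment", "production" } }),
            new TaggedResource("web-2", "group-b",
                new Dictionary<string, string> { { "environment", "dev/test" } }),
            new TaggedResource("db-1", "group-b",
                new Dictionary<string, string> { { "environment", "production" } }),
        };

        // Lists the production resources from both resource groups.
        foreach (string name in NamesWithTag(resources, "environment", "production"))
        {
            Console.WriteLine(name);
        }
    }
}
```

Because the filter looks only at the tag, resources from different resource groups end up in the same result, which is the cross-group view tags are meant to provide.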

Using Tags

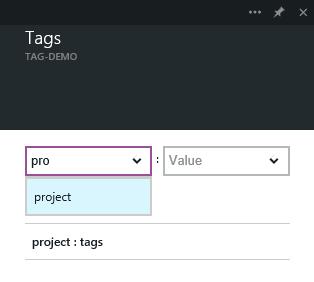

To get started with the new Tag support, browse to any resource or resource group in the Azure Preview Portal and click on the Tags tile on the resource.

On the Tags blade that appears, you'll see a list of any tags you've already applied. To add a new tag, simply specify a name and value and press enter. After you've added a few tags, you'll notice autocomplete options based on pre-existing tag names and values to better ensure a consistent taxonomy across your resources and to avoid common mistakes, like misspellings.

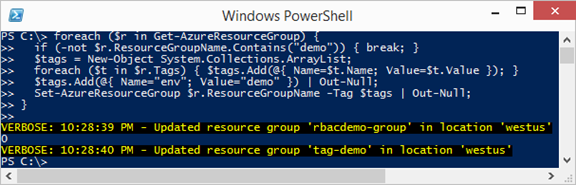

You can also use our command-line tools to tag resources. Below is an example of using the Azure PowerShell module to quickly tag all of the resources in your Azure subscription:

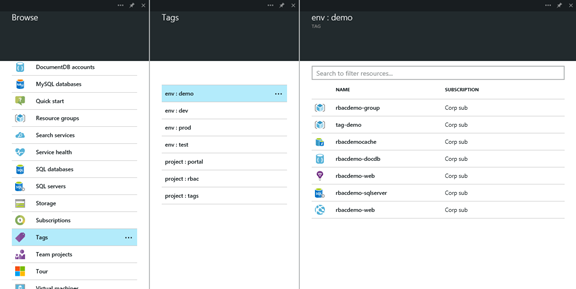

Once you've tagged your resources and resource groups, you can view the full list of tags across all of your subscriptions using the Browse hub.

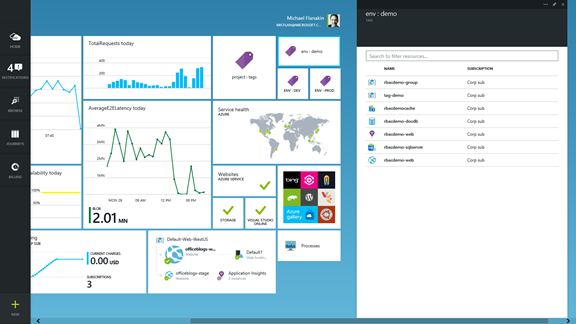

You can also “pin” tags to your Startboard for quick access. This provides a really easy way to quickly jump to any resource in a tag you’ve pinned:

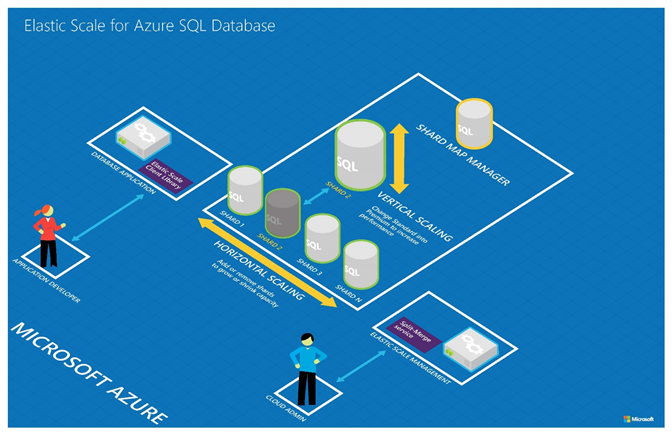

SQL Databases: Public Preview of Elastic Scale Support

I am excited to announce the public preview of Elastic Scale for Azure SQL Database. Elastic Scale enables the data-tier of an application to scale out via industry-standard sharding practices, while significantly streamlining the development and management of your sharded cloud applications. The new capabilities are provided through .NET libraries and Azure service templates that are hosted in your own Azure subscription to manage your highly scalable applications. Elastic Scale implements the infrastructure aspects of sharding and thus allows you to instead focus on the business logic of your application.

Elastic Scale allows developers to establish a “contract” that defines where different slices of data reside across a collection of database instances. This enables applications to easily and automatically direct transactions to the appropriate database (shard) and perform queries that cross many or all shards using simple extensions to the ADO.NET programming model. Elastic Scale also enables coordinated data movement between shards to split or merge ranges of data among different databases and satisfy common scenarios such as pulling a busy tenant into its own shard.
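
The "contract" idea can be sketched as a map from key ranges to databases. The code below is a simplified, hypothetical in-memory illustration and is not the Elastic Scale client library: the `RangeShardMapSketch` type, its method names, and the shard names are assumptions, and the `Split` method only updates the mapping without moving any data.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical range-based shard map: each entry maps a half-open
// key range [Low, High) to the name of the database holding it.
public sealed class RangeShardMapSketch
{
    private readonly List<(int Low, int High, string Database)> ranges =
        new List<(int Low, int High, string Database)>();

    public void AddRange(int low, int high, string database)
    {
        if (low >= high) throw new ArgumentException("low must be less than high");
        foreach (var r in ranges)
        {
            // Two half-open ranges overlap when each starts before the other ends.
            if (low < r.High && r.Low < high)
                throw new InvalidOperationException("range overlaps an existing mapping");
        }
        ranges.Add((low, high, database));
    }

    // Data-dependent routing: find the database that owns the given key.
    public string Lookup(int key)
    {
        foreach (var r in ranges)
        {
            if (key >= r.Low && key < r.High) return r.Database;
        }
        throw new KeyNotFoundException("no shard is mapped for key " + key);
    }

    // Splits the range containing splitPoint so that [splitPoint, High)
    // is owned by newDatabase. Only the mapping changes in this sketch.
    public void Split(int splitPoint, string newDatabase)
    {
        for (int i = 0; i < ranges.Count; i++)
        {
            var r = ranges[i];
            if (splitPoint > r.Low && splitPoint < r.High)
            {
                ranges[i] = (r.Low, splitPoint, r.Database);
                ranges.Add((splitPoint, r.High, newDatabase));
                return;
            }
        }
        throw new ArgumentException("splitPoint is not strictly inside any mapped range");
    }

    public static void Main()
    {
        var map = new RangeShardMapSketch();
        map.AddRange(0, 100, "shard0");
        map.AddRange(100, 200, "shard1");
        Console.WriteLine(map.Lookup(42));

        // Pull the upper half of the second range into its own shard.
        map.Split(150, "shard2");
        Console.WriteLine(map.Lookup(175));
    }
}
```

An application would consult a map like this before opening a connection, so each transaction is routed to the database that owns the key.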

We are also announcing the Federation Migration Utility which is available as part of the preview. This utility will help current SQL Database Federations customers migrate their Federations application to Elastic Scale without having to perform any data movement.

Get Started with the Elastic Scale preview today, and watch our Channel 9 video to learn more.

DocumentDB: Document Explorer, Collection management and new metrics

Last week we released a bunch of updates to the Azure DocumentDB service experience in the Azure Preview Portal. We continue to improve the developer and management experiences so you can be more productive and build great applications on DocumentDB. These improvements include:

- Document Explorer: View and access JSON documents in your database account

- Collection management: Easily add and delete collections

- Database performance metrics and storage information: View performance metrics and storage consumed at a Database level

- Collection performance metrics and storage information: View performance metrics and storage consumed at a Collection level

- Support for Azure tags: Apply custom tags to DocumentDB Accounts

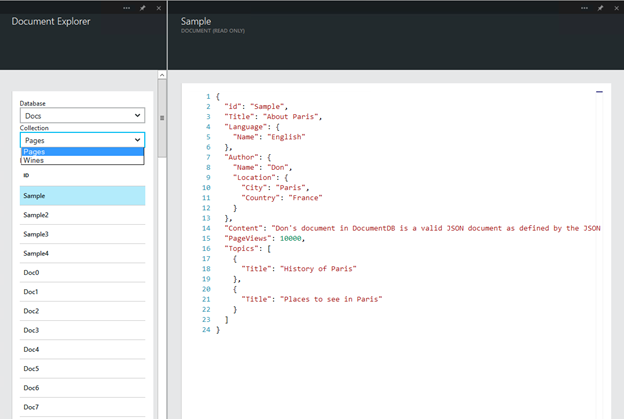

Document Explorer

Near the bottom of the DocumentDB Account, Database, and Collection blades, you’ll now find a new Developer Tools lens with a Document Explorer part.

This part provides you with a read-only document explorer experience. Select a database and collection within the Document Explorer and view documents within that collection.

Note that the Document Explorer will load up to the first 100 documents in the selected Collection. You can load additional documents (in batches of 100) by selecting the “Load more” option at the bottom of the Document Explorer blade. Future updates will expand Document Explorer functionality to enable document CRUD operations as well as the ability to filter documents.

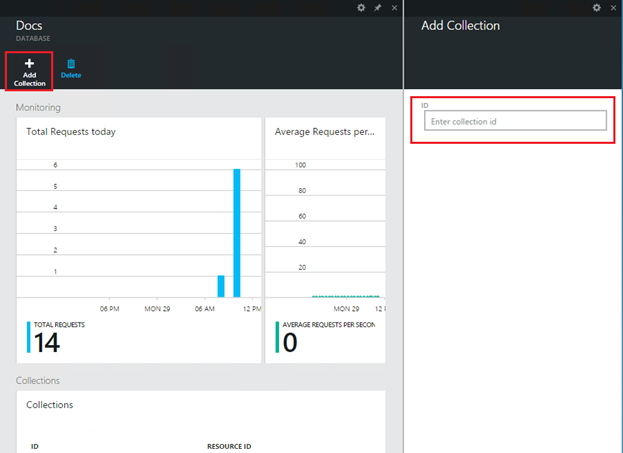

Collection Management

The DocumentDB Database blade now allows you to quickly create a new Collection through the Add Collection command found on the top left of the Database blade.

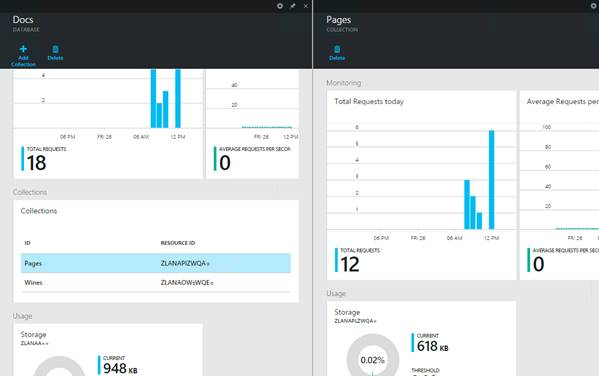

Health Metrics

We’ve added a new Collection blade which exposes Collection level performance metrics and storage information. You can access this new blade by selecting a Collection from the list of Collections on the Database blade.

The Database and Collection level metrics are available via the Database and Collection blades.

As always, we’d love to hear from you about the DocumentDB features and experiences you would find most valuable within the Azure portal. You can submit your suggestions on the Microsoft Azure DocumentDB feedback forum.

Notification Hubs: Support for Baidu Cloud Push

Azure Notification Hubs enable cross platform mobile push notifications for Android, iOS, Windows, Windows Phone, and Kindle devices. Thousands of customers now use Notification Hubs for instant cross platform broadcast, personalized notifications to dynamic segments of their mobile audience, or simply to reach individual customers of their mobile apps regardless of which device they use. Today I am excited to announce support for another mobile notifications platform, Baidu Cloud Push, which will help Notification Hubs customers reach the diverse family of Android devices in China.

Delivering push notifications to Android devices in China is no easy task, due to a diverse set of app stores and push services. Pushing notifications to an Android device via Google Cloud Messaging Service (GCM) does not work, as most Android devices in China are not configured to use GCM. To help app developers reach every Android device independent of which app store they’re configured with, Azure Notification Hubs now supports sending push notifications via the Baidu Cloud Push service.

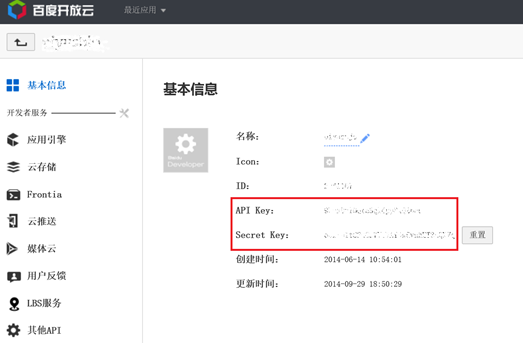

To use Baidu from your Notification Hub, register your app with Baidu, and obtain the appropriate identifiers (UserId and ChannelId) for your application.

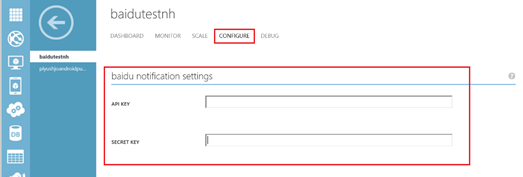

Then configure your Notification Hub within the Azure Management Portal with these identifiers:

For more details, follow the tutorial in English & Chinese. You can learn more about Push Notifications using Azure at the Notification Hubs dev center.

Virtual Machines: Instance-Level Public IPs generally available

Azure now supports the ability for you to assign public IP addresses to VMs and web or worker roles so they become directly addressable on the Internet - without having to map a virtual IP endpoint for access. With Instance-Level Public IPs, you can enable scenarios like running FTP servers in Azure and monitoring VMs directly using their IPs.

For more information, please visit the Instance-Level Public IP Addresses webpage.

Automation: Updates

Earlier this year, we introduced preview availability of Azure Automation, a service that allows you to automate the deployment, monitoring, and maintenance of your Azure resources. I am excited to announce several new features in Azure Automation:

- Active Directory Authentication

- PowerShell Script Converter

- Runbook Gallery

- Hourly Scheduling

Active Directory Authentication

We now offer an easier alternative to using certificates to authenticate from the Azure Automation service to your Azure environment. You can now authenticate to Azure using an Azure Active Directory organizational identity, which provides simple, credential-based authentication.

If you do not have an Active Directory user set up already, simply create a new user and provide the user with access to manage your Azure subscription. Once you have done this, create an Automation Asset with its credentials and reference the credential in your runbook. You need to do this setup only once and can then use the stored credentials going forward, greatly reducing the number of steps that you need to take to start automating. You can read this blog post to learn more about getting set up with Active Directory Authentication.

PowerShell Script Converter

Azure Automation now supports importing PowerShell scripts as runbooks. When a PowerShell script is imported that does not contain a single PowerShell Workflow, Automation will attempt to convert it from PowerShell script to PowerShell Workflow, and then create a runbook from the result. This allows the vast amount of PowerShell content and knowledge that exists today to be more easily leveraged in Azure Automation, despite the fact that Automation executes PowerShell Workflow and not PowerShell.

Runbook Gallery

The Runbook Gallery allows you to quickly discover Automation sample, utility, and scenario runbooks from within the Azure management portal. The Runbook Gallery consists of runbooks that can be used as is or with minor modification, and runbooks that can serve as examples of how to create your own runbooks. The Runbook Gallery features content not only by Microsoft, but also by active members of the Azure community. If you have created a runbook that you think other users may benefit from, you can share it with the community on Script Center and it will show up in the Gallery. If you are interested in learning more about the Runbook Gallery, this TechNet article describes how the Gallery works in more detail and provides information on how you can contribute.

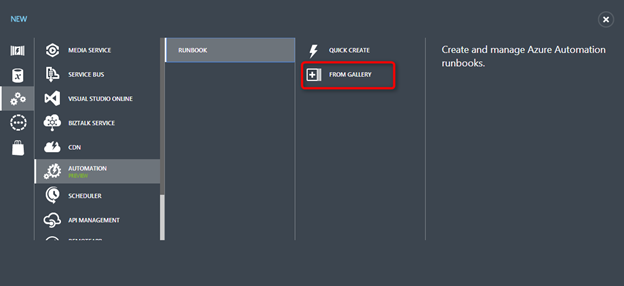

You can access the Gallery by clicking +New and then selecting App Services > Automation > Runbook > From Gallery.

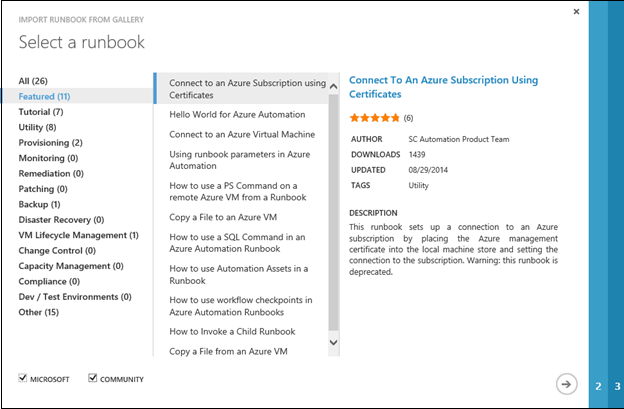

In the Gallery wizard, you can browse for runbooks by selecting the category in the left hand pane and then view the description of the selected runbook in the right pane. You can then preview the code and finally import the runbook into your personal space:

We will be adding the ability to expand the Gallery to include PowerShell scripts in the near future. These scripts will be converted to Workflows when they are imported to your Automation Account using the new PowerShell Script Converter. This means that you will have more content to choose from and a tool to help you get your PowerShell scripts running in Azure.

Hourly Scheduling

Based on popular request from our users, hourly scheduling is now available in Azure Automation. This feature allows you to schedule your runbook hourly or every X hours, making it that much easier to start runbooks at a regular frequency that is smaller than a day.

Summary

Today’s Microsoft Azure release enables a ton of great new scenarios, and makes building applications hosted in the cloud even easier.

If you don’t already have an Azure account, you can sign up for a free trial and start using all of the above features today. Then visit the Microsoft Azure Developer Center to learn more about how to build apps with it.

Hope this helps,

Scott

P.S. In addition to blogging, I am also now using Twitter for quick updates and to share links. Follow me at: twitter.com/scottgu