Archives

-

Sharing session between ASP Classic and ASP.NET using ASP.NET Session state server

The different session state mechanisms used by ASP Classic and ASP.NET are the most significant obstacle to ASP and ASP.NET interoperability. I started an open source project called NSession with the goal of allowing ASP Classic to access ASP.NET out-of-process session stores in the same way that ASP.NET accesses them, and thus share session state with ASP.NET.

How does ASP.NET access session state?

The underpinnings of ASP.NET session state were discussed in detail by Dino. ASP.NET retrieves the session state from the session store and deserializes it into an in-memory session dictionary at the beginning of a page request. If the page requests a read-write session, ASP.NET locks the session exclusively until the end of the page request, then serializes the session state back into the session store and releases the lock. If a page requests a read-only session, the session state is retrieved without an exclusive lock.
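To make the load/lock/save cycle concrete, here is a minimal conceptual model in Python. It is not the ASP.NET API or the state server protocol; the `SessionStore` class and its method names are made up for illustration, and `pickle` simply stands in for whatever serialization the real store uses.

```python
import pickle
import threading


class SessionStore:
    """Toy stand-in for an out-of-process session store (hypothetical API)."""

    def __init__(self):
        self._blobs = {}   # session id -> serialized session dictionary
        self._locks = {}   # session id -> lock held by a read-write request
        self._guard = threading.Lock()

    def _lock_for(self, session_id):
        with self._guard:
            return self._locks.setdefault(session_id, threading.Lock())

    def begin_request(self, session_id, read_only=False):
        """Load the session; take the exclusive lock unless read-only."""
        if not read_only:
            self._lock_for(session_id).acquire()
        blob = self._blobs.get(session_id)
        return pickle.loads(blob) if blob is not None else {}

    def end_request(self, session_id, session, read_only=False):
        """Serialize the session back and release the lock (read-write only)."""
        if read_only:
            return  # nothing is written back and no lock was taken
        self._blobs[session_id] = pickle.dumps(session)
        self._lock_for(session_id).release()

    def is_locked(self, session_id):
        return self._lock_for(session_id).locked()


store = SessionStore()
session = store.begin_request("abc")        # read-write: lock is held
session["user"] = "li"
store.end_request("abc", session)           # saved, lock released
```

A read-only request in this model calls `begin_request("abc", read_only=True)` and never touches the lock, which is why it cannot block a concurrent page.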

We intend to mimic the behavior of ASP.NET in ASP Classic.

How does NSession work?

You need to instantiate one of the COM objects in your ASP Classic page before it accesses session state, either:

set oSession = Server.CreateObject("NSession.Session")

or

set oSession = Server.CreateObject("NSession.ReadOnlySession")

If you instantiate NSession.Session, the session state in the session store will be transferred to the ASP Classic session dictionary, and an exclusive lock will be placed in the session store. You do not need to change your existing code that accesses the ASP Classic session object. When NSession.Session goes out of scope, it will save the ASP Classic session dictionary back to the session store and release the exclusive lock.

If you are done with the session state, you can release the lock early with

set oSession = Nothing

If you instantiate NSession.ReadOnlySession, the session state in the session store will be transferred to the ASP Classic session dictionary but no locks will be placed.

Installation

You can download the binaries from the CodePlex site. There are two DLLs to register. The first, NSession.dll, is a .NET Framework 4.0 DLL and works in both 32-bit and 64-bit environments. Since it is accessed by ASP Classic, it needs to be registered as a COM object using RegAsm. It also needs to be placed in the global assembly cache using gacutil. You need .NET Framework 4 on the machine, but you can run any version of ASP.NET because the DLL is not loaded into the same AppDomain as the ASP.NET application. I chose .NET Framework 4.0 so that I could use the C# dynamic feature to cut down on reflection.

The second, NSessionNative.dll, is a C++ DLL and is platform dependent. You need to register the appropriate version depending on whether you are running a 32-bit or 64-bit application pool. We need a C++ DLL because we need deterministic finalization to serialize the session at the end of the page request. We chose C++ over VB6 because it can create both 32-bit and 64-bit COM objects.

As this is still beta software, I suggest that you treat it like any pre-release software. Please report any bugs and issues to the project site forum.

Future work

At this time, I have only implemented the code to access the ASP.NET state server. The following are some of the ideas that I have in mind. Add yours to the Issue Tracker at the project site.

1. Support the SQL Server session store.

2. Optimization. ASP.NET caches some configuration and objects. NSession could use the idea too.

3. A configuration setting to allow clearing the ASP Classic session dictionary at the end of the page request.

4. Implement a filter mechanism. ASP.NET may store some objects that do not make sense for ASP Classic, and vice versa. A filter mechanism can be used to filter out these objects.

-

Gave back-to-back presentations on Javascript at SoCal Code Camp today

I gave two back-to-back presentations on JavaScript at SoCal Code Camp earlier today. The titles are:

The presentation material can be downloaded here.

-

I was asked to install .net framework 3.5 even though I already have it on my machine

Today, when I tried to install a ClickOnce application, I was asked to install .NET Framework 3.5 even though I already have it on my machine. It turns out that the web application relies on the User-Agent header to determine whether I have .NET Framework 3.5. However, IE9 no longer sends feature tokens as part of the User-Agent header. Instead, feature tokens are included in the value returned by the userAgent property of the navigator object. The workaround? Just set IE9 to IE8 compatibility mode and the website is happy.
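To illustrate why such a server-side check fails, here is a rough sketch in Python of the kind of test a website might perform. I do not know how the site in question actually implemented it; the function name and the two User-Agent strings are illustrative samples, trimmed to the relevant tokens.

```python
import re

# Feature tokens such as ".NET CLR 3.5.30729" appear inside the parentheses.
_DOTNET_TOKEN = re.compile(r"\.NET CLR (\d+)\.(\d+)")


def has_dotnet_35(user_agent):
    """Return True if the User-Agent advertises a .NET CLR 3.5 feature token."""
    return any((int(major), int(minor)) == (3, 5)
               for major, minor in _DOTNET_TOKEN.findall(user_agent))


# Sample header values (assumed, shortened): IE8 lists feature tokens, IE9 does not.
IE8_UA = ("Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0; "
          ".NET CLR 2.0.50727; .NET CLR 3.5.30729)")
IE9_UA = "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"

print(has_dotnet_35(IE8_UA), has_dotnet_35(IE9_UA))
```

With the IE9 header the check finds no token at all, so the site concludes the framework is missing even though it is installed.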

-

5 minutes guide to exposing .NET components to COM clients

I occasionally write COM objects in .NET, and every time I have to remind myself of the right things to do. Finally, I decided to write it up so I do not have to do the same research again.

If you register a .NET component as COM using the regasm tool, by default the tool exposes all exposable public classes. Exposable means that the class must satisfy certain rules, such as having a public default constructor. For the complete list of rules, see MSDN.

The Visual Studio template for class libraries actually turns off COM visibility in the AssemblyInfo.cs file using the assembly attribute [assembly: ComVisible(false)]. This is a good practice, as we do not want to flood the registry with all the types in our library.

Next, we need to apply the ComVisible(true) attribute to all classes and interfaces that we want to expose. It is a good practice to apply the attribute [ClassInterface(ClassInterfaceType.None)] to classes and implement interfaces explicitly. Why? Because by default .NET automatically creates a class interface containing all exposable methods, and we do not have much control over that interface. So it is a good idea to turn off the class interface and control our interfaces explicitly.

Lastly, it is a good idea to explicitly apply Guid attributes to classes and interfaces. This helps avoid leaving lots of orphaned ClassId entries in the registry on our development machine. Visual Studio has a Create GUID tool right under the Tools menu.

This is just a brief discussion and is no substitute for MSDN. Adam Nathan wrote the definitive book, .NET and COM. The book was out of print for a while, so even a used copy was very expensive. Fortunately, the publisher reprinted it. Adam also has some nice articles on InformIT (you need to click the Articles tab).

-

Take pain out of Windows Communication Foundation (WCF) configuration (2)

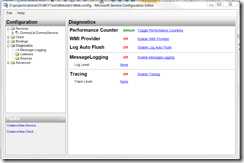

This post continues the previous part. I am going to discuss how to troubleshoot WCF problems using WCF tracing and message logging. Again, I am going to use the Microsoft Service Configuration Editor discussed in the previous part. When you click the Diagnostics node on the left, you will see the following:

You will want to enable tracing and message logging. If you want to see the log entries immediately, also enable log auto flush. What is the difference between tracing and message logging? Tracing captures the life cycle of a WCF service call, while message logging captures the messages themselves. If you get a message deserialization error, you need message logging to inspect the message. Once logging is enabled, go to each listener to configure the log location and to each source to configure the trace level. If you want to log the entire SOAP message, go to the Message Logging node and set LogEntireMessage to true. MSDN has a nice article on recommended settings for development and production environments.

Also, do not change the .svclog file extension when you specify the log file. This is the registered extension for WCF trace logs. If you double-click a log file, SvcTraceViewer, another tool in the same directory as the configuration editor, will open and display the contents of the log.

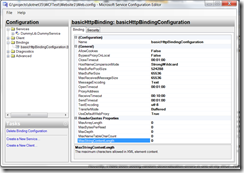

Recently, I have been seeing random deserialization errors in one of my WCF services. After turning on full message logging, I was able to inspect the full SOAP message, and I saw nothing wrong with it. So what was the problem? By default, to prevent denial-of-service attacks, WCF places limits on message length, array length and string length. These can be configured in the binding configuration:

The default limit for the size of a string parameter is 8192 characters. Some clients were sending strings longer than that, so I had to increase the MaxStringContentLength attribute under the ReaderQuotas property. For the defaults of the other quotas, please visit this page on MSDN.

WCF is big, and I cannot discuss all of its configuration options. There is no substitute for MSDN or a good book. I hope I have covered some common scenarios to help you get through the trouble quickly without spending lots of time navigating the MSDN documents.

-

Take pain out of Windows Communication Foundation (WCF) configuration (1)

WCF is a very flexible technology. The flexibility comes with complexity; there are many configurable pieces. It is usually relatively easy to start with an ASP.NET project and add a WCF service to it. However, as we start changing things and deploying the web service to production, many unexpected problems can occur. I have written many WCF web services, but I do not spend a high enough percentage of my time working with WCF, so I go through the same struggle each time I work with it. I thought I would start documenting the experience to stop the pain.

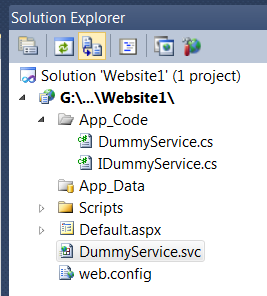

If I start with an empty website called website1 and add a WCF service called DummyService, Visual Studio adds DummyService.svc as well as the associated interface and implementation in the App_Code directory.

The contents of the .svc file look like:

Everything works fine and makes sense, but both the service interface and the implementation class live in the global namespace. Now suppose I would like to put the business logic in a library project. I add a library project with the namespace DummyLib and move the interface and implementation into it. I add a reference to System.ServiceModel in my DummyLib project and add a project reference to DummyLib in my website. Now I need to change my .svc file. Obviously, I do not need the CodeBehind attribute any more, as my code is compiled by my library project. How do I reference the class? According to MSDN, the Service attribute should point to "Service, ServiceNamespace". Unfortunately, that does not work. Fortunately, as in any other place where a type is referenced, I can use a type name like “TypeName, AssemblyName, etc”. Look in the web.config file for a type attribute and you will know what I am talking about. So now my .svc file looks like:

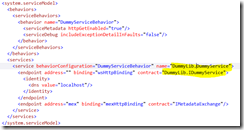

Is it enough? No. WCF actually stores very little information in the class and stores lots of information in the web.config; that is what makes it so configurable. So we need to modify the web.config to reference both the namespaced interface and the namespaced class (two changes).

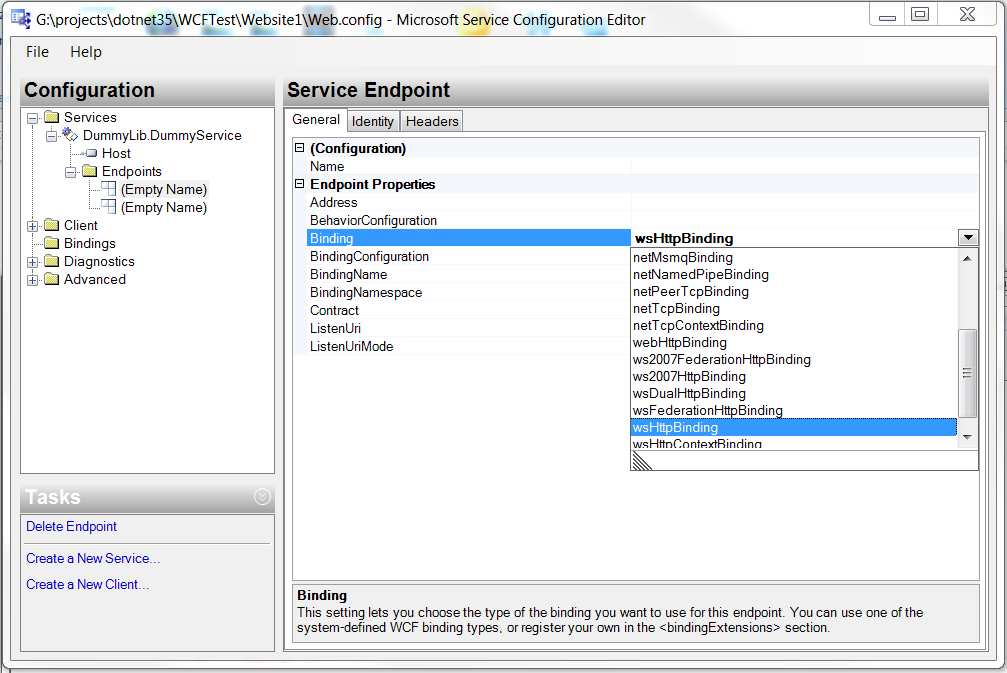

Now it is time to examine our web.config file. There is a service element that points to our implementation type. The service exposes two endpoints: one is our service endpoint; the other, the “mex” endpoint, is for retrieving metadata, in our case WSDL. The service endpoint points to wsHttpBinding and the mex endpoint points to mexHttpBinding. Finally, the service points to a behavior that has HttpGetEnabled set and does not include exception details in faults. Confused? It is not easy to create a configuration like this from scratch, but once Visual Studio has created it for us, we can feel our way around a bit. Fortunately, the Windows SDK has a really nice tool called SvcConfigEditor. It is normally in C:\Program Files\Microsoft SDKs\Windows\v7.0A\Bin or C:\Program Files (x86)\Microsoft SDKs\Windows\v7.0A\Bin. If I open my web.config file with SvcConfigEditor and look at the choices for the endpoint binding:

There are lots of choices. If we work with SOAP, two common choices are basicHttpBinding and wsHttpBinding. basicHttpBinding supports an older SOAP standard. It does not support message level security, but is very compatible with all kinds of clients. wsHttpBinding supports the newer SOAP standard and many of the WS* extensions. Now suppose we have legacy clients, so we need to use basicHttpBinding. We also want to use HTTPS to secure the transport. Let us see how many changes we need to make.

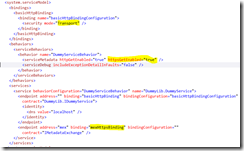

First, basicHttpBinding does not use HTTPS by default. We need to create a binding configuration to change the default. So I click the Bindings node on the left and add a binding configuration called basicHttpBindingConfiguration. Then I click the Security tab and change the Mode from “None” to “Transport”. Now I can return to the endpoint, where the configuration I just created is available to select in the BindingConfiguration attribute. Next, we need to go to the behavior and add HttpsGetEnabled=”True”. Lastly, we need to go to the “mex” endpoint and change the binding from mexHttpBinding to mexHttpsBinding. So to change from HTTP to HTTPS, we need to make three modifications, and our web.config now looks like:

In the next part, I will talk about WCF tracing and message logging. They really make troubleshooting easier.

-

Sharing session state over multiple ASP.NET applications with ASP.NET state server

There are many posts about sharing session state using SQL Server, but there is little information about doing so with out-of-process session state. The primary difficulty is that there is no official way. While the source code for the SQL Server session state provider is available in the ASP.NET provider toolkit, there is scarce information on the state server. The method introduced in this post is based on public information as well as a little help from Reflector. I should warn that this method is a hack, as I use reflection to change a private member. Therefore, it will not work in a partially trusted environment. Future versions of the OutOfProcSessionStateStore class could also break compatibility; users should apply the principle discussed in this post to find a solution.

I will first discuss how the ASP.NET state server used by out-of-proc session state works. According to the published protocol specification, the ASP.NET state server is essentially a mini HTTP server speaking a customized HTTP. The key that ASP.NET uses to look up data is composed of an application id from HttpRuntime.AppDomainAppId, a hash of it, and the SessionID stored in the cookie. This is very similar to the SQL Server session state. Developers have been using reflection to modify the _appDomainAppId private variable before it is used by SqlSessionStateStore. However, this method does not work with OutOfProcSessionStateStore because of the way it was written.

When the ASP.NET session state HTTP module is loaded, it uses the mode attribute of the sessionState element in the Web.Config file to determine which provider to load. If the mode is “StateServer”, the HTTP module loads OutOfProcSessionStateStore and initializes it. The partial key it constructs is stored in the s_uribase private static variable. Since SessionStateModule loads very early in the pipeline, it is difficult to change the _appDomainAppId variable before OutOfProcSessionStateStore is loaded.

The solution is to modify the data in OutOfProcSessionStateStore using reflection. According to the ASP.NET life cycle, an ASP.NET application can actually load multiple HttpApplication instances. For each HttpApplication instance, ASP.NET loads the entire set of HTTP modules configured in machine.config and web.config, in no specific order. After loading the HTTP modules, ASP.NET calls the Init method of the HttpApplication class, which can be subclassed in global.asax. Therefore, we can access the loaded OutOfProcSessionStateStore in the Init method and modify it so that all the ASP.NET applications use the same application id to access the state server. ASP.NET is already good at keeping the session id the same across applications. If this is not enough, we can supply a SessionIdManager.

So, we just need to place the following code in global.asax or code-behind:

As you can see, this code works purely because we hacked according to the way OutOfProcSessionStateStore was written. Future changes to the OutOfProcSessionStateStore class may break our code, or may make our life harder or easier. I hope a future version of ASP.NET will make it easier for us to control the application id used against the state server. In fact, Windows Azure already has a session state provider that allows sharing session state across applications.

Edited on 10/17/2011:

The previous version of the code only works in IIS6 or IIS7 classic mode. I made a change so that the code works in IIS7 integrated mode as well. What happens is that in IIS7, when Application.Init() is called, module.init() has not yet been called, so the _store variable is not yet initialized. We can change the _appDomainAppId of the HttpRuntime at this time, as it will be picked up later when the session store is initialized. The session store uses a key in the form of AppId(Hash) to access the session. The Hash is generated from the AppId and the machine key. To ensure that all applications use the same key, it is important to explicitly specify the machine key in the machine.config or the web.config rather than using an auto-generated key. See http://msdn.microsoft.com/en-us/library/ff649308.aspx for details, and especially scroll down to the section "Sharing Authentication Tickets Across Applications".

-

Theory and Practice of Database and Data Analysis (4) – Capturing additional information on relations

So far in this series, I have been talking about traversing related data by navigating down the referential constraints. The physical database makes no distinction about the nature of a foreign key, whether it is a parent-child relationship or another type of reference. In logical ER modeling, we often capture more information. So in this post, I will explore the idea of capturing additional information so that we can build more powerful tools.

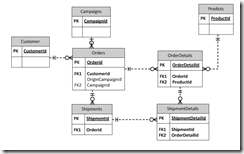

Let me continue with the fictitious e-commerce company example introduced in part 1 of this series: we have an Orders table to capture the order header and an OrderDetails table to capture the line items. An order must be placed by a customer, and may or may not originate from a marketing campaign. An order may be fulfilled by one or more shipments. Each shipment ships order line items in part or in full. So we have an ER diagram like the following:

In the ER diagram, there is a clear parent-child relationship between Orders and OrderDetails and between Shipments and ShipmentDetails. If we send the data in an XML file, each OrderDetail element would be a child of an Order element and each ShipmentDetail element would be a child of a Shipment element. However, the ShipmentDetails table appears to have two parents: Shipments and OrderDetails. We pick the parent by the importance of the relationship, so we pick Shipments. We then need to establish an additional mechanism to store the cross references between ShipmentDetails and OrderDetails in our XML file.

Let us examine the other relations. Customer is a mandatory attribute of the Orders table and Product is a mandatory attribute of OrderDetails. Campaign is an optional attribute of Orders. We can further classify Products as a system table and Customers as a data table.

So we have classified the relations in our simple example into several categories: parent-child, cross-reference, mandatory attribute to system table, mandatory attribute to data table and optional attribute.

Now let us use an example to see how such a classification can help us. Suppose we are building a tool to export all data related to a transaction from our production database into an XML file and then import it into our development database. The ID of an element could change from one system to another. That is not a problem for parent-child relationships, as we get a new key when we insert the parent and can propagate it down to the children. However, we need to take extra care to ensure that cross references are maintained.

We do not have to worry about system tables, but we do have to worry about data tables. Our development database may or may not have the same customer. So from our classification, we can generically determine that we need to get a copy of each mandatory reference to a data table, and its children, to check for existence in the destination database.
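As a rough illustration of the key handling during import, here is a small Python sketch (Python only for brevity; the real tool would work against the database). The data layout, the `import_transaction` name and the id generator are all assumptions made for this example. Parent-child keys are propagated down as rows are inserted, while the ShipmentDetails to OrderDetails cross reference is translated through an old-to-new id map.

```python
import itertools


def import_transaction(export, next_id):
    """Re-key exported rows for the destination, preserving cross references.

    `export` carries the source system's ids; `next_id` yields new ids,
    standing in for identity values handed out by the destination database.
    """
    detail_id_map = {}  # old OrderDetails id -> new OrderDetails id
    rows = {"Orders": [], "OrderDetails": [], "Shipments": [], "ShipmentDetails": []}

    for order in export["orders"]:
        new_order_id = next(next_id)
        rows["Orders"].append({"id": new_order_id})
        for detail in order["details"]:
            new_detail_id = next(next_id)
            detail_id_map[detail["id"]] = new_detail_id
            # Parent-child: the new parent key is simply propagated down.
            rows["OrderDetails"].append({"id": new_detail_id, "order_id": new_order_id})

    for shipment in export["shipments"]:
        new_shipment_id = next(next_id)
        rows["Shipments"].append({"id": new_shipment_id})
        for detail in shipment["details"]:
            rows["ShipmentDetails"].append({
                "id": next(next_id),
                "shipment_id": new_shipment_id,
                # Cross reference: translate the old key through the map.
                "order_detail_id": detail_id_map[detail["order_detail_id"]],
            })
    return rows


export = {
    "orders": [{"id": 10, "details": [{"id": 100}, {"id": 101}]}],
    "shipments": [{"id": 50, "details": [{"id": 500, "order_detail_id": 101}]}],
}
imported = import_transaction(export, itertools.count(1000))
```

The sketch imports orders before shipments so that the map is complete by the time a cross reference needs it; a generic tool would derive that ordering from the relation classification.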

Besides categorization, other information that we would like to capture includes one-to-one relationships. Some of them can be inferred: if a referential constraint references the primary key in both tables, it is a one-to-one relationship. We would also like to capture hidden relationships; they are real references but cannot be enforced by a foreign key constraint in SQL Server. For example, some tables contain an ID column that can store multiple types of IDs; a separate column is used to indicate the type of ID stored in the ID column.

With human input of extra information beyond what we can extract from physical databases, we should be able to build better tools. I will explore some tools in upcoming posts of this series.
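The one-to-one inference rule can be written down in a few lines. This Python sketch assumes we have already collected the column lists from the metadata; the function and the table and column names in the usage lines are hypothetical.

```python
def is_one_to_one(fk_columns, referencing_pk, referenced_columns, referenced_pk):
    """True when the foreign key is the primary key on both sides."""
    return (set(fk_columns) == set(referencing_pk)
            and set(referenced_columns) == set(referenced_pk))


# Hypothetical CustomerProfiles table whose primary key is also its foreign key.
profile_rule = is_one_to_one(["CustomerId"], ["CustomerId"], ["CustomerId"], ["CustomerId"])
# OrderDetails.OrderId is a foreign key but not the primary key of OrderDetails.
detail_rule = is_one_to_one(["OrderId"], ["OrderDetailId"], ["OrderId"], ["OrderId"])
```

Hidden relationships cannot be inferred this way, since no constraint exists to inspect; they have to come from human input.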

-

Theory and Practice of Database and Data Analysis (3) – Exhaustive Search by Traversing Table Relationships

In this post, I will discuss building a script to find all the relevant data starting from a table name and a primary key value. In the previous post, I discussed how to get a list of tables that reference a table. From a parent table, we just need to get a list of child tables. We then loop through each child table to select records using the parent primary key value. If the child tables themselves have child tables, we repeat the same approach by recursively getting the grandchildren.

I often need to remote-desktop into client systems where I have only read privileges; I can neither create stored procedures nor execute them. So I am going to present a script that requires only read privileges.

In a Transact-SQL (T-SQL) batch, looping through records can be implemented using a cursor or a temp table. I used the latter approach because a temp table can grow, so I can use it for recursion as well.

Recursion is a harder problem here. In most programming languages, recursion is done by calling procedures recursively. However, that is not possible here since I am restricted to a batch. In computer science, recursion can be implemented with a loop and a stack. In many programming languages, when a caller calls a callee, the program saves the current local data as well as the return location of the caller onto the stack. The area of the stack used by each call is called a stack frame. Once the callee returns, the program restores the local data from the stack and resumes from the previous location.

In my script, I use the temp table #ref_contraints to accomplish both looping and recursion. Referential constraints from the top table are added to the table as they are discovered and removed from the table when they are consumed (that is, no longer needed). I used depth-first traversal in my script. With this structure, I can change to breadth-first traversal with minimal effort.

The #keyvalues temp table contains the primary key values for the table that I have already traversed. I can get the records from the child tables by joining to the key values in this table so that I only need to query each child table once for each foreign key relationship.
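The loop-plus-stack idea is easier to see outside T-SQL. The following Python sketch is not the script itself: it only walks table names, a plain list plays the role of the work table, and the `children_of` dictionary stands in for the lookup against the referential constraints. It also tracks visited tables rather than individual constraints, which is a simplification.

```python
def find_related_tables(root, children_of):
    """Depth-first walk of child tables using an explicit stack."""
    visited = []            # (table, depth) in the order tables were processed
    pending = [(root, 0)]   # the explicit stack of work still to do
    seen = {root}
    while pending:
        # Popping from the end gives depth-first order; popping from the
        # front instead would turn this into a breadth-first traversal.
        table, depth = pending.pop()
        visited.append((table, depth))
        # Push in reverse so children are processed in their listed order.
        for child in reversed(children_of.get(table, [])):
            if child not in seen:   # guards against cycles and self-references
                seen.add(child)
                pending.append((child, depth + 1))
    return visited


children_of = {
    "Orders": ["OrderDetails", "Shipments"],
    "OrderDetails": ["ShipmentDetails"],
    "Shipments": ["ShipmentDetails"],
}
order = find_related_tables("Orders", children_of)
```

Entries are added to `pending` as they are discovered and removed when consumed, which mirrors how rows enter and leave the temp table in the script.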

In order to make the script simpler, I eliminate the schema and assume all tables are under the schema “dbo”. This works with our database, and works with databases in Microsoft Dynamics CRM 2011.

I have also assumed that all primary keys contain only one integer column. This is true of our database. It is also true of Microsoft Dynamics CRM 2011, except that you need to change the integer id to a guid.

I added comments to the script so that one can see where the code would correspond to looping and procedure calls if it were written in a language like VB.NET or C#. The comments also indicate the insertion points if additional code is needed.

In future posts of this series, I will discuss how to capture human intelligence to make search even more powerful.

-

The case for living in the cloud

These days one cannot go a day without hearing about the cloud; vendors are pushing it. Google’s Chromebook is already nothing but the cloud. Apple’s iOS and Microsoft’s upcoming Windows 8 also have increased cloud features. Until now, I have been skeptical about the cloud. Apart from security, my primary concern is what happens if I lose my connection to the internet.

Recently, I have been migrating to the cloud. The main reason is that I now triple-boot my laptop. I used to run Windows 7 as my primary OS and everything else as virtual machines. However, the performance of these virtual machines was less than ideal. Windows 7 Virtual PC would not run a 64-bit OS, so I had to rely on VirtualBox to run Windows 2008 R2. Recently, I have been booting Windows 8 Developer Preview and SharePoint/Windows 2008 from VHD. This is currently my most satisfying configuration. Although the VHD is slightly slower than the real hard drive, I have full access to the rest of the hardware. The issue I am facing now is that I need to access my data no matter which OS I boot. That motivated me to migrate to the cloud.

My primary data are my email, documents and code.

Email is the least of my concerns. Both Google and Hotmail offer ample space for me to store my email. I do download a copy of my email into Windows 7 so I can access it when I do not have access to the internet.

Documents have also become easier. Google has been offering Google Docs as well as web-based document editing for a while. Recently, Microsoft has been offering 25GB of free space on Windows Live SkyDrive. From SkyDrive, I can edit documents using Office Web Apps, so accessing and editing documents from a boot OS that does not have Office installed is no longer a concern. From Office 2010, it is possible to save documents directly to SkyDrive. In addition, Windows Live Mesh can automatically sync the local file system with SkyDrive.

Lastly, there are many free online source code versioning systems. Microsoft CodePlex, Google Code and GitHub, just to name a few, all offer free source control for open source projects. Other vendors such as Bitbucket also offer source control for closed source projects. Open source version control clients such as TortoiseHg and TortoiseSVN are easy to get. The free Visual Studio Express is also becoming more useful for real-world projects; the recent Windows 8 Developer Preview has Visual Studio Express 11 preinstalled.

So my conclusion is that the currently available services and software are sufficient for my needs, and I am ready to live in the cloud.

-

Theory and Practice of Database and Data Analysis (2) – Navigating the table relationships

In the first part of the series, I discussed how to use the SQL Server metadata to find database objects. In this part, I will discuss how to find all the data related to a transaction by navigating the table relationships. Let us suppose that the transaction is a purchase order stored in a table called Orders. Anyone who has seen the Northwind database knows that it has a child entity called OrderDetails. In even a modest real-world database, the complexity can grow very fast. If we can ship a partial order and put the rest on back order, or we need to ship from multiple warehouses, we could have multiple Shipping records for each Order record and have each ShippingDetails record linked to an OrderDetails record. Customers can return the order, either in full or in part, with or without a return authorization number, and the actual return may or may not match the return authorization. The system needs to be flexible enough to capture all the events related to the order. As you can see, a very modest order transaction could easily grow to a dozen tables. If you are not familiar with the database, how can you find all the related tables? If you need to delete a record, how do you delete it cleanly? If you need to copy a transaction from a production system to the development system, how do you copy the entire set of data related to the transaction?

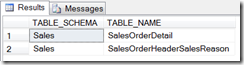

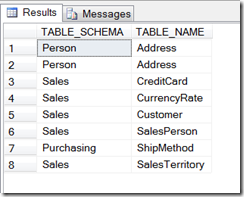

Fortunately, in any well-designed database, table relationships can be navigated through the foreign key relationships. In SQL Server, we can usually find the information using the sp_help stored procedure. Here we will use INFORMATION_SCHEMA to obtain more refined results to be used in our tools. The TABLE_CONSTRAINTS view contains the primary and foreign keys on a table. The REFERENTIAL_CONSTRAINTS view contains the name of the foreign key on the many side and the name of the unique constraint on the one side. It is possible to navigate the table relationships using these two views. The following query will find all the tables that reference the Sales.SalesOrderHeader table in the AdventureWorks database:

The following query will return all the tables referenced by the Sales.SalesOrderHeader table:

And the results:

The Person.Address table appeared twice because we have two columns referencing the table.

The CONSTRAINT_COLUMN_USAGE view contains the columns in the primary and the foreign keys. By using these 3 views, we can construct a simple tool that drills down into the data. In the next part of this series, we will construct such a tool.

Added by Li Chen 9/26/2011

The KEY_COLUMN_USAGE view also contains the columns in the primary and the foreign keys. I prefer to use this view because it also contains the ORDINAL_POSITION that I can use to match the columns in the primary and the foreign keys when we have composite keys. The following example shows how to match the columns. The last line in the query eliminates the records from a table joining to itself.
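The column matching itself is simple once the rows are in hand. This Python sketch, separate from the query, assumes the rows for one foreign key and for the key it references have already been fetched as (column name, ordinal position) pairs; the function name and column names are hypothetical.

```python
def match_key_columns(fk_rows, pk_rows):
    """Pair foreign key columns with referenced key columns by ordinal position.

    Each row is (column_name, ordinal_position), as KEY_COLUMN_USAGE would
    report for a single constraint.
    """
    pk_by_position = {position: column for column, position in pk_rows}
    return [(column, pk_by_position[position])
            for column, position in sorted(fk_rows, key=lambda row: row[1])]


# Rows may arrive in any order; the ordinal position restores the pairing.
pairs = match_key_columns(
    fk_rows=[("ShipRegionCode", 2), ("ShipCountryCode", 1)],
    pk_rows=[("CountryCode", 1), ("RegionCode", 2)],
)
```

Without the ordinal position, a composite key would produce every combination of foreign key and primary key columns instead of the matching pairs.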

-

Theory and Practice of Database and Data Analysis (1) – Searching for objects

Recently, I had to spend a significant portion of my time on production data support. Since we have a full-featured agency management system, I have to deal with parts of the application that I am not familiar with. Through accumulated experience and improved procedures, I was able to locate problems with increasing speed. In this series, I will try to document my experiences and approaches. In the first part, I will discuss how to search for objects.

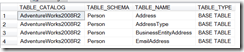

Suppose we are told that there are problems in the address data. The very first thing we need to do is locate the table and the field that contain the data. Microsoft SQL Server has a set of sys* tables. It also supports the ANSI-style INFORMATION_SCHEMA. In order to reuse the knowledge discussed here with other database systems, I will try to use INFORMATION_SCHEMA as much as possible. So to find any table that is related to “Address”, we can use the following query:

Using AdventureWorks 2008 sample database, it yields the following results:

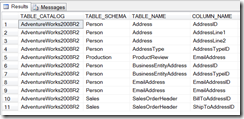

If we suspect there are columns in other tables relating to address, we can search for the columns with the following query:

We will get the following results this time:

Suppose we need to find all the stored procedures and functions that reference a table. We can use the sp_depends stored procedure. If we need to search thoroughly using a string, we can use the following query:

However, we cannot find triggers through Information_Schema. We have to use sysobjects:

Once we find the triggers, we can find the text using sp_helptext or query the syscomments table.

In the next part of the series, I will discuss navigating the table relationships.

-

ASP.NET Web Application Project (WAP) vs Website

Recently, we moved a fairly large web application to a Website project. This is a very old decision point, and we have moved back and forth a few times. A Bing search shows 2 well-written entries early in the list:

Here is how we reached the current decision:

- Back in VS2002/VS2003, it was WAP. No choice.

- In VS2005, it was website. No choice initially until WAP was added back to VS2005.

- In VS2008, we moved to WAP. The main reason was that website projects do not have a project file to store assembly and project references. The project reference is stored in the solution file. The reference to a GAC assembly is stored in web.config, and a non-GAC DLL reference goes through a bunch of .refresh files that point to the location of the DLL files (library). This makes checking the solution file into version control important. This system is fragile and breaks easily.

- Now we are moving back to Website. We understand the website reference mechanism better now, so it is less frustrating. In WAP, the entire code-behind is compiled into a single DLL. In a website, compilation can be much more granular, so it works better in industries (such as financial) that require a clean audit trail of artifacts. We have several thousand web pages. WAP requires us to compile the entire application before debugging, while we can compile just a single page with Website. In general, with the adoption of MVVM, most of the business logic now resides in separate library DLLs, so we do not have much code other than the data-binding code left in the code-behind. This gives us fine-grained control over the granularity of compilation and deployment units.

-

Moving DLLs from bin to GAC in asp.net

This must be a decade-old question, but it surely came back to bite us during the refactoring today. We decided to move several shared DLLs from bin to GAC in several of our ASP.NET web sites. Here is what we found out:

- The code-behind files and the code in the app_code directory failed to compile. The fix is to add the referenced assemblies to the compilation/assemblies section under system.web in the web.config file. The ASP.NET compiler will pick up DLLs in bin automatically but will not do so for the GAC. We have to explicitly instruct it.

- If we update any DLL in the GAC, we need to restart the application (e.g., using IISRESET) for ASP.NET applications to pick up the new version of the DLL. ASP.NET shadow-copies GAC DLLs into the temporary ASP.NET directory. While ASP.NET can detect DLL changes in the bin folder, it will not detect DLL changes in the GAC until the application is restarted.

- Don’t even think of putting DLLs in both bin and GAC. There are well-defined rules about which one actually gets used, but doing so will only cause confusion. For more information, see http://stackoverflow.com/questions/981142/dll-in-both-the-bin-and-the-gac-which-one-gets-used.

-

Silverlight 4 version of VBScript.NET

I was asked whether my VBScript.NET compiler would run under Silverlight. I indeed updated my VBScript.NET compiler a month ago to support Silverlight 4. So besides Iron languages, VBScript.NET is another dynamic language that you can use in Silverlight. The May 3, 2011 or a later version supports Silverlight 4.

-

Gave 3 presentations at Southern California Code Camp today

I gave 3 presentations at SoCal Code Camp today. The 3 talks are:

It is time to rev up your Javascript skill (slides)

Quick and Dirty JQuery (slides)

ASP.NET: A lap around tools: F12, Fiddler, NuGet, IIS Express, WebMatrix/WebPages (Razor), etc (slides)

The presentation materials can be downloaded from the slides link after each title above.

-

Teaching high school kids web programming (Part II)

A few months ago, I thought of teaching high school kids ASP.NET programming. I let the idea sit for a few months. Now that summer has started, I am thinking about it again. The following exchange between InfoQ and Microsoft's Scott Hunter caught my attention:

Web Matrix is the tool for Web Pages, their lightweight IDE to compete directly with PHP.

Three ways to create web pages using ASP.NET and Visual Studio:

- Web Pages: Extremely light weight, easy to build pages. No infrastructure needed, very much like PHP or classic ASP. Full control over the HTML being generated.

- MVC: Same light-weight view engine as Web Pages, but backed by the full MVC framework. Full control over the HTML being generated. Upgrade cycle is approximately 1 year.

- Web Forms: Component-based web development. Use when time to delivery is essential. Especially suited for internal development where full control over the look and feel isn’t essential. Upgrade cycle is tied to the core .NET Framework.

Scott went further to mention that “the Razor view model was designed for Web Pages first, then adopted by MVC 3.”

So ASP.NET Web Pages is exactly what I needed. Although I have heard of WebMatrix and Razor for a while (I even attempted to convert a webform to Razor), I had failed to take WebMatrix seriously. We already have Visual Studio and the free Express edition. I always thought Razor was just another view syntax to save a few keystrokes until I realized that Web Pages could be used without MVC. I found several tutorials on Web Pages:

The ASP.NET WebMatrix Tutorial (You may download the same material as a PDF eBook)

Web Development 101 using WebMatrix

Web Camp WebMatrix training kit

These tutorials have everything that I need to meet my original goals without any more work from me:

- Be able to teach for just a few hours and get kids productive.

- Close to HTML without the complication of OOP and black box (e.g., the complicated life cycle of WebForm).

- Simple data access with SQL statements.

Just a side note: although the tutorials use WebMatrix, I could do everything in Visual Studio as well (though I did not try the Express edition). I just needed to install ASP.NET MVC3 with the latest Visual Studio tools, which installs the Razor tools. Then create an empty website, as the Razor tools are currently not in the template for an empty web application.

-

Passing managed objects to unmanaged methods as method argument

It is well documented how to expose a .NET class as a COM object through the COM callable wrapper. However, it is not well documented how to pass a .NET object to a COM method as an argument and have the COM method treat the .NET object as a COM object. It turns out all we need to do is add [ComVisible(true)] to the classes that we need to pass to the COM method; the runtime recognizes that we are passing the object to COM and generates the COM callable wrapper automatically.

However, there are some impedance mismatches between COM and .NET. For example, .NET supports method overloading while COM does not. COM objects often use optional arguments so that the client can pass a variable number of arguments to the server. I used the following pattern to expose the Assert object to the unmanaged VBScript engine in my VBScriptTest project in ASP Classic Compiler:

    [ComVisible(false)]
    public void AreEqual(object expected, object actual)
    {
        Assert.AreEqual(expected, actual);
    }

    [ComVisible(false)]
    public void AreEqual(object expected, object actual, string message)
    {
        Assert.AreEqual(expected, actual, message);
    }

    [ComVisible(true)]
    public void AreEqual(object expected, object actual, [Optional] object message)
    {
        if (message == null || message == System.Type.Missing)
            AreEqual(expected, actual);
        else
            AreEqual(expected, actual, message.ToString());
    }

Note that I marked the first two overloaded methods with [ComVisible(false)] so that they are only visible to other .NET objects. Next, I created a COM-callable method with an optional parameter. The optional parameter must have the object type; the runtime will supply the System.Type.Missing object if the parameter is not supplied by the caller. So in the body of the method, I just need to check whether the parameter equals System.Type.Missing to determine whether the parameter was passed.

-

/Platform:AnyCPU, /Platform:x64, /Platform:x86, what do they mean

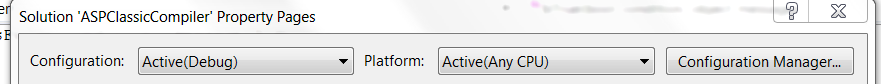

When we build a .net solution, we often see the Platform choice in the configuration manager:

Besides AnyCPU, the other choices are x86, x64, and Itanium. What do they mean? According to MSDN, “Anycpu” compiles the assembly to run on any platform, while the other 3 choices compile the assembly for 32-bit x86, 64-bit x64 and 64-bit Itanium respectively.

On a 64-bit Windows operating system:

- Assemblies compiled with /platform:x86 will execute on the 32 bit CLR running under WOW64.

- Executables compiled with /platform:anycpu will execute on the 64 bit CLR.

- DLLs compiled with /platform:anycpu will execute on the same CLR as the process into which they are loaded.

If a .NET application runs as a 64 bit process, it cannot load a 32 bit ActiveX component in-process. If the application uses such a component, we have two choices:

- Host the 32 bit component in an external process, such as COM+ Server Application, or

- Run the .NET application as a 32 bit application.

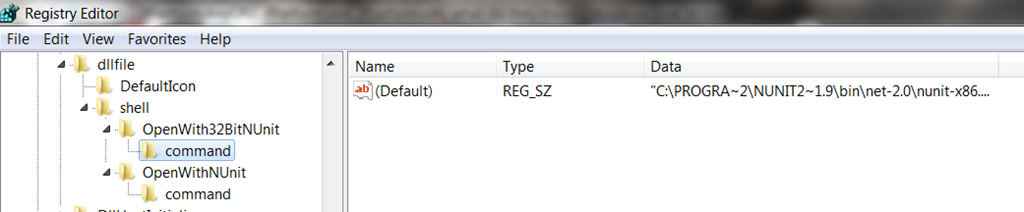

The VBScriptTest project in ASP Classic Compiler contains an unmanaged test. It hosts msscript.ocx so that I can compare my .NET implementation of VBScript against the original VBScript engine from Microsoft. In order to use the 32 bit msscript.ocx, I need to run my unit test in a 32 bit process. NUnit has a 32 bit version of the test runner called NUnit-x86.exe. To use the 32 bit runner, I registered a new shell extension as shown below so that I can use the “Run 32 bit NUnit” context menu to invoke the 32 bit NUnit test:

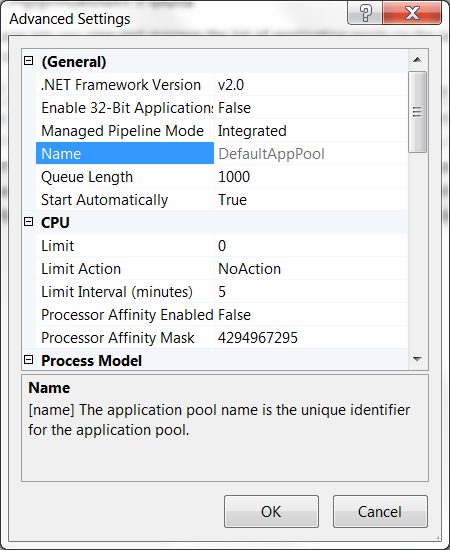

Lastly, how do we run ASP.NET in 32 bit? It turns out that this is controlled by the application pool. If I set “Enable 32-Bit Applications” to true in the Advanced Settings of the application pool, the ASP.NET application will run in 32 bit.

-

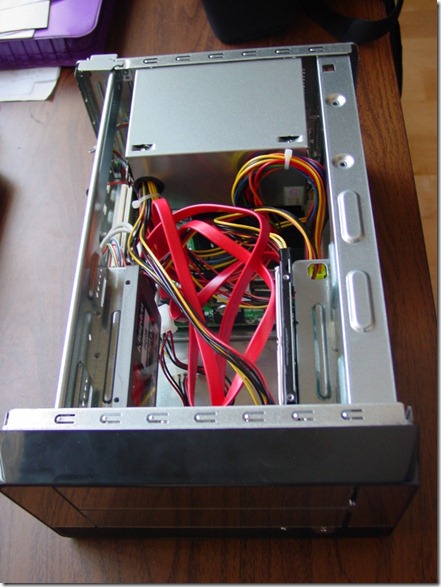

Build a very green Windows Server

My old server consumes about 80W. Given my top-tier electricity cost of $0.24/kWh, it costs me about $14 in electricity each month, and generates considerable noise and heat. Recently, I set out to build a greener server. I bought an Intel Atom Dual-Core D525MW Fan-less Motherboard for $80 and an Apex MI-100 mITX case for $40. I also bought a Kingston 64GB SSD for $75 after rebate. I used 2 x 2GB DDR3 SODIMM RAM and a Toshiba 250GB notebook drive salvaged from my recent laptop upgrade. So my total out-of-pocket expense is just under $200 before tax. If I include the cost of the retired parts, the hardware cost is about $300. I cloned my Windows 2003 Server OS from my old server onto my new server. Although Intel does not support server operating systems on desktop boards, I was actually able to install all the necessary drivers from the Intel CD even though the setup program reported installation failure on 3 out of 4 drivers. The new server is barely audible; it is quieter than an outlet timer. Like any machine powered by an SSD, it boots up in seconds.

So how much power does it consume? My Kill-A-Watt meter tells me that it consumes just under 30 watts at idle. To put that in perspective, my previous Dish Network receiver and my current Time Warner HD receiver each consume about 20 watts at idle. It is quite adequate as my server.
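The monthly cost figure can be checked with a few lines of arithmetic. This is only a rough sketch: it assumes the server runs around the clock, a 30-day month, and that the whole bill is charged at the $0.24/kWh top-tier rate. The function name `monthly_cost` is made up for illustration.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: always on, 30-day month


def monthly_cost(watts, rate_per_kwh):
    """Electricity cost in dollars for one month of continuous operation."""
    kwh = watts / 1000 * HOURS_PER_MONTH
    return kwh * rate_per_kwh


RATE = 0.24  # top-tier rate quoted in the post, in $/kWh

old_cost = monthly_cost(80, RATE)  # old server, about 80 W
new_cost = monthly_cost(30, RATE)  # new server, "just under 30 W", so an upper bound
saving = old_cost - new_cost

print(f"old: ${old_cost:.2f}/month, new: ${new_cost:.2f}/month")
print(f"saving: ${saving:.2f}/month")
```

Under these assumptions the old server comes out at a little under $14 a month and the new one at a little over $5.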

-

ASP Classic Compiler: How to contribute

For the project to be viable, we need contributors of all technical levels, from writing simple test cases and examples, to working on the compiler. I have written a wiki page on my project site detailing how to contribute.

-

ASP Classic Compiler: the unit testing framework is released

In my previous post, I stressed the importance of having a unit testing framework to move forward with this project. I was facing the decision of whether to use NUnit or MSTest. After some thought, I came to the conclusion that the choice of unit testing framework is not critical, at least for now. For a language project, unit testing usually falls into two categories: the testing of a parser and the testing of generated code. For parser testing, most of the work lies in comparing the AST generated by the parser with the expected AST. Paul Vick has an excellent framework in his VBParser work. For testing of the generated code, it is best to have a unit testing framework that is self-contained in the target language so that it can most accurately test the semantics of the language. For example, Javascript has the QUnit framework and ASP Classic has the ASPUnit framework.

So I went ahead to release my own unit testing framework for VBScript. I created an interface called IAssert that has the following methods:

void AreEqual(object expected, object actual);

void AreEqual(object expected, object actual, string message);

void AreNotEqual(object notExpected, object actual);

void AreNotEqual(object notExpected, object actual, string message);

void Fail();

void Fail(string message);

void IsFalse(bool condition);

void IsFalse(bool condition, string message);

void IsTrue(bool condition);

void IsTrue(bool condition, string message);

These are the minimum set of methods found in most unit testing frameworks. We can always add more when needed. Note that I did not include IsEmpty or IsNothing methods. That is because I believe we should use the language's built-in functions to best reflect the language semantics. For example, instead of having an IsEmpty method in the testing framework, we should use IsTrue(IsEmpty(myVar)), where IsEmpty is the VBScript built-in function.

In environments where we have control over the host, the host will supply an Assert object that can route the testing results to one of the existing unit testing frameworks, such as NUnit, so that we can reuse the test runners and reporting functionality. In environments where we do not have control over the host, we can supply an Assert class in VBScript itself, much like what ASPUnit does.

If you look at my VBScriptTest project, you will notice that it uses NUnit framework. It has a single NUnit test case that walks the entire hierarchy under the VBScripts folder. When we need to write a new test case, we just simply add a .vbs file under the VBScripts folder. The following is an example VBScript unit test case:

    dim a
    Assert.IsTrue(IsEmpty(a), "Unassigned variable must be empty")

Because I use a single NUnit test case to run all the VBScripts, the message parameter is important for identifying which VBScript test failed.

-

ASP Classic Compiler: the next step

I opened the source code for my ASP Classic Compiler 2 days ago. As this project was started as a research project, proof of concept has been a major goal. I have cut corners whenever possible to bring out features fast. Going forward, there are many things that need to be tightened up. The following are my top priorities:

- Unit testing: You eager downloaders may have noticed that my project is conspicuously missing unit tests. I intentionally took out my unit tests when I uploaded because my previous unit tests were not well organized. I want to give us a chance for a fresh start. Since we will have refactoring and re-architecture of the code generator, unit testing is going to carry this project. Like many open source projects, NUnit or MSTest is a decision to make. I have created this thread in my forum to solicit ideas. I don’t quite need StackOverflow style discussions as there are already plenty. But if you look at recent high-profile switches in either direction, you would know this is not an easy decision.

- Making it easier for people to diagnose problems. A debugger is not going to happen easily, as I have already tried twice. But with source code, it is still possible for people to diagnose problems. This will help reduce the frustration level and get bugs reported and fixed.

- VBScript 5.0 support. This is my real top goal since many people requested it, but I could not get there without the first two items. I want to take over the memory management since I have already pushed the DLR to the limit. Controlling the memory management will open up the possibility of debugging. The details deserve a blog post of their own.

- Performance improvement when calling CLR static methods. The TypeModel used in VBScript.NET does not take advantage of call-site caching and is hence very slow. Fortunately, this can be corrected fairly easily.

- Optimize for multiple string concatenations. This has been a habit in many ASP projects. I can discover this in code analysis and generate the code behind the scene using StringBuilder. This is a pretty fun research project and it is not too hard.

- Type checking in many places can still be optimized for callsite-caching.

- Rewrite of the parser. Currently, my VBScript parser is modified from Paul Vick’s VBParser, which was written in VB.NET. I have some concerns about VB.NET’s timely availability on new platforms (remember Windows Phone 7?). However, since my code generator is tightly coupled to the abstract syntax tree (AST) in the parser project, this is going to be messy work.

-

ASP Classic Compiler is now open source

After weeks of preparation, I have opened the source code for my ASP Classic Compiler under the Apache 2.0 license. I conceived the project in 2009 when I joined a company that had a large amount of ASP Classic code. The economy was very slow at the time, so I had some energy left to start the work as a hobby. As the economy recovered, I got busier with my day job. We converted most of our ASP Classic code to .NET, so this project is no longer useful for my own purposes. However, I truly hope that this project will be useful to those who can still use it.

The project has come a long way toward implementing most features in the VBScript 3.0 standard. It is capable of running the Microsoft FMStock 1.0 end-to-end sample application. I chose VBScript 3.0 for my initial implementation because VBScript 3.0 is a language with dynamic typing and static membership. ASP Classic Compiler is faster than most of the Javascript implementations and is about 3 times faster than the IIS ASP interpreter in limited benchmark tests. Many users have requested VBScript 5.0 features such as Class, Execute and Eval. VBScript 5.0 has dynamic membership like Javascript. To avoid the performance hit, I have done some research on optimizing the performance for the mostly static scenario, but have yet to find time to implement it.

For this project to be successful, I truly need community involvement. In the next few days, I will outline what needs to be done. I will stay on as the project lead until we find a suitable new leader.

-

New VB.NET Syntax since VS2005

I have not used VB.NET seriously since VS2003. Recently, I had to maintain an old VB.NET application, so I had to spend a bit of time finding out the VB equivalents of the new C# syntax introduced in VS2005 or later:

| Category | C# | VB.NET |
| --- | --- | --- |
| Generics | List<string> | List(Of String) |
| Object Initializer | new Person() { First = "a", Last = "b" } | New Person() With {.First = "a", .Last = "b"} |
| Anonymous Type | var a = new { First = "a", Last = "b" }; | Dim a = New With {.First = "a", .Last = "b"} |
| Array or Collection Initializer | int[] a = {0, 2, 4, 6, 8}; or int[] a = new int[] {0, 2, 4, 6, 8}; | Dim a() As Integer = {0, 2, 4, 6, 8} or Dim a() As Integer = New Integer() {0, 2, 4, 6, 8} |
| Dictionary Initializer | var d = new Dictionary<int, string> {{1, "One"}, {2, "Two"}}; | Dim d As New Dictionary(Of Integer, String) From {{1, "One"}, {2, "Two"}} |
| Lambda Expression | x => x * x | Function(x) x * x |
| Multiline Lambda Expression | (input parameters) => { statements; } | Function(input parameters)<br>statements<br>End Function |

-

Relating software refactoring to algebra factoring

Refactoring is a fairly common word in software development. Most people in the software industry have some idea of it. However, it was not clear to me where the term comes from and how it relates to the factor we learned in algebra. A Bing search indicates that the word refactoring originates from Opdyke’s Ph.D. thesis, where it is used for restructuring object-oriented software, but it is not clear how it relates to the factor we normally know. So I attempt to make some sense out of it after the fact.

In algebra, we know a factor is a part that participates in a multiplication. For example, if C = A * B, we call A and B factors of C.

Factors are often used to simplify computation. Taking the reverse distribution identity of multiplication as an example, C * A + C * B can be rewritten as C * (A + B). This will improve computational efficiency if multiplication is far more expensive than addition. C in this case is called the common factor of the two terms, C * A and C * B. If there are no common factors between A and B, we call C the greatest common factor (GCF) of the two terms.

To identify the GCF of two numbers, we usually find all the prime factors of the numbers and take each shared prime factor raised to the highest power common to both. For example, if we want to find the GCF of 72 and 120, we would write 72 as 2^3 * 3^2 and 120 as 2^3 * 3^1 * 5^1. Then the GCF would be 2^3 * 3^1 = 24. (Note that in a real computer program we usually calculate the GCF using Euclid’s algorithm.)
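Both approaches mentioned above can be sketched in a few lines of Python. The function names here are my own choices for illustration; `prime_factors` uses plain trial division, which is fine for small numbers such as 72 and 120.

```python
from collections import Counter
from math import prod


def prime_factors(n):
    """Return a Counter mapping each prime factor of n to its exponent."""
    factors = Counter()
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] += 1
            n //= p
        p += 1
    if n > 1:  # whatever remains is itself prime
        factors[n] += 1
    return factors


def gcf_by_factoring(a, b):
    """GCF from prime factorizations: keep the smaller exponent of each shared prime."""
    common = prime_factors(a) & prime_factors(b)  # Counter '&' takes the minimum count
    return prod(p ** k for p, k in common.items())


def gcf_euclid(a, b):
    """GCF using Euclid's algorithm."""
    while b:
        a, b = b, a % b
    return a


print(dict(prime_factors(72)), dict(prime_factors(120)))
print(gcf_by_factoring(72, 120), gcf_euclid(72, 120))
```

The factoring version mirrors the hand calculation, while Euclid's algorithm reaches the same answer without factoring either number.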

Now let us see an analogy to algebra factoring in Boolean algebra. There is an identity (A and B) or (A and C) = A and (B or C). If we relate “and” to * and “or” to +, the above identity is very similar to the distribution identity in algebra.
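Since each variable can only be true or false, the Boolean identity can be checked by brute force over all eight combinations. A minimal sketch (the function name is hypothetical):

```python
from itertools import product


def distributive_law_holds():
    """Check (A and B) or (A and C) == A and (B or C) for every truth assignment."""
    return all(
        ((a and b) or (a and c)) == (a and (b or c))
        for a, b, c in product([False, True], repeat=3)
    )


print(distributive_law_holds())
```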

In software refactoring, we usually identify the longest common sequence that is duplicated (let me set parameterization aside for simplicity) and try to reuse the sequence as a single unit. How do we find an analogy to algebra? Let us consider an instruction in a sequence as a function that takes the state of the computer as input and returns the modified state. Then a sequence of instructions can be written as f1(f2(f3(…fn()))). If we replace the () symbol with *, we can write the above as f1 * f2 * f3 * … * fn. Now we use + to denote any situation where we need branching. For example, we could write if (cond) { A } else { B } as A + B. Now let us apply this notation to a more complex example:

    if (cond) {
        A; B; C;
    } else {
        D; B; E;
    }

A to E above are blocks of code. Using the above notation, we can rewrite it as A * B * C + D * B * E. If we try to factor this expression, we get (A + D) * B * (C + E) (note that this is a bit different from normal algebra factoring). If we now expand the above expression back into code, we get something like:

    if (cond) { A; } else { D; }
    B;
    if (cond) { C; } else { E; }

That is in fact one of the fairly common types of refactoring.
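To see that the two shapes behave the same, here is a small Python sketch in which each block simply records its name in a trace. It relies on an assumption the notation above leaves implicit: none of the blocks A, D or B changes the value of cond, otherwise the second test of cond could take a different branch.

```python
def original(cond):
    """The duplicated form: B appears in both branches."""
    trace = []
    if cond:
        trace += ["A", "B", "C"]
    else:
        trace += ["D", "B", "E"]
    return trace


def refactored(cond):
    """The factored form: (A + D) * B * (C + E)."""
    trace = []
    if cond:
        trace.append("A")
    else:
        trace.append("D")
    trace.append("B")  # the common block now appears only once
    if cond:
        trace.append("C")
    else:
        trace.append("E")
    return trace


for cond in (True, False):
    print(cond, original(cond), refactored(cond))
```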

-

Teaching high school kids ASP.NET programming

During the 2011 Microsoft MVP Global Summit, I have been talking to people about teaching kids ASP.NET programming. I want to work with volunteer organizations to provide kids volunteer opportunities while learning technical skills that can be applied elsewhere. The goal is to teach motivated kids enough skill to be productive with no more than 6 hours of instruction. Based on my prior teaching experience of college extension courses and involvement with high school math and science competitions, I think this is quite doable with classic ASP but a challenge with ASP.NET. I don’t want to use ASP because it does not provide a good path into the future. After some considerations, I think this is possible with ASP.NET and here are my thoughts:

- Create a framework within ASP.NET for kids programming.

- Use an existing editor. No extra compiler or IntelliSense work needed.

- Use a subset of C# like a scripting language. Teach data types, expressions, statements, if/for/while/switch blocks and functions. Use existing classes but no class creation and no OOP.

- Linear rendering model. No complicated life cycle.

- Bare-metal HTML with some MVC style helpers for widget creation; ASP.NET controls are optional. I want to teach kids to understand something and avoid black boxes as much as possible.

- Use SQL for CRUD with a helper class. Again, I want to teach understanding rather than black boxes.

- Provide a template to encourage clean separation of concerns.

- Provide a conversion utility to convert code that uses the template to ASP.NET MVC. This will allow kids with AP Computer Science knowledge to step up to ASP.NET MVC.

Let me know if you have thoughts or can help.