Serge van den Oever [Macaw]

SharePoint RIP. Azure, Node.js, hybrid mobile apps

-

SPDevExplorer – edit SharePoint content from within Visual Studio (1)

There is a great project http://SPDevExplorer.codeplex.com by TheKid that allows you to edit SharePoint content from within Visual Studio. This is especially handy when creating SharePoint Publishing sites where you are editing master pages and page layouts. Most people do this in SharePoint Designer, hating the fact that SharePoint Designer messes with your code and sometimes locks files mysteriously. SPDevExplorer allows you to do this from Visual Studio, using the great Visual Studio editor.

See http://weblogs.asp.net/soever/archive/tags/SPDevExplorer/default.aspx for all my posts on SPDevExplorer.

The release on CodePlex is a bit old and had some issues, so I decided to dive into the code and fix them. I published my first updated version as an issue on the project, because that allowed me to add attachments. See http://spdevexplorer.codeplex.com/WorkItem/View.aspx?WorkItemId=7799 for the updated version; both binaries and source code are included.

The biggest changes I made:

- Https is now supported

- It is now possible to see and edit all files

I solved the following issues:

- Converted the project into a Visual Studio 2008 project

- Corrected the spelling error Domin into Domain, and made some other texts consistent

- Generated a strong-name key for the SPDevExplorer.Solution project. I got an error when installing the WSP that the assembly was not strong-name signed.

- Cookies were retrieved on an empty cookies object; this led to an object not found exception

- Several changes to support HTTPS, including changing the UI so that the full path is shown in the tree

- You now see https://mysite instead of just mysite.

- Added "Explore working folder..." on site, so the cache on the local file system can be found. I want to turn this into a feature to add files to the cache folders and then be able to add these additional files.

- Added "Show info..." on files and folders, which shows the cached XML info on the folder/file

- On delete file/folder, ask for confirmation

- On delete file/folder, refresh the parent view to make sure it is correct again, and make the next node current, or the previous node if there is no next node

- Made keyboard interaction work: KeyPress was used, which didn't work; now KeyUp is used

- Del on keyboard now works correctly for deleting files/directories

- Added F5 on the keyboard for refresh: the parent folder is refreshed if a file is selected, the current folder is refreshed if a folder is selected

- Removed (Beta) from name in window title

- Moved "SharePoint Explorer" from the "View" menu to the "Tools" menu, a more appropriate place

- Added the option "Show all folders and files" on a site. Normally there is a list of hidden folders, but this also hides files you might want to edit, like files in the Forms folders of lists

- Removed adding the list of webs to a site; it gave an error and the sites were never added. All sites must be added explicitly using "connect...". I think it is also better this way.

In a future post I will show some of the features of this great tool in a video.

Note that it is not possible to edit the web parts in web part pages with this tool (web parts are not part of the page), and that it is not possible to edit pages in the Pages document library, because the actual content is managed in the metadata of the connected content type.

-

LiveWriter finally working again with my weblogs.asp.net blog!

Thanks to Joe Cheng, who was so kind as to respond to my previous post on the topic, I finally have LiveWriter working again against my blog.

The metablog handler needs to be: http://weblogs.asp.net/metablog.ashx

In the past this was: http://weblogs.asp.net/blogs/metablog.ashx, and it was changed without notification! At least I didn’t receive one!
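LiveWriter publishes through XML-RPC calls such as blogger.getUsersBlogs and metaWeblog.newPost, which it sends to the metablog handler. The following Python sketch is only a rough illustration and is not LiveWriter's own code. It builds such a request with the standard xmlrpc.client module, and the empty application key and the credentials are placeholders. It also shows that a body that is not an XML-RPC response, such as an HTML page, gets rejected. That is one plausible way to end up with an "invalid response document" error like the ones in my earlier post.

```python
import xmlrpc.client

# The handler URL from this post (the old /blogs/metablog.ashx path stopped working).
ENDPOINT = "http://weblogs.asp.net/metablog.ashx"


def build_get_users_blogs(appkey: str, username: str, password: str) -> str:
    """Return the XML-RPC request body for blogger.getUsersBlogs(appkey, username, password)."""
    return xmlrpc.client.dumps((appkey, username, password), methodname="blogger.getUsersBlogs")


def parse_response(body: str):
    """Return the single result of an XML-RPC response; raises if body is not one."""
    params, _method_name = xmlrpc.client.loads(body)
    return params[0]


def get_users_blogs(username: str, password: str):
    """Perform the real call over the network (requires valid credentials)."""
    proxy = xmlrpc.client.ServerProxy(ENDPOINT)
    return proxy.blogger.getUsersBlogs("", username, password)
```

Only build_get_users_blogs and parse_response work offline. get_users_blogs needs network access and a working account.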

Joe, thanks again! Time to get blogging again!

-

Macaw Vision on SharePoint Release Management

Developing SharePoint solutions is fun, especially with our Macaw Solutions Factory, because we have all the tools to go from development to test to acceptance and to production. But then you want to make a modification to your version 1.0 SharePoint solution. How do you do that? We know how... with our Site Management Tool, which is part of the Macaw Solutions Factory! Read all about our vision and concepts in the blog post by my colleague Vincent Hoogendoorn: Macaw Vision on SharePoint Release Management, and let us know what you think of it.

Posts available so far on the Macaw Solutions Factory:

- Factory Vision, Part 1: Introducing the Macaw Solutions Factory

- Factory Overview, Part 1: A Bird’s Eye View of the Macaw Solutions Factory

- Macaw Vision on SharePoint Release Management

And if you are interested, track us on:

- Weblog Serge van den Oever: http://weblogs.asp.net/soever

- Twitter Serge van den Oever: http://www.twitter.com/svdoever

- Weblog Vincent Hoogendoorn: http://vincenth.net

- Twitter Vincent Hoogendoorn: http://www.twitter.com/vincenth_net

-

Factory Overview, Part 1: A Bird’s Eye View of the Macaw Solutions Factory

A very interesting and extensive overview of our Macaw Solutions Factory has just been published by my colleague Vincent Hoogendoorn. It is the first installment in our "Macaw Solutions Factory Overview" blog post series: Factory Overview, Part 1: A Bird’s Eye View of the Macaw Solutions Factory. This blog post touches on all the work I have been doing over the last few years. Check it out; I think it is impressive, and it gives a real behind-the-scenes look at how our company and our customers are doing software projects. We will continue with a lot more posts, for example on how we do end-to-end SharePoint development with the Macaw Solutions Factory.

The post also describes one of the tools I open-sourced lately: the CruiseControl.NET Build Station. This is a "local build server" running in a Windows Forms application that you can use on your local machine to do builds of your current code tree, and of the code tree as available in source control.

-

Factory Vision, Part 1: Introducing the Macaw Solutions Factory

We finally started our public "coming out" on a project we have been working on for a few years already: the Macaw Solutions Factory.

Vincent just posted the first installment in our "Macaw Solutions Factory Vision" blog post series: Factory Vision, Part 1: Introducing the Macaw Solutions Factory.

We are very interested in your feedback.

-

Help: Windows LiveWriter not working anymore against http://weblogs.asp.net?

I almost stopped blogging because Windows LiveWriter stopped working against my http://weblogs.asp.net account on three different platforms.

I get the following error when trying to post with LiveWriter:

The response to the metaWeblog.newPost method received from the weblog server was invalid:

Invalid response document returned from XmlRpc server

or when I am logged in on the administration part of the web site and try to post using LiveWriter:

An unexpected error has occurred while attempting to log in:

Invalid Server Response - The response to the blogger.getUsersBlogs method received from the weblog server was invalid:

Invalid response document returned from XmlRpc server

Do others experience problems as well? Does anyone know a solution?

Regards, Serge

-

PowerShell: Return values from a function through reference parameters

PowerShell has a special [ref] keyword to specify which parameters are used in a function to return values. Its usage is not immediately clear, however.

If the type of the variable to update is a value type, use the [ref] keyword in the declaration of the function parameter, this specifies that a "pointer" to the variable will be passed.

To pass the "pointer" to the variable, use ([ref]$variable) to create the pointer, and pass this as the parameter. To set the value of the variable pointed to by the "pointer", use the .Value property.

Simple example to show what [ref] does:

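C:\Program Files\PowerGUI> $zz = "hoi"
C:\Program Files\PowerGUI> $xz = [ref]$zz
C:\Program Files\PowerGUI> $xz

Value
-----
hoi

C:\Program Files\PowerGUI> $xz.Value = "dag"
C:\Program Files\PowerGUI> $zz
dag

This is only required when the function has to replace the caller's value: for value types, and also for immutable objects such as the string in this example. It is not required to change the contents of a mutable reference type like, for example, a Hashtable.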
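Python has no [ref] keyword. As an analogy only, the sample that follows can be mimicked with an explicit box object. Ref is a hypothetical helper here and is not a Python or PowerShell API. The number and the string need the box, while the dict, like the Hashtable, is changed in place:

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Ref:
    """Hypothetical stand-in for PowerShell's [ref]: a box whose .value the callee may replace."""
    value: Any


def fn(arg1: Ref, arg2: Ref, arg3: dict) -> None:
    arg1.value = 1                         # replaces the caller's number through the box
    arg2.value = "overwrite"               # strings are immutable, so they need the box too
    arg3["key"] = "overwrite hash value"   # a dict is mutable: changed in place, no box needed


x = Ref(0)
y = Ref("original")
z = {"key": "original hashvalue"}
fn(x, y, z)
print(x.value, y.value, z["key"])  # 1 overwrite overwrite hash value
```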

Some sample code:

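function fn
{
    param
    (
        [ref]$arg1,
        [ref]$arg2,
        $arg3
    )
    $arg1.Value = 1                      # value type: set through the reference
    $arg2.Value = "overwrite"            # string: set through the reference
    $arg3.key = "overwrite hash value"   # Hashtable: modified in place, no [ref] needed
}

$x = 0
$y = "original"
$z = @{"key" = "original hashvalue"}
$x       # 0
$y       # original
$z.key   # original hashvalue
fn -arg1 ([ref]$x) -arg2 ([ref]$y) -arg3 $z
$x       # 1
$y       # overwrite
$z.key   # overwrite hash value

-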

Presentation on the Business Productivity Online Suite as given at DIWUG

Yesterday evening I gave a presentation on the Business Productivity Online Suite (BPOS) for the DIWUG. The session was hosted by Sparked in Amsterdam.

I uploaded the presentation to my SkyDrive.

The presentation starts with a general introduction to BPOS. It then zooms in on the features of SharePoint Online and gives some ideas on how you could integrate Silverlight applications with BPOS.

Part of the presentation is in Dutch, but I think most of the presentation can be understood by English readers as well because most computer related terms are in English.

Interesting links when starting developing for BPOS:

EU regulations:

-

Documenting PowerShell script code

The Macaw Solutions Factory contains a lot of PowerShell script code. A lot of the code is documented, but not in a consistent way, because there were no documentation standards available for PowerShell 1.0; we only had the very complex XML-based MAML documentation standard, which was not usable for inline documentation.

With the (upcoming) introduction of PowerShell 2.0, a new more readable documentation standard for PowerShell code is introduced. I did not find any formal documentation on it, only examples on the PowerShell team blog. There is even a script to create a weblog post out of a script containing this documentation format. See http://blogs.msdn.com/powershell/archive/tags/Write-CommandBlogPost/default.aspx for this script and an example of the documentation format.

The new documentation format uses a PowerShell 2.0 feature for multi-line comments:

<#
:
#>

Because this is not supported in PowerShell 1.0, we will write the documentation as follows to remain compatible:

#<#
#:
##>

This will allow us to change the documentation by removing the superfluous # characters when the code base is moved over to PowerShell 2.0, once it is released.

Documenting PowerShell scripts

Scripts can be provided with documentation in the following format:

#<#
#.Synopsis
#   Short description of the purpose of this script
#.Description
#   Extensive description of the script
#.Parameter X
#   Description of parameter X
#.Parameter Y
#   Description of parameter Y
#.Example
#   First example of usage of the script
#.Example
#   Second example of usage of the script
##>
param
(
    $X,
    $Y
)
:

function MyFunction
{
    #<#
    #.Synopsis
    #   Short description of the purpose of this function
    #.Description
    #   Extensive description of the function
    #.Parameter X
    #   Description of parameter X
    #.Parameter Y
    #   Description of parameter Y
    #.Example
    #   First example of usage of the function
    #.Example
    #   Second example of usage of the function
    ##>
    param
    (
        $X,
        $Y
    )
    :
}

:

Automatically generating documentation

The next step is to automatically generate documentation on all our scripts based on the new documentation format.

The best approach is probably to use PowerShell 2.0 to actually generate the documentation, because PowerShell 2.0 can parse PowerShell code, so we can easily determine the functions and their parameters.

A nice project for the future.
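Until then, a first step could be a small extractor for the PowerShell 1.0 compatible #<# ... ##> format above. The following is a Python sketch. It assumes the exact layout shown: a #<# line, #.Keyword lines that are each followed by # text lines, and a closing ##> line. parse_help is a hypothetical helper, and it only reads the first help block it finds:

```python
from __future__ import annotations

import re

# A '#<#' line (optionally indented), the help lines, and the closing '##>' line.
HELP_BLOCK = re.compile(r"^[ \t]*#<#[ \t]*\n(.*?)^[ \t]*##>", re.S | re.M)


def parse_help(script_text: str) -> list[tuple[str, str]]:
    """Return (keyword, text) pairs such as ('Parameter X', '...') from the first help block."""
    match = HELP_BLOCK.search(script_text)
    if match is None:
        return []
    entries: list[tuple[str, str]] = []
    for raw in match.group(1).splitlines():
        line = raw.strip()
        if not line.startswith("#"):
            continue                          # not part of the commented help block
        body = line[1:].strip()               # drop the single leading '#'
        if body.startswith("."):
            entries.append((body[1:], ""))    # new keyword: Synopsis, Parameter X, Example, ...
        elif entries and body:
            keyword, text = entries[-1]
            entries[-1] = (keyword, (text + " " + body).strip())
    return entries
```

Repeated keywords such as .Example are kept as separate entries, so the order of the examples is preserved.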

-

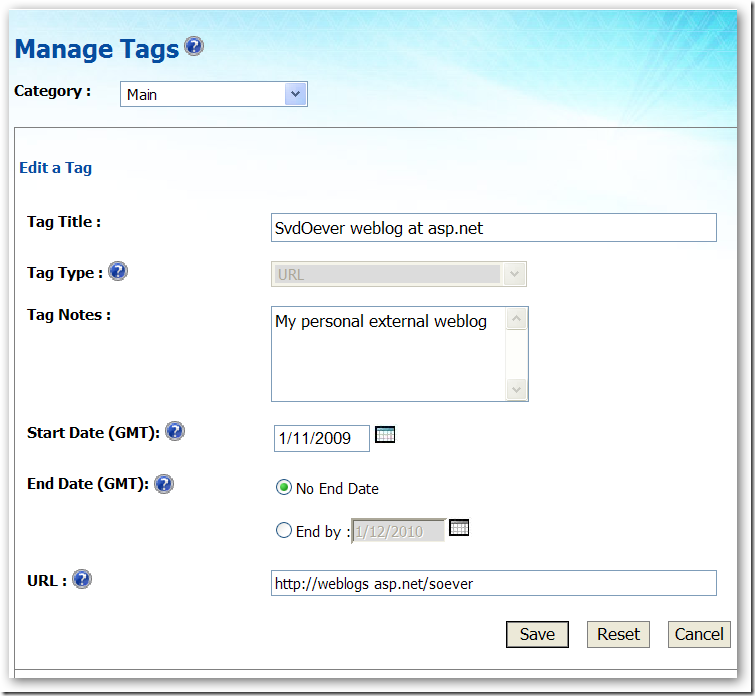

Microsoft Tag, funny idea, will it take off?

Microsoft came up with a simple, funny and possibly really effective idea: Microsoft Tag. You can create a nice visual tag consisting of colorful triangles, and when you scan this tag with the camera of your Internet-enabled cell phone you get connected to a web page, a vCard, a text note or a dialer (audio) advertisement. I just tried it out and created a tag to this weblog:

- I logged in to http://www.microsoft.com/tag with my Live ID account

- Created a new tag to my weblog:

- This results in my first tag on my personal tags site:

- I rendered the tag to a PDF by clicking on the Tag icon:

- I downloaded the Tag Reader application for my iPhone from the App Store (the link http://gettag.mobi just says that; it provides no download link)

- I snapped a photo of the on-screen PDF file, allowing the iPhone to access my location information (smart), and..... nothing. I got an error.

- I was a bit disappointed, so I fired up the introductory movie on http://www.microsoft.com/tag, snapped a really bad photo of a tag in this movie and guess what! It worked! It brought me to a Vista advertisement.

I think the idea is good. It can be used very well in print on posters, in advertisements, on the price and information cards next to a TV in a shop, etc. The introductory movie gives some good examples, like an advertisement for a concert with a Tag that brings you to the booking page, or a bus stop where the Tag brings you to an online bus schedule. I wonder if this will take off.

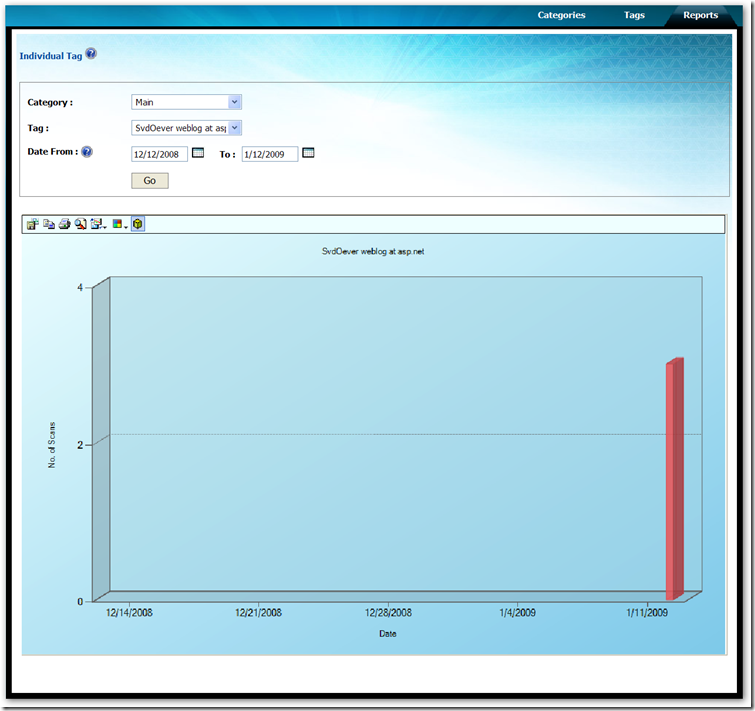

On the site you can get a report on snaps of your Tags:

And hey, I got three hits!! So the Tag was recognized, just the linking failed!

The Tag picture above should point to my weblog, maybe it will start working in a while. Try it all out, and I will let you know about the results in a later blog post.

It would be really interesting if the report would also use the location information that can be provided by the Tag Reader application (the iPhone version does if you allow this), so you know where your Tag was snapped.

Time to print a T-Shirt with my personal Tag...

-

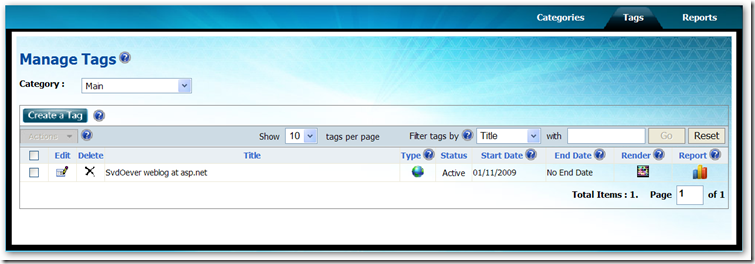

Inspecting SharePoint

When developing for SharePoint you absolutely need a SharePoint inspection tool, a tool that can navigate through the SharePoint object model and SharePoint content.

A nice tool is http://www.codeplex.com/spi that comes with source code, but it has a few issues:

- The latest release 1.0.0.0 is old

- A newer version is available, but you must compile it yourself from the code repository (I included a compiled version on the CodePlex site at http://www.codeplex.com/spi/WorkItem/View.aspx?WorkItemId=8785)

- It only shows a small subset of SharePoint information, for example you can't see content type definitions, fields, or SharePoint content

Another great tool is Echo SharePoint Spy. It can be downloaded at http://www.echotechnology.com/products/spy. In their own words:

"This powerful free tool will allow you to spy into the internal data of SharePoint and compare the effects of making a change. Sharepoint Spy also allows you to compare settings between sites, lists, views, etc helping you troubleshoot configurations."

You need to register and will get an e-mail with download details and a registration key.

When I tried to install the Echo SharePoint Spy tool on my Windows Server 2008 development server it refused. The installer is an MSI file, and after some googling I stumbled upon a weblog post on how to extract files from an MSI file using the MSIerables tool that can be found at Softpedia. After opening the MSI file you get the following view:

After extracting the SharePointSpy.exe file you can just run it on Windows Server 2008.

The Echo SharePoint Spy tool has some cool features:

- Show the field definitions of a list and their internal mapping to the database field

- Show the schema of a view: right-click in the right window (Internet Explorer window) and select View Source to see the actual XML in Notepad.

You can compare any two objects by right-clicking an object and selecting "Compare this object with...", then selecting a second object and choosing "... compare with this item".

Check them out!

-

Powershell output capturing and text wrapping: strange quirks... solved!

Summary

To capture (transcript) all output of a Powershell script, and to control the way text wrapping is done, use the following approach from, for example, a batch file or your Powershell code:

PowerShell -Command "`$host.UI.RawUI.BufferSize = new-object System.Management.Automation.Host.Size(512,50); `"c:\temp\testoutputandcapture.ps1`" -argument `"A value`"" >c:\temp\out.txt 2>&1

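Note the ` (backtick) characters: when this line is run from PowerShell code, they escape the $ and " characters inside the double-quoted string, so that these are passed on literally to the new PowerShell process.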
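The >c:\temp\out.txt 2>&1 part of the command above is handled by the process that starts PowerShell, which is cmd.exe in the case of a batch file, and not by PowerShell itself. Below is a minimal Python sketch of the same caller-side redirection, assuming you start the script from a Python-based build step instead. run_and_capture and demo are hypothetical helpers. So that the demo runs anywhere, it uses a small Python child process instead of PowerShell:

```python
import os
import subprocess
import sys
import tempfile


def run_and_capture(command: list, log_path: str) -> int:
    """Run command and write its stdout and stderr into one file, like '>log 2>&1' in cmd.exe."""
    with open(log_path, "w", encoding="utf-8") as log:
        completed = subprocess.run(command, stdout=log, stderr=subprocess.STDOUT)
    return completed.returncode


def demo() -> str:
    """Capture a child that writes to both streams; on Windows the command could instead be
    ["PowerShell", "-Command", "..."] as in the summary above (not exercised here)."""
    child = [sys.executable, "-c",
             "import sys; print('to stdout', flush=True); print('to stderr', file=sys.stderr, flush=True)"]
    with tempfile.TemporaryDirectory() as folder:
        log_path = os.path.join(folder, "out.txt")
        run_and_capture(child, log_path)
        with open(log_path, encoding="utf-8") as log:
            return log.read()
```

The caller owns both redirected streams, so output from nested external commands ends up in the same file as well. This is the property that the rest of this post is after.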

The problem: output capture and text wrap

Powershell is a great language, but I have been fighting and fighting with Powershell on two topics:

- to capture the output

- to get it to output text at the maximum width that I want, without unexpected wrapping

After reading the manuals you would think that the PowerShell transcripting possibilities are your stairway to heaven. You just start capturing output as follows:

- start-transcript "c:\temp\transcript.txt"

- do your thing

- stop-transcript

Try this with the following sample file c:\temp\testoutputandcapture.ps1:

Start-Transcript "c:\temp\transcript.txt"
function Output
{
    Write-Host "Write-Host"
    Write-Output "Write-Output"
    cmd.exe /c "echo Long long long long long long long long yes very long output from external command"
    PowerShell -Command "Write-Error 'error string'"
}
Output
Stop-Transcript

When you execute this script you get the following output:

PS C:\temp> C:\temp\testoutputandcapture.ps1
Transcript started, output file is c:\temp\transcript.txt
Write-Host
Write-Output
Long long long long long long long long yes very long output from external command
Write-Error 'error string' : error string
Transcript stopped, output file is C:\temp\transcript.txt

If we now look at the generated transcript file c:\temp\transcript.txt:

**********************
Windows PowerShell Transcript Start
Start time: 20081209001108
Username : VS-D-SVDOMOSS-1\Administrator
Machine : VS-D-SVDOMOSS-1 (Microsoft Windows NT 5.2.3790 Service Pack 2)
**********************
Transcript started, output file is c:\temp\transcript.txt
Write-Host
Write-Output
**********************
Windows PowerShell Transcript End
End time: 20081209001108
**********************

The output from the external command (the texts Long long long long long long long long yes very long output from external command and Write-Error 'error string' : error string) is not captured!!!

Step one: piping output of external commands

This can be solved by appending | Write-Output to external commands:

Start-Transcript 'c:\temp\transcript.txt'
function Output
{
    Write-Host "Write-Host"
    Write-Output "Write-Output"
    cmd.exe /c "echo Long long long long long long long long yes very long output from external command" | Write-Output
    PowerShell -Command "Write-Error 'error string'" | Write-Output
}
Output
Stop-Transcript

This will result in the following output:

PS C:\temp> C:\temp\testoutputandcapture.ps1
Transcript started, output file is c:\temp\transcript.txt
Write-Host
Write-Output
Long long long long long long long long yes very long output from external command
Write-Error 'error string' : error string
Transcript stopped, output file is C:\temp\transcript.txt

Note that the error string Write-Error 'error string' : error string is not in red anymore.

The resulting transcript file c:\temp\transcript.txt now looks like:

**********************
Windows PowerShell Transcript Start
Start time: 20081209220137
Username : VS-D-SVDOMOSS-1\Administrator
Machine : VS-D-SVDOMOSS-1 (Microsoft Windows NT 5.2.3790 Service Pack 2)
**********************
Transcript started, output file is c:\temp\transcript.txt
Write-Host
Write-Output
Long long long long long long long long yes very long output from external command
Write-Error 'error string' : error string
**********************
Windows PowerShell Transcript End
End time: 20081209220139
**********************

This is what we want in the transcript file: everything is captured. But we need to put | Write-Output after every external command, and that is way too cumbersome if you have large scripts. For example, in our situation there is a BuildAndPackageAll.ps1 script that includes a lot of files and consists of thousands of lines of Powershell code in total. There must be a better way...

Transcripting sucks, redirection?

OK, so transcripting just does not do the job of capturing all output. Let's look at another method: good old redirection.

We go back to our initial version of the script, and do c:\temp\testoutputandcapture.ps1 > c:\temp\out.txt. This results in a c:\temp\out.txt file with the following contents:

Transcript started, output file is c:\temp\transcript.txt
Write-Output
Long long long long long long long long yes very long output from external command
Write-Error 'error string' : error string
Transcript stopped, output file is C:\temp\transcript.txt

We are missing the Write-Host output! Actually, the Write-Host output is the only line that ends up in the transcript file ;-)

This is not good enough, so here is another try. This time we use the Powershell command-line host on a slightly modified script c:\temp\testoutputandcapture.ps1, which will showcase our next problem:

function Output
{
    Write-Host "Write-Host"
    Write-Output "Write-Output Long long long long long long long long yes very long output from Write-Output"
    cmd.exe /c "echo Long long long long long long long long yes very long output from external command"
    PowerShell -Command "Write-Error 'error string'"
}
Output

We now do: Powershell -Command "c:\temp\testoutputandcapture.ps1" > c:\temp\out.txt 2>&1 (2>&1 means: redirect stderr to stdout). We capture stderr as well; you never know what it is good for. This results in:

Write-Host
Write-Output Long long long long long long long l
ong yes very long output from Write-Output
Long long long long long long long long yes very long output from external command
Write-Error 'error string' : error string

I hate automatic text wrapping

As you may notice some strange things happen here:

- A long output line generated by PowerShell code is wrapped (at 50 characters in the above case)

- A long line generated by an external command called from Powershell is not wrapped

The Powershell output is wrapped at 50 characters because the DOS box I started the command from is set to 50 characters wide. I did this on purpose to prove a point. OK, so you can set your console window really wide so that no wrapping is done? True. But you are not always in control of that. Examples are calling your Powershell script from a batch file that is executed through Windows Explorer, or through an external application, which in my case is CruiseControl.Net, where I may provide an external command.
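To make the effect concrete, here is a small Python simulation of wrapping every line at a fixed buffer width without regard for word boundaries. It is not PowerShell's actual formatting code, but the "l / ong" split above looks like this kind of wrapping. hard_wrap is a hypothetical helper:

```python
def hard_wrap(text: str, width: int) -> list:
    """Split text into chunks of at most `width` characters, ignoring word boundaries."""
    if width < 1:
        raise ValueError("width must be positive")
    return [text[start:start + width] for start in range(0, len(text), width)] or [""]


line = "Write-Output Long long long long long long long long yes very long output from Write-Output"
for chunk in hard_wrap(line, 50):   # a narrow console buffer splits the line mid-word
    print(chunk)
print(len(hard_wrap(line, 512)))    # a 512-wide buffer leaves it on one line
```

This is also why the fix at the end of this post is about the buffer width and not about the visible window.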

Powershell -PSConfigFile?... no way!

I hoped the solution would be in an extra parameter to the Powershell command-line host: -PSConfigFile. You can specify a Powershell console configuration XML file here that you can generate with the command Export-Console ConsoleConfiguration. This results in the ConsoleConfiguration.psc1 XML file:

<?xml version="1.0" encoding="utf-8"?>
<PSConsoleFile ConsoleSchemaVersion="1.0">
  <PSVersion>1.0</PSVersion>
  <PSSnapIns />
</PSConsoleFile>

I searched and searched for documentation on extra configuration like console width or something, but documentation on this is really sparse. So I fired up good old Reflector. After some drilling I ended up with the following code for the Export-Console command:

internal static void WriteToFile(MshConsoleInfo consoleInfo, string path)
{
    using (tracer.TraceMethod())
    {
        _mshsnapinTracer.WriteLine("Saving console info to file {0}.", new object[] { path });
        XmlWriterSettings settings = new XmlWriterSettings();
        settings.Indent = true;
        settings.Encoding = Encoding.UTF8;
        using (XmlWriter writer = XmlWriter.Create(path, settings))
        {
            writer.WriteStartDocument();
            writer.WriteStartElement("PSConsoleFile");
            writer.WriteAttributeString("ConsoleSchemaVersion", "1.0");
            writer.WriteStartElement("PSVersion");
            writer.WriteString(consoleInfo.PSVersion.ToString());
            writer.WriteEndElement();
            writer.WriteStartElement("PSSnapIns");
            foreach (PSSnapInInfo info in consoleInfo.ExternalPSSnapIns)
            {
                writer.WriteStartElement("PSSnapIn");
                writer.WriteAttributeString("Name", info.Name);
                writer.WriteEndElement();
            }
            writer.WriteEndElement();
            writer.WriteEndElement();
            writer.WriteEndDocument();
            writer.Close();
        }
        _mshsnapinTracer.WriteLine("Saving console info succeeded.", new object[0]);
    }
}

So the XML that we already saw is all there is... a missed chance there.
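Read back this way, the decompiled method can only ever write a PSVersion element and a list of PSSnapIn names. Below is a Python sketch that builds the same element structure. It assumes nothing beyond what WriteToFile shows, and console_file_xml is a hypothetical name. The sketch makes it clear that the format has no place for a setting such as the console width:

```python
import xml.etree.ElementTree as ET


def console_file_xml(ps_version: str, snapin_names: list) -> str:
    """Build the element structure written by WriteToFile (without the XML declaration)."""
    root = ET.Element("PSConsoleFile", ConsoleSchemaVersion="1.0")
    ET.SubElement(root, "PSVersion").text = ps_version
    snapins = ET.SubElement(root, "PSSnapIns")
    for name in snapin_names:
        ET.SubElement(snapins, "PSSnapIn", Name=name)   # one element per external snap-in
    return ET.tostring(root, encoding="unicode")
```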

A custom command-line PowerShell host?

I have written a few Powershell hosts already, for example one hosted into Visual Studio, and there I encountered the same wrapping issue which I solved by the following implementation of the PSHostRawUserInterface:

using System;
using System.Management.Automation.Host;

namespace Macaw.SolutionsFactory.DotNet3.IntegratedUI.Business.Components.Powershell
{
    /// <summary>
    /// Implementation of PSHostRawUserInterface.
    /// </summary>
    public class PowershellHostRawUI : PSHostRawUserInterface
    {
        :
        public override Size BufferSize
        {
            get
            {
                // The width is the width of the output, make it very wide!
                return new Size(512, 1);
            }
            set
            {
                throw new Exception("BufferSize - set: The method or operation is not implemented.");
            }
        }
        :
    }
}

I solved transcripting in my own Powershell hosts by capturing all text output done by Powershell through custom implementations of the PSHostUserInterface functions, which you need to implement anyway. This works like a charm.

The solution, without custom host...

I was just getting started on solving the transcripting and wrapping issues by implementing a Powershell host again, this time a command-line Powershell host, when I did one last digging step. What if you can set the BufferSize at runtime in your Powershell code? And the answer is.... yes, you can! Through the $host Powershell variable: $host.UI.RawUI.BufferSize = new-object System.Management.Automation.Host.Size(512,50). This says that the output may be at most 512 characters wide. The height is 50 here instead of 1 (as in my code), but the height value is not important in this case; it is used for paging in an interactive shell.

And this last statement gives us full control and solves all our transcripting and wrapping issues:

PowerShell -Command "`$host.UI.RawUI.BufferSize = new-object System.Management.Automation.Host.Size(512,50); `"c:\temp\testoutputandcapture.ps1`" -argument `"A value`"" >c:\temp\out.txt 2>&1

And this concludes a long journey into the transcripting and wrapping caves of Powershell. I hope this will save you the days of searching we had to put into it to tame the great but naughty Powershell beast.

-

Powershell: Generate simple XML from text, useful for CruiseControl.Net merge files

I had the problem that I needed to include ordinary text files in the CruiseControl.Net build output log file. This log file is a merge of multiple files in XML format, so I needed to get some text files into a simple XML format. I ended up with the following Powershell code to convert an input text file to a simple output XML file.

Powershell code:

function Convert-XmlString
{
    param
    (
        [string]$text
    )
    # Escape Xml markup characters (http://www.w3.org/TR/2006/REC-xml-20060816/#syntax)
    # '&' is replaced first, so the '&' of the other entities is not escaped again
    $text.replace('&', '&amp;').replace("'", '&apos;').replace('"', '&quot;').replace('<', '&lt;').replace('>', '&gt;')
}

function Convert-TextToXml
{
    param
    (
        $rootNode = 'root',
        $node = 'node',
        $path = $(Throw 'Missing argument: path'),
        $destination = $(Throw 'Missing argument: destination')
    )
    Get-Content -Path $path | ForEach-Object `
        -Begin {
            Write-Output "<$rootNode>"
        } `
        -Process {
            Write-Output " <$node>$(Convert-XmlString -text $_)</$node>"
        } `
        -End {
            Write-Output "</$rootNode>"
        } | Set-Content -Path $destination -Force
}

You can call this code as follows:

Convert-TextToXml -rootNode 'BuildAndPackageAll' -node 'message' -path 'c:\temp\in.txt' -destination 'c:\temp\out.xml'
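For comparison, here is a Python sketch of the same conversion. It assumes UTF-8 input, and convert_text_to_xml, convert_file and read_messages are hypothetical names. It relies on xml.sax.saxutils.escape, which escapes &, < and > (with & first) and accepts extra entities for the quote characters. The result is then parsed back as a well-formedness check:

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# Quote characters are not escaped by default; add them like Convert-XmlString does.
QUOTE_ENTITIES = {"'": "&apos;", '"': "&quot;"}


def convert_text_to_xml(lines, root_node: str = "root", node: str = "node") -> str:
    """Wrap every input line in <node>...</node> inside a single <root_node> element."""
    output = [f"<{root_node}>"]
    for line in lines:
        output.append(f" <{node}>{escape(line, QUOTE_ENTITIES)}</{node}>")
    output.append(f"</{root_node}>")
    return "\n".join(output) + "\n"


def convert_file(path: str, destination: str, root_node: str = "root", node: str = "node") -> None:
    """File-to-file variant, assuming UTF-8 text on both sides."""
    with open(path, encoding="utf-8") as source:
        lines = source.read().splitlines()
    with open(destination, "w", encoding="utf-8") as target:
        target.write(convert_text_to_xml(lines, root_node, node))


def read_messages(xml_text: str, node: str = "node") -> list:
    """Parse the generated XML back and return the text of each node element."""
    return [element.text or "" for element in ET.fromstring(xml_text).iter(node)]
```

The equivalent of the call above would be convert_file(r"c:\temp\in.txt", r"c:\temp\out.xml", "BuildAndPackageAll", "message").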

With the Convert-TextToXml call above, the file c:\temp\in.txt:

This is line 1
This is a line with the characters <, >, &, ' and "
This is line 3

will be converted to the file c:\temp\out.xml:

<BuildAndPackageAll>
 <message>This is line 1</message>
 <message>This is a line with the characters &lt;, &gt;, &amp;, &apos; and &quot;</message>
 <message>This is line 3</message>
</BuildAndPackageAll>

-

Moss 2007 StsAdm Reference Poster by Microsoft

All SharePoint developers and administrators will be familiar with the Stsadm.exe command line tool in the bin folder of the "12 hive", normally located at: C:\Program Files\Common Files\Microsoft Shared\web server extensions\12\BIN\STSADM.EXE. This tool has many available operations and an extensibility model. For a nice poster on all available operations (MOSS 2007 SP1) and available properties, see http://go.microsoft.com/fwlink/?LinkId=120150.

- See Index for Stsadm operations and properties (Office SharePoint Server) for MSDN documentation on Stsadm operations and properties.

- See Extending Stsadm.exe with Custom Commands for MSDN documentation on extending Stsadm.

- See the STSADM Custom Extension blog (http://stsadm.blogspot.com/) for an extensive set of extensions by Gary Lapointe.

Although Stsadm is a nice mechanism for managing SharePoint, we really need a PowerShell interface to do all these same operations. There are already some small PowerShell libraries available, and Gary Lapointe is also starting a PowerShell library. See this blog post for his first steps in this direction.

-

Notes from PDC Session: Extending SharePoint Online

******* REMOVED SOME HARSH WORDS ON THE SESSION *******

I took some notes and augmented them with some of my own thoughts and information.

-------

SharePoint Online provides:

Managed Services on the net

- No server deployment needed, just a few clicks to bring your instance up and running

- Unified admin center for online services

- Single sign-on system; no federated Active Directory yet

Enterprise class Reliability

- Good uptime

- Anti virus

-...

SharePoint Online is available in two flavors: standard (hosted in the cloud) and dedicated (on premises)

Standard is most interesting I think: minimum of 5 seats, max 1TB storage.

On standard we have no custom code deployment, so we need to be inventive!

SharePoint Online is a subset of the standard SharePoint product (extensive slide on this in the slide deck, no access to that yet)

SharePoint Online is for intranet use, not for anonymous internet publishing.

$15 for the complete suite: Exchange, SharePoint, Office Live Meeting. Separate parts are a few dollars apiece.

Base os SharePoint Online is MOSS, but just a subset of functionality is available. Also just the usual suspect set of site templates is available: blank, team, wiki, blog, meeting.

SharePoint Online can be accessed through the Office apps, SharePoint Designer and through the web services.

SharePoint Designer:

- No-code workflows

- Customize content types

- Design custom look and feel

Silverlight:

- Talks to the web services of SharePoint Online

- Uses authentication of current user accessing the page hosting the Silverlight control

- See http://silverlight.net/forums/p/26453/92363.aspx for some discussion on getting a SharePoint web service call working

Data View Web Part:

- Consume data from a data source

- Consume RSS feeds through HTTP GET

- Consume http data through HTTP GET/POST

- Consume web services

- ...

- Configure filter, order, paging etc.

- Select columns, rename columns, ...

- Result is an XSLT file

This XSLT code can be modified at will. There are infinite formatting capabilities with XSLT. A set of powerful XSLT extension functions is also available in the ddwrt namespace (see http://msdn.microsoft.com/en-us/library/aa505323.aspx for a SharePoint 2003 article on this function set, and use Reflector to find the additional functions in the 2007 version ;-)). See http://www.tonstegeman.com/Blog/Lists/Posts/Post.aspx?ID=85 for writing your own XSLT extension functions; this was not possible on SharePoint 2003, and it requires deploying code, so it does not apply to the online scenario.

Note that the Data View Web Part can only be constructed with SharePoint Designer.

InfoPath client: custom forms for workflows

Web services: Can be used from custom apps (command line, win forms, ...), but also from Silverlight to have functionality that is hosted in your SharePoint Online site itself.

You can also host custom web pages on your own server or in the cloud on Windows Azure (the new Microsoft cloud platform), and call SharePoint Online web services in the code behind of these pages.
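Calling these web services from your own code boils down to posting a SOAP message. A minimal sketch in Python, assuming the classic Lists web service at `/_vti_bin/Lists.asmx` and its `GetListCollection` operation; the site URL in the usage note is hypothetical, and authentication against SharePoint Online is not covered, so the sketch only builds the request instead of sending it.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"
SP_NS = "http://schemas.microsoft.com/sharepoint/soap/"


def build_get_list_collection_request(site_url):
    """Build (url, headers, body) for a SOAP 1.1 call to Lists.asmx GetListCollection.

    Assumption: the site exposes the standard /_vti_bin/Lists.asmx endpoint.
    Authentication is deliberately left out of this sketch.
    """
    url = site_url.rstrip("/") + "/_vti_bin/Lists.asmx"
    headers = {
        "Content-Type": "text/xml; charset=utf-8",
        # SOAP 1.1 expects the action URI as a quoted string.
        "SOAPAction": '"' + SP_NS + 'GetListCollection"',
    }
    body = (
        '<?xml version="1.0" encoding="utf-8"?>'
        f'<soap:Envelope xmlns:soap="{SOAP_NS}">'
        "<soap:Body>"
        f'<GetListCollection xmlns="{SP_NS}" />'
        "</soap:Body>"
        "</soap:Envelope>"
    )
    return url, headers, body
```

For example, `build_get_list_collection_request("https://portal.example.com/sites/team")` returns the endpoint, headers and envelope; once authentication is sorted out they could be passed to `urllib.request.Request(url, data=body.encode("utf-8"), headers=headers, method="POST")`.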

What can't be done:

- No Farm wide configurations

- No Server side code

- No custom web parts

- No site definitions

- No coded workflows

- No features

- No ...

There is still a lot that can be done, but finding out exactly what will be an adventure...

-

From the PDC: Windows Azure - the Windows cloud server - is here!

New times have arrived! Exactly what we hoped for: developing for the cloud using Microsoft technology. Of course, EC2 and Google App Engine beat Microsoft in timing, but bringing up Linux instances and learning Python turned out to be a big hurdle for a guy who has been using Microsoft technology for too long. I did some testing with both platforms and was impressed by their concepts. But the Azure cloud platform that Microsoft announced today is more "my kind of candy".

During the PDC keynote I posted some Twitter messages from my iPhone (I love Twitterific!); you can read them at: http://twitter.com/svdoever

Also have a look at the great posts by http://weblogs.asp.net/pgielens on Azure. I will blog some more thoughts on the new platform in the coming days, but now the session on Extending SharePoint Online is starting!

-

SharePoint Wizard and MVP Patrick "Profke" Tisseghem is offline, till the end of time...

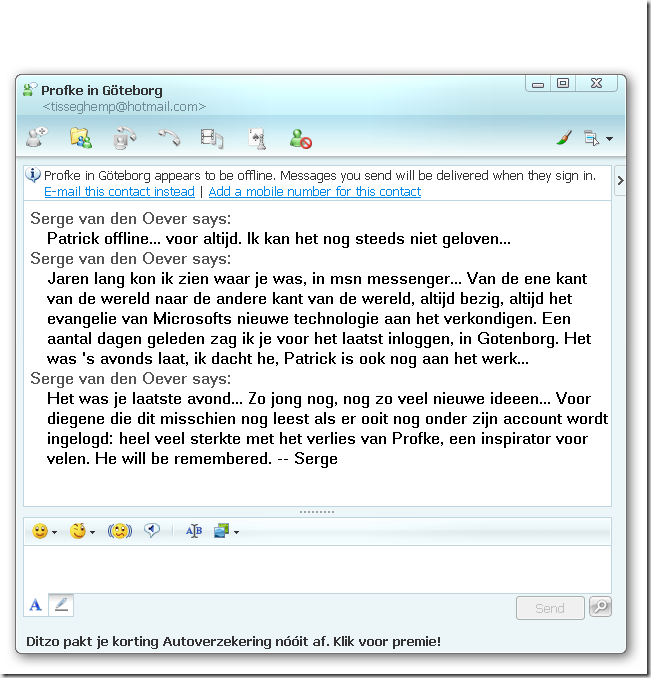

For years and years I have followed the travels of Patrick "Profke" Tisseghem from one end of the world to the other end of the world on my screen in Windows Live Messenger. Patrick was a busy guy, evangelizing, educating, inspiring the world. It was always good to see when he was back @home either in Belgium or Cyprus for some well deserved time with his family. A few days ago I saw his new, and what later appeared to be his last, destination: Goteborg in Sweden. For the last days his status remained offline, nothing for him... Today I understood why....

Patrick and his colleagues visited Macaw, the company I work for, many times with their great and inspiring courses. Patrick is highly respected, and a well-known face within our company.

The last time I spoke to Patrick in person was at Devdays in Amsterdam in May. We had a beer in the sun at "Strand Zuid" and talked about our families and kids, his house on Cyprus, and some new ideas he had on how to do SharePoint feature updates without getting into trouble. We were with a large group of Macaw colleagues, and he bought us all a round of drinks, which we drank to his luck. It is terrible to see that his luck ran out so quickly.

The last chat on Messenger was at the end of July, he was @home in Cyprus. I told him about the birth of my third child. Life comes, life goes... it is a cruel world.

Profke, happy travels in what lies ahead... I wonder if they are using SharePoint on the other side. We will have a beer when I arrive...

My thoughts go out to his wife Linda and his two daughters, let the light be with you all to guide you through this difficult time.

namasté

---------------------------------------------------------------------------------

See Patrick's blog for a last message from his wife Linda

See Jan Thielens' blog for a spontaneous condolence register

See Karine Bosch' blog for a beautiful farewell message from a good colleague and friend

-

Post to self: getting the directory where the CLR is installed in PowerShell

[System.Runtime.InteropServices.RuntimeEnvironment]::GetRuntimeDirectory()

will return a path like: c:\WINDOWS\Microsoft.NET\Framework\v2.0.50727\

-

C:\Program Files\Reference Assemblies for assemblies to reference in your code

I just stumbled across a "new" concept from Microsoft. In the C:\Program Files\Reference Assemblies folder Microsoft installs assemblies for products that can be referenced from your code. Instead of referencing assemblies directly from the GAC, or copying an assembly from an installation folder or the GAC to your project for reference, you can now reference the assemblies in this folder.

We have a similar approach in our Macaw Solutions Factory where we have a _SharedAssemblies folder to keep track of all external assemblies to reference. We prefer to keep the assemblies with the source that is checked into source control, because otherwise it is possible that a build server does not contain the expected assemblies when compiling a checked-out solution and can't successfully compile the solution.

On the MSBuild team blog you can read more about this feature, which has been with us since the .NET 3.0 framework:

http://blogs.msdn.com/msbuild/archive/2007/04/12/new-reference-assemblies-location.aspx

Also other applications like PowerShell keep their assemblies to reference in this location.

-

Use VS2008 to create a VSPackage that runs in VS2008 and VS2005

We all want to use the new features in Visual Studio 2008 during development. When using VS2008 for VsPackage development we need to install the Visual Studio 2008 SDK. The approach taken for building VsPackages in VS2008 is incompatible with the approach taken in VS2005. In this article I explain an approach to use VS2008 to build a compatible VsPackage that can be tested in the experimental hives of both versions of Visual Studio.

About VsPackage

- A VsPackage is a very powerful Visual Studio extensibility mechanism

- Visual studio knows about a VsPackage and its functionality through registry registrations

- To test a VsPackage without messing up your Visual Studio, the Visual Studio SDK creates a special experimental “registry hive” containing all required Visual Studio registrations. Visual Studio can be started with the name of this hive to test your VsPackage in a “sandbox” environment

- This hive can be reset to its initial state in case of problems or to do a clean test

- In Visual Studio 2005 the hive is created under HKEY_LOCAL_MACHINE, which causes problems under Vista, where a normal user may not write to this part of the registry

- In Visual Studio 2008 a “per user” experimental hive is supported, so you can do Run As a Normal User development

In the Visual Studio 2005 SDK the experimental hive was created on installation, because the same experimental hive was used for all users. In the Visual Studio 2008 SDK the experimental hive is per user, so each user needs to create his own experimental hive. You can do this by executing the following command from the Start Menu: Microsoft Visual Studio 2008 SDK -> Tools -> Reset the Microsoft Visual Studio 2008 Experimental hive.

This command executes: VSRegEx.exe GetOrig 9.0 Exp RANU. The RANU argument means: Run As a Normal User.

Referenced assemblies

A VS2008 VsPackage project will normally reference the following assemblies:

<Reference Include="Microsoft.VisualStudio.OLE.Interop" />

<Reference Include="Microsoft.VisualStudio.Shell.Interop" />

<Reference Include="Microsoft.VisualStudio.Shell.Interop.8.0" />

<Reference Include="Microsoft.VisualStudio.Shell.Interop.9.0" />

<Reference Include="Microsoft.VisualStudio.TextManager.Interop" />

<Reference Include="Microsoft.VisualStudio.Shell.9.0" />

If we want to run the VsPackage on VS2005 as well, we must not reference assemblies from VS2008 (the assemblies ending with 9.0). The set of assemblies we should reference is:

<Reference Include="Microsoft.VisualStudio.OLE.Interop" />

<Reference Include="Microsoft.VisualStudio.Shell.Interop" />

<Reference Include="Microsoft.VisualStudio.Shell.Interop.8.0" />

<Reference Include="Microsoft.VisualStudio.TextManager.Interop" />

<Reference Include="Microsoft.VisualStudio.Shell" />
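Before going further, it can help to check a project file for leftover VS2008-only references. A small sketch in Python, assuming the usual MSBuild namespace in the .csproj and treating any reference whose simple name ends in `.9.0` as VS2008-only (the rule stated above); the function name is made up for illustration.

```python
import xml.etree.ElementTree as ET

MSBUILD_NS = {"msb": "http://schemas.microsoft.com/developer/msbuild/2003"}


def vs2008_only_references(csproj_xml):
    """Return the simple names of references ending in '.9.0' (VS2008-only)."""
    root = ET.fromstring(csproj_xml)
    names = []
    for ref in root.iterfind(".//msb:Reference", MSBUILD_NS):
        # Strong-named references can carry ", Version=..., Culture=..." after the name.
        simple_name = ref.get("Include", "").split(",")[0].strip()
        if simple_name.endswith(".9.0"):
            names.append(simple_name)
    return names
```

For example, `vs2008_only_references(open("FooBar.csproj", "rb").read())` should return an empty list once the project only uses the VS2005-compatible set; reading the file as bytes lets the XML parser deal with the encoding declaration and any byte order mark.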

VsPackage registration

But there is a problem: Visual Studio uses a tool called RegPkg.exe to generate the registry entries that make the VsPackage known to Visual Studio, and the RegPkg.exe of VS2008 uses the Microsoft.VisualStudio.Shell.9.0 assembly, while the RegPkg.exe of VS2005 uses the Microsoft.VisualStudio.Shell assembly.

But Microsoft was so kind to provide a howto on migrating VsPackage projects to VS2008. See http://msdn.microsoft.com/en-us/library/cc512930.aspx for more information. If you have followed the steps to migrate an existing VS2005 project to VS2008, there is an interesting section on what to do if you want to use the Microsoft.VisualStudio.Shell assembly instead of the Microsoft.VisualStudio.Shell.9.0 assembly, and in that way stay compatible with VS2005.

The steps are as follows:

A VsPackage project has a project file that is actually an MSBuild file. To open this file in Visual Studio, do the following:

- Right click on the VsPackage project

- Select Unload Project (save changes if requested)

- The project name changes from FooBar to FooBar (unavailable)

- Right click again and select Edit FooBar.csproj

- Add the following lines to the last PropertyGroup:

<!-- Make sure we are VS2005 compatible, and don't rely on the RegPkg.exe
     of VS2008, which uses Microsoft.VisualStudio.Shell.9.0 -->
<UseVS2005MPF>true</UseVS2005MPF>
<!-- Don't run as a normal user (RANU),
     create the experimental hive in HKEY_LOCAL_MACHINE -->
<RegisterWithRanu>false</RegisterWithRanu>

- Save the project file

- Right click on the project and select Reload Project
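When several VsPackage projects need this change, the two properties can also be added by a script. A sketch in Python (3.8 or later, for comment-preserving parsing), assuming the standard MSBuild namespace; the helper name is hypothetical. It only adds a property when it is missing, so running it twice is harmless.

```python
import xml.etree.ElementTree as ET

NS = "http://schemas.microsoft.com/developer/msbuild/2003"
VS2005_PROPERTIES = (("UseVS2005MPF", "true"), ("RegisterWithRanu", "false"))


def add_vs2005_properties(csproj_xml):
    """Set UseVS2005MPF/RegisterWithRanu in the last PropertyGroup; return the new XML."""
    ET.register_namespace("", NS)  # write MSBuild elements without an ns0: prefix
    # Keep the XML comments of the project file (TreeBuilder option, Python 3.8+).
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    root = ET.fromstring(csproj_xml, parser=parser)
    groups = root.findall(f"{{{NS}}}PropertyGroup")
    if not groups:
        raise ValueError("no PropertyGroup found in the project file")
    last = groups[-1]
    for name, value in VS2005_PROPERTIES:
        prop = last.find(f"{{{NS}}}{name}")
        if prop is None:  # only add missing properties, so reruns are harmless
            prop = ET.SubElement(last, f"{{{NS}}}{name}")
        prop.text = value
    return ET.tostring(root, encoding="unicode")
```

Note that the returned text has no XML declaration and may differ in whitespace from the original file, so review the diff before checking it in.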

After these changes the RegPkg.exe for VS2005 is used to generate the registry settings. But this tool can only generate registry settings for a hive in HKEY_LOCAL_MACHINE, and not for a hive in HKEY_CURRENT_USER. This means that development must be done as an administrator.

If we now build the application, we get the error “Failed to retrieve paths under VSTemplate for the specified registry hive.” To solve this, we must create a new experimental hive for VS2008 in HKEY_LOCAL_MACHINE with the command: VSRegEx.exe GetOrig 9.0 Exp.

For more information on the RegPkg.exe utility, see the excellent blog post at http://www.architekturaforum.hu/blogs/divedeeper/archive/2008/01/22/LearnVSXNowPart8.aspx. Don’t forget to read the rest of that blog post series.

The last step is to change the way VS2008 is launched when debugging the VsPackage with F5. Right-click the VsPackage project, select Properties and remove RANU from the command line arguments box.

Because we want to target both VS2005 and VS2008 with the VsPackage, we should also test the package on VS2005. So, assuming that VS2005 is installed on the development machine as well, create an experimental hive for VS2005 using the command: VSRegEx.exe GetOrig 8.0 Exp. We don’t need to create this experimental hive if we installed the Visual Studio SDK for VS2005 as well.

To register the VsPackage for VS2005 as well and start VS2005 under the experimental hive, you can add a small batch script to your solution. Add a file TestInVS2005.bat in the root of the solution folder as a solution item. The TestInVS2005.bat file should have the following content:

"%VSSDK90Install%VisualStudioIntegration\Tools\Bin\VS2005\regpkg.exe" /root:Software\Microsoft\VisualStudio\8.0Exp /codebase "%~dp0FooBar\bin\Debug\FooBar.dll"

"%VS80COMNTOOLS%..\IDE\devenv.exe" /rootSuffix exp

pause

We can now right-click on the TestInVs2005.bat file, select Open With… and browse to the TestInVs2005.bat file itself to open the file with. It then starts the batch script.

-

Edit file in Wss3 web application with SharePoint Designer blows up your web site

I wanted to create a simple HTML file in the root of my Wss3 web application, so I created an empty file test.htm. I double-clicked the file, and because I have SharePoint Designer installed, it is the default editor for .htm files. I put some text into the .htm file along the lines of "Hello world!", and boom: my Wss3 web application is dead. I get the following error:

Server Error in '/' Application.

Parser Error

Description: An error occurred during the parsing of a resource required to service this request. Please review the following specific parse error details and modify your source file appropriately.

Parser Error Message: Data at the root level is invalid. Line 1, position 1.

Source Error:

Line 1: <browsers>
Line 2: <browser id="Safari2" parentID="Safari1Plus">
Line 3: <controlAdapters>

Source File: /App_Browsers/compat.browser Line: 1

Version Information: Microsoft .NET Framework Version:2.0.50727.832; ASP.NET Version:2.0.50727.832

After some searching I found what happened:

When you open a file with SharePoint Designer, it creates all kinds of FrontPage Server Extensions functionality in your web application. One thing it does is create a _vti_cnf folder in every folder of your web application. If you remove all these folders, the problem is fixed.

I don't know of a handy DOS command to delete all these folders recursively, but with some PowerShell I solved the problem.

Open a PowerShell prompt, set the current directory to the folder hosting your web application and execute the following command to see the "damage":

PS C:\Inetpub\wwwroot\wss\VirtualDirectories\wss3dev> get-childitem -path . -name _vti_cnf -recurse

_vti_cnf
App_Browsers\_vti_cnf
App_GlobalResources\_vti_cnf
aspnet_client\system_web\2_0_50727\CrystalReportWebFormViewer3\CSs\_vti_cnf
aspnet_client\system_web\2_0_50727\CrystalReportWebFormViewer3\html\_vti_cnf
aspnet_client\system_web\2_0_50727\CrystalReportWebFormViewer3\Images\ToolBar\_vti_cnf
aspnet_client\system_web\2_0_50727\CrystalReportWebFormViewer3\Images\Tree\_vti_cnf
aspnet_client\system_web\2_0_50727\CrystalReportWebFormViewer3\JS\_vti_cnf
wpresources\_vti_cnf
_app_bin\_vti_cnf

Now execute the following PowerShell command to remove all these folders and be a happy camper again:

PS C:\Inetpub\wwwroot\wss\VirtualDirectories\wss3dev> get-childitem -path . -name _vti_cnf -recurse | % { remove-item -Path $_ -recurse }
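The same clean-up, sketched in Python for machines without PowerShell: walk the web application folder, list every _vti_cnf folder, and only delete them when `dry_run` is switched off. The function name and the dry-run default are illustrative choices, not part of any SharePoint tooling.

```python
import os
import shutil


def remove_vti_cnf(root, dry_run=True):
    """Find (and, if dry_run is False, delete) every _vti_cnf folder below root.

    Returns the matching folder paths relative to root, sorted.
    """
    found = []
    for dirpath, dirnames, _filenames in os.walk(root):
        if "_vti_cnf" in dirnames:
            target = os.path.join(dirpath, "_vti_cnf")
            found.append(os.path.relpath(target, root))
            if not dry_run:
                shutil.rmtree(target)
            # Don't let os.walk descend into the folder we just handled (or deleted).
            dirnames.remove("_vti_cnf")
    return sorted(found)
```

Run it once with the default `dry_run=True` to see the "damage", then again with `dry_run=False` to delete the folders.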

-

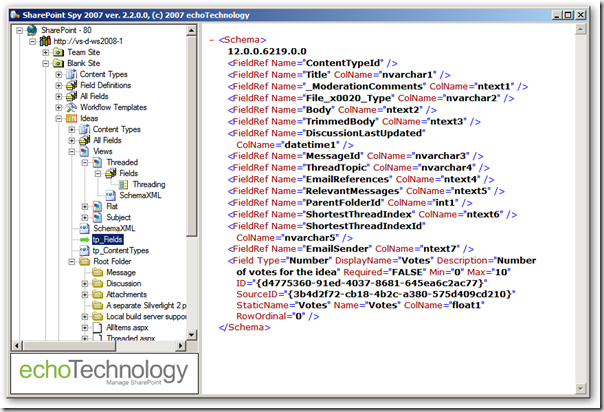

Reduce the size of a virtual hard disk before delivery for reuse

When delivering a virtual hard disk to your team to build their virtual machines on I perform the following steps to minimize the size of the virtual hard disk. It is assumed that Virtual Server 2005 R2 SP1 is installed.

- Make sure the undo disks are merged, and that differencing disks are merged with their parent disks

- Disable undo disks

- Start the virtual machine to compact

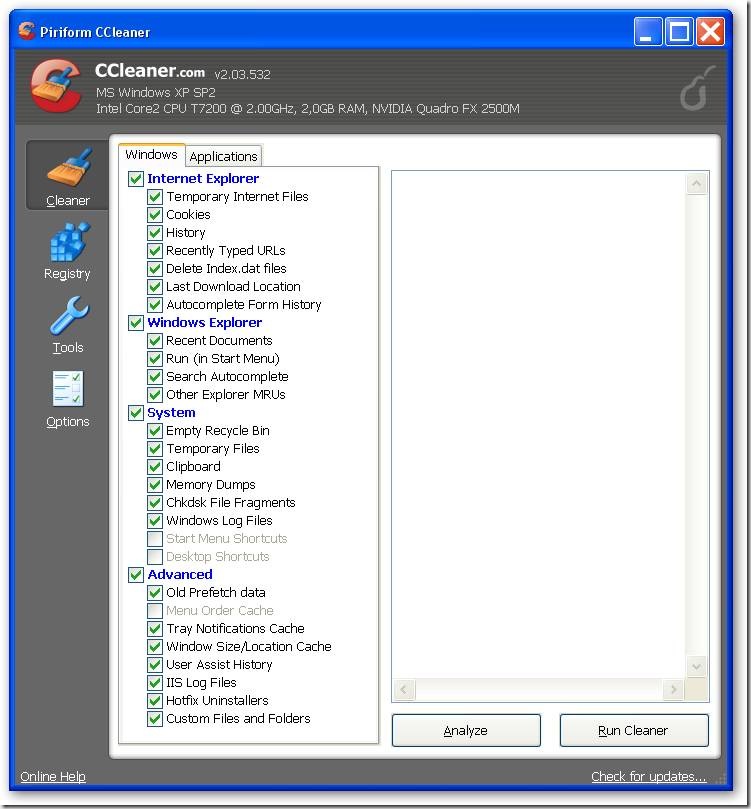

- In the virtual machine, download CCleaner from http://www.ccleaner.com . This is a great tool to clean your disk of history files, cache files etc. I run this almost daily on my virtual machines and my host system. Thanks Danny for introducing me to this great tool.

Quote from their website:

CCleaner is a freeware system optimization and privacy tool. It removes unused files from your system - allowing Windows to run faster and freeing up valuable hard disk space. It also cleans traces of your online activities such as your Internet history. But the best part is that it's fast (normally taking less than a second to run) and contains NO Spyware or Adware! :)

During installation, beware of the option to install the Yahoo toolbar; you easily overlook these co-packaging options from Microsoft, Google and Yahoo:

- In the virtual machine run CCleaner with the options you prefer, my options are:

- Shut down the virtual machine

- Mount the virtual hard disk to compact using vhdmount (part of Virtual Server 2005 R2 SP1):

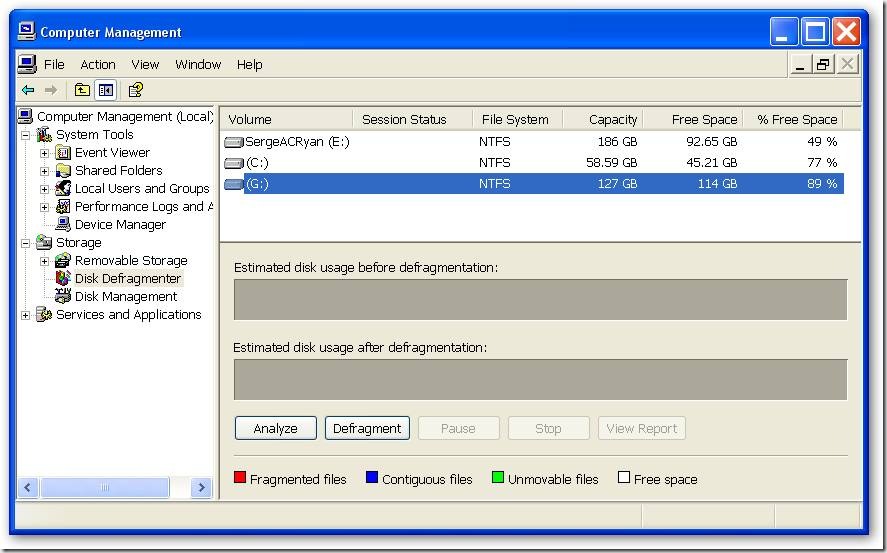

"C:\Program Files\Microsoft Virtual Server\Vhdmount\vhdmount" /p “drive:\folder\YourVirtualHarddiskToCompress.vhd” - Go to Start > My Computer; Right-click and select Manage. Now defragment the disk mounted in step 6

- "C:\Program Files\Microsoft Virtual Server\Vhdmount\vhdmount" /u /c “drive:\folder\YourVirtualHarddiskToCompress.vhd”

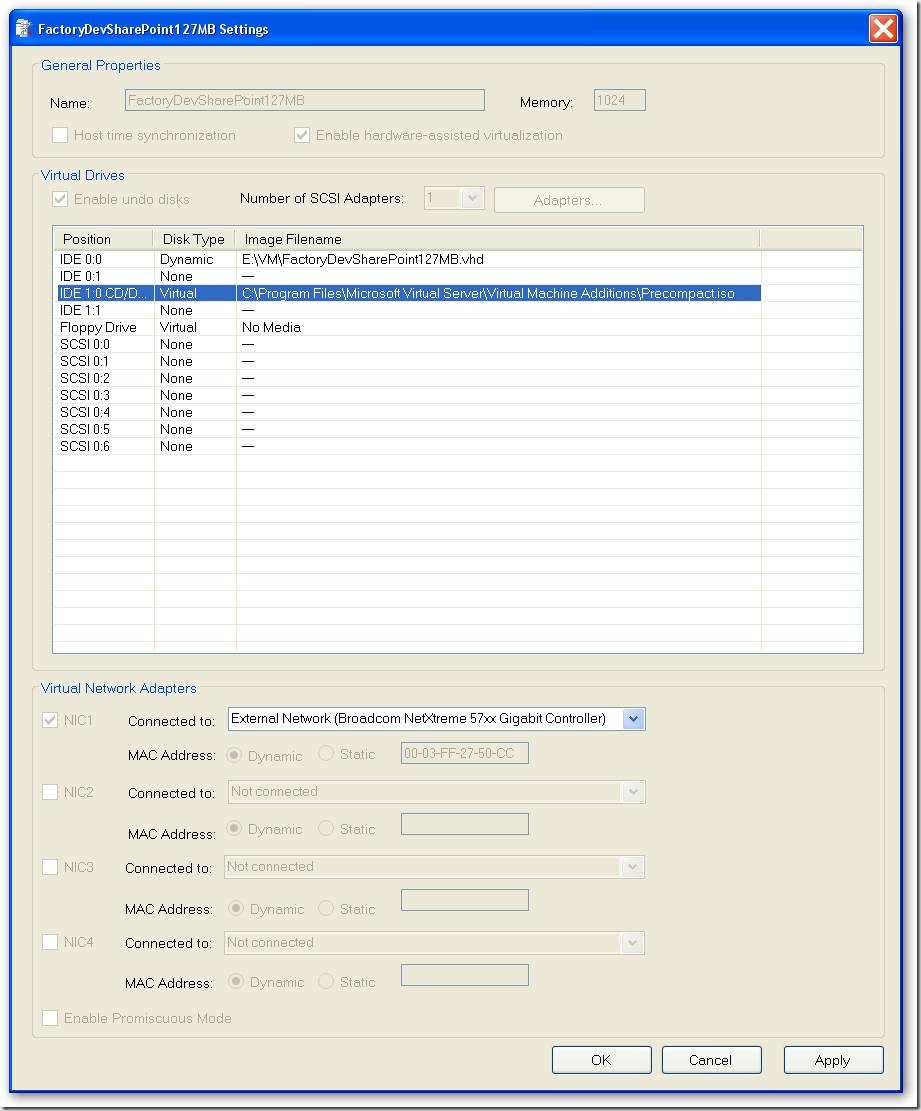

- Now start your virtual machine, and mount the ISO image C:\Program Files\Microsoft Virtual Server\Virtual Machine Additions\Precompact.iso. I personally use VMRCPlus to mount the ISO, right-click on CD-Drive and select Attach Media Image file… as shown in the screenshot below

- When you navigate to the drive where the ISO image is mounted, the precompaction starts. The precompaction writes zeros in all unused space on the disk, so the disk can be compacted in the next steps.

- Shut down the virtual machine

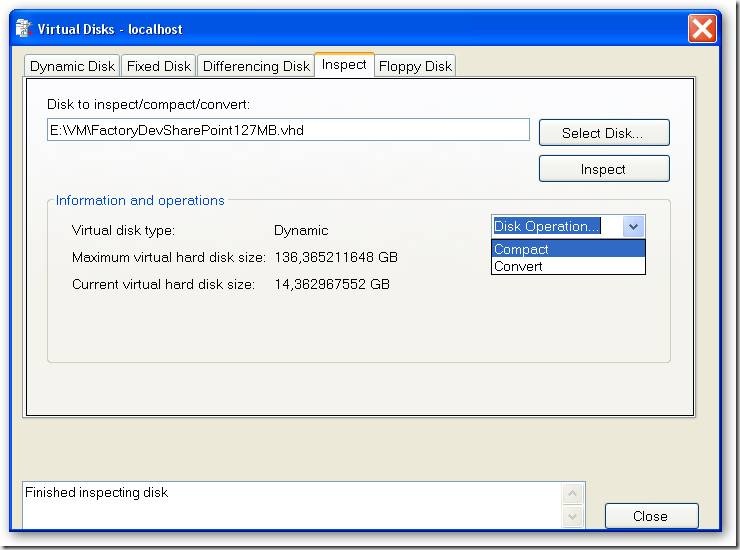

- Now start compaction of the virtual disk. This can be done in Virtual PC 2007, in the Virtual Server 2005 administration website, or in VMRCPlus, which I use here. Navigate to Tools > Virtual Disks > Inspect Virtual Hard disk, select your vhd to compact and select disk operation Compact

- Done! Make your virtual hard disk read only, and start creating differencing disks based on your new base disk.
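Why does zeroing the free space matter? A toy model, not the real VHD file format: treat the disk as a list of small byte blocks and assume a dynamically expanding disk only has to keep blocks that contain at least one non-zero byte. Deleted files leave their old bytes behind, so their blocks survive compaction until the precompaction overwrites them with zeros. The function names are hypothetical.

```python
def zero_free_space(blocks, used):
    """Precompact step: overwrite every block that is not in use with zeros."""
    return [data if i in used else bytes(len(data)) for i, data in enumerate(blocks)]


def compact(blocks):
    """Compaction step: keep only blocks holding at least one non-zero byte.

    Returns {block_index: data}, a toy stand-in for the blocks a dynamically
    expanding disk still has to store.
    """
    return {i: data for i, data in enumerate(blocks) if any(data)}
```

With `disk = [b"\x01\x02", b"\x07\x00", b"\x00\x00", b"\x09\x09"]`, where only block 0 is in use and blocks 1 and 3 hold data of deleted files, `compact(disk)` still keeps three blocks, while `compact(zero_free_space(disk, {0}))` keeps only block 0.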

-

VirtualPC/Virtual Server machine at higher resolution than 1600x1200!

I have always been so annoyed by the fact that on my laptop with a 1920x1200 resolution I could only connect to my virtual machines at a resolution of 1600x1200, the maximum resolution of the emulated S3 graphics board, resulting in two 160-pixel-wide black bars on the left and right sides. But on Vista I did get a connection at higher resolutions; after some searching I found that the old Remote Desktop Connection software of XP (SP2) was the problem. Upgrade it to version 6 and your worries are over. See: http://support.microsoft.com/kb/925876/en-us. Happy virtual development!

-

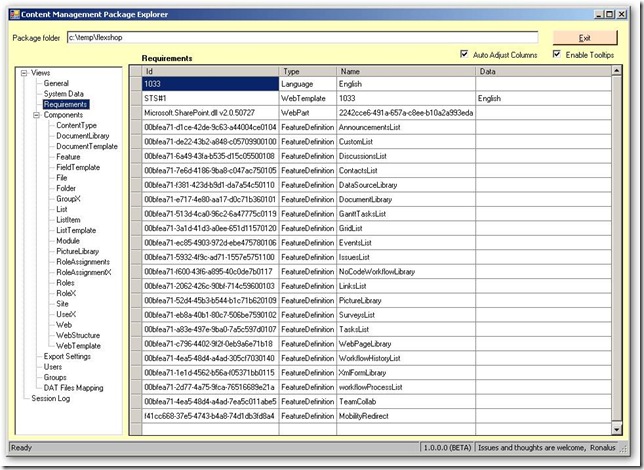

SharePoint Content Migration Package (CMP) explorer

Ronalus has created a great small utility to explore the contents of SharePoint Content Migration Packages, called Content Migration Package Explorer.

This tool can be used to investigate the data exported by the SharePoint Content Migration API. You can create an export either with stsadm.exe, or with custom code.

As an example for this post I exported a simple site containing some test data using the following command:

Stsadm.exe -o export -url http://wss3dev/FlexShop -filename c:\temp\FlexShop -nofilecompression

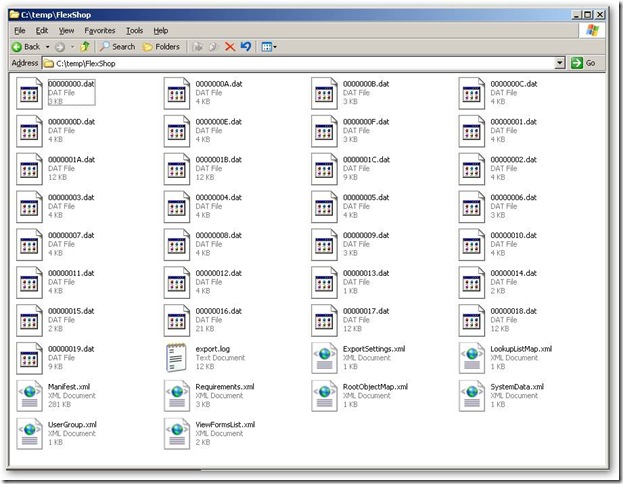

We now have a folder containing the output files, a set of XML files and other files:

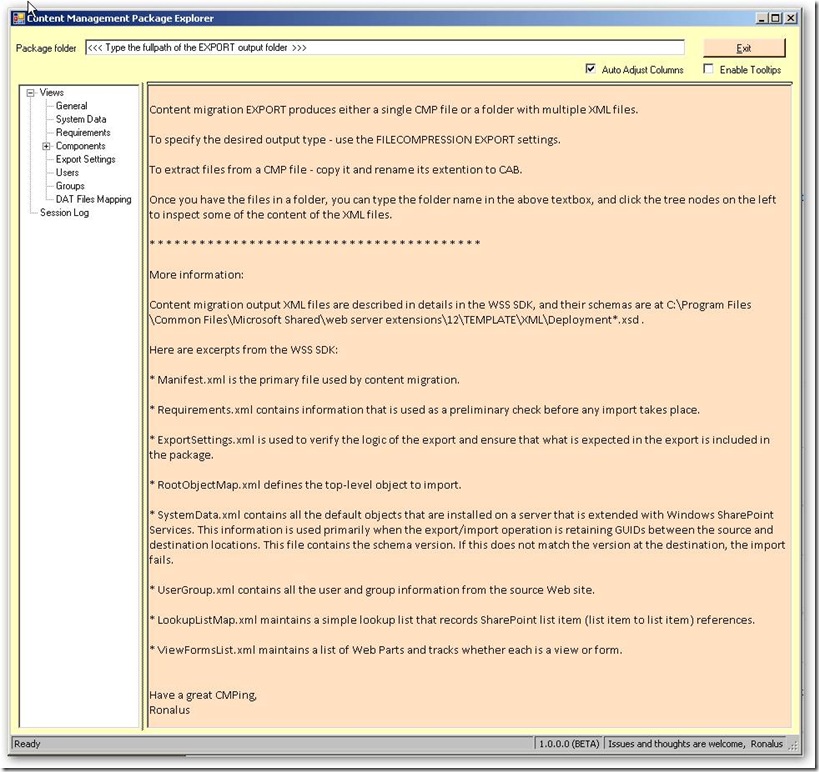

If we fire up the Content Migration Package Explorer we get the following startup screen:

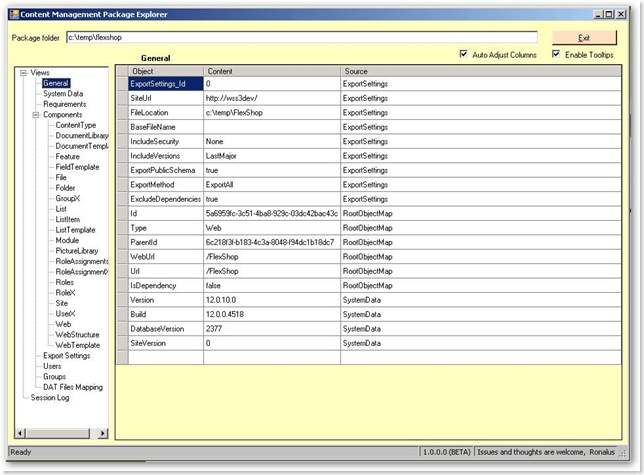

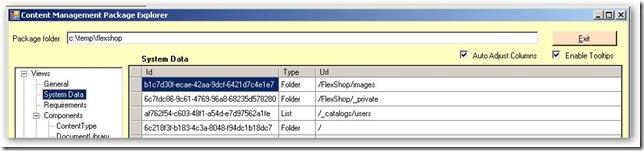

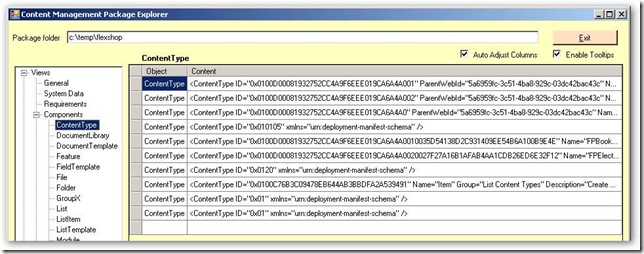

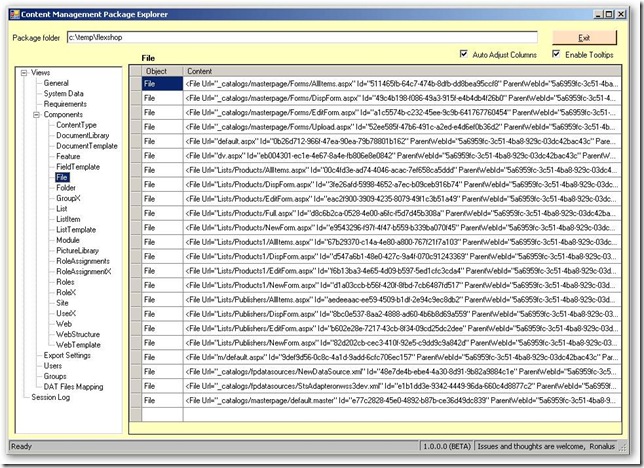

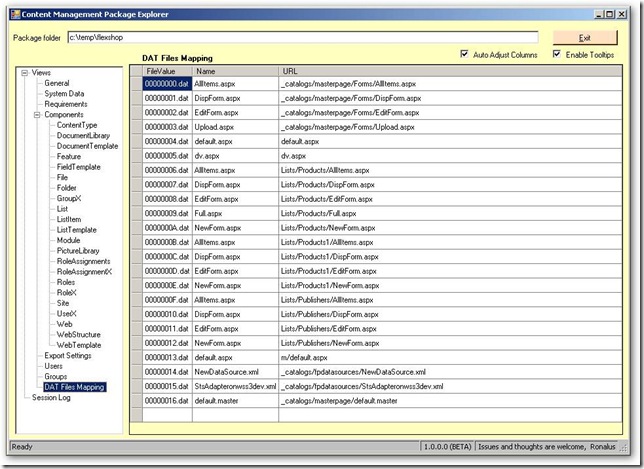

You can now enter the export folder and browse through the exported information using a simple to use explorer, see below for some screenshots:
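For a quick overview without a GUI, the manifest of an uncompressed export can also be summarized from a script. A sketch in Python that counts the exported objects per type; it assumes the export folder contains a Manifest.xml whose `SPObject` elements live in the `urn:deployment-manifest-schema` namespace and carry an `ObjectType` attribute, so check this against your own export before relying on it.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Assumed namespace of the content migration manifest.
MANIFEST_NS = "urn:deployment-manifest-schema"


def count_object_types(manifest_xml):
    """Count the SPObject entries in a Manifest.xml by their ObjectType attribute."""
    root = ET.fromstring(manifest_xml)
    return Counter(
        obj.get("ObjectType", "?")  # "?" marks entries without the assumed attribute
        for obj in root.iter(f"{{{MANIFEST_NS}}}SPObject")
    )
```

For the export above, something like `count_object_types(open(r"c:\temp\FlexShop\Manifest.xml", "rb").read()).most_common()` would list the object types with the most entries first.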

-

SharePoint development: handy little utility to get GUIDs and Attribute names

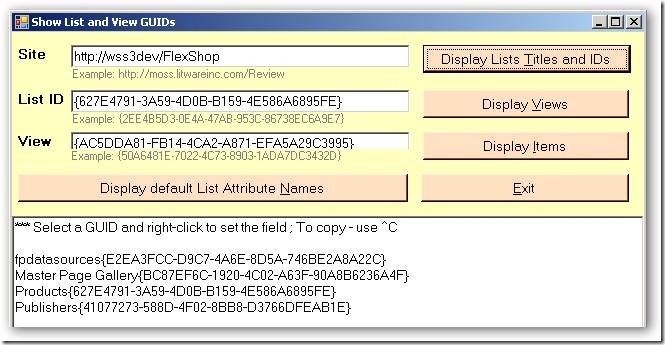

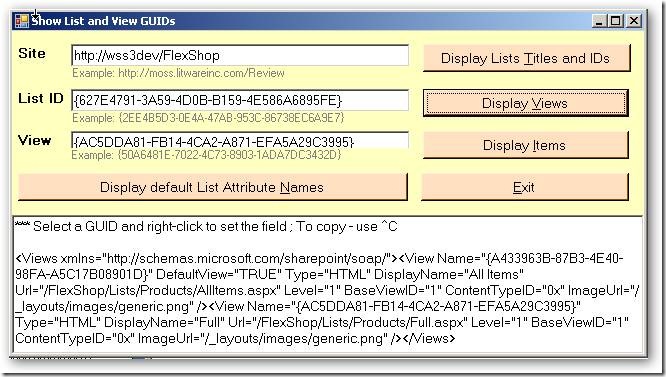

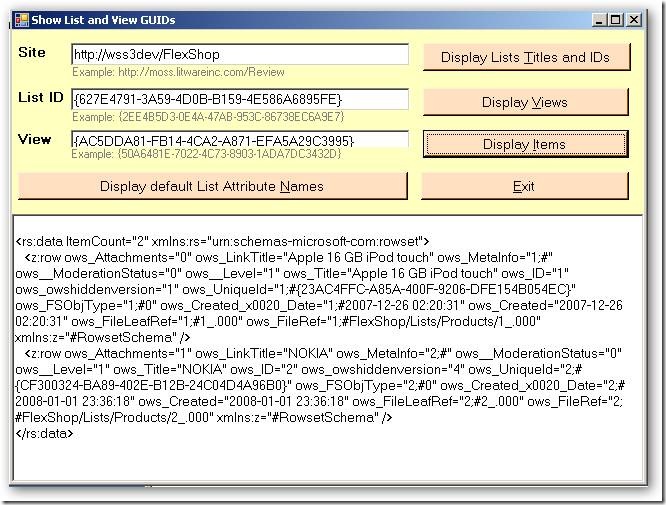

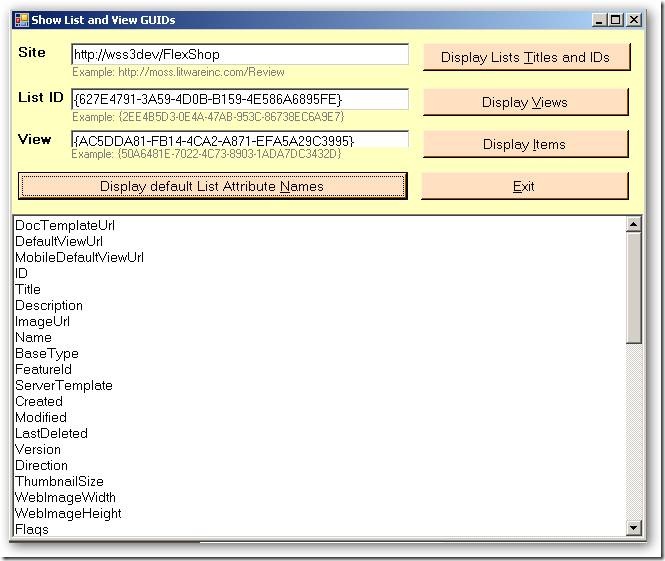

I just stumbled across a little utility that is really handy during SharePoint development: Show List and ViewGUIDs.

Type a Site URL, and select “Display Lists Titles and Ids”. From the list of lists you can select a list GUID, and with right-click copy it to the List ID input field.

After clicking “Display Views” you see the available list views. Again you can select a view guid, and with right-click copy it to the View input field.

Select “Display Items” to display the list items in the view.

With a click on “Display default List Attribute Names” the internal names of the fields in the list are displayed.

Very useful if you don't have tools like SharePoint Explorer or SharePoint Inspector installed, or if you find those tools too large/slow/complex to get some GUIDs and name information fast.

-

SharePoint stsadm.exe and the infamous "Command line error"

The SharePoint command-line utility stsadm.exe was driving me crazy for the last two days. I'm automating all kinds of things from PowerShell scripts, but I got the error "Command line error" while I was absolutely sure that what I was doing was correct. I did stsadm.exe -o deletesolution foobar.wsp. And the problem was: character encoding...

First I thought it was a problem with the way I executed this command from PowerShell. When I copied over the command to a different script it worked. What!!!??? Then I copied it over to the cmd.exe shell on the command line and I got the same error.

I started searching on Google and came across this blog post. It said it had something to do with encoding. The blog post also pointed to this blog post where people responded in the comments with the most hilarious solutions like:

If I type STSADM.EXE it works, while STSADM.exe does not work, or that a solution file must be in the same folder as stsadm.exe. Read the comments, it is fun how far off people can get.

Another post mentioning the problem is this discussion thread.

The problem happened to be in the encoding. One way or the other it is possible to get different encodings while typing in the same command multiple times in the same cmd.exe shell. Don't ask me how.

If you have problems, type your text in an editor like Notepad++ and switch between "Encode in ANSI" and "Encode in UTF-8" (under the Format menu in Notepad++). You will (sometimes) see the dash (-) in stsadm -o command... change from '-' into a strange little block... There is the problem. What looks like a hyphen is not always the plain ASCII hyphen-minus that stsadm expects; it can be a different dash character, and stsadm then thinks that no command is specified. Remove the strange block, type the hyphen again while in UTF-8 encoding, copy that and bingo!! It works.
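To find such characters without switching encodings in an editor, a small Python check can list every dash-like character that is not the ASCII hyphen-minus (U+002D) and replace it. A sketch; the set of look-alike characters below is an assumption covering common cases (an en dash, often introduced by editors that auto-format text, is a typical culprit), not an exhaustive list, and the function names are hypothetical.

```python
import unicodedata

# Characters that look like "-" but are not the ASCII hyphen-minus (U+002D)
# that command-line parsers such as stsadm.exe expect.
LOOKALIKE_DASHES = {
    "\u2010",  # HYPHEN
    "\u2011",  # NON-BREAKING HYPHEN
    "\u2012",  # FIGURE DASH
    "\u2013",  # EN DASH
    "\u2014",  # EM DASH
    "\u2212",  # MINUS SIGN
}


def find_lookalike_dashes(command):
    """Return (position, Unicode name) for each suspicious dash in command."""
    return [(i, unicodedata.name(ch)) for i, ch in enumerate(command) if ch in LOOKALIKE_DASHES]


def fix_dashes(command):
    """Replace every look-alike dash with an ASCII hyphen-minus."""
    return "".join("-" if ch in LOOKALIKE_DASHES else ch for ch in command)
```

Running `find_lookalike_dashes` on a command copied from a web page or document before pasting it into cmd.exe shows immediately whether a "dash" is the real thing.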

OK, here is how to reproduce this:

- Start Notepad++, set encoding to ANSI

- Make sure the path to stsadm.exe is in your path environment variable (or use full path)

- Type stsadm -o deletesolution foobar.wsp

- Start cmd.exe

- Copy the text over and press enter: voila, Command line error.

The strange thing is that even if you remove the dash (-), and type it again, it still gives errors. I have no clue why.

This dash/hyphen thing is a common problem if you search Google; for more info see for example:

-

The future of Domain Specific Languages on the Visual Studio platform

Now Visual Studio 2008 is released (I know, I'm blogger #100.000 to mention this) it is interesting to look at the future of one of the important building blocks on the Microsoft platform for building Software Factories: the DSL Tools.

Stuart Kent, Senior Program Manager with the Visual Studio Ecosystem team, reveals part of the post-Visual Studio 2008 roadmap for the DSL Tools in his blog post DSL Tools beyond VS2008.

Some of the mentioned concepts are already available in the implementation of the Web Service Software Factory: Modeling Edition, really interesting to have a look at the code.

The next version of Visual Studio will be Rosario. Clemens Rijnders describes some of the new Rosario features with respect to DSLs in his blog post Rosario Team Architect CTP10 Preview. One promising concept is the Designer Bus, which should simplify working with multiple DSLs in the same domain that reference each other. Some support for this functionality is currently available as the DSL integration service power toy.

-

PowerShell Exception handling using "Trap" explained - links

PowerShell has powerful exception handling, but it is badly documented and it takes a while to understand how you are actually supposed to wire it up.

I'm normally not such a link poster, this is more of a "post-to-self" item that might be useful for others as well.

- See Trap [Exception] { “In PowerShell” } for a very good explanation

- See PowerShell: Try...Catch...Finally Comes To Life for a Try/Catch/Finally implementation in PowerShell for the more c# oriented developers

-

The P&P Web Service Software Factory modeling edition and the Secret Dutch Software Factory Society

It is final! After almost nine months of hard work, version 3 of the Patterns&Practices Service Factory has been released on CodePlex.

Johan Danforth already published this announcement here, but it doesn't hurt to bring it to your attention again because this stuff is good!

And the strange thing is that Don Smith, the Technical Product Planner of the Service Factory, didn't have the time to blog about it and announce it officially. But I know why: he is a busy man! After TechEd 2007 in Barcelona last week (interview video), he was so kind as to take the time to repeat the 3-day Service Factory Customization Workshop in the Netherlands with the Secret Dutch Software Factory Society.

I heard about this workshop through Olaf Conijn, who used to work at our great company Macaw. He announced the Dutch workshop in this blog post.

Olaf and Don gave this fantastic workshop at the premises of InfoSupport in Veenendaal, the Netherlands (thanks for the hospitality Marcel!). Way too far away from home (okay, 80 km in the Netherlands is trouble in the morning and afternoon traffic), so a good reason to take a hotel and continue in the evenings, when you often have the best conversations about beer, women, and... oh yeah, software factories.

Don and Olaf are great guys who know their stuff, and they even both did Bikram yoga, my addiction in life;-)

I personally wasn't really that interested in the web services part of the Web Service software factory, but in the customization part. And that is what we did. We customized the factory without "cracking the factory" (change the source code) through the great extensibility points that are available, and customized it while "cracking the factory" and changing the code. And the good thing is: this scenario is supported, documented and it is actually expected behavior to be done by companies who will be using the Service Factory;-). And the brilliant thing is: they even provided the code to build a complete MSI installer for your modified Service Factory!

The factory is provided with complete (recompileable) source code, and contains a lot of really interesting factory plumbing that I will elaborate on in a later post.

One thing is sure: we are definitely going to integrate the Web Service Software Factory into the Macaw Solutions Factory, our own software factory. It will fit like a glove (after some customization of course;-)). Brilliant work P&P!

Another great thing about three days together with 25 people busy with software factories is the amount of collective knowledge and the wealth of information we shared with each other. My compliments to Don and Olaf for providing a lot of room for this information sharing.

We gave presentations to each other about the things we are doing with software factories:

- Gerben van Loon of Avanade talked about Avanade's SOA Factory, which supports the same domain as the Web Service Software Factory. It is model driven (DSL), was built long before the Service Factory: Modeling Edition, and has proved itself in over 20 projects. Many of its ideas actually influenced the P&P Service Factory: Modeling Edition

- Vincent Hoogendoorn and I talked about the Macaw Solutions Factory

- Marcel de Vries of InfoSupport talked about their software factory Endeavour

- Clemens Reijnen of Sogeti talked about his work on integrating the Service Factory with the Distributed System Designer of the Visual Studio Team System for Software Architects SKU

- Gerardo de Geest of Avanade talked about research he has done on Building a framework to support Domain Specific Language evolution using Microsoft DSL Tools (presentation, article). Based on this work Avanade hopes to be able to migrate models from the Avanade factory to the P&P Service Factory

For more information see the MSDN landing page Web Service Software Factory: Modeling Edition. Check it out!

-

PowerShell: Is a SharePoint solution installed or deployed?

I was having some fun with PowerShell to talk to the SharePoint object model. I needed to know if a SharePoint solution was installed and if it was deployed, and I ended up with the code below. It might be handy for someone to see how easy it is to get info out of SharePoint.

And the purpose I need it for? In our Macaw Solutions Factory we have a development build and a package build. The development build for SharePoint deploys everything to the bin folder of one or more web applications. The package build creates a solution package (.wsp file) that installs assemblies to the GAC. If this solution package is deployed on the development machine, we want to detect that, because otherwise you will not see any changes after a compile in a development build: the GAC assemblies take precedence over the assemblies in the web application bin folder.

So here is the code, have fun with it.

```powershell
[void][reflection.assembly]::LoadWithPartialName("Microsoft.SharePoint")

function global:Test-SharePointSolution
{
    param
    (
        $solutionName = $(throw 'Missing: solutionName'),
        [switch]$installed,
        [switch]$deployed
    )

    if (!($solutionName.EndsWith('.cab') -or $solutionName.EndsWith('.wsp') -or $solutionName.EndsWith('.wpp')))
    {
        throw "solution name '$solutionName' should end with .cab, .wsp or .wpp"
    }

    if ($installed -and $deployed)
    {
        throw "Select either the '-installed' switch parameter or the '-deployed' switch parameter, not both at the same time"
    }

    $farm = [Microsoft.SharePoint.Administration.SPFarm]::get_Local()
    $solutions = $farm.get_Solutions()
    $solutioncheck = $false
    foreach ($solution in $solutions)
    {
        if ($solution.Name -ieq $solutionName)
        {
            if ($deployed)
            {
                # SPSolution.Deployed is true when the solution is deployed
                $solutioncheck = $solution.Deployed
            }
            else
            {
                # Found in the farm solution store, so the solution is installed
                $solutioncheck = $true
            }
            break
        }
    }
    return $solutioncheck
}

Test-SharePointSolution -solutionName wikidiscussionsolution.wsp -installed
Test-SharePointSolution -solutionName wikidiscussionsolution.wsp -deployed
```
rgb(0,0,0)">) { </span><span style="color: rgb(0,0,255)">$solutioncheck</span><span style="color: rgb(0,0,0)"> </span><span style="color: rgb(0,0,0)">=</span><span style="color: rgb(0,0,0)"> </span><span style="color: rgb(0,0,255)">$solution</span><span style="color: rgb(0,0,0)">.Deployed } </span><span style="color: rgb(0,0,255)">else</span><span style="color: rgb(0,0,0)"> { </span><span style="color: rgb(0,128,0)">#</span><span style="color: rgb(0,128,0)"> installed, is always true if we get here</span><span style="color: rgb(0,128,0)">

![clip_image002[5]](https://aspblogs.blob.core.windows.net/media/soever/WindowsLiveWriter/UseVS2008tocreateaVSPackagethatrunsinVS2_1471A/clip_image002%255B5%255D_thumb.jpg)