Archives

-

PowerShell: dot source scripts into your module

You can create wrapper modules around the existing library script files that you “dot source” into your PowerShell 1.0 code by dot sourcing those same files into a module.

The problem is that inside a module your current location is the location of the script that first imported the module, not the location of the module itself.

You can get the location of your module using the PowerShell variable $PSScriptRoot.

So in your module file Xml.psm1 you can do something like:

Write-Host "Module Location: $PSScriptRoot"

. $PSScriptRoot\..\..\..\DotNet2\MastDeploy\MastPowerShellLib\MastDeploy-Logging.ps1

. $PSScriptRoot\..\..\..\DotNet2\MastDeploy\MastPowerShellLib\MastDeploy-DebugTooling.ps1

. $PSScriptRoot\..\..\..\DotNet2\MastDeploy\MastPowerShellLib\MastDeploy-Util-Xml.ps1

Export-ModuleMember -function Xml*

With this approach you can keep dot sourcing in your PowerShell 1.0 code until you have time to refactor it, and start using modules in your PowerShell 2.0 code, which prevents code from being reloaded when it is already available.
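The pattern above can be tried out with a minimal, self-contained sketch. The file names MyLib.ps1 and Wrapper.psm1 and the functions Get-Greeting and Get-InternalHelper are hypothetical; the sketch writes them to a temporary folder so it does not depend on any existing library. It assumes PowerShell 2.0 or later.

```powershell
# Create a throw-away folder holding a "legacy" library script and a wrapper module.
$root = Join-Path ([System.IO.Path]::GetTempPath()) ("DotSourceDemo_" + [guid]::NewGuid().ToString('N'))
New-Item -ItemType Directory -Path $root | Out-Null

# Hypothetical PowerShell 1.0 style library that used to be dot sourced directly.
Set-Content -Path (Join-Path $root 'MyLib.ps1') -Value @'
function Get-Greeting { param($Name) "Hello, $Name" }
function Get-InternalHelper { "not exported" }
'@

# The wrapper module dot sources the library relative to its own location
# ($PSScriptRoot), not relative to the current location of the caller.
Set-Content -Path (Join-Path $root 'Wrapper.psm1') -Value @'
. (Join-Path $PSScriptRoot 'MyLib.ps1')
Export-ModuleMember -Function Get-Greeting
'@

Import-Module (Join-Path $root 'Wrapper.psm1') -Force

$greeting = Get-Greeting -Name 'module'
# Only exported functions are visible to the importer.
$helperVisible = [bool](Get-Command Get-InternalHelper -ErrorAction SilentlyContinue)

# Clean up the demo module and files.
Remove-Module Wrapper
Remove-Item -Path $root -Recurse -Force
```

Using Join-Path with $PSScriptRoot instead of a hand-built relative path is a design choice of this sketch; the string form shown earlier works just as well.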

-

DualLayout - Complete HTML freedom in SharePoint Publishing sites!

The main complaint about SharePoint Publishing is the size and quality of its HTML output. DualLayout to the rescue: complete HTML freedom in all your publishing pages!

In this post I will introduce our approach. In later posts I will go into detail on my personal vision of how you could build Internet-facing sites on the SharePoint platform, and how DualLayout can help you with that.

How SharePoint Publishing works

But first a little introduction into SharePoint Publishing. SharePoint Publishing has the concept of templates that are used for authoring and displaying information. There are two types of templates:

- master pages

- page layouts

The master page template contains the information that is displayed across multiple page layouts, like headers, footers, logos and navigation. The master page is used to provide a consistent look and feel to your site.

The page layout template is associated with a content type that determines the set of fields that contain the (meta)data that can be stored, authored and displayed on a page based on the page layout. Within the page layout you can use field controls that are bound to the fields to provide editing and display capabilities for a field.

A page is an instance of a page layout. The master page used by the page is configured by the CustomMasterUrl property of the site a page is part of.

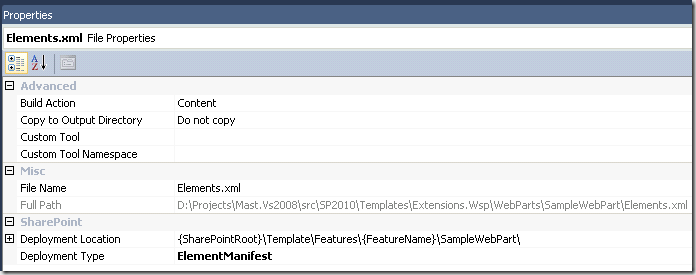

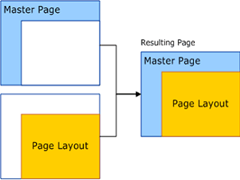

Some images to help describe the concepts:

Image 1: Relationship between page instance, master page and page layout (source)

Image 2: Usage of fields in a page layout (source)The problem

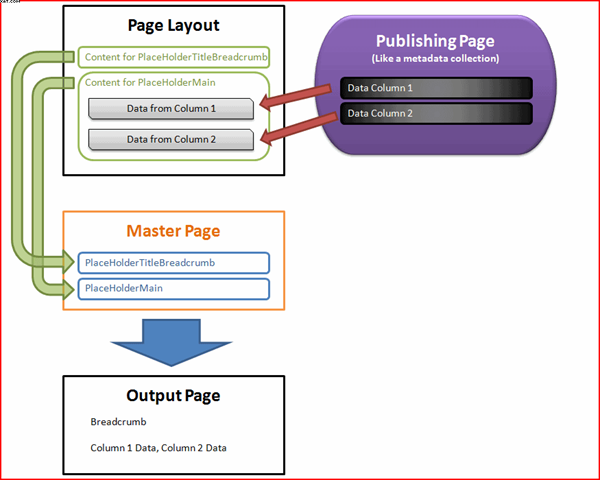

A SharePoint Publishing page can be in edit or display mode. The same SharePoint master page and page layout are used in both the edit mode and display mode. This gives you WYSIWYG editing.

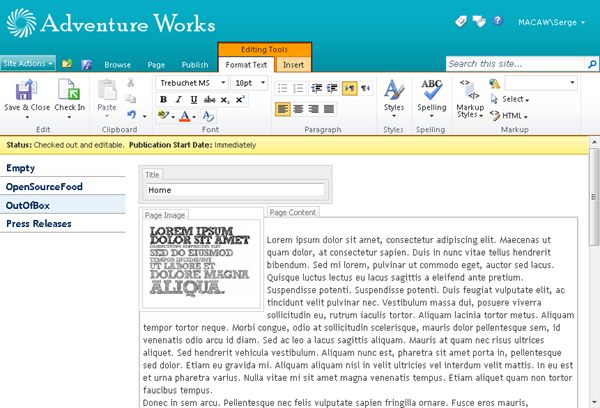

Image 3: A SharePoint Publishing page in WCM edit mode

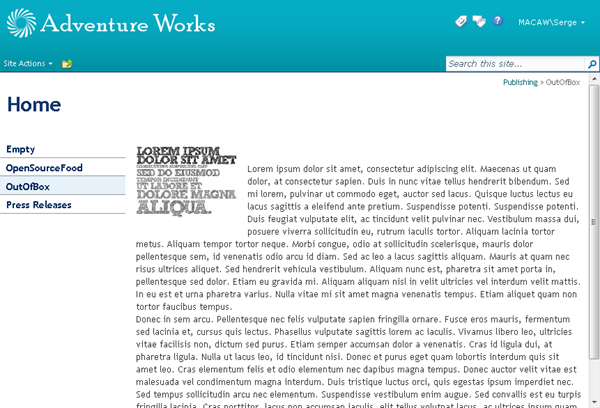

Image 4: A SharePoint Publishing page in WCM display mode

To be able to use a Publishing page in both editing and display mode there are a few requirements:

- The Publishing master page and page layouts need a minimal set of controls to make it possible to use a page in both edit and display mode. See for example http://startermasterpages.codeplex.com for a minimal Publishing master page which has a size of 25 kb to get you started

- Create a master page that works in both the edit and display mode

- The style sheets (CSS) and JavaScript used in publishing pages must not interfere with the standard styles and master pages used by SharePoint to provide the editing experience. This means that designers of the publishing site need to know how to let their style sheets work together with the SharePoint style sheets like core.css

- The page must use the ASP.NET Single Web Form Postback architecture, otherwise editing stops working

All these requirements are not easy to fulfill if you have to migrate an existing internet site to the SharePoint 2010 Publishing platform, or when you get a new design for your Internet site from a design agency. The people who created the design and site interaction in most cases didn’t design with SharePoint in mind, or they don’t have knowledge of the SharePoint platform.

When you want to make your design work together with the SharePoint style sheets you need a lot of knowledge of SharePoint. Especially with MOSS 2007, people like Heather Solomon became famous for dissecting and documenting the SharePoint CSS files to save designers from branding nightmares.

One of the design agencies we work with describes SharePoint as the “hostile environment” in which their CSS and JavaScript must live. If you don’t play exactly by the rules of SharePoint you will see things like:

Image 5: SharePoint 2007 usability trouble when the padding and margins of tables are changed

Things got a lot better in SharePoint 2010 with the introduction of the context sensitive Ribbon at the top of the page, instead of editor bars injected into the design. But many of the same issues still remain.

Image 6: The SharePoint 2010 Ribbon

So in my opinion SharePoint Publishing has a few “problems”:

- A lot of SharePoint knowledge is required by visual designers and interaction designers

- Time consuming and complex construction of master pages and page layouts

- Master pages and page layouts get complex in order to keep them working in both edit and display mode

- Issues with upgrades of master pages / page layouts when going to a new version of SharePoint

- Large set of required server controls that are only used in edit mode, but still “execute” in display mode

- SharePoint pages are large

- SharePoint pages are difficult to get passing W3C validation

- You are stuck with the ASP.NET Single Web Form Postback architecture

Enough about the problems, let’s get to the solution!

The Solution

About a year ago we got completely stuck on a huge project. A design agency delivered a great design, completely worked out with perfect HTML, CSS and JavaScript, using progressive enhancement principles. They created everything without knowledge of SharePoint, and without taking SharePoint into account. We did everything we could to get it working on SharePoint: we wrote compensating CSS and custom controls, did tricks to fix the IDs created by ASP.NET, and rewrote multiple form tags back to the single ASP.NET form post, but we could not get it working the way they wanted.

We saw two approaches to solve our issues:

- Use SharePoint for web content management and build an ASP.NET MVC site against the SharePoint services to read the data for the site

- Make the SharePoint Publishing beast dance to our tunes

It became a kind of competition within our company which approach would be best, and we managed to do both.

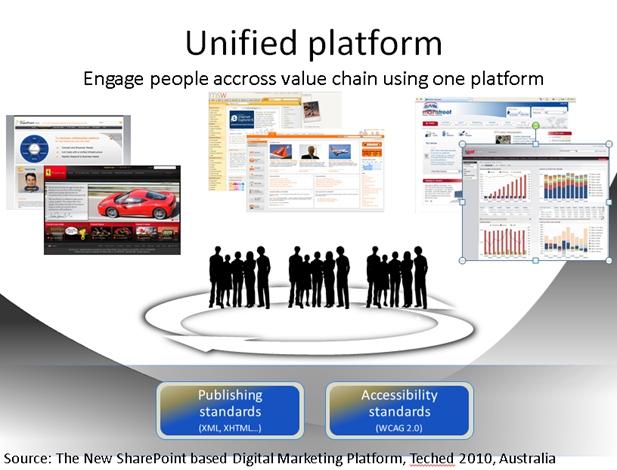

Using ASP.NET MVC works great, but then it isn’t SharePoint. It’s just like a custom application that uses data from an external database. That is difficult to sell to a company that has just decided, for very good reasons, to go for a unified platform for intranet, extranet and internet. (See also my post Why use SharePoint Publishing for public internet sites?)

But we also managed to do the second, which we named DualLayout. We created an approach that introduces an additional mode to SharePoint Publishing pages. Besides the SharePoint Publishing WCM mode with its edit and display modes, we introduced a view mode. The view mode gives you a view-only version of the page where you don’t have to bother about the page going into edit mode, and also don’t need the plumbing that enables your page to go into edit mode.

So now a SharePoint Publishing page can have the following modes:

- SharePoint Publishing WCM Display Mode

The standard SharePoint display mode using the master page and page layout with all the required controls and the field controls rendered in display mode.

- SharePoint Publishing WCM Edit Mode

The standard SharePoint edit mode using the master page and page layout with all the required controls and the field controls rendered in edit mode.

- SharePoint Publishing View Mode

The additional view, with complete freedom in HTML, no requirements for controls or ASP.NET single web form.

And how is this accomplished? We made it possible to have a master page and page layout for WCM mode (edit, display) and a master page and page layout for view mode (display only). Depending on the mode we display the page with the correct master page and page layout. This gives us complete freedom in designing an end user view separate from a content author / approver view.

The WCM master page and page layout are the domain of the SharePoint specialist. She can go for completely WYSIWYG editing, or use the out of the box v4.master master page to stay as close as possible with the SharePoint look and feel so the content authors recognize the UI from the SharePoint text books.

The View Mode master page and page layout are completely the domain of the web site designer. Any HTML can be used; there is no dependency on core.css or SharePoint JavaScript. If required, the ASP.NET single web form model can be used, for example around a web part zone, but it does not have to be around the complete page, or it can be left out completely.

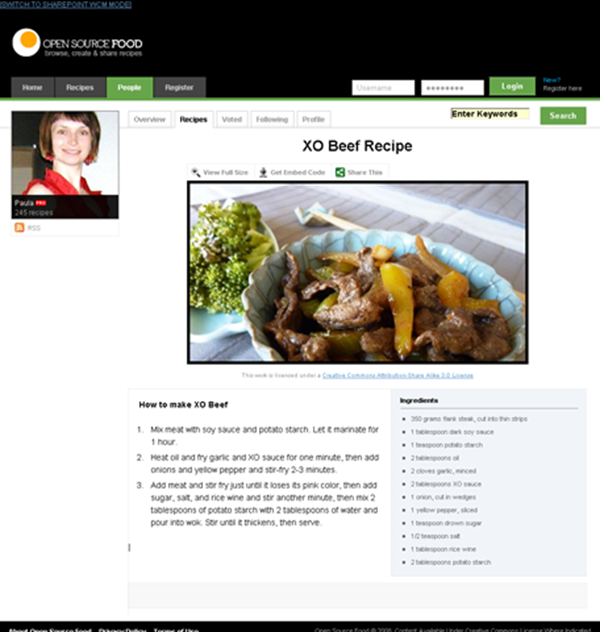

Below are some screenshots of an example I made with DualLayout based on the web site http://opensourcefood.com. In a next post I will go into detail on how I created the example using DualLayout. In this example I use the out of the box v4.master master page for the WCM mode (edit/display), where I give a schematic preview of the content in WCM display mode and provide guidance for the content author in WCM edit mode. In the view mode I generate the exact HTML that is used in the actual site http://opensourcefood.com, without any compromise.

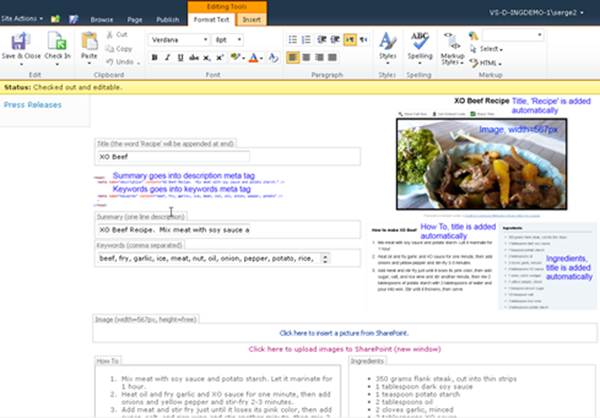

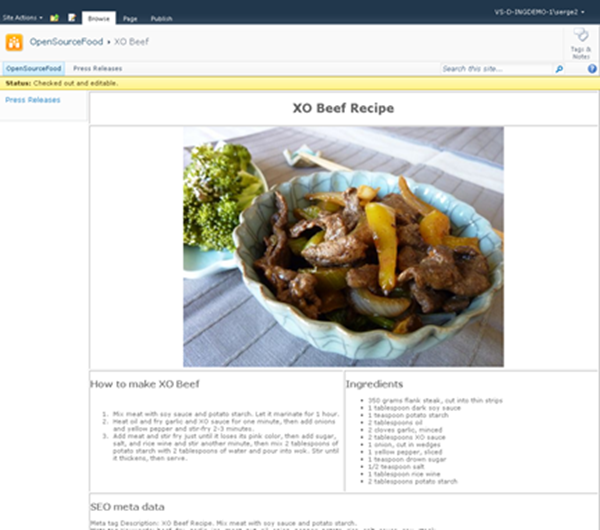

Image 7: OpenSourceFood sample, SharePoint Publishing WCM Edit Mode

Image 8: OpenSourceFood sample, SharePoint Publishing WCM Display Mode

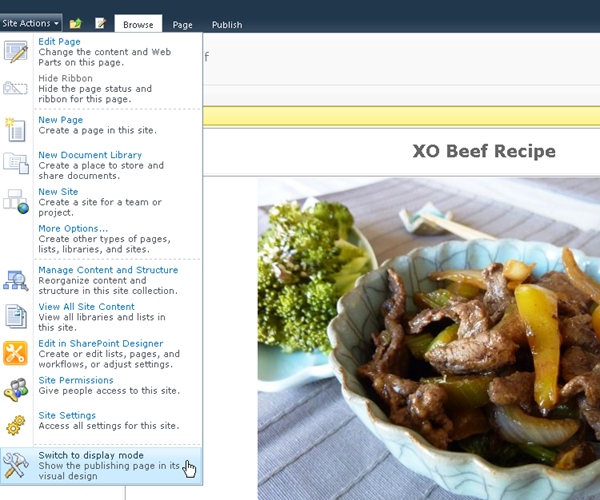

Image 9: OpenSourceFood sample, SharePoint Publishing View Mode

If you are in the SharePoint Publishing View Mode, and you have the rights to author the page, there is a link that the designer can put in any location on the page to switch to WCM mode. In the zoom below you can see that we created it as a simple link at the top of the page. This link is not visible to the end users of your site.

Image 10: Link in View mode back to WCM Display mode

If you are in WCM Edit mode and want to see a preview of your page in View mode, you can go to the Page tab on the ribbon and select the Preview action:

Image 11: See a preview of the page you are currently editing in View mode

If you are in WCM Display mode, you can switch to the View mode using an action in the Site Actions menu:

Conclusion

DualLayout gives you all the freedom you need to build Internet-facing web sites on the SharePoint Publishing platform, the way you want, without any restrictions. In the next post I will explain how I created the OpenSourceFood sample. DualLayout works on both MOSS 2007 and SharePoint 2010. I will make a full-featured download available soon so you can try it out yourself. Let me know if you are interested.

-

Why use SharePoint Publishing for public internet sites?

Is SharePoint the best Web Content Management System you can get? Is it the best platform for your internet sites? Maybe not. There are specialized WCM platforms like Tridion, SiteCore and EPIServer that might be better. But there are many reasons why you would use SharePoint over the many alternatives.

Strategic platform for many organizations – SharePoint is a platform that works great for intranets and extranets. It is often selected as the strategic web platform within an organization, with a “SharePoint, unless” policy in place. So why not do the public facing web sites on SharePoint as well?

Unified web platform – In these organizations SharePoint is used as the unified web platform that is used for team, divisional, intranet, extranet, and internet sites. This unified platform promises to reduce cost and increase agility.

With respect to the Internet this vision is also promoted by Microsoft as Microsoft’s Internet Business Vision with focus on IT Control (existing infrastructure, low TCO) and web agility; one platform to rule them all:

See also: Microsoft's Internet Business Platform Vision Part 1, Microsoft's Internet Business Platform Vision Part 2

Reuse of knowledge and people - Unified Development, Unified Infrastructure; the same skills can be reused for development and maintenance.

Existing relationships – the Communication department (focusing on Intranet/Extranet) and the Marketing department (focusing on Internet) are either the same group of people, or working closely together.

Support from the community – SharePoint is one of the Microsoft server products with the best community support in blogs, support sites and open source projects. For example on http://codeplex.com you can find 1300 open source projects related to SharePoint, versus for example 27 projects for EPIServer.

SharePoint is a development platform – SharePoint isn’t just an out of the box product, it is a development platform that you can extend at will, like an infinite number of companies already did to provide you with tools for all the (many) gaps that exist in the out of the box SharePoint offering.

---

But if you look at the Internet sites that are built with SharePoint Publishing, some people are a bit disappointed. Microsoft’s classic showcases like http://ferrari.com and http://www.hawaiianair.com deliver pages with 1000+ lines, and 100+ validation errors when you run the W3C validator. But there are also a lot of great sites that use the SharePoint Publishing platform. Have a look at http://www.wssdemo.com/livepivot/ for a nice overview of those sites. In a future post I will describe our approach to delivering quality HTML on the SharePoint Publishing platform. We call this approach DualLayout.

-

Is your code running in a SharePoint Sandbox?

You could execute a function call that is not allowed in the sandbox (for example call a static method on SPSecurity) and catch the exception. A better approach is to test the friendly name of your app domain:

AppDomain.CurrentDomain.FriendlyName returns "Sandboxed Code Execution Partially Trusted Asp.net AppDomain"

Because you can never be sure that this string will not change in the future, a safer approach is:

AppDomain.CurrentDomain.FriendlyName.Contains("Sandbox")

See http://www.sharepointoverflow.com/questions/2051/how-to-check-if-code-is-running-as-sandboxed-solution for a discussion on this topic.
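Sandboxed SharePoint code itself would be written in C#, but the string test can be illustrated with a small PowerShell sketch. The function name Test-SandboxAppDomainName is hypothetical, and the case-insensitive comparison is an extra precaution of this sketch, not something the original check does.

```powershell
function Test-SandboxAppDomainName {
    param(
        # Defaults to the friendly name of the app domain running this code.
        [string]$FriendlyName = [AppDomain]::CurrentDomain.FriendlyName
    )

    # Substring test instead of an exact match, so that small changes to the
    # rest of the friendly name do not break the check.
    return $FriendlyName.IndexOf('Sandbox', [StringComparison]::OrdinalIgnoreCase) -ge 0
}

$inSandbox  = Test-SandboxAppDomainName -FriendlyName 'Sandboxed Code Execution Partially Trusted Asp.net AppDomain'
$outSandbox = Test-SandboxAppDomainName -FriendlyName 'DefaultDomain'
```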

-

Logging to SharePoint 2010 ULS log from sandbox

You can’t log directly from sandbox code to the SharePoint ULS log. Developing code without any form of logging is no longer acceptable, so you need an approach for each of the two situations you can end up in when developing sandbox code:

You don’t have control over the server (BPOS scenario):

- You can log to comments in your HTML code, I know it’s terrible, don’t log sensitive information

- Write entries to a SharePoint “log” list (also take care of some form of clean up, for example if the list is longer than 1000 items, remove the oldest item when writing a new log message)
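The clean-up rule for such a “log” list can be sketched independently of SharePoint. The sketch below uses an in-memory queue as a stand-in for the list; the function name Add-CappedLogEntry and its parameters are hypothetical, and a real implementation would use the SharePoint list API instead.

```powershell
function Add-CappedLogEntry {
    param(
        [System.Collections.Generic.Queue[string]]$Log,
        [string]$Message,
        [int]$MaxItems = 1000
    )

    # Write the new message, then drop the oldest entries until the cap is met.
    $Log.Enqueue($Message)
    while ($Log.Count -gt $MaxItems) {
        [void]$Log.Dequeue()
    }
}

# Usage: a cap of 3 keeps only the three most recent of five messages.
$log = New-Object 'System.Collections.Generic.Queue[string]'
1..5 | ForEach-Object { Add-CappedLogEntry -Log $log -Message "entry $_" -MaxItems 3 }
```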

You have control over the server:

- You can develop a full-trust sandbox proxy (http://msdn.microsoft.com/en-us/library/ff798427.aspx) that provides you with functionality to do logging. Scot Hillier also provides a ready made WSP package containing such a proxy that must be deployed at farm level (http://sandbox.codeplex.com, SharePoint Logger)

-

Taming the VSX beast from PowerShell

Using VSX from PowerShell is not always a pleasant experience. Most stuff in VSX is still good old COM, so the System.__ComObject types are flying around. In C# everything can be cast to everything (for example EnvDTE to EnvDTE2), but PowerShell can’t make sense of it.

Enter Power Console. In Power Console there are some neat tricks available to help you out of VSX trouble. And most of the trouble solving is done in… PowerShell. You just need to know what to do.

To get out of trouble do the following:

- Head over to the Power Console site

- Right-click on the Download button, and save the PowerConsole.vsix file to your disk

- Rename PowerConsole.vsix to PowerConsole.zip and unzip

- Look in the Scripts folder for the file Profile.ps1 which is full of PowerShell/VSX magic

The PowerShell functions that perform the VSX magic are:

Extract from Profile.ps1:

<#
.SYNOPSIS
    Get an explict interface on an object so that you can invoke the interface members.

.DESCRIPTION
    PowerShell object adapter does not provide explict interface members. For COM objects
    it only makes IDispatch members available.

    This function helps access interface members on an object through reflection. A new
    object is returned with the interface members as ScriptProperties and ScriptMethods.

.EXAMPLE
    $dte2 = Get-Interface $dte ([EnvDTE80.DTE2])
#>
function Get-Interface
{
    Param(
        $Object,
        [type]$InterfaceType
    )

    [Microsoft.VisualStudio.PowerConsole.Host.PowerShell.Implementation.PSTypeWrapper]::GetInterface($Object, $InterfaceType)
}

<#
.SYNOPSIS
    Get a VS service.

.EXAMPLE
    Get-VSService ([Microsoft.VisualStudio.Shell.Interop.SVsShell]) ([Microsoft.VisualStudio.Shell.Interop.IVsShell])
#>
function Get-VSService
{
    Param(
        [type]$ServiceType,
        [type]$InterfaceType
    )

    $service = [Microsoft.VisualStudio.Shell.Package]::GetGlobalService($ServiceType)
    if ($service -and $InterfaceType) {
        $service = Get-Interface $service $InterfaceType
    }

    $service
}

<#
.SYNOPSIS
    Get VS IComponentModel service to access VS MEF hosting.
#>
function Get-VSComponentModel
{
    Get-VSService ([Microsoft.VisualStudio.ComponentModelHost.SComponentModel]) ([Microsoft.VisualStudio.ComponentModelHost.IComponentModel])
}

The same Profile.ps1 file contains a nice example of how to use these functions:

A lot of other good samples can be found on the Power Console site at the home page.

Now there are two things you can do with respect to the Power Console specific function GetInterface() that is called in Get-Interface:

- Make sure that Power Console is installed and load the assembly Microsoft.VisualStudio.PowerConsole.Host.PowerShell.Implementation.dll

- Fire-up reflector and investigate the GetInterface() function to isolate the GetInterface code into your own library that only contains this functionality (I did this, it is a lot of work!)

For this post we use the first approach, the second approach is left for the reader as an exercise:-)

To try it out I want to present a perhaps somewhat unusual case: I want to be able to access Visual Studio from a PowerShell script that is executed from the MSBuild script building a project.

In the .csproj of my project I added the following line:

<!-- Macaw Software Factory targets -->

<Import Project="..\..\..\..\tools\DotNet2\MsBuildTargets\Macaw.Mast.Targets" />

The included targets file loads the PowerShell MSBuild Task (CodePlex) that is used to fire a PowerShell script on AfterBuild. Below is a relevant excerpt from this targets file:

Macaw.Mast.Targets:

<Project DefaultTargets="AfterBuild" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
    <UsingTask AssemblyFile="PowershellMSBuildTask.dll" TaskName="Powershell"/>
    <Target Name="AfterBuild" DependsOnTargets="$(AfterBuildDependsOn)">
        <!-- expand $(TargetDir) to _TargetDir, otherwise error on including in arguments list below -->
        <CreateProperty Value="$(TargetDir)">
            <Output TaskParameter="Value" PropertyName="_TargetDir" />
        </CreateProperty>
        <Message Text="OnBuildSuccess = $(@(IntermediateAssembly))"/>
        <Powershell Arguments="
                MastBuildAction=build;
                MastSolutionName=$(SolutionName);
                MastSolutionDir=$(SolutionDir);
                MastProjectName=$(ProjectName);
                MastConfigurationName=$(ConfigurationName);
                MastProjectDir=$(ProjectDir);
                MastTargetDir=$(_TargetDir);
                MastTargetName=$(TargetName);
                MastPackageForDeployment=$(MastPackageForDeployment);
                MastSingleProjectBuildAndPackage=$(MastSingleProjectBuildAndPackage)
            "
            VerbosePreference="Continue"
            Script="&amp; (Join-Path -Path &quot;$(SolutionDir)&quot; -ChildPath &quot;..\..\..\tools\MastDeployDispatcher.ps1&quot;)" />
    </Target>
</Project>

The MastDeployDispatcher.ps1 script is a Macaw Solutions Factory specific script, but you get the idea. To test in which context the PowerShell script is running I added the following lines of PowerShell code to the executed PowerShell script:

$process = [System.Diagnostics.Process]::GetCurrentProcess()

Write-Host "Process name: $($process.ProcessName)"

Which returns:

Process name: devenv

So we know our PowerShell script is running in the context of the Visual Studio process. I wonder if this is still the case if you set the maximum number of parallel project builds to a value higher than 1 (Tools->Options->Projects and Solutions->Build and Run). I set the value to 10, tried it, and it still worked, but I don’t know if there were more builds running at the same time.

My first step was to try one of the examples on the Power Console home page: show “Hello world” using the IVsUIShell.ShowMessageBox() function.

I added the following code to the PowerShell script:

PowerShell from MSBuild:

[void][reflection.assembly]::LoadFrom("C:\Users\serge\Downloads\PowerConsole\Microsoft.VisualStudio.PowerConsole.Host.PowerShell.Implementation.dll")
[void][reflection.assembly]::LoadWithPartialName("Microsoft.VisualStudio.Shell.Interop")

function Get-Interface
{
    Param(
        $Object,
        [type]$InterfaceType
    )

    [Microsoft.VisualStudio.PowerConsole.Host.PowerShell.Implementation.PSTypeWrapper]::GetInterface($Object, $InterfaceType)
}

function Get-VSService
{
    Param(
        [type]$ServiceType,
        [type]$InterfaceType
    )

    $service = [Microsoft.VisualStudio.Shell.Package]::GetGlobalService($ServiceType)
    if ($service -and $InterfaceType) {
        $service = Get-Interface $service $InterfaceType
    }

    $service
}

$msg = "Hello world!"
$shui = Get-VSService `
    ([Microsoft.VisualStudio.Shell.Interop.SVsUIShell]) `
    ([Microsoft.VisualStudio.Shell.Interop.IVsUIShell])
[void]$shui.ShowMessageBox(0, [System.Guid]::Empty,"", $msg, "", 0, `
    [Microsoft.VisualStudio.Shell.Interop.OLEMSGBUTTON]::OLEMSGBUTTON_OK,
    [Microsoft.VisualStudio.Shell.Interop.OLEMSGDEFBUTTON]::OLEMSGDEFBUTTON_FIRST, `
    [Microsoft.VisualStudio.Shell.Interop.OLEMSGICON]::OLEMSGICON_INFO, 0)

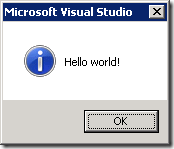

When I build the project I get the following:

So we are in business! It is possible to access the Visual Studio object model from a PowerShell script that is fired from the MSBuild script used to build your project. What you can do with that is up to your imagination. Note that you should differentiate between a build done on your developer box, executed from Visual Studio, and a build executed by for example your build server, or executing from MSBuild directly.
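One way to make that distinction is to look at the name of the hosting process, as was done earlier in this post. This is only a sketch: the function name Test-RunningInVisualStudio is hypothetical, and it assumes that a build started from Visual Studio always runs inside devenv, which is what I observed but did not verify for every configuration.

```powershell
function Test-RunningInVisualStudio {
    param(
        # Defaults to the name of the process hosting this PowerShell code.
        [string]$ProcessName = [System.Diagnostics.Process]::GetCurrentProcess().ProcessName
    )

    # Assumption: a build started from Visual Studio runs inside devenv.exe.
    return $ProcessName -ieq 'devenv'
}

if (Test-RunningInVisualStudio) {
    Write-Host "Running inside Visual Studio, the object model should be reachable"
}
else {
    Write-Host "Not running inside Visual Studio, skipping Visual Studio specific steps"
}
```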

-

Powershell: Finding items in a Visual Studio project

In the Macaw Solutions Factory we execute a lot of PowerShell code in the context of Visual Studio, meaning that we can access the Visual Studio object model directly from our PowerShell code.

There is a great add-in for Visual Studio that provides you with a PowerShell console within Visual Studio that also allows you to access the Visual Studio object model to play with VSX (Visual Studio Extensibility). This add-in is called Power Console.

If you paste the function below in this Power Console, you can find a (selection of) project items in a specified Visual Studio project.

For example:

FindProjectItems -SolutionRelativeProjectFile 'Business.ServiceInterfaces\Business.ServiceInterfaces.csproj' -Pattern '*.asmx' | select-object RelativeFileName

returns:

RelativeFileName
----------------
Internal\AnotherSoapService.asmx
SampleSoapService.asmx

What I do is extend the standard Visual Studio ProjectItem objects with two fields: FileName (the full path to the item) and RelativeFileName (the path to the item relative to the project folder); see the Add-Member calls at the end of FindProjectItemsRecurse. I return a collection of Visual Studio project items with these additional fields.

A great way of testing out this kind of code is by editing it in Visual Studio using the PowerGuiVSX add-in (which uses the unsurpassed PowerGui script editor), and copying over the code into the Power Console.

Find project items:

function FindProjectItems
{
    param
    (
        $SolutionRelativeProjectFile,
        $Pattern = '*'
    )

    function FindProjectItemsRecurse
    {
        param
        (
            $AbsolutePath,
            $RelativePath = '',
            $ProjectItem,
            $Pattern
        )

        $projItemFolder = '{6BB5F8EF-4483-11D3-8BCF-00C04F8EC28C}' # Visual Studio defined constant

        if ($ProjectItem.Kind -eq $projItemFolder)
        {
            # Folder: recurse into its child items
            if ($ProjectItem.ProjectItems -ne $null)
            {
                if ($RelativePath -eq '')
                {
                    $relativeFolderPath = $ProjectItem.Name
                }
                else
                {
                    $relativeFolderPath = Join-Path -Path $RelativePath -ChildPath $ProjectItem.Name
                }
                $ProjectItem.ProjectItems | ForEach-Object {
                    FindProjectItemsRecurse -AbsolutePath $AbsolutePath -RelativePath $relativeFolderPath -ProjectItem $_ -Pattern $Pattern
                }
            }
        }
        else
        {
            # File: return it when its name matches the pattern
            if ($ProjectItem.Name -like $Pattern)
            {
                if ($RelativePath -eq '')
                {
                    $relativeFileName = $ProjectItem.Name
                }
                else
                {
                    if ($RelativePath -eq $null) { Write-Host "Relative Path is NULL" }
                    $relativeFileName = Join-Path -Path $RelativePath -ChildPath $ProjectItem.Name
                }
                $fileName = Join-Path -Path $AbsolutePath -ChildPath $relativeFileName

                # Extend the project item with the two additional fields
                $ProjectItem |
                    Add-Member -MemberType NoteProperty -Name RelativeFileName -Value $relativeFileName -PassThru |
                    Add-Member -MemberType NoteProperty -Name FileName -Value $fileName -PassThru
            }
        }
    }

    $proj = $DTE.Solution.Projects.Item($SolutionRelativeProjectFile)
    # Check for a missing project before using it
    if ($proj -eq $null) { throw "No project '$SolutionRelativeProjectFile' found in current solution" }
    $projPath = Split-Path -Path $proj.FileName -Parent
    $proj.ProjectItems | ForEach-Object {
        FindProjectItemsRecurse -AbsolutePath $projPath -ProjectItem $_ -Pattern $Pattern
    }
}
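The Add-Member technique used above does not depend on Visual Studio. As a rough sketch it can be tried on ordinary files; the function name Get-FileWithRelativeName and the demo file names are hypothetical, and the file system only stands in for the project item tree.

```powershell
function Get-FileWithRelativeName {
    param(
        [string]$Root,
        [string]$Pattern = '*'
    )

    # Normalise the root so the relative part can be cut off reliably.
    $rootPath = (Get-Item -LiteralPath $Root).FullName.TrimEnd([char[]]'\/')

    Get-ChildItem -LiteralPath $rootPath -Recurse |
        Where-Object { -not $_.PSIsContainer -and $_.Name -like $Pattern } |
        ForEach-Object {
            $relative = $_.FullName.Substring($rootPath.Length).TrimStart([char[]]'\/')
            # -PassThru returns the extended object so it flows on in the pipeline.
            $_ | Add-Member -MemberType NoteProperty -Name RelativeFileName -Value $relative -PassThru
        }
}

# Usage with throw-away files in a temporary folder.
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ("AddMemberDemo_" + [guid]::NewGuid().ToString('N'))
$internal = Join-Path $demo 'Internal'
New-Item -ItemType Directory -Path $internal -Force | Out-Null
Set-Content -Path (Join-Path $demo 'Sample.asmx') -Value ''
Set-Content -Path (Join-Path $internal 'Another.asmx') -Value ''
Set-Content -Path (Join-Path $demo 'Readme.txt') -Value ''

$found = @(Get-FileWithRelativeName -Root $demo -Pattern '*.asmx' | Sort-Object Name)
$found | Select-Object RelativeFileName

Remove-Item -Path $demo -Recurse -Force
```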

-

PowerShell internal functions

I have been working with PowerShell for years already, and never knew that this would work! Internal functions in PowerShell (they are usually called nested functions):

function x
{
    function y
    {
        "function y"
    }

    y
}

PS> x

function y

PS> y

ERROR!
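The scoping behaviour can be checked without triggering the error. This is a small sketch with hypothetical function names (Invoke-Outer, Get-Inner): the inner function exists only while the outer function is running.

```powershell
function Invoke-Outer {
    # Get-Inner is defined in the scope of Invoke-Outer and disappears with it.
    function Get-Inner { 'function Get-Inner' }

    Get-Inner
}

$result = Invoke-Outer

# Outside Invoke-Outer the inner function cannot be found.
$innerVisible = [bool](Get-Command Get-Inner -CommandType Function -ErrorAction SilentlyContinue)
Write-Host "Result: $result; inner function visible outside: $innerVisible"
```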

-

Returning an exit code from a PowerShell script

Returning an exit code from a PowerShell script seems easy… but it isn’t that obvious. In this blog post I will show you an approach that works for PowerShell scripts that can be called from both PowerShell and batch scripts, where the command to be executed can be specified in a string, executes in its own context and always returns the correct error code.

Below is a kind of transcript of the steps that I took to get to an approach that works for me. For the conclusions, just jump to the end.

Many blog posts describe how to call a PowerShell script from a batch script and how to return an error code. This comes down to the following:

c:\temp\exit.ps1:

Write-Host "Exiting with code 12345"

exit 12345

c:\temp\testexit.cmd:

@PowerShell -NonInteractive -NoProfile -Command "& {c:\temp\exit.ps1; exit $LastExitCode }"

@echo From Cmd.exe: Exit.ps1 exited with exit code %errorlevel%

Executing c:\temp\testexit.cmd results in the following output:

Exiting with code 12345

From Cmd.exe: Exit.ps1 exited with exit code 12345

But now we want to call it from another PowerShell script, by executing PowerShell:

c:\temp\testexit.ps1:

PowerShell -NonInteractive -NoProfile -Command c:\temp\exit.ps1

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

Executing c:\temp\testexit.ps1 results in the following output:

Exiting with code 12345

From PowerShell: Exit.ps1 exited with exit code 1

This is not what we expected… What happens? If the script just returns, the exit code is 0; otherwise the exit code is 1, even if you exit with a specific exit code!?

But what if we call the script directly, instead of through the PowerShell command?

We change exit.ps1 to:

Write-Host "Global variable value: $globalvariable"

Write-Host "Exiting with code 12345"

exit 12345

And we change testexit.ps1 to:

$global:globalvariable = "My global variable value"

& c:\temp\exit.ps1

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

Executing c:\temp\testexit.ps1 results in the following output:

Global variable value: My global variable value

Exiting with code 12345

From PowerShell: Exit.ps1 exited with exit code 12345

This is what we wanted! But now we are executing the script exit.ps1 in the context of the testexit.ps1 script: the globally defined variable $globalvariable is still known. This is not what we want. We want to execute it in isolation.

We change c:\temp\testexit.ps1 to:

$global:globalvariable = "My global variable value"

PowerShell -NonInteractive -NoProfile -Command c:\temp\exit.ps1

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

Executing c:\temp\testexit.ps1 results in the following output:

Global variable value:

Exiting with code 12345

From PowerShell: Exit.ps1 exited with exit code 1

We are not executing exit.ps1 in the context of testexit.ps1, which is good. But how can we reach the holy grail:

- Write a PowerShell script that can be executed from batch scripts and from PowerShell

- That returns a specific error code

- That can be specified as a string

- That can be executed both in the context of a calling PowerShell script AND (through a call to PowerShell) in its own execution space

We change c:\temp\testexit.ps1 to:

$global:globalvariable = "My global variable value"

PowerShell -NonInteractive -NoProfile -Command { c:\temp\exit.ps1 ; exit $LastExitCode }

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

This is the same approach as when we called it from the batch script. Executing c:\temp\testexit.ps1 results in the following output:

Global variable value:

Exiting with code 12345

From PowerShell: Exit.ps1 exited with exit code 12345

This is close. But we want to be able to specify the command to be executed as a string, for example:

$command = "c:\temp\exit.ps1 -param1 x -param2 y"

We change c:\temp\exit.ps1 to: (support for variables, test if in its own context)

param( $param1, $param2)

Write-Host "param1=$param1; param2=$param2"

Write-Host "Global variable value: $globalvariable"

Write-Host "Exiting with code 12345"

exit 12345

If we change c:\temp\testexit.ps1 to:

$global:globalvariable = "My global variable value"

$command = "c:\temp\exit.ps1 -param1 x -param2 y"

Invoke-Expression -Command $command

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

We get a good exit code, but we are still executing in the context of testexit.ps1.

What if we use the same trick that worked before when calling from a batch script?

We change c:\temp\testexit.ps1 to:

$global:globalvariable = "My global variable value"

$command = "c:\temp\exit.ps1 -param1 x -param2 y"

PowerShell -NonInteractive -NoProfile -Command { $command; exit $LastErrorLevel }

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

Executing c:\temp\testexit.ps1 results in the following output:

From PowerShell: Exit.ps1 exited with exit code 0

Ok, let’s use Invoke-Expression again. We change c:\temp\testexit.ps1 to:

$global:globalvariable = "My global variable value"

$command = "c:\temp\exit.ps1 -param1 x -param2 y"

PowerShell -NonInteractive -NoProfile -Command { Invoke-Expression -Command $command; exit $LastErrorLevel }

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

Executing c:\temp\testexit.ps1 results in the following output:

Cannot bind argument to parameter 'Command' because it is null.

At :line:3 char:10

+ PowerShell <<<< -NonInteractive -NoProfile -Command { Invoke-Expression -Command $command; exit $LastErrorLevel }

From PowerShell: Exit.ps1 exited with exit code 1

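The error shows that $command has no value inside the script block, which runs in the new PowerShell process. We should go back to executing the command as a string, so not within braces (in a script block). We change c:\temp\testexit.ps1 to: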

$global:globalvariable = "My global variable value"

$command = "c:\temp\exit.ps1 -param1 x -param2 y"

PowerShell -NonInteractive -NoProfile -Command $command

Write-Host "From PowerShell: Exit.ps1 exited with exit code $LastExitCode"

Executing c:\temp\testexit.ps1 results in the following output:

param1=x; param2=y

Global variable value:

Exiting with code 12345

From PowerShell: Exit.ps1 exited with exit code 1

OK, we can execute the specified command text as if it were a PowerShell command. But we still have the exit code problem: only 0 or 1 is returned.

Let's try something completely different. We change c:\temp\exit.ps1 to:

param( $param1, $param2)

function ExitWithCode

{

param

(

$exitcode

)

$host.SetShouldExit($exitcode)

exit

}

Write-Host "param1=$param1; param2=$param2"

Write-Host "Global variable value: $globalvariable"

Write-Host "Exiting with code 12345"

ExitWithCode -exitcode 12345

Write-Host "After exit"

What we do is tell the host which exit code we would like to use, and then just exit, all in the simplest utility function.

Executing c:\temp\testexit.ps1 results in the following output:

param1=x; param2=y

Global variable value:

Exiting with code 12345

From PowerShell: Exit.ps1 exited with exit code 12345

OK, this fulfills all our holy grail dreams! But couldn’t we also make the call from the batch script simpler?

Change c:\temp\testexit.cmd to:

@PowerShell -NonInteractive -NoProfile -Command "c:\temp\exit.ps1 -param1 x -param2 y"

@echo From Cmd.exe: Exit.ps1 exited with exit code %errorlevel%

Executing c:\temp\testexit.cmd results in the following output:

param1=x; param2=y

Global variable value:

Exiting with code 12345

From Cmd.exe: Exit.ps1 exited with exit code 12345

This is even simpler! We can now just call the PowerShell code, without the exit $LastExitCode trick!

========================= CONCLUSIONS ============================

And now the conclusions of this long, long story, which took a lot of time to figure out (and for you to read):

- Don’t use exit to return a value from PowerShell code, but use the following function:

function ExitWithCode

{

param

(

$exitcode

)

$host.SetShouldExit($exitcode)

exit

}

- Call script from batch using:

PowerShell -NonInteractive -NoProfile -Command "c:\temp\exit.ps1 -param1 x -param2 y"

echo %errorlevel%

- Call from PowerShell with: (Command specified in string, execute in own context)

$command = "c:\temp\exit.ps1 -param1 x -param2 y"

PowerShell -NonInteractive -NoProfile -Command $command

$LastExitCode contains the exit code

- Call from PowerShell with: (Direct command, execute in own context)

PowerShell -NonInteractive -NoProfile -Command { c:\temp\exit.ps1 -param1 x -param2 y }

$LastExitCode contains the exit code

- Call from PowerShell with: (Command specified in string, invoke in caller context)

Invoke-Expression -Command $command

$LastExitCode contains the exit code

- Call from PowerShell with: (Direct command, execute in caller context)

& c:\temp\exit.ps1 -param1 x -param2 y

$LastExitCode contains the exit code

-

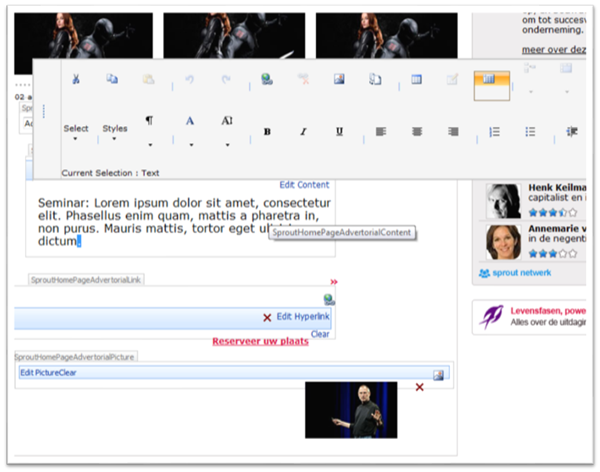

SharePoint 2010 Replaceable Parameter, some observations…

SharePoint Tools for Visual Studio 2010 provides a rudimentary mechanism for replaceable parameters that you can use in files that are not compiled, like ascx files and your project property settings. The basics on this can be found in the documentation at http://msdn.microsoft.com/en-us/library/ee231545.aspx.

There are some quirks however. For example:

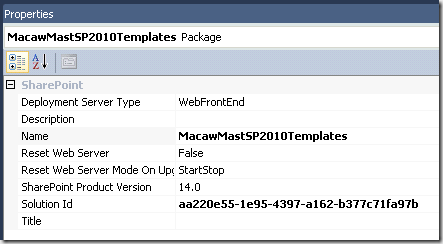

My Package name is MacawMastSP2010Templates, as defined in my Package properties:

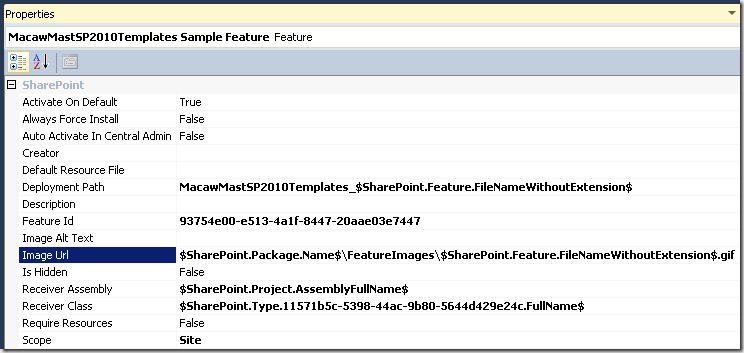

I want to use the $SharePoint.Package.Name$ replaceable parameter in my feature properties. But this parameter does not work in the “Deployment Path” property, although other parameters do work there and this parameter does work in the “Image Url” property. It simply does not get expanded. So I had to resort to explicitly naming the first part of the deployment path:

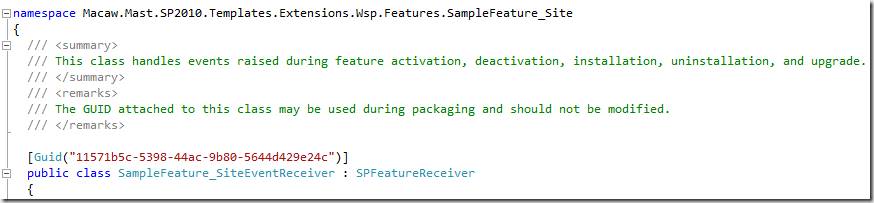

You also see a special property for the “Receiver Class” in the format $SharePoint.Type.<GUID>.FullName$. The documentation gives the following description:

The full name of the type matching the GUID in the token. The format of the GUID is lowercase and corresponds to the Guid.ToString(“D”) format (that is, xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx).

Not very clear. After some searching it turned out to be the GUID as declared in my feature receiver code:

In other properties you see a different set of replaceable parameters:

We have used a similar mechanism for replaceable parameters for years in our Macaw Solutions Factory for SharePoint 2007 development, where each replaceable parameter is a PowerShell function. This provides so much more power.

For example in a feature declaration we can say:

<?xml version="1.0" encoding="utf-8" ?>

<!-- Template expansion

[[ProductDependency]] -> Wss3 or Moss2007

[[FeatureReceiverAssemblySignature]] -> for example: Macaw.Mast.Wss3.Templates.SharePoint.Features, Version=1.0.0.0, Culture=neutral, PublicKeyToken=6e9d15db2e2a0be5

[[FeatureReceiverClass]] -> for example: Macaw.Mast.Wss3.Templates.SharePoint.Features.SampleFeature.FeatureReceiver.SampleFeatureFeatureReceiver

-->

<Feature Id="[[$Feature.SampleFeature.ID]]"

Title="MAST [[$MastSolutionName]] Sample Feature"

Description="The MAST [[$MastSolutionName]] Sample Feature, where all possible elements in a feature are showcased"

Version="1.0.0.0"

Scope="Site"

Hidden="FALSE"

ImageUrl="[[FeatureImage]]"

ReceiverAssembly="[[FeatureReceiverAssemblySignature]]"

ReceiverClass="[[FeatureReceiverClass]]"

xmlns="http://schemas.microsoft.com/sharepoint/">

<ElementManifests>

<ElementManifest Location="ExampleCustomActions.xml" />

<ElementManifest Location="ExampleSiteColumns.xml" />

<ElementManifest Location="ExampleContentTypes.xml" />

<ElementManifest Location="ExampleDocLib.xml" />

<ElementManifest Location="ExampleMasterPages.xml" />

<!-- Element files -->

[[GenerateXmlNodesForFiles -path 'ExampleDocLib\*.*' -node 'ElementFile' -attributes @{Location = { RelativePathToExpansionSourceFile -path $_ }}]]

[[GenerateXmlNodesForFiles -path 'ExampleMasterPages\*.*' -node 'ElementFile' -attributes @{Location = { RelativePathToExpansionSourceFile -path $_ }}]]

[[GenerateXmlNodesForFiles -path 'Resources\*.resx' -node 'ElementFile' -attributes @{Location = { RelativePathToExpansionSourceFile -path $_ }}]]

</ElementManifests>

</Feature>

We have a solution level PowerShell script file named TemplateExpansionConfiguration.ps1 where we declare our variables (starting with a $) and include helper functions:

# ==============================================================================================

# NAME: product:\src\Wss3\Templates\TemplateExpansionConfiguration.ps1

#

# AUTHOR: Serge van den Oever, Macaw

# DATE : May 24, 2007

#

# COMMENT:

# Nota bene: define variable and function definitions global to be visible during template expansion.

#

# ==============================================================================================

Set-PSDebug -strict -trace 0 #variables must have value before usage

$global:ErrorActionPreference = 'Stop' # Stop on errors

$global:VerbosePreference = 'Continue' # set to SilentlyContinue to get no verbose output

# Load template expansion utility functions

. product:\tools\Wss3\MastDeploy\TemplateExpansionUtil.ps1

# If exists add solution expansion utility functions

$solutionTemplateExpansionUtilFile = $MastSolutionDir + "\TemplateExpansionUtil.ps1"

if ((Test-Path -Path $solutionTemplateExpansionUtilFile))

{

. $solutionTemplateExpansionUtilFile

}

# ==============================================================================================

# Expected: $Solution.ID; Unique GUID value identifying the solution (DON'T INCLUDE BRACKETS).

# function: GuidUpperCaseWithoutCurlies -guid '{...}' ensures correct syntax

$global:Solution = @{

ID = GuidUpperCaseWithoutCurlies -guid '{d366ced4-0b98-4fa8-b256-c5a35bcbc98b}';

}

# DON'T INCLUDE BRACKETS for feature id's!!!

# function: GuidUpperCaseWithoutCurlies -guid '{...}' ensures correct syntax

$global:Feature = @{

SampleFeature = @{

ID = GuidUpperCaseWithoutCurlies -guid '{35de59f4-0c8e-405e-b760-15234fe6885c}';

}

}

$global:SiteDefinition = @{

TemplateBlankSite = @{

ID = '12346';

}

}

# To inherit from this content type add the delimiter (00) and then your own guid

# ID: <base>00<newguid>

$global:ContentType = @{

ExampleContentType = @{

ID = '0x01008e5e167ba2db4bfeb3810c4a7ff72913';

}

}

# INCLUDE BRACKETS for column id's and make them LOWER CASE!!!

# function: GuidLowerCaseWithCurlies -guid '{...}' ensures correct syntax

$global:SiteColumn = @{

ExampleChoiceField = @{

ID = GuidLowerCaseWithCurlies -guid '{69d38ce4-2771-43b4-a861-f14247885fe9}';

};

ExampleBooleanField = @{

ID = GuidLowerCaseWithCurlies -guid '{76f794e6-f7bd-490e-a53e-07efdf967169}';

};

ExampleDateTimeField = @{

ID = GuidLowerCaseWithCurlies -guid '{6f176e6e-22d2-453a-8dad-8ab17ac12387}';

};

ExampleNumberField = @{

ID = GuidLowerCaseWithCurlies -guid '{6026947f-f102-436b-abfd-fece49495788}';

};

ExampleTextField = @{

ID = GuidLowerCaseWithCurlies -guid '{23ca1c29-5ef0-4b3d-93cd-0d1d2b6ddbde}';

};

ExampleUserField = @{

ID = GuidLowerCaseWithCurlies -guid '{ee55b9f1-7b7c-4a7e-9892-3e35729bb1a5}';

};

ExampleNoteField = @{

ID = GuidLowerCaseWithCurlies -guid '{f9aa8da3-1f30-48a6-a0af-aa0a643d9ed4}';

};

}
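The two GUID helper functions used above live in TemplateExpansionUtil.ps1 and are not shown in this post. Going only by their names and the comments in the configuration file, they could look roughly like the sketch below. The bodies are an assumption for illustration, not the actual implementation:

```powershell
# Assumed implementations, reconstructed from the function names only.
# Defined global so they stay visible during template expansion.

function global:GuidUpperCaseWithoutCurlies
{
    param
    (
        [string] $guid   # GUID text, with or without curly braces
    )

    # Format "D" gives xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx without braces
    ([guid] $guid).ToString('D').ToUpper()
}

function global:GuidLowerCaseWithCurlies
{
    param
    (
        [string] $guid   # GUID text, with or without curly braces
    )

    # Format "B" gives {xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx} with braces
    ([guid] $guid).ToString('B').ToLower()
}

GuidUpperCaseWithoutCurlies -guid '{d366ced4-0b98-4fa8-b256-c5a35bcbc98b}'
GuidLowerCaseWithCurlies -guid '69D38CE4-2771-43B4-A861-F14247885FE9'
```

Casting to [guid] first means a malformed value fails immediately, instead of ending up in a feature or field definition.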

This gives so many more possibilities, for example the element file expansion, where a PowerShell function iterates through a folder and generates the required XML nodes.
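The expansion step itself is not shown in this post. As a rough sketch, assuming every [[...]] token is simply evaluated as a PowerShell expression, it could look something like this. The function name Expand-Template, the regular expression and the sample values are hypothetical and only serve as illustration; this is not the actual Macaw Solutions Factory code:

```powershell
# Illustrative sketch only: Expand-Template is a hypothetical name.
function Expand-Template
{
    param
    (
        [string] $Text   # template text containing [[...]] tokens
    )

    # Lazily match everything between [[ and ]]
    $pattern = '\[\[(.+?)\]\]'

    # For every token, run its content as PowerShell and use the output as replacement
    $evaluator = [System.Text.RegularExpressions.MatchEvaluator] {
        param($match)

        $result = Invoke-Expression -Command $match.Groups[1].Value

        # A token may produce several values (for example generated XML nodes)
        @($result) -join [Environment]::NewLine
    }

    [regex]::Replace($Text, $pattern, $evaluator)
}

# Sample values, defined global so they are visible during expansion
$global:MastSolutionName = 'Demo'
function global:FeatureImage { 'images/feature.gif' }

Expand-Template -Text 'Title="MAST [[$MastSolutionName]] Sample Feature" ImageUrl="[[FeatureImage]]"'
```

Because the token text goes through Invoke-Expression, a variable, a function call and a function call with parameters all expand the same way. The downside is that the template files must be trusted, since anything between the brackets gets executed.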

I think I will bring back this mechanism, so it can work together with the built-in replaceable parameters. There are hooks to define your custom replacements, as described by Waldek in this blog post.

-

A great overview of the features of the different SharePoint 2010 editions

The following document gives a good overview of the features available in the different SharePoint editions: Foundation (free), Standard and Enterprise.

http://sharepoint.microsoft.com/en-us/buy/pages/editions-comparison.aspx

It is good to see the power that is available in the free SharePoint Foundation edition, so there is no reason not to use SharePoint as a foundation for your collaboration applications.

-

weblogs.asp.net no longer usable as a blogging platform?

I get swamped by spam on my weblogs.asp.net weblog, both comment spam and spam through the contact form. It is getting so bad that I think the platform is becoming useless for me. Why, oh why, are we bloggers from the first hour still in the stone age, without any protection against spam? Implementing Captcha shouldn’t be that hard… As far as I know this is the same blogging platform used by blogs.msdn.com. Aren’t all Microsoft bloggers getting sick of spam? In the past I tried to contact the maintainers of weblogs.asp.net, but never got a response. Who maintains the platform? Why are we still running on a Community Server Edition of 2007? Please help me out, or I’m out of here.

-

Powershell output capturing and text wrapping… again…

A while ago I wrote a post “Powershell output capturing and text wrapping: strange quirks... solved!” on preventing output wrapping in PowerShell when capturing the output. In that post I wrote that I used the following way to capture the output with less probability of wrapping:

PowerShell -Command "`$host.UI.RawUI.BufferSize = new-object System.Management.Automation.Host.Size(512,50); `"c:\temp\testoutputandcapture.ps1`" -argument `"A value`"" >c:\temp\out.txt 2>&1

In the above situation I start a PowerShell script, but before doing that I set the buffer size.

Lately I had some issues with this: the values I used when setting the buffer size were either too small or too large. The more defensive approach described in the StackOverflow question http://stackoverflow.com/questions/978777/powershell-output-column-width works better for me.

I use it as follows at the top of my PowerShell script file:

Prevent text wrapping:

set-psdebug -strict -trace 0 #variables must have value before usage

$global:ErrorActionPreference = "Continue" # Continue on errors (set to Stop to stop on errors)

$global:VerbosePreference = "Continue" # set to SilentlyContinue to get no verbose output

# Reset the $LASTEXITCODE, so we assume no error occurs

$LASTEXITCODE = 0

# Update output buffer size to prevent output wrapping

if( $Host -and $Host.UI -and $Host.UI.RawUI ) {

$rawUI = $Host.UI.RawUI

$oldSize = $rawUI.BufferSize

$typeName = $oldSize.GetType( ).FullName

$newSize = New-Object $typeName (512, $oldSize.Height)

$rawUI.BufferSize = $newSize

}

-

Debugging executing program from PowerShell using EchoArgs.exe

Sometimes you pull your hair out because the execution of a command from PowerShell just does not seem to work the way you want.

A good example of this is the following case:

Given a folder on the filesystem, I want to determine if the folder is under TFS source control, and if it is, what the server name of the TFS server is and what the path of the folder in TFS is.

If you don’t use integrated security (some development machines are not domain joined) you can determine this using the command tf.exe workfold c:\projects\myproject /login:domain\username,password

From PowerShell I execute this command as follows:

$tfExe = "C:\Program Files\Microsoft Visual Studio 9.0\Common7\IDE\Tf.exe"

$projectFolder = "D:\Projects\Macaw.SolutionsFactory\TEST\Macaw.TestTfs"

$username = "domain\username"

$password = "password"

& $tfExe workfold $projectFolder /login:$username,$password

But I got the following error:

TF10125: The path 'D:\Projects\MyProject' must start with $/

I just couldn’t get it working, so I created a small batch file ExecTfWorkprodCommand.bat with the following content:

@echo off

rem This is a kind of strange batch file, needed because execution of this command in PowerShell gives an error.

rem This script retrieves TFS sourcecontrol information about a local folder using the following command:

rem tf workfold <localpath> /login:domain\user,password

rem %1 is path to the tf.exe executable

rem %2 is the local path

rem %3 is the domain\user

rem %4 is the password

rem Output is in format:

rem ===============================================================================

rem Workspace: MyProject@VS-D-SVDOMOSS-1 (Serge)

rem Server : tfs.yourcompany.nl

rem $/MyProject/trunk: C:\Projects\MyProject

if [%3]==[] goto integratedsecurity

%1 workfold "%2" /login:%3,%4

goto end

:integratedsecurity

%1 workfold "%2"

:end

And called this script file from PowerShell as follows:

$helperScript = "ExecTfWorkprodCommand.bat"

$tfExe = "C:\Program Files\Microsoft Visual Studio 9.0\Common7\IDE\Tf.exe"

$projectFolder = "D:\Projects\Macaw.SolutionsFactory\TEST\Macaw.TestTfs"

$username = "domain\username"

$password = "password"

& $helperScript $tfExe "`"$projectFolder`"" $username $password

This is way too much work, but I just couldn’t get it working.

Today I read a post that mentioned the tool EchoArgs.exe, available in the PowerShell Community Extensions (http://pscx.codeplex.com), which echoes the arguments as the executed application receives them from PowerShell.

I changed my script code to:

$tfExe = "C:\Program Files\PowerShell Community Extensions\EchoArgs.exe"

$projectFolder = "D:\Projects\MyProject"

$username = "domain\username"

$password = "password"

& $tfExe workfold $projectFolder /login:$username,$password

Which resulted in:

Arg 0 is <workfold>

Arg 1 is <D:\Projects\MyProject>

Arg 2 is </login:domain\username>

Arg 3 is <password>

And this immediately explained my issue: the “,” in “/login:$username,$password” split the argument in two!

The issue was simply resolved by using the following command from PowerShell:

& $tfExe workfold $projectFolder /login:"$username,$password"

Which results in:

Arg 0 is <workfold>

Arg 1 is <D:\Projects\MyProject>

Arg 2 is </login:domain\username,password>

Conclusion: if you have issues with executing programs from PowerShell, check out EchoArgs.exe!