Archives

-

Commenting out Code in C# (Oldie but Goldie Tip)

I usually “comment out” code

- when something does not work as expected and I am looking for a workaround, or

- when I want to quickly try out an idea for an alternative solution

and

- I feel that using source control is too much hassle for what I’m trying to do in this situation.

How?

The term “comment out” obviously comes from using code comments to hide source code from the compiler. C# has two kinds of comments that can be used for this, each with their pros and cons:

- End-of-line comments (“// this is a comment”) can be nested, i.e. you can comment a commented line. But they do not explicitly convey the start and the end of a commented section when looking at adjacent commented lines.

- Block comments (“/* this is a comment, possibly spanning several lines */”) do have an explicit start and end, but cannot be nested; you cannot surround a block comment with a block comment.

For disabling full lines of code, a different approach is to use the “#if … #endif” directive which tells the C# compiler to consider the code inside only if the specified symbol is defined. So to disable code, you simply specify a symbol that does not exist:

    #if SOME_UNDEFINED_SYMBOL
    ...one or more lines...
    #endif

Using the preprocessor for this purpose is nothing new, especially to those with a C/C++ background. On the other hand, considering how often I watch developers use comments in situations where “#if” would have clear advantages, maybe this blog post benefits at least some people out there.

Why #if?

“#if” directives can be nested

The C# compiler does not blindly look for a closing “#endif”; it understands which “#endif” belongs to which “#if”, so e.g. the following is possible:

    #if DISABLED
    ...some code...
    #if DEBUG
    ...debug code...
    #endif
    ...some code...
    #endif

You can express why you disabled a specific block of code

For example, you could use “#if TODO” when you are working on new code, but want to quickly run the program again without that code before continuing.

Something like “#if TO_BE_DELETED” (or maybe “#if DEL” for less typing) could mark code that you intend to remove after compiling the project and running the unit tests. If you are consistent with the naming of the symbol, performing a cleanup across the project is easy, because searching for “#if SYMBOL” works well.

Obviously, you could choose more descriptive symbols (e.g. “#if TODO_DATA_ACCESS” and “#if TODO_CACHING”) to differentiate different places of ongoing work. But if you think you need this, it could be a sign you are trying to juggle too many balls at once.

“#else” is great while you work on replacing code

Sometimes, when I have to deal with a non-obvious or even buggy third-party API, writing code involves a lot of experimentation. Then I like to keep old code around as a reference for a moment:

    #if OLD_WORKAROUND
    ...old code...
    #else
    ...new code...
    #endif

You can easily toggle code on/off

You can enable the disabled code simply by defining the symbol, either using “#define” in the same source file or as a conditional compilation symbol for the whole project.

Alternatively, you can invert the condition for a specific “#if” with a “!” in front of the symbol:

    #if !DISABLED
    ...some code...
    #endif

Being able to quickly switch code off and back on, or – in conjunction with “#else” – to switch between old and new code without losing the exact start and end positions of the code blocks is a huge plus. I use this e.g. when working on interactions in GUIs where I have to decide whether the old or the new code makes the user interface “feel” better.
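To make the toggling concrete, here is a minimal, self-contained sketch. The symbols OLD_WORKAROUND and DISABLED are the ones used in the snippets above; the class and method names (ToggleDemo, ActiveVariant, IsEnabled) are made up for this illustration, and the sketch assumes that neither symbol is defined as a project-wide conditional compilation symbol.

```csharp
#define OLD_WORKAROUND   // delete or comment out this line to switch to the new code

using System;

public static class ToggleDemo
{
    // Reports which of the two code paths was actually compiled.
    public static string ActiveVariant()
    {
#if OLD_WORKAROUND
        return "old code";
#else
        return "new code";
#endif
    }

    // DISABLED is not defined in this file, so "!DISABLED" is true and the
    // first branch is compiled. Removing the "!" would switch to the second.
    public static bool IsEnabled()
    {
#if !DISABLED
        return true;
#else
        return false;
#endif
    }

    public static void Main()
    {
        Console.WriteLine(ActiveVariant());
        Console.WriteLine(IsEnabled());
    }
}
```

Note that “#define” has to appear at the very top of the file, before any other code (including “using” directives). The start and end of both blocks stay exactly where they are, no matter how often you flip the switch.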

Notes on disabled code

- Disabled code in a code base should always be viewed as a temporary measure, because code that never gets compiled “rots” over time. To the compiler/IDE, disabled code is plain text that is not affected by refactoring or renaming, i.e. at some point it is no longer valid.

- Try to get rid of disabled code as soon as possible, preferably before committing to source control.

- Last, but not least: Consider using source control for what it is good at - it exists for a reason. For instance, when experiments involve changes to many files, a new branch may be the better choice.

-

Notes on Migrating a WPF Application to .NET Core 3.0

Recently, I migrated a WPF application from .NET Framework 4.7.2 to .NET Core 3.0, which took me about two hours in total. The application consisted of four assemblies (one EXE, one UI class library, two other library assemblies).

A Google search for “migrate wpf to .net core 3” brings up enough helpful documentation to get started, so this post is mainly a reminder to myself of what to look out for in the next migration, which may be far enough in the future that I’ll have forgotten important bits by then.

How to get started

My starting point was the Microsoft article “Migrating WPF apps to .NET Core”. A lot of text, which makes it easy to get impatient and start just skimming for keywords. Tip: Do take your time.

In my case, I somehow missed an important detail concerning the AssemblyInfo.cs file at first.

AssemblyInfo.cs

When you migrate to .NET Core and use the new project file format, you have to decide what you want to do about the AssemblyInfo.cs file.

If you create a new .NET Core project, that file is autogenerated from information you can enter in the “Packages” section of the project properties in Visual Studio (the information is stored in the project file).

In the .NET Framework version of the UI class library, I used the attributes XmlnsDefinition and XmlnsPrefix to make the XAML in the EXE project prettier. That’s why I wanted to keep using an AssemblyInfo.cs file I could edit manually. For this, I had to add the following property:

    <GenerateAssemblyInfo>false</GenerateAssemblyInfo>

PreBuild Step

I use a pre-build step in my UI library project (calling a tool called ResMerger). After the migration, macros like $(ProjectDir) were no longer resolved. The migration document does not cover pre/post build events (at the time of this writing). But the article “Additions to the csproj format for .NET Core” does, in the section “Build events”.

PreBuild event in the old csproj file:

    <PropertyGroup>
      <PreBuildEvent>"$(SolutionDir)tools\ResMerger.exe" "$(ProjectDir)Themes\\" $(ProjectName) "Generic.Source.xaml" "Generic.xaml"</PreBuildEvent>
    </PropertyGroup>

And in the new csproj file:

    <Target Name="PreBuild" BeforeTargets="PreBuildEvent">
      <Exec Command="&quot;$(SolutionDir)tools\ResMerger.exe&quot; &quot;$(ProjectDir)Themes\\&quot; $(ProjectName) Generic.Source.xaml Generic.xaml" />
    </Target>

Note that the text of the PreBuild step is now stored in an XML attribute, and thus needs to be XML escaped (the quotes around the paths become &quot;). If you have a lot of PreBuild/PostBuild steps to deal with, it may be a good idea to use the Visual Studio UI to copy and paste the texts before and after migrating the project file.

WPF Extended Toolkit

My application uses some controls from the Extended WPF Toolkit. An official version for .NET Core 3 has been announced but is not available yet. For my purposes I had success with a fork on https://github.com/dotnetprojects/WpfExtendedToolkit; your experience may be different.

Switching git branches

Switching back and forth between the git branches for the .NET Framework and .NET Core versions inside Visual Studio results in error messages. To get rid of them, I do the typical troubleshooting move of exiting Visual Studio, deleting all bin and obj folders, restarting Visual Studio and rebuilding the solution. Fortunately, I don’t need to maintain the .NET Framework version in the future. The migration is done and I can move on.

Automating the project file conversion

For the next application, I’ll likely use the Visual Studio extension “Convert Project To .NET Core 3” by Brian Lagunas. I installed the extension and ran it on a test project – a quick look at the result was promising.

-

JSON Serialization in .NET Core 3: Tiny Difference, Big Consequences

Recently, I migrated a web API from .NET Core 2.2 to version 3.0 (following the documentation by Microsoft). After that, the API worked fine without changes to the (WPF) client or the controller code on the server – except for one function that looked a bit like this (simplified naming, obviously):

    [HttpPost]
    public IActionResult SetThings([FromBody] Thing[] things)
    {
        ...
    }

The Thing class has an Items property of type List<Item>, the Item class has a SubItems property of type List<SubItem>.

What I didn’t expect was that after the migration, all SubItems lists were empty, while the Items lists contained, well, items.

But it worked before! I didn’t change anything!

In fact, I didn’t touch my code, but something else changed: ASP.NET Core no longer uses Json.NET by Newtonsoft. Instead, JSON serialization is done by classes in the new System.Text.Json namespace.

The Repro

Here’s a simple .NET Core 3.0 console application for comparing .NET Core 2.2 and 3.0.

The program creates an object hierarchy, serializes it using the two different serializers, deserializes the resulting JSON and compares the results (data structure classes not shown yet for story-telling purposes):

    using System;
    using System.Collections.Generic;

    class Program
    {
        // Creates an item with four (empty) sub-items.
        private static Item CreateItem()
        {
            var item = new Item();
            item.SubItems.Add(new SubItem());
            item.SubItems.Add(new SubItem());
            item.SubItems.Add(new SubItem());
            item.SubItems.Add(new SubItem());
            return item;
        }

        static void Main(string[] args)
        {
            var original = new Thing();
            original.Items.Add(CreateItem());
            original.Items.Add(CreateItem());

            // Serialize the same object graph with both serializers
            var json = System.Text.Json.JsonSerializer.Serialize(original);
            var json2 = Newtonsoft.Json.JsonConvert.SerializeObject(original);
            Console.WriteLine($"JSON is equal: {String.Equals(json, json2, StringComparison.Ordinal)}");
            Console.WriteLine();

            // Deserialize the same JSON with both serializers
            var instance1 = System.Text.Json.JsonSerializer.Deserialize<Thing>(json);
            var instance2 = Newtonsoft.Json.JsonConvert.DeserializeObject<Thing>(json);

            Console.WriteLine($".Items.Count: {instance1.Items.Count} (System.Text.Json)");
            Console.WriteLine($".Items.Count: {instance2.Items.Count} (Json.NET)");
            Console.WriteLine();
            Console.WriteLine($".Items[0].SubItems.Count: {instance1.Items[0].SubItems.Count} (System.Text.Json)");
            Console.WriteLine($".Items[0].SubItems.Count: {instance2.Items[0].SubItems.Count} (Json.NET)");
        }
    }

The program writes the following output to the console:

    JSON is equal: True

    .Items.Count: 2 (System.Text.Json)
    .Items.Count: 2 (Json.NET)

    .Items[0].SubItems.Count: 0 (System.Text.Json)
    .Items[0].SubItems.Count: 4 (Json.NET)

As described, the sub-items are missing after deserializing with System.Text.Json.

The Cause

Now let’s take a look at the classes for the data structures:

    public class Thing
    {
        public Thing()
        {
            Items = new List<Item>();
        }

        public List<Item> Items { get; set; }
    }

    public class Item
    {
        public Item()
        {
            SubItems = new List<SubItem>();
        }

        public List<SubItem> SubItems { get; }
    }

    public class SubItem
    {
    }

There’s a small difference between the two list properties:

- The Items property of class Thing has a getter and a setter.

- The SubItems property of class Item only has a getter.

(I don’t even remember why one list-type property does have a setter and the other does not)

Apparently, Json.NET determines that while it cannot set the SubItems property directly, it can add items to the list (because the property is not null).

The new deserialization in .NET Core 3.0, on the other hand, does not touch a property it cannot set.

I don’t see this as a case of “right or wrong”. The different behaviors are simply the result of different philosophies:

- Json.NET favors “it just works.”

- System.Text.Json works along the principle “if the property does not have a setter, there is probably a reason for that.”
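If you want to see the effect of the missing setter in isolation, a sketch like the following may help. It uses only System.Text.Json; the class names ItemWithoutSetter and ItemWithSetter, the helper CountSubItems and the JSON literal are made up for this illustration and are not part of the original application.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public class SubItem { }

// Same shape as the Item class above: the list property has no setter.
public class ItemWithoutSetter
{
    public List<SubItem> SubItems { get; } = new List<SubItem>();
}

// Hypothetical variant with a setter, like the Items property of Thing.
public class ItemWithSetter
{
    public List<SubItem> SubItems { get; set; } = new List<SubItem>();
}

public static class Program
{
    // Made-up input: one object with three (empty) sub-items.
    public const string Json = "{\"SubItems\":[{},{},{}]}";

    // Deserializes the JSON into the given type and returns the sub-item count.
    public static int CountSubItems<T>(Func<T, List<SubItem>> getList)
    {
        var instance = JsonSerializer.Deserialize<T>(Json);
        return getList(instance).Count;
    }

    public static void Main()
    {
        // Getter only: with default options the list stays empty, as described above.
        Console.WriteLine(CountSubItems<ItemWithoutSetter>(i => i.SubItems));

        // Getter and setter: the list is filled from the JSON.
        Console.WriteLine(CountSubItems<ItemWithSetter>(i => i.SubItems));
    }
}
```

Adding a setter is only one possible way out; whether it is the right one depends on why the property was read-only in the first place.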

The Takeaways

- Replacing any non-trivial library “A” with another library “B” comes with a risk.

- Details. It’s always the details.

- Consistency in your code increases the chances of consistent behavior when something goes wrong.

- The

-

ToolTip ToolTip toolTip

While working on a WPF control that handles some aspects of its (optional) tooltip programmatically, I wrote the following lines:

    if (ToolTip is ToolTip toolTip)
    {
        // … do something with toolTip …
    }

This makes perfect sense and isn’t confusing inside Visual Studio (because of syntax highlighting), but made me smile nevertheless.

Explanation:

- The code uses the “is” shorthand introduced in C# 7 that combines testing for “not null” and the desired type with declaring a corresponding variable (I love it!).

- The first “ToolTip” is the control’s ToolTip property (FrameworkElement.ToolTip), which is of type “object”.

- The second “ToolTip” is the class “System.Windows.Controls.ToolTip”.
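The same pattern can be tried outside of WPF. The following sketch uses two stand-in classes (ToolTip and DemoControl, both hypothetical and written only for this illustration) so that it runs as a plain console program; as with FrameworkElement.ToolTip, the property is declared as “object”.

```csharp
using System;

// Stand-in for System.Windows.Controls.ToolTip (hypothetical, for illustration only).
public class ToolTip
{
    public string Content { get; set; } = "";
}

// Stand-in for a control whose ToolTip property is of type object.
public class DemoControl
{
    public object ToolTip { get; set; }

    public string Describe()
    {
        // First "ToolTip": the property. Second "ToolTip": the type.
        // "toolTip": the new variable, assigned only if the test succeeds.
        if (ToolTip is ToolTip toolTip)
        {
            return "ToolTip instance: " + toolTip.Content;
        }

        // Reached for null and for any other type, e.g. a plain string.
        return "no ToolTip instance";
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(new DemoControl { ToolTip = new ToolTip { Content = "Hello" } }.Describe());
        Console.WriteLine(new DemoControl { ToolTip = "just a string" }.Describe());
        Console.WriteLine(new DemoControl().Describe());
    }
}
```

Only the first call takes the “is” branch; a string tooltip and a missing tooltip both fall through, which is exactly the combined “not null and of the desired type” test mentioned above.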

-

Talk at UXBN on August 22

On August 22, I will give the following talk at the User Experience Bonn (UXBN) meetup:

Questions, Questions, Questions, … (original title: “Fragen, Fragen, Fragen, …”)

The right question at the right time can give valuable impulses to discussions about UI designs. Neither the question nor the answer has to be earth-shattering; a small piece of previously unknown information sometimes massively changes the course of things.

In his talk, Roland Weigelt shows that an analytical mindset is a valuable tool even in design and user experience, areas that are supposedly driven more by emotion. And to keep things from getting too dry, the talk also touches on problem solving à la Indiana Jones, extraterrestrial life forms, and the influence of racing stripes on the speed of sports cars.

Attendance is free, but registration is required because the number of participants is limited.

Information and registration at http://uxbn.de/

-

Speakers, Check Your Visual Studio Code Theme!

tl;dr: If you use Visual Studio Code in your talk, please do the audience a favor, press Ctrl+K, Ctrl+T and choose the theme that best fits the lighting situation, not your personal taste.

Some people like “dark” UI color schemes, i.e. dark background with light text and icons. Others prefer black text on light background. Visual Studio 2019 comes with a light theme by default, Visual Studio Code with a dark theme. So, what is better?

As long as you are on your own at work or at home, the answer is “whatever gives you the best experience”.

But as soon as you speak at a conference or a user group meetup, or maybe just in front of your colleagues, it’s no longer about you. It’s about what is best for your audience.

Source code is different from slides

When you are showing source code in a talk, you must find a compromise between the font size and the amount of text that is visible without too much horizontal scrolling. The result is usually a font size that is smaller than what you would use on a PowerPoint slide. That means that each character consists of far fewer pixels that either lighten or darken the screen. That would not be a problem per se if we only had to care about the legibility of white text on a dark background or black text on a light background.

But source code is usually shown with syntax highlighting, i.e. as text that switches between a variety of colors. Because not all colors have an equal brightness, some parts of the source code can be much harder to read than others. This is especially true with dark themes, when the “dark” background does not appear as dark in the projection as intended, because of a weak projector and/or a bright room. The weaker contrast appears even worse when the colored text stands between white text, which is exactly the situation with syntax highlighting.

Care about legibility first

Proponents of dark themes cite the reduced eye strain when using a dark background. And they are right, staring at a bright screen in a dark room for a long time can be painful. On the other hand, first make sure the audience members in the back do not have to look twice because parts of the source code are hard to read.

Personally, in all the sessions I attended, I had more problems reading source code on dark-themed IDEs than I suffered from eye strain. Your experience may be different, of course.

Don’t theorize, test

When you set up your computer in the session room before the talk, not only check the font size, but also how well both dark and light themes are readable.

Fortunately, you can switch Visual Studio Code’s color scheme quickly. So, before your talk

- press Ctrl+K, Ctrl+T (or choose File > Preferences > Color Theme in the main menu)

- use the up/down cursor keys to select a theme (e.g. “Light+” or “Dark+”, Code’s default)

- and press Enter to use the theme.

Walk to the back of the room and look for yourself. And… be honest.

It’s not about “it ain’t that bad”

When you test your favorite theme in the room, don’t go for “it’s good enough” just because you like that theme. Switch to a different theme and make an honest assessment: Is “your” theme really better in this room, for this lighting situation? If not, choose another theme.

Thank you!

-

Emaroo: How to Get Visual Studio Code’s New Icon

Emaroo is a free utility for browsing most recently used (MRU) file lists of programs like Visual Studio, Word, Excel, PowerPoint and more. Quickly open files, jump to their folder in Windows Explorer, copy them (and their path) to the clipboard - or run your own tools on the MRU items! And all this with just a few keystrokes or mouse clicks.

- Download Emaroo on www.roland-weigelt.de/emaroo

Emaroo vs. Icons

The May 2019 update for Visual Studio Code (version 1.35) features a new application icon. After installing the update, Emaroo will continue to use the old icon, though.

This is because Emaroo caches the icons of executables (as well as those of MRU files and folders) for a faster startup.

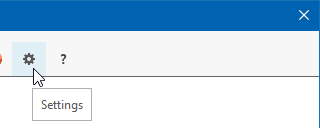

To get the new icon, switch to the “settings” page…

…and click “Refresh icons” in the bottom right corner:

Confirm you want to restart Emaroo…

…and the new icon (which is not exactly dramatically different) will appear after Emaroo has refreshed the icons:

-

Interesting Read: The future UWP

Morten Nielsen wrote an article called “The future UWP” about the situation for desktop UI development in the Microsoft technology stack, which I found very interesting.

The main takeaways (not actually surprising):

- A lot is happening/changing/developing and it will take some time until the future really becomes clear.

- Quote: “Whether you do WPF, WinForms or UWP, if it works for you now, continue the course you’re on”

Personally, I’ll stay with WPF for my desktop UIs. When .NET Core 3 comes out, I’ll dip a toe into porting my applications.

-

Emaroo 4.4 – Support for Visual Studio 2019

Emaroo is a free utility for browsing most recently used (MRU) file lists of programs like Visual Studio, VS Code, Word, Excel, PowerPoint, Photoshop, Illustrator and more. Quickly open files, jump to their folder in Windows Explorer, copy them (and their path) to the clipboard - or run your own tools on files and folders with custom actions!

- Download Emaroo on www.roland-weigelt.de/emaroo

About this Release

- Added: Support for Visual Studio 2019.

- Added: The “run or read” feature (Ctrl+R) now also supports “.lnk” files.

- Fixed: Single files not appearing in Visual Studio Code’s MRU list.

-

Practical Design/UI/UX Knowledge for Developers in Cologne and Nuremberg

On May 10, 2019, I will give a talk called “Kochrezepte für pragmatisches GUI-Design” (Recipes for Pragmatic GUI Design) at dotnet Cologne 2019.

On June 26 and 27, I will be in Nuremberg at Developer Week 2019. On June 26, I will again present “Kochrezepte für pragmatisches GUI-Design”, followed the next day by the full-day workshop “Von Null auf GUI – Design/UI/UX-Praxiswissen für Entwickler” (From Zero to GUI: Practical Design/UI/UX Knowledge for Developers).

Abstracts

Kochrezepte für pragmatisches GUI-Design (Recipes for Pragmatic GUI Design)

- How do you decide what you should copy from other GUIs - and what not?

- How do you present data appropriately in forms and detail views when you actually know nothing about the business domain?

- How do you tame GUIs with many functions?

- How do you make sure that users do not feel "slowed down" by the GUI?

Roland Weigelt answers these and other questions in his talk. Using concrete solution approaches as examples, he offers a general introduction to an abstract yet practice-oriented way of thinking in "User Interface Patterns". Roland draws on his many years of experience in product development, where pragmatism and foresight are equally in demand.

Von Null auf GUI – Design/UI/UX-Praxiswissen für Entwickler (From Zero to GUI: Practical Design/UI/UX Knowledge for Developers)

Wherever no dedicated UI/UX specialist is available, it is all the more important that software developers have basic knowledge of this topic, too. Be it to improve the UI of a typical business application from "bad" to "usable", or to be able to make an informed decision about what to copy from other UIs.

In this workshop, Roland Weigelt teaches developers without any prior UI/UX knowledge the principles of visual design, the basics of user experience, and how to think in user interface patterns. And always with an eye on what is feasible and actually helpful in practice with a limited budget.

Lecture parts alternate with practical exercises, so that what has been learned can be deepened right away in individual and group work.

Participants do not need to prepare anything for this workshop. Just show up, listen, join in, have fun and learn a lot.

Registration

- dotnet Cologne 2019: Registration opens on March 20 at 12:00. As in recent years, the Cologne community conference will probably be sold out within minutes again, which is no surprise at prices of 35 to 65 euros for individuals and 139 euros for company tickets.

- Developer Week 2019: Registration is already open; early-bird prices apply until April 9.

-

Emaroo 4.3.2 – Support for VS Code 1.32

Emaroo is a free utility for browsing most recently used (MRU) file lists of programs like Visual Studio, VS Code, Word, Excel, PowerPoint, Photoshop, Illustrator and more. Quickly open files, jump to their folder in Windows Explorer, copy them (and their path) to the clipboard - or run your own tools on files and folders with custom actions!

- Download Emaroo on www.roland-weigelt.de/emaroo

About this Release

- Updated: Support for Visual Studio Code 1.32 (changes regarding most recently used folders/workspaces). Previous versions of Visual Studio Code are still supported.

- Fixed: The MRU list for Notepad++ remained empty if the configuration file config.xml was not a valid XML file. This problem was already (kind of) addressed in 4.0.0, but now Emaroo performs a more thorough cleanup of the XML file. To be clear: Emaroo only reads the file, it does not write to it at any point. The file corruption is caused by Notepad++ itself, which doesn't seem to have problems with it.

- Fixed: Custom actions without any file or directory macros for the currently selected MRU item did not work if the MRU list was empty.

-

How to Approach Problems in Development (and Pretty Much Everywhere Else)

When you plan a feature, write some code, design a user interface, etc., and a non-trivial problem comes your way, you have more or less the following options:

- Attack – invest time and effort, give it all to make it happen, no matter what!

- Sneak around – identify alternative options, or find a way to drastically limit the scope to reduce the amount of work.

- Postpone – do nothing and wait until a later point in time; if the problem hasn’t become irrelevant by then, you know that it’s worth dealing with.

- Don’t do it – sometimes you have to make the hard decision to not do something.

As obvious as these options seem to me now: When I look back at my younger self more than twenty years ago, fresh from university, I was all about attacking problems head-on, because problem-solving was fun! Which sometimes led to me spending too much energy at the wrong location, at the wrong time. In this blog post I try to write down some words of wisdom which my younger self may have found useful; maybe they help somebody else out there.

Do a minimum of research

You want to work on the problem, you are eager to write code, but hold on for a second. Don’t dive into it head first. Always imagine a meeting in the future where one of the following questions is asked:

- “What exactly do you know about the problem?”

- “What have other people done so far?”

- “Are there samples/libraries/frameworks? What can we at least learn from them?”

- And of course the killer question: ”Why didn’t you think for a second about X?” (with “X” being super-obvious in your context)

You definitely want to know as early as possible about critical obstacles, e.g.

- the only choice for an algorithm for your envisioned approach has exponential complexity,

- you require hardware performance that your target system simply cannot provide,

- you have nowhere near enough pixel space in your user interface to display an envisioned feature, or

- your application only makes sense if your users behave in a completely unrealistic way in the actual usage context.

If you don’t do anything else, gain at least a basic understanding of what you don’t know. You can deal with a “known unknown” on a meta-level, prioritize it, think of possible risks, talk to other people about it. An “unknown unknown”, on the other hand, can sneak up on you and hit you at the worst possible moment.

Do not confuse means and ends

As developers, we’re problem solvers – that is a good thing. But it sometimes makes us concentrate on the means (i.e. the technical solution to the problem) instead of the ends (what exactly do we want to achieve?). We all know these situations in meetings where no progress is made until somebody broadens the focus.

Be that somebody and ask: In which way does solving that one specific problem contribute to the overall goal? And: Is this the easiest way to achieve the desired result?

Think of the (in)famous “sword fight” scene from Raiders of the Lost Ark: Indiana Jones is facing a bad guy with a sword. Indy, on the other hand, does not have a sword. With a narrow focus on the problem of not being able to participate in a (deadly) sword-fighting competition, finding a sword would be the next step. But the actual goal is to get rid of the attacker as quickly and with as little risk as possible, which can be achieved by shooting the bad guy with a gun.

Here’s a real-life example that I often present in my UI/UX talks:

My software for the LED advertising system in the local sports arena uses pixel shaders for controlling the brightness of the displayed images and videos. The UI consists of a slider (0-100%) which I move until the apparent LED brightness looks right for the lighting in the arena (which is not constant due to necessary warm-up/cool-down phases of the old lamps).

It was very easy to modify the pixel shaders to influence opacity or displacement of pixels, which gave me the idea for smooth transitions between different content sources for the LEDs. My first choice for the UI: the same as for the brightness, a slider. Copy/paste, simple as that. And from a UI point of view, using a slider promised full flexibility in regard to timing and dynamics of the transition. But what was the actual goal? Perform a smooth transition to the next image or video to be shown. For that, flexibility was much less of a concern than consistency and reliability. And that could be achieved with a simple “Next” button that triggered a transition with a fixed duration of 0.5 seconds.

Tackle problems as early as necessary, as late as possible

How do you decide which problem to solve first? Of course, if one problem depends on a solution for another, that dictates a specific order. Often enough, though, you have a certain freedom to choose.

If that is the case, ask yourself whether you have to solve a specific problem now. If you postpone working on something that is more or less isolated, there’s always a chance that priorities change. Something that was essential to reaching a specific goal suddenly becomes obsolete because the goal no longer exists.

On the other hand, be careful when delaying work, especially if you don’t know much about possible challenges. If part “A” of a system depends on part “B”, young developers like to work “bottom up”, first building “B” into a nice foundation that will make things easier when it comes to working on “A” (I have been there, too). But you definitely want to avoid a situation where the thing that was supposed to use your framework has known or unknown “unknowns” that turn out to be insurmountable obstacles. So better make sure to have a working proof of concept and work from there.

This is related to the conflict between the (deliberately) very narrow scope of work items, tasks, user stories, etc. on one hand, and the question when the broader architecture of a system should be planned and implemented. Possible approaches range from BDUF (big design up front) in an almost “waterfall” fashion up to doing only the absolute minimum at a given time combined with continuous refactoring whenever needed. The truth is somewhere in between.

The secret is to find the right balance between…

- You ain’t gonna need it (YAGNI): Do not implement things when you just foresee that you need them. For example: extension points in your software that later are never used (but have to be maintained nevertheless). Or architectures with swappable components on a fine-grained level – if you’re not 100% sure that this is vital for the success of the software.

…and…

- Do it or get bitten in the end (DOGBITE): While you can get away with not doing something for a long time (all with the best intentions of delivering value to the user of your application), postponing some work items can really come at a high cost. For example, if you want to have an undo-redo feature in your finished product. In theory, you could add that with a refactoring at a later time. In practice, good luck with getting the budget for stopping all feature work for weeks of refactoring late in the development.

In my experience, consider YAGNI as the rule, but don’t use it as “kill all”. Missing a DOGBITE situation is bad and will haunt you for years.

Ask yourself:

- Do you have a rough idea how you would add a feature at a certain point? If yes, that’s good enough, don’t add code as a preparation.

- Are you doing things now that actively prevent adding a feature in the future, or at least make that very expensive? Try to avoid that whenever possible.

- If you consider something DOGBITE, would you be able to defend it if somebody would grill you on that? You better be, because that’s what will happen at some point.

Heed the universal truths

- Everything is more complicated than it appears at first sight. If it isn’t, you haven’t asked the right questions yet.

- Truly generic, reusable solutions are hard. First get the job done, then (maybe) think about reuse.

- “Good enough” is perfectly fine most of the time.

- Leaving things out is usually the better choice than adding too much up front. Once something (an API, a feature or some UI) is in your software, removing it will make somebody unhappy.

One more thing…

The Wikipedia article comparing the Amundsen and Scott Expeditions is very interesting. You’ll quickly understand why I mention it here.

-

WPF with Web API and SignalR – Why and How I Use It

Preface: This blog post is less about going deep into the technical aspects of ASP.NET Core Web API and SignalR. Instead it’s a beginner’s story about why and how I started using ASP.NET Core. Maybe it contains bits of inspiration here and there for others in a similar situation.

The why

Years ago, I wrote a WPF application that manages media files, organizes them in playlists and displays the media on a second screen. Which is a bit of an understatement, because that “second screen” is in fact mapped onto the LED modules of a perimeter advertising system in a basketball arena for 6000 people.

Unfortunately, video playback in WPF has both performance and reliability problems, and a rewrite in UWP didn’t go as planned (as mentioned in previous blog posts). Experiments with HTML video playback, on the other hand, went really well.

At this point, I decided against simply replacing the display part of the old application with a hosted browser control. Because my plans for the future (e.g. synchronizing the advertising system with other LED screens in the arena) already involved networking, I planned the new software to have three separate parts right from the beginning:

- A server process written in C#/ASP.NET Core for managing files and playlists. For quick results, I chose to do this as a command line program using Kestrel (with the option to move to a Windows service later).

- A (non-interactive) media playback display written in TypeScript/HTML, without any framework. By using a browser in kiosk mode, I didn’t have to write an actual “display application” – but I still have the option to do this at a later time.

- A C#/WPF application for creating and editing playlists, starting and stopping media playback, which I call the cockpit. I chose WPF because I had a lot of existing code and custom controls from the old application that I could reuse.

The how: Communication between server, cockpit and display

For communication, I use Web API and SignalR.

Not having much prior experience, I started with the “Use ASP.NET Core SignalR with TypeScript and Webpack” sample and read the accompanying documentation. Then I added app.UseMvc() in Startup.Configure and services.AddMvc in Startup.ConfigureServices to enable Web API. I mention this detail because that was a positive surprise for me. When learning new technologies, I had sometimes been in situations where I had created an example project A and struggled to incorporate parts of a separate example project B.

For quick tests of the Web API controllers, Postman turned out to be a valuable tool.

Before working on “the real thing”, I read up on best practices on the web and tried to follow them to my best knowledge.

Web API

I use Web API to

- create, read, update or delete (“CRUD”) lists of “things”

- create, read, update or delete a single “thing”

In my case, the “things” are both meta data (playlists, information about a media file, etc.) as well as the actual media files.

Side note: I wrote about my experiences serving video files in my post “ASP.Net Core: Slow Start of File (Video) Download in Internet Explorer 11 and Edge”.

SignalR

While I use Web API to deal with “things”, I use SignalR for “actions”:

- I want something to happen

- I want to be notified when something happens.

Currently the server distinguishes between “display” and “cockpit” roles for the communication. In the future, it’s likely I will have more than one “cockpit” (e.g. a “remote control” on a mobile device) – and more roles when the application grows beyond simple media playback on only one display. Using the SignalR feature of groups, the clients of the server receive only those SignalR messages they are interested in as part of their role(s).
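To make the idea of role-based groups a bit more tangible, here is a minimal in-memory sketch in TypeScript. It is not the SignalR API and not my actual server code; GroupRouter, join, sendToGroup and the message name “PreloadVideo” are made-up names that only illustrate how messages reach the members of one group and nobody else.

```typescript
// In-memory stand-in for group-based routing; this is NOT the SignalR API.
type Role = "display" | "cockpit";
type Handler = (message: string, payload: unknown) => void;

class GroupRouter {
  private groups = new Map<Role, Set<Handler>>();

  // A client joins the group that matches one of its roles.
  join(role: Role, handler: Handler): void {
    if (!this.groups.has(role)) {
      this.groups.set(role, new Set<Handler>());
    }
    this.groups.get(role)!.add(handler);
  }

  // Delivers the message to every connection in the given group
  // and returns how many connections received it.
  sendToGroup(role: Role, message: string, payload: unknown): number {
    const members = this.groups.get(role);
    if (!members) {
      return 0;
    }
    members.forEach((handler) => handler(message, payload));
    return members.size;
  }
}

const router = new GroupRouter();
const displayLog: string[] = [];
const cockpitLog: string[] = [];

router.join("display", (message) => { displayLog.push(message); });
router.join("cockpit", (message) => { cockpitLog.push(message); });
// A second cockpit, e.g. a hypothetical remote control on a mobile device.
router.join("cockpit", (message) => { cockpitLog.push(message); });

// Only the display receives this message; both cockpits stay untouched.
router.sendToGroup("display", "PreloadVideo", { id: "42" });
```

In the real application, the group bookkeeping is done by SignalR on the server; the sketch only shows why a client with several roles would simply join several groups.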

Example

When I select a video file in the “cockpit”, I want the “display” to preload the video. This means:

- The cockpit tells the server via SignalR that a video file with a specific ID should be preloaded in the display.

- The server tells* the display (again, via SignalR) that the video file should be preloaded.

- The display creates an HTML video tag and sets the source to a Web API URL that serves the media file.

- When the video tag has been created and added to the browser DOM, the display tells the server to – in turn – tell the cockpit that a video with the specified ID is ready to be played.

*) When I write “the server tells X”, this actually means that the server sends a message to all connections in group “X”.
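The display’s part of these steps could look roughly like the following TypeScript sketch. Everything named here is an assumption for illustration: the URL layout “/api/media/…”, the DisplayDeps interface and the function names are hypothetical and not taken from my actual code. The collaborators are passed in, so the flow can be followed without a browser or a server.

```typescript
// Hypothetical collaborators of the display; names are illustrative only.
interface DisplayDeps {
  // Creates a video element for the given URL and adds it to the DOM.
  addVideo(src: string): void;
  // Tells the server that the video is ready (to be relayed to the cockpit).
  notifyReady(videoId: string): void;
}

// Assumed URL layout of the Web API endpoint that serves media files.
function mediaUrl(videoId: string): string {
  return "/api/media/" + encodeURIComponent(videoId);
}

// Reaction of the display to the server's request to preload a video.
function handlePreload(videoId: string, deps: DisplayDeps): void {
  deps.addVideo(mediaUrl(videoId));
  deps.notifyReady(videoId);
}

// Fake collaborators that only record what would happen.
const steps: string[] = [];
handlePreload("42", {
  addVideo: (src) => { steps.push("video:" + src); },
  notifyReady: (id) => { steps.push("ready:" + id); },
});
```

In a browser, addVideo would typically create the element with document.createElement("video"), set its src and append it to the page, while notifyReady would send a message over the SignalR connection.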

The cockpit

In my WPF applications, I use the Model-View-ViewModel (MVVM) pattern and the application service pattern.

Using application services, view models can “do stuff” in an abstracted fashion. For example, when the code in a view model requires a confirmation from the user, I don’t want to open a WPF dialog box directly from the view model. Instead, my code tells a “user interaction service” to get a confirmation. The view model does not see the application service directly, only an interface. This means that the view model does not know (and does not care) whether the response really comes from a dialog shown to the user or some unit test code (that may just confirm everything).
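The pattern itself is not tied to C# or WPF. As a minimal sketch, here it is in TypeScript (the language of the display part); the interface, the view model and the confirmation text are hypothetical names chosen for illustration, not my actual cockpit code.

```typescript
// The view model only ever sees this interface, never a concrete dialog.
interface UserInteractionService {
  confirm(question: string): boolean;
}

class PlaylistViewModel {
  items: string[] = ["intro.mp4", "sponsor.mp4"];
  private interaction: UserInteractionService;

  constructor(interaction: UserInteractionService) {
    this.interaction = interaction;
  }

  // Clears the playlist, but only after the user (or a test) has confirmed.
  clear(): void {
    if (this.interaction.confirm("Remove all items from the playlist?")) {
      this.items = [];
    }
  }
}

// Stand-ins as a unit test might use them: no dialog is ever shown.
const confirmEverything: UserInteractionService = { confirm: () => true };
const declineEverything: UserInteractionService = { confirm: () => false };

const confirmed = new PlaylistViewModel(confirmEverything);
confirmed.clear(); // the playlist is now empty

const declined = new PlaylistViewModel(declineEverything);
declined.clear(); // the playlist keeps both items
```

The production implementation of the interface would be the only place that knows about dialog boxes.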

Application services can also offer events that view models can subscribe to, so the view models are notified when something interesting happens.

Back to Web API and SignalR: I don’t let any view model use a SignalR connection or call a Web API URL directly. Instead I hide the communication completely behind the abstraction of an application service:

- Web API calls and outgoing SignalR communication are encapsulated using async methods.

- Incoming SignalR communication triggers events that view models (and other application services) can subscribe to.
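The shape of such a service can be sketched as follows, again in TypeScript and under stated assumptions: Transport, PlaybackService, LoopbackTransport and the message names are hypothetical. In the real cockpit, the role of the transport is played by the SignalR connection and the Web API client.

```typescript
// Hypothetical transport abstraction standing in for the real connection.
interface Transport {
  send(message: string, payload: string): Promise<void>;
  on(message: string, handler: (payload: string) => void): void;
}

class PlaybackService {
  private transport: Transport;
  private readyHandlers: Array<(videoId: string) => void> = [];

  constructor(transport: Transport) {
    this.transport = transport;
    // Incoming messages are turned into events for subscribers.
    transport.on("VideoReady", (videoId) => {
      this.readyHandlers.forEach((handler) => handler(videoId));
    });
  }

  // Outgoing communication is offered as an async method.
  async preloadVideo(videoId: string): Promise<void> {
    await this.transport.send("PreloadVideo", videoId);
  }

  // View models subscribe here instead of touching the connection.
  onVideoReady(handler: (videoId: string) => void): void {
    this.readyHandlers.push(handler);
  }
}

// Fake transport that answers every "PreloadVideo" with "VideoReady".
class LoopbackTransport implements Transport {
  sent: string[] = [];
  private handlers = new Map<string, (payload: string) => void>();

  send(message: string, payload: string): Promise<void> {
    this.sent.push(message + ":" + payload);
    const handler = this.handlers.get("VideoReady");
    if (message === "PreloadVideo" && handler) {
      handler(payload);
    }
    return Promise.resolve();
  }

  on(message: string, handler: (payload: string) => void): void {
    this.handlers.set(message, handler);
  }
}

const loopback = new LoopbackTransport();
const service = new PlaybackService(loopback);
const readyIds: string[] = [];
service.onVideoReady((id) => { readyIds.push(id); });
void service.preloadVideo("42");
```

A subscriber never sees message names or connection details; swapping the loopback for a real connection would not change the view model code.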

Minor pitfall: When a SignalR hub method is invoked and the handler is called in the WPF program, that code does not run on the UI thread. Getting around this threading issue (using the Post() method of SynchronizationContext.Current) is a good example of an implementation detail that an application service can encapsulate. The application service makes sure that the offered event is raised on the UI thread, and if a view model subscribes to this event, things “just work”.