Archives

-

ALT.NET Seattle Participants

Registration for the ALT.NET event in Seattle (April 18-20) is closed so if you register you'll get put onto the waiting list (there are only a few people on it right now). However the participant list is simply amazing. It's like the who's who of the Agile software development world.

Phil Haack, Tom Opgenorth, Craig Beck, Kevin Hegg, Miguel Angel Saez, Dustin Campbell, Justin-Josef Angel, David Pokluda, Carlin Pohl, Matthew Podwysocki, Adam Dymitruk, Wendy Friedlander, Oliver, Chris Bilson, Jeff Certain, Dave Foley, Joe Pruitt, Jeff Tucker, Jeffrey Palermo, Anil Verma, Greg Banister, Chris Salahub, Jesse Johnston, Robert Ream, Jim Hugunin, Chantal Laplante, Owen Rogers, Mike Stockdale, Cameron Frederick, Dan Miser, Greg Sangha, Joey Beninghove, Jean-Paul S. Boodhoo, Ben Scheirman, D'Arcy Lussier, Chris Patterson, Ronald S Woan, Rob Reynolds, Adam Tybor, Eric Holton, Scott Hanselman, Gabriel Schenker, Wade Hatler, Arvind Palaniswamy, Weston Binford, Jonathan de Halleux, Joseph Hill, Matt Hinze, Dave Laribee, Nick Parker, Ray Houston, Steven "Doc" List, Jason Grundy, Brian Donahue, John Quach, Alex Hung, James Thigpen, Chris Sutton, Ian Cooper, Rajbeer Dhatt, John Teague, Eli Lopian, Eric Ness, Scott Allen, Aaron Jensen, Rustan Leino, Bil Simser, Rob Zelt, Jeff Brown, Phil Dennis, Tom Dean, Tim Barcz, Sean Solbak, David Pehrson, James Franco, Bryce Budd, Scott Guthrie, Jay Flowers, david p buchanan, Howard Dierking, David Airth, Jonathan Wanagel, Matt Pisut, Julie Poole, Jarod Ferguson, Jacob Lewallen, Rhys Campbell, Joe Ocampo, Brad Abrams, Russell Ball, Michael Bradley, Bertrand Le Roy, Simon Guest, Alvin Lee, khalil El haitami, Roy Osherove, Scott Koon, Charlie Poole, Pete McKinstry, Sergio Pereira, Brad Wilson, Piriya Thongtanunam, Neil Blake, Brian Henderson, Martin Salias, Grant Carpenter, Colin Jack, James Shore, Kirk Jackson, Rod Paddock, Alan Buck, John Nuechterlein, Rajiv Das, Jeremy D. Miller, Chris Ortman, Robert Smith, Kelly Leahy, Chris Sells, Dru Sellers, Robin Clowers, Terry Hughes, Ashwin ParthasarathyOsidosi, Drew Miller, Dennis Olano, Anand Raju Narayan, Glenn Block, Brandon Lang, Pete Coupland, Trevor Redfern, Ward Cunningham, Troy Gould, Don Demsak, Neil Bourgeois, John Lam, Donald Belcham, Phil MCmillan, Udi Dahan, Martin Fowler, James Kovacs, Ayende Rahien, Danieljakob Homan, Raymond Lewallen, Jeff Olson, Justice Gray, Douglas Schroeder, Justin Bozonier, Luke Foust, Michael Henderson, Shawn Wildermuth, Dave Woods, Chad Myers, Shane Bauer, Michael Nelson, Kyle Baley, Buchanan Dunn, Scott C Reynolds, Greg Young.

I wish I had a tag cloud for the names or something, as so many of these people have so much influence on ALT.NET practices today. It's going to be an awesome get-together!

-

The ME Conference

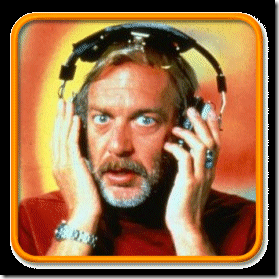

I found it rather funny (thanks Jenn!) having a conference named after me. Well, not exactly named *after* me, but when you're basically the only guy on the planet who spells his name "BIL" you have to laugh when you see this.

Best of all is the description of What is BIL? (something I always ponder myself each morning as I head into work).

"BIL is to TED, what BarCamp is to FooCamp".

We should probably break down and tell TED this at some point.

-

Using NAnt to Build SharePoint Solutions

Andrew Connell wrote an excellent blog entry on building your WSS solution packages with MSBuild. My problem is that I can't stand MSBuild and find it crazy complicated for even the simplest of tasks. Andrew's post possibly led to the creation (or at least contribution) of STSDEV, a very interesting value-added tool by Ted Pattison and co. that helps ease the pain of building SharePoint solutions. However I found it has its issues and doesn't really work the way I like to (for example I don't like having everything in one single assembly).

My choice of build tool these days is NAnt (although I'm starting to look at something like Rake or even Boo to make building easier using a DSL) and I find it easier (in my feeble brain anyway) to build and deploy SharePoint solutions with NAnt. I've blogged about it before, but that was v2.0 and here we are in 2008 with new shiny happy solution packages. So here we go.

First we'll start with a basic NAnt build script. When I start a project I create the build file, set a default target of "help", and in that target describe the targets you can run. This provides some documentation on the build process and lets me get a build file up and running.

<?xml version="1.0" encoding="utf-8"?>

<project name="SharePointForums" default="help">

<target name="help">

<echo message="--------------------" />

<echo message="targets in this file" />

<echo message="--------------------" />

<echo message="help - display targets in the nant script. This is the default target." />

<echo message="clean - cleans up the temporary directories" />

<echo message="init - sets up the temporary directories" />

<echo message="compile - compiles the solution assemblies" />

<echo message="test - compiles the solution assemblies then runs unit tests" />

<echo message="build - builds the entire solution for packaging/installation/distribution" />

<echo message="dist - creates a distribution zip file containing solution installer, wsp, and config files" />

<echo message="---------------------------" />

<echo message="targets in SharePoint.build" />

<echo message="---------------------------" />

<echo message="addsolution - installs the solution on the SharePoint server making it available for deployment" />

<echo message="deploysolution - deploy the solution to the local server for the first time" />

<echo message="retractsolution - removes the deployed solution from the local server" />

<echo message="deletesolution - removes the solution from the server completely. calls retractsolution first" />

</target>

</project>

In fact, at this point I can check this into CruiseControl.NET and it'll build successfully.

Doesn't do much at this point, but it's our roadmap. You'll notice there are a few targets listed in a file called SharePoint.build. I've found that these are typical and never change, you just change the properties of the filenames they act on. Let's take a look at this file:

<?xml version="1.0" encoding="utf-8"?>

<project name="SharePoint">

<!-- directory and file names, generally won't change -->

<property name="build.dir" value="${root.dir}\build" />

<property name="solution.dir" value="${source.dir}\solution" />

<property name="deploymentfiles.dir" value="${solution.dir}\DeploymentFiles" />

<property name="tools.dir" value="${root.dir}\tools" />

<!-- executable files that shouldn't change -->

<property name="makecab.exe" value="${tools.dir}\makecab\makecab.exe" />

<property name="stsadm.exe" value="${tools.dir}\stsadm\stsadm.exe" />

<target name="buildsolutionfile">

<exec program="${makecab.exe}" workingdir="${solution.dir}">

<arg value="/F" />

<arg value="${deploymentfiles.dir}\${directives.file}" />

<arg value="/D" />

<arg value="CabinetNameTemplate=${package.file}" />

</exec>

<move

file="${deploymentfiles.dir}\${package.file}"

tofile="${build.dir}\${package.file}" />

</target>

<!-- stsadm targets for deployment -->

<target name="addsolution">

<exec program="${stsadm.exe}" verbose="${verbose}">

<arg value="-o" />

<arg value="addsolution" />

<arg value="-filename" />

<arg value="${build.dir}\${package.file}" />

</exec>

<call target="spwait" />

</target>

<target name="spwait" description="Waits for the timer job to complete.">

<exec program="${stsadm.exe}" verbose="${verbose}">

<arg value="-o" />

<arg value="execadmsvcjobs" />

</exec>

</target>

<target name="deploysolution" depends="addsolution">

<exec program="${stsadm.exe}" workingdir="${build.dir}" verbose="${verbose}">

<arg value="-o" />

<arg value="deploysolution" />

<arg value="-name" />

<arg value="${package.file}" />

<arg value="-immediate" />

<arg value="-allowgacdeployment" />

<arg value="-allcontenturls" />

<arg value="-force" />

</exec>

<call target="spwait" />

</target>

<target name="retractsolution">

<exec program="${stsadm.exe}" verbose="${verbose}">

<arg value="-o" />

<arg value="retractsolution" />

<arg value="-name" />

<arg value="${package.file}" />

<arg value="-immediate" />

<arg value="-allcontenturls" />

</exec>

<call target="spwait" />

</target>

<target name="deletesolution" depends="retractsolution">

<exec program="${stsadm.exe}" verbose="${verbose}">

<arg value="-o" />

<arg value="deletesolution" />

<arg value="-name" />

<arg value="${package.file}" />

</exec>

<call target="spwait" />

</target>

</project>

This file contains a few targets for directly installing and deploying solutions into SharePoint (using stsadm.exe). It simply calls makecab.exe or stsadm.exe (which are local to the project in a tools directory) and executes them with the appropriate filenames. The filenames are set as properties in your main build file, then that build file includes this one. This SharePoint.build file generally never has to change and you can use it from project to project.
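The help text describes deploysolution as something you do "for the first time", so a matching target for pushing out later builds seems like a natural fit. Here's a rough sketch of what one could look like in SharePoint.build. The target name "upgradesolution" is my own invention for illustration; it wraps the stsadm upgradesolution operation and reuses the same properties and the spwait target from above. Treat it as a starting point and check the switches against your own farm.

```xml
<!-- Hypothetical extra target for SharePoint.build (not part of the original file). -->
<!-- Upgrades a solution that has already been added and deployed. -->
<target name="upgradesolution">
  <exec program="${stsadm.exe}" verbose="${verbose}">
    <arg value="-o" />
    <arg value="upgradesolution" />
    <arg value="-name" />
    <arg value="${package.file}" />
    <arg value="-filename" />
    <arg value="${build.dir}\${package.file}" />
    <arg value="-immediate" />
    <arg value="-allowgacdeployment" />
  </exec>
  <!-- wait for the timer job, same as the other targets -->
  <call target="spwait" />
</target>
```

With something like this in place, "go build upgradesolution" would rebuild the .wsp and push it over the top of the deployed one.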

You might notice a "DeploymentFiles" folder used as the "deploymentfiles.dir" property. This takes a cue from STSDEV, using it as a root folder in the solution where the *.ddf and manifest.xml files live. There's also a RootFiles folder which contains various subfolders with the webparts, features, images, resources, etc. in it. Here's a look at the development tree:

All source code that will be compiled into assemblies lives under "src". The "solution" folder is the root folder where DeploymentFiles and RootFiles live, as I consider things like feature and site definitions to be part of the solution and source code, just like, say, a SQL script. Under "src" I have "app" which contains the web parts, feature receivers, etc. and "test" which contains unit test assemblies. This allows you (as you'll see) to build each independently as I don't want my unit test code mixing up with my web parts or domain code. Under "src" there'll be many projects, but in the build file we collapse them all down to one assembly for testing purposes.
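For reference, the manifest.xml that sits in DeploymentFiles could look roughly like the sketch below. The file names come from the makecab output later in this post. Everything else is a guess on my part: the SolutionId GUID and PublicKeyToken are placeholders, and the SafeControl namespace and the choice to put both assemblies in the GAC are assumptions, not necessarily what the real project does.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Illustrative manifest.xml only. GUID and PublicKeyToken are placeholders. -->
<Solution xmlns="http://schemas.microsoft.com/sharepoint/"
          SolutionId="00000000-0000-0000-0000-000000000000">
  <FeatureManifests>
    <FeatureManifest Location="SharePointForums\Feature.xml" />
  </FeatureManifests>
  <TemplateFiles>
    <TemplateFile Location="IMAGES\SharePointForums\SharePointForums32.gif" />
  </TemplateFiles>
  <Assemblies>
    <!-- feature receivers have to live in the GAC -->
    <Assembly DeploymentTarget="GlobalAssemblyCache" Location="SharePointForums.Feature.dll" />
    <!-- assumed: web parts deployed to the GAC too, with a SafeControl entry -->
    <Assembly DeploymentTarget="GlobalAssemblyCache" Location="SharePointForums.WebParts.dll">
      <SafeControls>
        <SafeControl Assembly="SharePointForums.WebParts, Version=2.0.0.0, Culture=neutral, PublicKeyToken=0000000000000000"
                     Namespace="SharePointForums.WebParts" TypeName="*" Safe="True" />
      </SafeControls>
    </Assembly>
  </Assemblies>
</Solution>
```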

The "lib" folder contains external assemblies I reference (but not necessarily deploy) in the solution. This actually contains a copy of SharePoint.dll and SharePoint.Search.dll. You might wonder why I have copies of the files here while they exist in the GAC or buried in the 12 hive. It's because I prefer my solution trees to be self-contained. Anyone can grab this entire tree, no matter what versions of things they have installed, and build it (that includes the tools folder with all the tools they need to build it).

In the "tools" folder I have a copy of stsadm.exe (again, if the version changes on the server I'm protected and using the version I need), NAnt (for the build itself), makecab.exe to create the .wsp file and SharePoint Solution Installer, a really cool tool that runs against my WSP and lets you install and configure it without having to write an installer. You just edit a .config file and provide it the .wsp file.

Back to our project.build file. We'll set up some properties that will get used both in our build file and the SharePoint.build one (like the root directory where things are, etc.)

<!-- global properties, generally won't change -->

<property name="nant.settings.currentframework" value="net-2.0" />

<!-- filenames and directories, generally won't change -->

<property name="root.dir" value="${directory::get-current-directory()}" />

<property name="source.dir" value="${root.dir}\src" />

<property name="directives.file" value="${project::get-name()}.ddf" />

<property name="package.file" value="${project::get-name()}.wsp" />

<property name="dist.dir" value="${root.dir}\dist" />

<property name="lib.dir" value="${root.dir}\lib" />

<!-- properties that change from project to project but not often -->

<property name="webpart.source.dir" value="${source.dir}\app\SharePointForums.WebParts" />

<property name="feature.source.dir" value="${source.dir}\app\SharePointForums.FeatureReceiver" />

<property name="test.source.dir" value="${source.dir}\test" />

<property name="webpart.lib" value="${project::get-name()}.WebParts.dll" />

<property name="feature.lib" value="${project::get-name()}.Feature.dll" />

<property name="test.lib" value="${project::get-name()}.Test.dll" />

<!-- "typical" properties that change -->

<property name="version" value="2.0.0.0" />

<property name="debug" value="true" />

<property name="verbose" value="false" />

Then we'll include our SharePoint.build file:

<!-- include common SharePoint targets -->

<include buildfile="SharePoint.build" />

Recently I've switched to using patternsets inside of filesets in NAnt as it's more flexible. So we'll define a patternset for source files and assemblies, then use this in our filesets for the webpart, feature, and test sources and assembly files.

<!-- filesets and pattern sets for use instead of naming files in targets -->

<patternset id="cs.sources">

<include name="**/*.cs" />

</patternset>

<patternset id="lib.sources">

<include name="**/*.dll" />

</patternset>

<fileset id="feature.sources" basedir="${feature.source.dir}">

<patternset refid="cs.sources" />

</fileset>

<fileset id="webpart.sources" basedir="${webpart.source.dir}">

<patternset refid="cs.sources" />

</fileset>

<fileset id="test.sources" basedir="${test.source.dir}">

<patternset refid="cs.sources" />

</fileset>

<fileset id="sharepoint.assemblies" basedir="${lib.dir}">

<patternset refid="lib.sources" />

</fileset>

<fileset id="solution.assemblies" basedir="${build.dir}">

<patternset refid="lib.sources" />

</fileset>

Finally here are the targets in our project build file.

Clean will just remove any temporary directories we built:

<target name="clean">

<delete dir="${build.dir}" />

<delete dir="${dist.dir}" />

</target>

Init will first call clean, then create the directories:

<target name="init" depends="clean">

<mkdir dir="${build.dir}" />

<mkdir dir="${dist.dir}" />

</target>

Compile will call init, then, using the <csc> task, build our sources into assemblies. We're using a strong-name key file so we specify this in our csc task (otherwise when we deploy we'll get warnings about unsigned assemblies; feature receivers must be put into the GAC and require signing).

<target name="compile" depends="init">

<csc output="${build.dir}\${feature.lib}" target="library" keyfile="${project::get-name()}.snk" debug="${debug}">

<sources refid="feature.sources" />

<references refid="sharepoint.assemblies" />

</csc>

<csc output="${build.dir}\${webpart.lib}" target="library" keyfile="${project::get-name()}.snk" debug="${debug}">

<sources refid="webpart.sources" />

<references refid="sharepoint.assemblies" />

</csc>

</target>

Test first calls compile to get all the web part, feature, and domain assemblies built then compiles the unit test assembly. Finally it will call our unit test runner (MbUnit.Cons.exe or whatever). The output of the unit test run can be used in a CI tool like CruiseControl.NET.

<target name="test" depends="compile">

<csc output="${build.dir}\${test.lib}" target="library" debug="${debug}">

<sources refid="test.sources" />

<references refid="sharepoint.assemblies" />

<references refid="solution.assemblies" />

</csc>

<!-- run unit tests with test runner (mbunit, nunit, etc.) -->

</target>
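The test target above leaves the runner as a comment. As one possible way to fill it in, here's a sketch that uses NAnt's built-in <nunit2> task instead of MbUnit.Cons.exe. That's a swap on my part and it assumes your tests are NUnit tests compatible with the NUnit version your NAnt build ships with. If you're on MbUnit or something else you'd use an <exec> against that runner instead. The fragment would go where the placeholder comment is, inside the test target.

```xml
<!-- Sketch: run the compiled test assembly with NAnt's <nunit2> task. -->
<!-- Assumes NUnit-based tests; writes an XML result file into the build directory. -->
<nunit2>
  <formatter type="Xml" usefile="true" extension=".xml" outputdir="${build.dir}" />
  <test assemblyname="${build.dir}\${test.lib}" />
</nunit2>
```

The XML result file is the kind of output a CI server like CruiseControl.NET can merge into its build report.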

Our "build" task just calls test (to ensure everything compiles and works) then delegates to the SharePoint.build file to build the solution. This will create our .wsp file and put us in a position to deploy our solution.

<target name="build" depends="test">

<call target="buildsolutionfile" />

</target>

Finally in this build file we have a dist task. This will build the entire solution then zip up the Solution Installer files and wsp file into a zip file that we'll distribute. End users just download this, unzip it, and run Setup.exe to install the solution.

<target name="dist" depends="build">

<zip zipfile="${dist.dir}\${project::get-name()}-${version}.zip">

<fileset basedir="${build.dir}">

<include name="**\*.wsp" />

</fileset>

<fileset basedir="${tools.dir}\SharePointSolutionInstaller">

<include name="**\*" />

</fileset>

</zip>

</target>

There's a lot of NAnt script here, but it's all pretty basic stuff. The nice thing is that from the command line I can build my system, install it locally, deploy it for testing, and even create my distribution for release on CodePlex (or whatever site you use). There's a go.bat file that lives in the root of the solution and looks like this:

@echo off

tools\nant\nant.exe -buildfile:SharePointForums.build %*

It simply calls NAnt with the buildfile name and passes any parameters to the build. For example from the command line here's the output of "go build deploysolution" which will compile my system, run all the unit tests, then add the solution to SharePoint and deploy it. After this I can simply browse to my website and do some integration testing.

NAnt 0.86 (Build 0.86.2898.0; beta1; 12/8/2007)

Copyright (C) 2001-2007 Gerry Shaw

http://nant.sourceforge.net

Buildfile: file:///C:/Development/Forums/SharePointForums.build

Target framework: Microsoft .NET Framework 2.0

Target(s) specified: build deploysolution

clean:

[delete] Deleting directory 'C:\Development\Forums\build'.

[delete] Deleting directory 'C:\Development\Forums\dist'.

init:

[mkdir] Creating directory 'C:\Development\Forums\build'.

[mkdir] Creating directory 'C:\Development\Forums\dist'.

compile:

[csc] Compiling 22 files to 'C:\Development\Forums\build\SharePointForums.Feature.dll'.

[csc] Compiling 182 files to 'C:\Development\Forums\build\SharePointForums.WebParts.dll'.

test:

[csc] Compiling 41 files to 'C:\Development\Forums\build\SharePointForums.Test.dll'.

build:

buildsolutionfile:

[exec] Microsoft (R) Cabinet Maker - Version (32) 1.00.0601 (03/18/97)

[exec] Copyright (c) Microsoft Corp 1993-1997. All rights reserved.

[exec]

[exec] Parsing directives

[exec] Parsing directives (C:\Development\Forums\src\solution\DeploymentFiles\SharePointForums.ddf: 1 lines)

[exec] 140,309 bytes in 7 files

[exec] Executing directives

[exec] 0.00% - manifest.xml (1 of 7)

[exec] 0.00% - SharePointForums.Feature.dll (2 of 7)

[exec] 0.00% - SharePointForums.WebParts.dll (3 of 7)

[exec] 0.00% - SharePointForums\Feature.xml (4 of 7)

[exec] 0.00% - SharePointForums\WebParts.xml (5 of 7)

[exec] 0.00% - SharePointForums\WebParts\SharePointForums.webpart (6 of 7)

[exec] 0.00% - IMAGES\SharePointForums\SharePointForums32.gif (7 of 7)

[exec] 100.00% - IMAGES\SharePointForums\SharePointForums32.gif (7 of 7)

[exec] 0.00% [flushing current folder]

[exec] 93.59% [flushing current folder]

[exec] 5.60% [flushing current folder]

[exec] 100.00% [flushing current folder]

[exec] Total files: 7

[exec] Bytes before: 140,309

[exec] Bytes after: 50,736

[exec] After/Before: 40.09% compression

[exec] Time: 0.04 seconds ( 0 hr 0 min 0.04 sec)

[exec] Throughput: 349.34 Kb/second

[move] 1 files moved.

addsolution:

[exec]

[exec] Operation completed successfully.

[exec]

spwait:

[exec]

[exec] Operation completed successfully.

[exec]

deploysolution:

[exec]

[exec] Timer job successfully created.

[exec]

spwait:

[exec]

[exec] Executing solution-deployment-sharepointforums.wsp-0.

[exec] Operation completed successfully.

[exec]

BUILD SUCCEEDED

Total time: 22.2 seconds.

Hope this helps your build process. Creating SharePoint solutions is a complicated matter. There are features, web parts, solutions, manifests, various tools, and lots of command line tools to make it all go. NAnt helps you tackle this and these scripts boil the solution down to only a few simple commands you need to remember.

-

Getting Sites and Webs during Feature Activation in SharePoint

One of the cool features in SharePoint 2007 is Feature Event Classes. These classes allow you to trap and respond to an event that fires when a feature is installed, activated, deactivated, or removed. While you can't cancel an installation or activation through the events, you can use them to your advantage to manipulate the scoped item they're operating on.

In my SharePoint Forums Web Part, I had delegated the creation of the lists to the web part itself whenever it was added to a site. Of course I didn't go farther and clean up the lists the web part created when it was removed because there was no way to tell when someone removed a web part from a page (since it might not be the only one on the page). This has led to various problems (users had to be server admins to make this work 100% of the time, lists left over from old installs) but in the 2007 version Feature Event Receivers come to the rescue. Now when you activate the Forums on a web, it creates the needed lists and when you deactivate it the receiver removes them. You create a Feature Receiver by inheriting from the base class SPFeatureReceiver. In it there are 4 methods you can override (activated, deactivating, installed, uninstalling).

One thing you don't have is a Context object so it makes it a little tricky to get the SPSite/SPWeb object the feature is activating on. Luckily there's a nice property (of type object) in the SPFeatureReceiverProperties object that gets passed to each method. This class contains a property called Feature which in turn contains a property called Parent. The Parent property is the scoped item that the feature is working against, so if you scope your feature to Web, you'll get an SPWeb object (an SPSite object for Site scope, etc.). This is the key to getting a hold of and manipulating your farm/server/site/web when a feature is accessed.

Here's an example of a feature. When the feature is activated, it creates a new list. When it's deactivated it removes the list.

public class FeatureReceiver : SPFeatureReceiver
{
    public override void FeatureActivated(SPFeatureReceiverProperties properties)
    {
        using (SPWeb web = (SPWeb) properties.Feature.Parent)
        {
            web.Lists.Add("test", "test", SPListTemplateType.GenericList);
        }
    }

    public override void FeatureDeactivating(SPFeatureReceiverProperties properties)
    {
        using (SPWeb web = (SPWeb) properties.Feature.Parent)
        {
            SPList list = web.Lists["test"];
            web.Lists.Delete(list.ID);
        }
    }

    public override void FeatureInstalled(SPFeatureReceiverProperties properties)
    {
        /* no op */
    }

    public override void FeatureUninstalling(SPFeatureReceiverProperties properties)
    {
        /* no op */
    }
}
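A receiver class like this only fires if the feature definition points at it. Here's a minimal sketch of a Feature.xml that does so for a Web-scoped feature. The Id GUID and PublicKeyToken are placeholders, and the assembly name, version, and namespace in ReceiverAssembly/ReceiverClass are assumptions for illustration, so substitute the strong name and full type name of your own signed assembly.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Illustrative Feature.xml. Id and PublicKeyToken are placeholders. -->
<!-- Scope="Web" means properties.Feature.Parent will be an SPWeb. -->
<Feature xmlns="http://schemas.microsoft.com/sharepoint/"
         Id="00000000-0000-0000-0000-000000000000"
         Title="SharePoint Forums"
         Scope="Web"
         ReceiverAssembly="SharePointForums.Feature, Version=2.0.0.0, Culture=neutral, PublicKeyToken=0000000000000000"
         ReceiverClass="SharePointForums.Feature.FeatureReceiver" />
```

If you changed Scope to "Site", the cast in the receiver would need to change to SPSite to match.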

BTW, it's not clear to me if getting an SPWeb object through this means requires the disposing of it. See Scott Harris and Mike Ammerlaan's excellent must-read article here on scoped items which might help. For me, this is the safest approach (the object will always fall out of scope and dispose of itself). It might be overkill but it works. Feature receivers are not the easiest things to debug.

Enjoy!

-

WPF or WinForms, choose wisely

WPF is all the rage (at least that's what they tell me) and it's IMHO one of the best technologies to come out of Microsoft. Still, however, companies choose to stay the course with building on WinForms. Karl Shifflett has a great blog entry on choosing WPF over ASP.NET (and great entries on WPF in general so check his blog out here). To me it's a no-brainer choosing WPF over ASP.NET, unless you're really enamored with a browser app (or forced to build one due to some constraints) and with Silverlight and XBAP (and the new features coming out shortly in Silverlight 2) building a rich interface for the web gets better and better. AJAX just doesn't cut it and is a hack IMHO.

Making the decision between WPF and WinForms however is a different story. Sure, WPF is the new hotness and WinForms is old and busted but is it the right choice? Obviously "it depends" on the situation and Microsoft is continuing to deliver and support WinForms so it won't be going away anytime soon. So what are the compelling factors to choose WPF over WinForms? Karl hints at choices of WPF over WinForms in his WPF Business Application series, but the reasons might be subtle for some.

If you're struggling, here are some reasons for choosing WPF over WinForms, and let's play devil's advocate as you might have to fight for some of these.

Latest Technology

Why start new development on old technologies? There's bleeding edge (Silverlight 2 perhaps) and then there's cutting edge (WPF?) and we can probably start to talk about WinForms as legacy. Start to talk about it, not come to that conclusion. WinForms development can be painful (much like moose bites) but the latest technology debate is a tough one. On one hand it's lickety-split to create WPF using the tools available today (see below) and from a development perspective WPF shines because everything is an object. The crazy hoops you have to jump through just to get an image on a button or menu are all but gone when you try embedding an object onto another one in XAML. On the flipside though, most of the large UI suites (DevExpress, Infragistics, Component One, Telerik) haven't fully completed their WPF implementations and the maturity lies in their WinForms incarnations. Still, starting a new project today that might be delivered say 6-12 months from now on what some might consider legacy doesn't make a lot of sense, but as usual, you have to pick the right tool for the right job.
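To make the "image on a button" point concrete, here's a small XAML sketch. The image file name and the sizes are made up for illustration. The point is that a Button's content can be any other element tree, so there's no owner-draw code involved.

```xml
<!-- Sketch: a button whose content is an image plus text. "save.png" is a hypothetical file. -->
<Button xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation" Width="100">
  <StackPanel Orientation="Horizontal">
    <Image Source="save.png" Width="16" Height="16" Margin="0,0,4,0" />
    <TextBlock Text="Save" VerticalAlignment="Center" />
  </StackPanel>
</Button>
```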

Mature Product

While WPF is pretty young in the eyes of consumers, Microsoft has invested 5+ years of development in it. WinForms arguably has the edge on maturity here (existing since the .NET 1.0 days) but don't knock WPF as a babe in the woods. It popped up on the R&D radar shortly after .NET 1.1 and Visual Studio 2003 came out and has been gestating ever since. This is a plus point if you're in a boardroom or meeting with some stuffies who think it's new and shiny but with no meat behind it. Combined with its own set of unique features, try something like UI automation and WinForms and we'll talk maturity. 10 years after WinForms was born and we're still struggling with UI automation. WPF solves this in one fell swoop, and does a nice job of it to boot.

Silverlight

WPF is based on XAML for its definitions (both application code and UI design). Silverlight is the same because after all crunching down and serializing XML is dead simple these days. While Silverlight uses a subset of WPF for its rendering, you can re-use a lot of what you might create in WPF and your application. This makes for building multiple UIs a happy-happy-joy-joy scenario. Too many times I've been faced with the problem of building a system for web users *and* desktop users. Too many times we've had to dumb down the web because it couldn't handle the rich experience the desktop provides, or be faced with 100k of JavaScript (yeah, try debugging that mess after a few sleepless nights) so anything has to be better than this. Silverlight lets you leverage a lot of the XAML investment you make in a WPF app and with technologies like BAML you can push the envelope even further. It's a win-win scenario for everyone and lays the smack down on Flash or Java any day.

Tools

While we live in a domain driven design world (at least some of us do, you have come out of your cave right?) with objects and collections and tests oh my, there is still the UI to design. I'm not a huge fan of the move to CSS validated Expression Web, but I understand (and agree with) the choices Microsoft made with the model. Kicking it up a notch and delivering Expression Blend with its integration into Visual Studio makes building WPF apps a breeze. In fact, I strongly advocate and support handing the UI design off to someone better suited to it. Let's face it, developers suck the big one at building UIs (unless it's "Hello World" with a big button and an image of Scott Hanselman's face on it) so let's let the UI designers design. Blend lets you do this by just letting the designers "go wild" as it were, without having to worry about "how in the heck am I going to hook this up later". Giving a designer a copy of Visual Studio to design a WinForm app is just plain crazy, and don't even try to convert their JPG mockups that have been signed off on into a Windows Form (been there, more t-shirts, I have a lot of them) but getting a XAML file from them just plugs right into our development environment and is dead simple to wire up to whatever back-end you have going at the time.

UI Resolution

How many bugs do you have logged on your current project that say something like "cannot see button x when my screen resolution is 800x600"? As developers, we generally work at crazy resolutions that no sane person would run at (my current desktop runs at 1680x1050) so building forms on this just plain doesn't translate well (read: at all) to a user's desktop of 800x600 or 1024x768. Buttons vanish, menu options disappear, and that oh so beautiful grid that is the lynchpin of your application is missing the bottom 20 rows and last 10 columns. Sure, WinForm containers and whatnot help but far too many times we forget about this and end up building things off in unseen areas of the screen. WPF doesn't solve this problem, but really helps. Not only that, we're not asking users to change the resolution or font size on their screen to see things clearly. In this day and age, users need to be able to dynamically change the system at will when they're working. I've seen users running with the extra large font theme as their eyes give out on them but apps just plain don't work well when your system font is 36pt Verdana. Look at the iPhone as an example of clever UI integration. It dynamically zooms in and out as you choose to make things readable. We need more of this on the desktop applications we build to suit the needs of users who want "to see it all" at once. WPF lets us do this with less pain than WinForms.
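As a rough idea of what that zooming can look like, here's a XAML sketch where a slider scales a panel and everything in it. The element name "zoom", the range, and the sample controls are all made up for illustration. The technique is a LayoutTransform with a ScaleTransform bound to the slider's value, so layout is recalculated at the new size and a ScrollViewer picks up whatever no longer fits.

```xml
<!-- Sketch: user-controlled zoom. Names, ranges, and sample content are hypothetical. -->
<DockPanel xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
           xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
  <Slider x:Name="zoom" DockPanel.Dock="Bottom" Minimum="0.5" Maximum="3" Value="1" />
  <ScrollViewer HorizontalScrollBarVisibility="Auto" VerticalScrollBarVisibility="Auto">
    <StackPanel>
      <StackPanel.LayoutTransform>
        <!-- scale everything inside the panel by the slider value -->
        <ScaleTransform ScaleX="{Binding ElementName=zoom, Path=Value}"
                        ScaleY="{Binding ElementName=zoom, Path=Value}" />
      </StackPanel.LayoutTransform>
      <TextBlock Text="Customer details" />
      <Button Content="Save" />
    </StackPanel>
  </ScrollViewer>
</DockPanel>
```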

Databinding

WPF allows for much easier data binding through its model and this can result in faster development time. Now Unka Bil isn't telling you to go out and bind your WPF creations directly to ADO.NET models. I still live and die by Domain Driven Design so binding happens on objects (probably best through a Binding<T> adapter of your domain classes) but WPF does make it easier to do this if that's your thang.
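Here's a small XAML sketch of binding to a plain domain object rather than an ADO.NET model. It assumes the DataContext has been set in code to some object with Title, Author.Name, and Replies members (say a forum topic). Those names are hypothetical and only there to show the binding syntax.

```xml
<!-- Sketch: binding controls to properties of a domain object set as the DataContext. -->
<!-- Title, Author.Name, Replies, and Subject are hypothetical property names. -->
<StackPanel xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation">
  <TextBox Text="{Binding Path=Title, Mode=TwoWay, UpdateSourceTrigger=PropertyChanged}" />
  <TextBlock Text="{Binding Path=Author.Name}" />
  <ListBox ItemsSource="{Binding Path=Replies}" DisplayMemberPath="Subject" />
</StackPanel>
```

For changes made in code to show up in the UI, the bound object would typically implement INotifyPropertyChanged.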

So overall it's a better experience, both from the development side and consumer side. Again, you might have some battles to fight with Corporate to jump onto the technology band-wagon, but this might be a battle worth fighting. WPF is no silver bullet (as I always harp, there is no silver bullet unless you're fighting werewolves) but hopefully this will help you make a more informed choice. The choice is yours, but choose wisely.

-

Do SharePoint Developers Want a Developer Version of SharePoint?

First off let me start the disclaimer. I do not work for Microsoft and have no decision making powers in anything that goes into a piece of software. You might call me an "influencer" as we MVPs may suggest things at times (sometimes very verbally) but we don't take a laundry list to Microsoft and make demands of new features. Keep that in mind while you read this post.

One of the biggest complaints a lot of us hear about SharePoint development is the need to build on Windows Server 2003. I still to this day have no idea what the critical dependency on Windows Server 2003 is, but obviously there must be one. Otherwise we would be building solutions on XP and Vista. As ASP.NET developers, we have a local IIS (5.1) instance available to us for development and with VS2005 there's even a built-in web server for building websites. So forcing developers to use a server for SharePoint development can seem a little harsh, hence all the complaints.

Working on a real server (say a development one in your network) is a no-go, unless you're the only developer on the machine. You just can't do things like hang the worker process or force an iisreset on other developers. That's just not cool. And from a SharePoint perspective, you'll be clobbering each other all the time if you're on a shared server (been there, done that, got the t-shirt).

That leaves virtual development (using either Virtual PC, Virtual Server, or VMware). This is probably the best choice but it's costly. Setting up the VM requires a significant time investment to get all your ducks in a row, and even more if you want to keep it long term, because you'll have to create some parent-child relationships in your VM hierarchies (see AC's post here about this).

Hardware *is* cheap but in the corporate world it's not always cheap that wins. Trying to get network services convinced you're not going to bring down their network with your little VM isn't easy (more t-shirts) and you'll battle issues of software updates, virus protection, licensing, and a host of others. It's a hard battle, but perhaps one worth fighting.

The MVP community is a little splintered on whether or not a Developer Version of SharePoint would be value-add (BizTalk has one, so why not SharePoint?). Some think it's necessary, others would rather have MS focus their efforts elsewhere and live with VM development. Microsoft is listening and going over ideas and approaches to ease the pain here but nothing to tell you from the trenches right now.

So what say you? Would you want a Developer Edition of SharePoint (maybe only WSS so no Excel/InfoPath/BDC services that MOSS offers) that you could install on XP/Vista for local development? Should the efforts be focused on making the Virtual Experience better? Or does none of this matter and all is rosy in the world when it comes to building SharePoint solutions?

Feel free to chime in here. Like I said, I'm not guaranteeing this is going to get back to Microsoft but some people read my blog (or so they tell me) so you never know.

-

Automated UI Testing with Project White

A co-worker turned me onto Project White, an automated UI testing framework by ThoughtWorks. This is along the same lines as NUnitForms and other automated systems. It's basically Selenium for WinForms (which rocks in its own right) so I thought I would dig more into White (it has support for WPF as well, but I haven't tried that out yet). It was good timing as we've been talking and coming up with strategies for testing and UI testing is a big problem (and it is everywhere else based on people I've talked to).

The White library is nice and simple. All you really need to do is add in the Core.dll from White and your unit test framework and write some tests. I tested it with MbUnit but any framework seems to work. Ben Hall posted a blog entry about White along with some sample code. This, combined with the library, got me started.

As Ben did, I created a simple application with a single form and started to write some tests. I couldn't use Ben's complete sample as it was written for VS2008 and I only had VS2005 for my testing. No problem. You can use White with VS2005, but you'll need the .NET 3.0 framework installed.

I came across the initial problem with testing though. The first test that failed left the window up on the screen. This was an issue. I also wrote the same test Ben did, looking for a non-existent form, which appropriately threw a UIActionException. The test passed as it threw the exception I was looking for, but again the form was up on the screen. The Application.Kill() method wasn't being called if the test failed or an exception was thrown. Ben's method was to put a call to Application.Kill() in the [TearDown] method on the test fixture. This is great but I'm not a big fan of [SetUp] and [TearDown] methods. Another option was to surround each test with a try/catch/finally and call Application.Kill() in the finally block. This was ugly as I would have to do this on every test.

Following Ben's example I created a WhiteWrapper class which would handle the White library features for me. I made it implement IDisposable so I could do something like this:

    using (WhiteWrapper wrapper = new WhiteWrapper(_path))
    {
        ...
    }

I also added a method to fetch me a control from the main window (using a Generic) so I could grab a control, execute a method on it (like .Click()) and check the result of another control (like a Label). Note that these are not WinForm controls but rather a White wrapper around them called UIItem. This provides general features for any control like a .Click method or a .Text property or items in a listbox.

Here's my WhiteWrapper code:

    // Wraps a White Application so the launched process is always killed on Dispose.
    class WhiteWrapper : IDisposable
    {
        private readonly Application _host;
        private readonly Window _mainWindow;

        // Launches the application under test.
        public WhiteWrapper(string path)
        {
            _host = Application.Launch(path);
        }

        // Launches the application and keeps its main window for GetControl calls.
        public WhiteWrapper(string path, string mainWindowTitle) : this(path)
        {
            _mainWindow = GetWindow(mainWindowTitle);
        }

        // Runs at the end of a using block, even when the test throws.
        public void Dispose()
        {
            if (_host != null)
                _host.Kill();
        }

        public Window GetWindow(string title)
        {
            return _host.GetWindow(title, InitializeOption.NoCache);
        }

        // Needs the two-argument constructor; _mainWindow is null otherwise.
        public TControl GetControl<TControl>(string controlName) where TControl : UIItem
        {
            return _mainWindow.Get<TControl>(controlName);
        }
    }

And here are the refactored tests to use the wrapper (implemented via a using statement which makes using the library fairly clean in my test code):

    public class Form1Test
    {
        private readonly string _path = Path.Combine(Directory.GetCurrentDirectory(), "WhiteLibSpike.WinForm.exe");

        [Test]
        public void ShouldDisplayMainForm()
        {
            using (WhiteWrapper wrapper = new WhiteWrapper(_path))
            {
                Window win = wrapper.GetWindow("Form1");
                Assert.IsNotNull(win);
                Assert.IsTrue(win.DisplayState == DisplayState.Restored);
            }
        }

        [Test]
        public void ShouldDisplayCorrectTitleForMainForm()
        {
            using (WhiteWrapper wrapper = new WhiteWrapper(_path))
            {
                Window win = wrapper.GetWindow("Form1");
                Assert.AreEqual("Form1", win.Title);
            }
        }

        [Test]
        [ExpectedException(typeof(UIActionException))]
        public void ShouldThrowExceptionIfInvalidFormCalled()
        {
            using (WhiteWrapper wrapper = new WhiteWrapper(_path))
            {
                wrapper.GetWindow("Form99");
            }
        }

        [Test]
        public void ShouldUpdateLabelWhenButtonIsClicked()
        {
            using (WhiteWrapper wrapper = new WhiteWrapper(_path, "Form1"))
            {
                Label label = wrapper.GetControl<Label>("label1");
                Button button = wrapper.GetControl<Button>("button1");
                button.Click();
                Assert.AreEqual("Hello World", label.Text);
            }
        }

        [Test]
        public void ShouldContainListOfItemsInDropDownOnLoadOfForm()
        {
            using (WhiteWrapper wrapper = new WhiteWrapper(_path, "Form1"))
            {
                ListBox listbox = wrapper.GetControl<ListBox>("listBox1");
                Assert.AreEqual(3, listbox.Items.Count);
                Assert.AreEqual("Red", listbox.Items[0].Text);
                Assert.AreEqual("Versus", listbox.Items[1].Text);
                Assert.AreEqual("Blue", listbox.Items[2].Text);
            }
        }
    }

The advantage I found here was handling exceptions and unknown states. For example, I ran the ShouldUpdateLabelWhenButtonIsClicked test before I even had the controls on the form. The test failed but it didn't hang or crash the system. That's what the IDisposable gave me, a nice way to always clean up without having to remember to create a [TearDown] method.
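
If you want to convince yourself that the cleanup really runs when a test blows up, here's a small sketch with no White dependency at all. TrackedResource and UsingDemo are hypothetical names invented for this illustration; the resource simply records whether Dispose was called, standing in for the wrapper killing the application.

```csharp
using System;

// Hypothetical stand-in for WhiteWrapper: it only remembers that it was disposed.
public sealed class TrackedResource : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose()
    {
        IsDisposed = true;
    }
}

public static class UsingDemo
{
    // Simulates a failing test body inside a using block and reports
    // whether the resource was still cleaned up.
    public static bool DisposedAfterFailure()
    {
        TrackedResource resource = new TrackedResource();
        try
        {
            using (resource)
            {
                throw new InvalidOperationException("simulated failing test");
            }
        }
        catch (InvalidOperationException)
        {
            // Swallowed here only so the demo can inspect the resource afterwards.
        }
        return resource.IsDisposed;
    }
}
```

The using statement is compiled into a try/finally that calls Dispose, which is why UsingDemo.DisposedAfterFailure() returns true even though the body threw.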

One of the philosophical questions we have to ask here is when is this kind of testing appropriate? For example, if I have good presenters I can test these kinds of things with mocked out views and presenter/model tests. So am I duplicating effort here by testing the UI directly? Should I get my QA people to write these kinds of tests? There's a long discussion to have in your organization around this so it's not just a "tool problem". You need to dig deep into what you're testing and how. At some point, you begin to divorce yourself from behaviour driven development and you end up testing UI edge cases and integration from a UI perspective. If your UI doesn't line up with your domain, how do you reconcile this? There are probably more questions than answers for this type of thing and software design is more art than science. The answer "it depends" goes a long way, but don't try to solve your business or design problems with a tool. There is no silver bullet here, just a few goodies to help you along the way. It's you who needs to decide what's appropriate for the situation and how much time, money, and resources you're going to invest in something.

The library works pretty well and I'm happy with the test code so far. We'll have to see now how it deals with far more complex UIs (we have things like crazy 40-column grids with all kinds of functionality). Back later on how that goes. In the meantime, check out Project White here on CodePlex to help you with your automated UI testing.

-

Winnipeg Code Camp, the aftermath...

Finished up at Winnipeg Code Camp today with a good turnout for my sessions. I was pretty happy as lots of people are interested in the topics I presented on (BDD and DDD) which is a good thing. The more the merrier.

Of course finding the location was a bit of a problem for me. I punched in Red River College into my Garmin GPS we got with the rental (thank god for technology) and it instructed that my destination was a mere 5 minutes away from the hotel. Sounded about right (I knew I was close, wasn't sure how close). I got there and wandered around for a bit. It was a little off because the map on site didn't seem to resemble the school. I pulled it up on my BlackBerry and showed it to a few people, but nobody seemed to know what I was talking about (someone commented on the BlackBerry and its ability to show JPG files from the web, but that was about it). Finally I tracked down a security guy who told me I was on the wrong campus and wanted the downtown campus. Silly rabbit. Should have read the GPS before clicking "Go".

It was a good day, the sessions went well and I think the Winnipeg guys did a bang up job on their first (and not last) code camp. I got to draw the lucky winner who walked away with a new XBox 360 Arcade so that made my day.

I'll post the code and resources for the two sessions later here on my blog (and I think the Code Camp guys are setting up a resource page that we'll add info to as well).

I also did a quick two-minute interview with D'Arcy before my sessions. Brad Pitt I am not, but you can watch the painful video below by clicking on the big giant arrow that looks like my head.

-

Winnipeg here I come... what was I thinking?

I'm heading out tomorrow to the airport to spend the weekend in Winnipeg for the first annual Winnipeg Code Camp. I'm very honoured to be invited to speak there but there is that weather. Granted, Calgary isn't all that great these days. We went through a spell where it was -50 C with the windchill factor (yeah, that's "5-0"). Checking the weather site for Winterpeg, it's currently minus 34 and the high tomorrow is minus 21. Oh well.

I'm doing two sessions, one on Behaviour Driven Development and getting the "test" word out of your vocabulary (as well as some tricks with turning executable specs into end-user documentation). The other session is on Domain Driven Design. We'll do a brief overview of what DDD is and cover the patterns usually associated with it. Then we'll dig into validation techniques and keeping your domain clean (including bubbling things up to the UI layer).

Should be fun and it's my first time in Winnipeg for any amount of time. Not sure with the weather what we'll be doing but Vista and Mommy are in tow and we'll see if we can paint the town red while we're there (do the drinking laws in Winnipeg preclude 9 month olds I wonder?). See you there!

-

Registration for ALT.NET Open Spaces Seattle is alive!

Dave Laribee and team have done an excellent job of getting the next Seattle ALT.NET open space conference up and going. I'm pleased to say registration is now open (and will probably fill up by the time I finish writing this blog entry). So get going and register now!

We've hopefully made things easier by incorporating OpenID so all you need is an OpenID identity (I use myopenid.com and it's quite good but any old one will do, including Yahoo) with your name and email set up (other information is optional). Note: Please do not use openid.org as it doesn't seem to work. We're not sure why, or even whether the openid.org site is real or a phishing site, so stick with myopenid.com or another provider.

In addition to the OpenID integration, we've negotiated a discount for the hotel nearby so that'll be available to you. As always, the event is free but like I said, it's limited to 100 participants. First come, first served.

Get going and see you in Seattle!

-

Wakoopa - Social Networking kicked up a notch

I was bugging Scott Hanselman about his Ultimate Tools List on Friday. The guys at the office were talking and we thought it would be more valuable to find out not what Scott recommends, but what he's using (and how much). The last check he was trying to track some of it, but his latest results were "Visual Studio". Then I stumbled onto Wakoopa, which really looks interesting as you pull back the covers.

Wakoopa is yet-another-social-networking site but in disguise. Wakoopa tracks what kind of software or games you use, and lets you create your own software profile. It does this by having you run a small app in your tray that tracks what you're running. Fairly simple concept. It's when you start logging your work and see the results that, IMHO, it kicks social networking up a notch.

Rather than me going onto say Facebook and setting up my profile with my interests, what games I play, etc., Wakoopa sort of does this for you. Then, combined with the results of everyone else on the site, it shows you what's going on. I've been running it for a couple of hours, just ignoring that it's there. Then I went to my personal Wakoopa page and found a wealth of information, not only about me but well, everyone.

The main page contains a scrolling marquee of all the apps people are using. Of course, when you have information like this to mine you use it. So there's a list of the most used titles (including World of Warcraft in the top 10, figures) and some newcomers, popular titles that are just gaining momentum with enough people. Each title has its own dedicated page with some stats and a brief description. I was intrigued by a title called Flock as I had never heard of it and it turned up on the most used page so here's its page. In addition to the stats, there are user reviews and a list of who's using it.

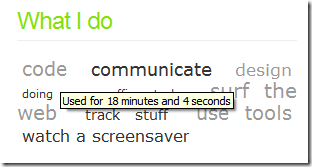

It even creates a tag cloud on your personal profile page about "what you do". Here's mine:

The mouse cursor isn't present in my screen grab, but I was hovering over the "code" tag, indicating I had been using various tools relating to coding for 18 minutes and 4 seconds. The other tags are interesting. Obviously communicate relates to Outlook (which I generally have running all the time). Surf the web are my IE and Firefox windows and the screensaver kicked in so it tracked that (that feature should probably be turned off, as everyone will be doing this). The usage page lists what you're actively using, but since the little widget is running on your desktop it has access to everything so items you have running in the background are separated out and listed here too.

The "kick it up a notch" aspect to all this is on your profile page, where it suggests software you might like (based on your own usage and popular titles others are using) and goes so far as to list "people I might like". Of course I'm not going to go out and make friends with DynaCharge1033 just because they use Notepad++ too, but it's a nice feature.

All in all, Wakoopa is an interesting twist on what you do and providing the ability to share that information. Here's hoping to see this little community grow and expand and maybe provide services for others to hook into. Then we'll get into some serious cross-pollination of social aspects across multiple contexts, which is something I think the whole social networking scene might be missing.

-

Scrumming with the Trac Project

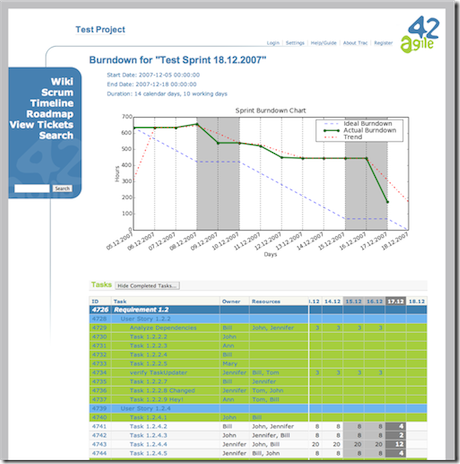

I got an email from ScrumMaster Andrea about an update I should do to my Scrum Tools Roundup post. Andrea drew my attention to the Agilo for Scrum tool, an open source add-on for the Trac Project. The Trac project is a wiki/issue tracking system (written in Python, my #2 favourite language next to C# these days) which has been around for ages and is quite successful in its own right. Agilo for Scrum is an add-on that sits on top of Trac and provides features to support the Scrum process.

I tried Agilo out this morning with a few projects. I always keep some data in Excel of some past projects with things like user stories, releases, iteration lengths, tasks, etc. that can be plugged into some tool for testing. It's my reference data for doing evaluations of tools like this.

The tool looks great. It has all the basics you need in a tool to support your use of the Scrum process (daily stand-up, burndown charts, etc.). A nice feature is the ability to link items together. This also has the capability of copying information from parent to child. Being able to do this, you can create some useful relationships with tasks relating to features, features relating to iterations or sprints, and all of these rolling up to releases (or whatever way you want to organize your projects). A key thing missing from tracking tools is the ability to link these items together easily. This facilitates creating a dashboard view of the project so you know at a glance where things are. Not something easily accomplished with an Excel spreadsheet. An added bonus with Agilo for Scrum is the ability to navigate back and forth between the relationships. Neat.

Something that I've come to realize over the years, it's not the tool that fixes the problem. Taking a more lean approach to things, if you need a tool to fix some problem you have a real problem on your hands. For example if you *need* a tool to manage your Scrum process, it might be an indicator that your Scrum process is too complicated. While I'm happy to see all of these tools out there evolving (and more new ones popping up), I'm a strong advocate of "fix the problem" rather than "get a tool" mentality. YMMV.

One note I wanted to mention. Being a blogger you make posts of course (well, duh!). These are sometimes series, or popular individual posts, but they come back. 6 or 12 months later that original post might need some update love. The cool thing is that you can go back, look at what you've done and apply some new knowledge to it, creating something interesting for everyone out there. I have a large backlog in my blog queue of just posts I've written that need updating like this, this, and this. Nothing like keeping yourself busy with your own work eh?

Anywho, check out the Agilo for Scrum tool here if you have Trac and if you're looking for a good bug tracking tool, you can't go wrong with Trac so check it out here.

-

Fun and Adventures with VMware

My most favorite feature of VMware Workstation today. The ability to right-click on a .vmdk file (VMware virtual disk file) and create a mapping to the inside of the disk contents to a new drive letter in your system. Pure goodness for pulling out files from an image when the image might not boot or you don't want to start it up.

My least favorite feature of VMware Workstation today. Resizing a parent disk when you have linked clones causes all of the linked clones to be invalidated. I needed more space in my guest OS (apparently 8GB just doesn't cut it anymore with VS2008 and Windows Server 2008) so I used the vmware-vdiskmanager.exe console tool to expand the disk. Then found out all my linked clones were now invalid. Guess how many VMs I'm recreating this weekend?

Sometimes I feel like a nut...

-

SharePoint 2007 Rant #1

A new year, a new series as I get my WSS site online and finish up some crusty old 2007 SharePoint projects.

Dear Microsoft,

Why in the name of all that is holy did you make the Slide Library a MOSS only feature? I still fail to see what "enterprise features" a modified document library that has some extra functionality for slide shows needs from MOSS. Sigh.

P.S. Deleting all the content in a slide library when you deactivate the feature was a nice touch too, thanks for that.

-

Spinning SharePoint Plates on RunAsRadio.com

The RunAsRadio.com guys invited me to chat with them for a show yesterday about SharePoint. In it Richard Campbell, Greg Hughes, and I talk about SharePoint deployment, management, logging chains, tools, DotNetNuke, taxonomies, concealed lizards, information architecture, security, and spinning plates. All in 30 minutes.

Greg and I go way back in SharePoint history with our experience, struggling (and surviving) with the early incarnations (Microsoft's "digital dashboard" technology from 2000) and Richard continues to think of SharePoint deployments as a "virus" (we'll cut him some slack as he's Canadian and it's snowing in Vancouver).

It was a fun, relaxed show that's now online in all the flavours they usually offer (MP3, WMA, etc.) with full downloads or torrents (which is frickin' awesome if you ask me). You can check out RunAsRadio.com here and my show, show #43, here. I think it's awesome that we're talking about cool stuff one day and it gets published on the site the next. That's efficiency from the PWOP Productions team! PDF transcript should follow in a couple of weeks.

-

Pex - A Tool in Search of an Identity

A cohort turned my attention to something from Microsoft Research called "Pex: Dynamic Analysis and Test Generation for .NET".

I only took a quick glance at it (there don't seem to be any downloads available, just whitepapers and a screencast), but from what I see I already don’t like it.

First off, I have an issue with a statement almost right off the bat “By automatically generating unit tests, it helps to find bugs early”. First, I don’t believe “automatically generating unit tests” is of very much value. TDD (and more recently BDD) is about building a system that meets a business need with a solution and driving that solution out with executable specifications that can be understood by anyone. With the release of VS2005 Microsoft gave us “automatically generated unit tests” by pointing it at code and creating a bunch of crap tests that more or less really only tested the .NET framework (make sure string x is n long, lather, rinse, repeat). Also I'm not sure how automatically generating unit tests can find bugs early (which is what Pex claims). That seems to be a mystical conjuration I missed out on.

Pex claims to be taking test driven development to the next level. I don't think it even knows what level it itself is at yet.

Pex feels to me like it's trying to be like an automated FxCop (and we all know what that might be like). Looking at the walkthrough you still write a test (now called a "Parameterized Unit Test"). This smells to me like a RowTest in MbUnit terms but doesn't look like one and is used to generate more tests (as partial classes of your own test class, it seems). Then you run Pex against it from inside the IDE. Here's where it gets a little fuzzy. Pex generates test cases and reports from them, with suggestions as to how to fix the failing code. For example in the walkthrough the test case suggestion is to validate the length of a string before trying to extract a substring. What is a little obscure is what exactly that suggested snippet is for, the test case or the code you're testing?

"High Code Coverage". The test cases generated by Pex "give high code coverage". Again a monkey's paw here. High code coverage means very little in the real world. Just because you have some automated "thing" hitting your code, doesn't mean it's right. Or that your code is really doing what you intended it to. I can have 100% code coverage on a lot of crap code and still have a buggy system. At best you'll catch stupid programmer errors like bounds checking and null object references. While this is a good thing, just writing a little code you can accomplish the same task a lot quicker than writing a specific unit test to generate other tests for you. Maybe it's grunt work and silly unit test code to write and maybe that's the benefit of Pex.

"Integrates with Unit Testing Frameworks". This is another red herring. What it really means is "Integrates with VSTS Unit Testing Framework". Nowhere in the documentation or site can I see it integration with MbUnit or NUnit. It does however mention it can run with MbUnit or NUnit so I assume something can be done here (maybe through template generation), but little substance is available right now.

Then there's the mock objects, [PexMock]. Again, no meat here as these are early looks but Pex supports mocking interfaces and virtual methods. Yes, in addition to Microsoft building its own NUnit clone (MSTest), NDoc clone (SandCastle), Castle.Windsor (DIAB), and NAnt (MSBuild), you can now get your very own Rhino clone in the form of PexMock! It looks a little more complex to set up and use than Rhino, but then who says Microsoft tools are simple? If it's simple to use, it can't be powerful, can it?

I watched the screencast which walks through the chunker demo (apparently the only demo code they have as everything is based around it). It starts innocently enough with someone writing a test, decorated with the [PexTest] attribute. Once enough code is written to make it compile (red) you "Pex It" from the context menu. This generates some unit tests, somehow giving 73% coverage and failing (because at this point the Chunker class returns null). Pex suggests how to fix your business code along with suggestions for modifying the test.

From the error list you can jump to the generated test code (there's also an option to "Fix it" which we'll get to in a sec). The developer then implements the logic code to try to fix the test. By selecting the "Fix it" option, Pex finds the place where the null reference might occur (in the constructor) and injects code into your logic (by surrounding it with "// [Pex]" tags, ugh, horror flashbacks of Rational Rose come to my mind).

The problem with the tool is that generated tests come out like "DomainObjectValueTypeOperation_70306_211024_0_01" and "DomainObjectValueTypeOperation_70306_211024_0_02". One of the values of TDD and unit tests is for someone to look at a set of unit tests and know how the domain is supposed to behave. I know for example exactly what a spec or test called "Should_update_customer_balance_when_adding_a_new_item_to_an_existing_order" does. I don't have to crack open my Customer.cs, Order.cs and CustomerOrder.cs files to see what's going on. "CustomerStringInt32_1234_102965_0_01" means nothing to me. Okay, these are generated tests so why should I care?

This probably gets to the crux of what Pex is doing. It's generating tests for code coverage. Nothing more. I can't tell what my Pex system does from test names or maybe even looking at the tests themselves. Maybe there's an option in Pex to template the naming, but even that's only going to make it a little more readable and still far from comprehensible to a new developer coming onto the project. Maybe I'm wrong, but if all Pex is doing is covering my butt for having bad developers, then I would rather train my developers better (like checking null references) than have them rely on a tool to do their job for them.
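
To put the coverage point in code, here's a tiny sketch of a method that gets 100% line coverage from a passing check and is still wrong. Pricing and CoverageDemo are hypothetical names made up for this example, and the bug is deliberate.

```csharp
using System;

public static class Pricing
{
    // Deliberate bug: treats the percentage as a flat amount
    // instead of taking that percentage of the price.
    public static decimal ApplyDiscount(decimal price, decimal percent)
    {
        return price - percent;
    }
}

public static class CoverageDemo
{
    // Executes every line of ApplyDiscount, so coverage is 100%,
    // and it passes because a 0% discount hides the bug.
    public static bool CoverageOnlyCheckPasses()
    {
        return Pricing.ApplyDiscount(100m, 0m) == 100m;
    }

    // A behaviour-focused check: 10% off 200 should be 180.
    // The buggy implementation returns 190, so this one fails.
    public static bool BehaviourCheckPasses()
    {
        return Pricing.ApplyDiscount(200m, 10m) == 180m;
    }
}
```

Both checks hit exactly the same line of code; only the one written around the expected behaviour notices that the answer is wrong.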

A lot of smart dudes (much smarter than me) have worked on this and obviously Microsoft is putting a lot of effort into it. So who am I to say this is good, bad, or ugly? I suppose time will tell as it gets released and we see what we can really do with it. These are casual observations from a casual developer who really doesn't have any clout in the grand scheme of things. For me, I'm going to continue to write executable specs in a more readable BDD form that helps me understand the problems I'm trying to solve and not focus on how much code coverage I get from string checking, but YMMV.

-

The Return of the Plumbers - Episode 12

Plumbers @ Work is a podcast I do with NHibernate Mafia leader James Kovacs and John "The Pimp" Bristowe, Microsoft Canada Developer Advisor and 5 time winner of the Buckeye Newshawk award (hey, I need a nickname!). We blabber about goings on in the .NET community and whatever else is out there to complain about.

We're back after a 6 month European tour with the Spice Girls with our new lean, mean ready-in-30-minutes format for you to iPod to your heart's content. In our latest episode we stumble over:

- Heroes Happen Here Launch

- SQL Server 2008

- Visual Studio 2008

- Extension methods

- JavaScript debugging and Intellisense

- Lambdas, LINQ, and PLINQ

- DevTeach past and future

- ALT.NET Open Space Conference coming to Canada

- ASP.NET MVC Framework

- MVCContrib Project on CodePlex

- 360Voice.com

You can download the podcast directly here in MP3 format or visit our site here. We're aiming to produce the 30 minute version of our show every 2 weeks now. Come back later to see how that goes...